Kubernetes Service Concepts: Difference between revisions

| (160 intermediate revisions by the same user not shown) | |||

| Line 1: | Line 1: | ||

=External= | =External= | ||

* | * https://kubernetes.io/docs/concepts/services-networking/service/ | ||

* https://www.ibm.com/support/knowledgecenter/en/SSBS6K_3.1.1/manage_network/kubernetes_types.html | |||

=Internal= | =Internal= | ||

| Line 15: | Line 16: | ||

A service is a Kubernetes resource that provides stable network access to a set of ephemeral [[Kubernetes_Pod_and_Container_Concepts|pods]]. The need for such a resource is explained in the [[#Need_for_Services|Need for Services]] section. The simplest kind of [[#Service|service]] provides a stable [[Kubernetes_Networking_Concepts#Cluster_IP_Address|Cluster IP address]]. More complex services, such as [[#NodePort_Service|NodePort]] and [[#LoadBalancer_Service|LoadBalancer]] services, use ClusterIP services and expand their functionality. The services and the pods they are exposed [[Kubernetes_Service_Concepts#Associating_a_Service_with_Pods| | A service is a Kubernetes resource that provides stable network access to a set of ephemeral [[Kubernetes_Pod_and_Container_Concepts|pods]]. The need for such a resource is explained in the [[#Need_for_Services|Need for Services]] section. The simplest kind of [[#Service|service]] provides a stable [[Kubernetes_Networking_Concepts#Cluster_IP_Address|Cluster IP address]]. More complex services, such as [[#NodePort_Service|NodePort]] and [[#LoadBalancer_Service|LoadBalancer]] services, use ClusterIP services and expand their functionality. The services and the pods they are exposed [[Kubernetes_Service_Concepts#Associating_a_Service_with_Pods|form a dynamic association labels and selectors]]. Once the logical association is declared, the Kubernetes cluster monitors the state of the pods and includes or excludes pods from the pod set exposed by the service. Other Kubernetes API resources, such as [[#Endpoints|Endpoints]], are involved in this process. | ||

=Need for Services= | =Need for Services= | ||

Most [[Kubernetes_Pod_and_Container_Concepts|pods]] exist to serve requests sent over the network, so the pods' clients need to know how to open a network connection into the pod | Most [[Kubernetes_Pod_and_Container_Concepts|pods]] exist to serve requests sent over the network, so the pods' clients need to know how to open a network connection into the pod: they need an IP address and a port. This is true for clients external to the Kubernetes cluster, and also for pods running inside the cluster, which act as clients for other pods. | ||

However, the pods are '''ephemeral''', they come and go. If a pod fails, it is not resurrected, but its failure is detected as result of lack of response from the liveness probe embedded within it and another pod is usually scheduled as replacement. The pod does not need to fail to be removed, the removal can be the result of a normal scale-down operation. In consequence, the IP address of an individual pod cannot be relied on. | However, as described in the [[Kubernetes_Pod_and_Container_Concepts#Lifecycle|Pod Lifecycle]] section, the pods are '''ephemeral''', they come and go. If a pod fails, it is not resurrected, but its failure is detected as result of lack of response from the liveness probe embedded within it and another pod is usually scheduled as replacement. The pod does not need to fail to be removed, the removal can be the result of a normal scale-down operation. In consequence, the IP address of an individual pod cannot be relied on. | ||

First off, the IP address is '''dynamically allocated''' to the pod at the time of pod initialization, after the pod has been scheduled to a node and before the pod is started, and secondly, the IP address becomes obsolete the moment the pod disappears. As such, it is practically very difficult, if not impossible for application's clients running outside the Kubernetes cluster, or even for other pods acting as clients to keep track of ephemeral pod IP addresses. | First off, the IP address is '''dynamically allocated''' to the pod at the time of pod initialization, after the pod has been scheduled to a node and before the pod is started, and secondly, the IP address becomes obsolete the moment the pod disappears. As such, it is practically very difficult, if not impossible for application's clients running outside the Kubernetes cluster, or even for other pods acting as clients to keep track of ephemeral pod IP addresses. | ||

| Line 27: | Line 28: | ||

Multiple pods may be '''providing the same service''', and each of them has its own IP address. The client must not be put in the position to have to chose a specific IP address and pod, they should not even need to know there are multiple pods providing the service. | Multiple pods may be '''providing the same service''', and each of them has its own IP address. The client must not be put in the position to have to chose a specific IP address and pod, they should not even need to know there are multiple pods providing the service. | ||

The solution to all these problems | The solution to all these problems consists of maintaining an instance of a specialized resource, the [[#Service|service]], for each of such group of pods. The service instance exposes a stable IP address and set of ports for the entire life of the service instance. | ||

=Service Manifest= | =Service Manifest= | ||

{{Internal|Kubernetes Service Manifest|Service Manifest}} | {{Internal|Kubernetes Service Manifest|Service Manifest}} | ||

=<span id='Service'></span>Service (ClusterIP Service)= | =<span id='Service'></span>Service (ClusterIP Service)= | ||

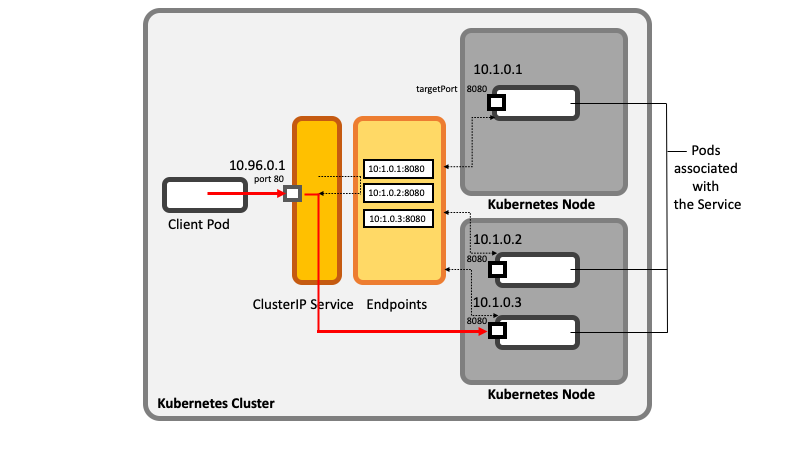

In its simplest form, a service is a [[Kubernetes API Resources Concepts#Service|Kubernetes API resource]] providing a single, stable [[Kubernetes_Networking_Concepts#Cluster_IP_Address|Cluster IP address]] and a set of ports that serve as point of entry to a group of pods providing the same service. A service that does not specify a type is by default a "ClusterIP" service. | In its simplest form, a service is a [[Kubernetes API Resources Concepts#Service|Kubernetes API resource]] providing a single, stable [[Kubernetes_Networking_Concepts#Cluster_IP_Address|Cluster IP address]] and a set of ports that serve as point of entry to a group of pods providing the same service. A service that does not specify a type is by default a "ClusterIP" service. The service provides connection forwarding and load balancing: each connection to the service is forwarded to a '''randomly selected''' backing pod. The service also provides discovery facilities, advertising itself via DNS and environment variables, as described in the [[#Discovering_ClusterIP_Services_within_the_Cluster|Discovering ClusterIP Services within the Cluster]] section. | ||

The IP address | The IP address stays unchanged for the entire lifetime of the service. A client opens a network connection to the IP address and port, and the service load-balances the connection to one of the pods associated with it. Individual pods exposed by the service may come and go, and the service makes this transparent to the clients, as explained in the [[Kubernetes_Service_Concepts#Associating_a_Service_with_Pods|Associating a Service with Pods]] section. | ||

Internal [[Kubernetes_Control_Plane_and_Data_Plane_Concepts#Cluster|Kubernetes cluster]] clients, such as other pods, can use the service's IP address and ports to connect to the pods exposed by the service. This type of service is know as a <span id='ClusterIP'></span><span id='ClusterIP_Service'></span>'''ClusterIP service'''. Only internal clients can use a ClusterIP service, because the Cluster IP address is only routable from inside the Kubernetes cluster. Designating a service as a ClusterIP service by specifying [[Kubernetes_Service_Manifest#type|spec.type]]=ClusterIP in the service's manifest is optional. | Internal [[Kubernetes_Control_Plane_and_Data_Plane_Concepts#Cluster|Kubernetes cluster]] clients, such as other pods, can use the service's IP address and ports to connect to the pods exposed by the service. This type of service is know as a <span id='ClusterIP'></span><span id='ClusterIP_Service'></span>'''ClusterIP service'''. Only internal clients can use a ClusterIP service, because the Cluster IP address is only routable from inside the Kubernetes cluster. Designating a service as a ClusterIP service by specifying <code>[[Kubernetes_Service_Manifest#type|spec.type]]=ClusterIP</code> in the service's manifest is optional. | ||

The pods may be scheduled on different physical [[Kubernetes_Control_Plane_and_Data_Plane_Concepts#Node|Kubernetes nodes]], but they are accessible to the service and each other via the virtual [[Kubernetes_Networking_Concepts#Pod_Network|pod network]]. | The pods may be scheduled on different physical [[Kubernetes_Control_Plane_and_Data_Plane_Concepts#Node|Kubernetes nodes]], but they are accessible to the service and each other via the virtual [[Kubernetes_Networking_Concepts#Pod_Network|pod network]]. | ||

<br> | |||

:[[File:Learning_Kubernetes_KubernetesServices_ClusterIPService.png]] | :[[File:Learning_Kubernetes_KubernetesServices_ClusterIPService.png]] | ||

<br> | |||

The Cluster IP and the service's external port is reported by [[Kubernetes_Service_Operations#List_Services|kubectl get svc]]: | The Cluster IP and the service's external port is reported by [[Kubernetes_Service_Operations#List_Services|kubectl get svc]]: | ||

| Line 48: | Line 51: | ||

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 3h26m | kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 3h26m | ||

</syntaxhighlight> | </syntaxhighlight> | ||

Services operate at the TCP/UDP layer (level 4) and in consequence cannot provide application-layer routing. If such routing is needed, and [[#Ingress|Ingress]] must be used. | |||

==Playground== | |||

{{External|https://github.com/ovidiuf/playground/tree/master/kubernetes/services/ClusterIP}} | |||

==<span id='Service_Port'></span>Service Port(s)== | ==<span id='Service_Port'></span>Service Port(s)== | ||

A service port designates a port exposed by the service externally. This is a port the service will be available on, and the value will be used by the external clients connecting to the service. It is declared with [[Kubernetes_Service_Manifest#port|.spec.ports[*].port]] in the service manifest. | A service port designates a port exposed by the service externally. This is a port the service will be available on, and the value will be used by the external clients connecting to the service. It is declared with <code>[[Kubernetes_Service_Manifest#port|.spec.ports[*].port]]</code> in the service manifest. | ||

A service may expose more than one port, and the requests arrived on any of these ports will be forwarded to the corresponding configured [[#Service_Target_Port|target ports]]. | A service may expose more than one port, and the requests arrived on any of these ports will be forwarded to the corresponding configured [[#Service_Target_Port|target ports]]. | ||

<syntaxhighlight lang='yaml'> | <syntaxhighlight lang='yaml'> | ||

kind: Service | kind: Service | ||

... | |||

spec: | spec: | ||

ports: | ports: | ||

| Line 72: | Line 80: | ||

===Port Name=== | ===Port Name=== | ||

When more than one port is specified for a service, a name must be specified for each port with [[Kubernetes_Service_Manifest#name|.spec.ports[*].name]], as shown in the above example. If there is just one port per service, the name element is optional. | When more than one port is specified for a service, a name must be specified for each port with <code>[[Kubernetes_Service_Manifest#name|.spec.ports[*].name]]</code>, as shown in the above example. If there is just one port per service, the name element is optional. | ||

==Service Target Port== | ==Service Target Port== | ||

A target port represents the port exposed by a container from the pods associated with the service, as declared by the [[Kubernetes_Pod_Manifest#ports|.spec.containers[*].ports]] array elements of the pod manifest. The service will forward requests over connections opened with this port as target. It is declared with [[Kubernetes_Service_Manifest#targetPort|.spec.ports[*].targetPort]] in the service manifest. | A target port represents the port exposed by a container from the pods associated with the service, as declared by the <code>[[Kubernetes_Pod_Manifest#ports|.spec.containers[*].ports]]</code> array elements of the pod manifest. The service will forward requests over connections opened with this port as target. It is declared with <code>[[Kubernetes_Service_Manifest#targetPort|.spec.ports[*].targetPort]]</code> in the service manifest. | ||

In most cases, an integral port value is used as [[Kubernetes_Service_Manifest#targetPort|.spec.ports[*].targetPort]] value, but it is also it is possible to name ports in the pod's manifest using [[Kubernetes_Pod_Manifest#port_name |.spec.containers[*].ports.name]] elements, and use those names as value for [[Kubernetes_Service_Manifest#targetPort|targetPort]] in the service definition: | In most cases, an integral port value is used as <code>[[Kubernetes_Service_Manifest#targetPort|.spec.ports[*].targetPort]]</code> value, but it is also it is possible to name ports in the pod's manifest using <code>[[Kubernetes_Pod_Manifest#port_name |.spec.containers[*].ports.name]]</code> elements, and use those names as value for <code>[[Kubernetes_Service_Manifest#targetPort|targetPort]]</code> in the service definition: | ||

<syntaxhighlight lang='yaml'> | <syntaxhighlight lang='yaml'> | ||

kind: Service | kind: Service | ||

... | |||

spec: | spec: | ||

ports: | ports: | ||

| Line 88: | Line 96: | ||

</syntaxhighlight> | </syntaxhighlight> | ||

The benefit of using names instead of port numbers is that the port numbers can be changed by the pods without changing the service spec. | The benefit of using names instead of port numbers is that the port numbers can be changed by the pods without changing the service spec. | ||

==Discovering ClusterIP Services | |||

==<span id='Discovering_ClusterIP_Services_within_the_Cluster'></span><span id='Discovering_ClusterIP_Services_inside_the_Cluster'></span>Service Discovery - Discovering ClusterIP Services within the Cluster== | |||

A Kubernetes cluster automatically advertises its ClusterIP services throughout the cluster by publishing their name/IP address association as they come online. The potential clients can use two mechanism to learn the cluster IP address and ports associated with a specific service: [[#Environment_Variables|environment variables]] and [[#DNS|DNS]]. | A Kubernetes cluster automatically advertises its ClusterIP services throughout the cluster by publishing their name/IP address association as they come online. The potential clients can use two mechanism to learn the cluster IP address and ports associated with a specific service: [[#Environment_Variables|environment variables]] and [[#DNS|DNS]]. | ||

===Environment Variables=== | ===Environment Variables=== | ||

| Line 96: | Line 105: | ||

⚠️ If a service comes on-line after a potential client pod has been deployed, the potential client pod's environment will not be updated. | ⚠️ If a service comes on-line after a potential client pod has been deployed, the potential client pod's environment will not be updated. | ||

The IP address of a service will be exposed as <SERVICE-NAME>_SERVICE_HOST and the port will be exposed as <SERVICE-NAME>_SERVICE_PORT, where all letters in the service name are uppercased and the dashes in the name are converted to underscores (some-service → SOME_SERVICE). If more than one port is declared for the service, the mandatory "name" element is uppercased and used to assemble the environment variable carrying the port value, following the pattern: | The IP address of a service will be exposed as <code><SERVICE-NAME>_SERVICE_HOST</code> and the port will be exposed as <code><SERVICE-NAME>_SERVICE_PORT</code>, where all letters in the service name are uppercased and the dashes in the name are converted to underscores ("some-service" → "SOME_SERVICE"). If more than one port is declared for the service, the mandatory "name" element is uppercased and used to assemble the environment variable carrying the port value, following the pattern: | ||

<SERVICE-NAME>_SERVICE_PORT_<PORT-NAME>: | <code><SERVICE-NAME>_SERVICE_PORT_<PORT-NAME></code>: | ||

<syntaxhighlight lang='text'> | <syntaxhighlight lang='text'> | ||

| Line 112: | Line 121: | ||

<service-name>.<namespace>.svc.cluster.local | <service-name>.<namespace>.svc.cluster.local | ||

</syntaxhighlight> | </syntaxhighlight> | ||

where <namespace> is the namespace the service was defined in and [[Kubernetes_DNS_Concepts#svc.cluster.local|svc.cluster.local]] is a configurable suffix used in all local cluster service names. | where <code><namespace></code> is the namespace the service was defined in and <code>[[Kubernetes_DNS_Concepts#svc.cluster.local|svc.cluster.local]]</code> is a configurable suffix used in all local cluster service names. <code>svc.cluster.local</code> can be omitted, because all pods are configured to list <code>svc.cluster.local</code> in their [[Kubernetes_DNS_Concepts#Name_Resolution_inside_a_Pod|<code>/etc/resolv.conf</code> search clause]]. If the client pod and the service are in the same namespace, the namespace name from the FQDN can also be omitted. | ||

⚠️ Unlike | ⚠️ Unlike in the case of [[#Environment_Variables|environment variables]], the service must still know the service port number, as that information is not disseminated with DNS. If the client pod and the service are deployed in the same namespace, the client pod can get the port number from the corresponding [[#Environment_Variables|environment variable]]. | ||

More details about DNS support in a Kubernetes cluster are available in: {{Internal|Kubernetes DNS Concepts|Kubernetes DNS Concepts}} | More details about DNS support in a Kubernetes cluster are available in: {{Internal|Kubernetes DNS Concepts|Kubernetes DNS Concepts}} | ||

==Session Affinity== | ==Session Affinity== | ||

With no additional configuration, successive requests to a service, even if they are made by the same client, will be forwarded to different pods, randomly. By default, there is no session affinity, and this corresponds to a [[Kubernetes_Service_Manifest#sessionAffinity|.spec.sessionAffinity]] configuration of "None". | With no additional configuration, successive requests to a service, even if they are made by the same client, will be forwarded to different pods, randomly. By default, there is no session affinity, and this corresponds to a <code>[[Kubernetes_Service_Manifest#sessionAffinity|.spec.sessionAffinity]]</code> configuration of "None". | ||

However, even if session affinity is set to "None" but the client uses keep-alive connections, successive requests over the same connection will be sent to the same pod. This is because the services work at connection level, so when a connection to a service is first opened, it extends all the way to the target pod, and the same pod will be used until the connection is closed. | |||

The only kind of session affinity that can be configured is "ClientIP": all requests made by a certain client, identified by its IP, are proxied to the same pod every time. | The only kind of session affinity that can be configured is "ClientIP": all requests made by a certain client, identified by its IP, are proxied to the same pod every time. | ||

Services do not provide cookie-based session affinity because they operate at transport level (TCP/UDP) and do not inspect the payload carried by the packets. To | Services do not provide cookie-based session affinity because they operate at transport level (TCP/UDP) and do not inspect the payload carried by the packets. To enable cookie-based session affinity, the proxy must be capable of understanding HTTP, which is not the case for services. | ||

==Kubernetes API Server Service== | ==Kubernetes API Server Service== | ||

| Line 135: | Line 146: | ||

=Associating a Service with Pods= | =Associating a Service with Pods= | ||

The pods are loosely associated with the service exposing them: a ClusterIP service identifies the pods to forward request to by declaring a [[Kubernetes_Service_Manifest#selector|selector]]: | |||

<syntaxhighlight lang='yaml'> | <syntaxhighlight lang='yaml'> | ||

| Line 147: | Line 158: | ||

</syntaxhighlight> | </syntaxhighlight> | ||

The pods that intend to | The pods that intend to serve requests proxied by the service must declare '''all''' key/value pairs listed by the service selector as metadata labels to be considered: | ||

<syntaxhighlight lang='yaml'> | |||

kind: Pod | |||

metadata: | |||

... | |||

labels: | |||

key1: value1 | |||

key2: value2 | |||

... | |||

</syntaxhighlight> | |||

The pod may declare extra labels in additions with those specified by the service selector, and those will not interfere with the service selection process. The label selector needs to match some of the labels declared by the pod, but for a pod to match a service, in must declare all of the labels the service is looking for. If the number of pods increases as result of scaling up the deployment, no service modification is required - the service dynamically identifies the new pods and starts to load-balance requests to them, as soon as they are [[#Readiness_Probe|ready to serve requests]]. | |||

The list of active pods associated with the service is maintained by [[#Endpoints|Endpoints]], which is another [[Kubernetes API Resources Concepts#Endpoints|Kubernetes API resource]]. All pods capable of handling requests for the service are represented by entries in the [[#Endpoints|Endpoints]] instance associated with the service. | |||

==Endpoints== | ==Endpoints== | ||

Although the pod selector is defined in the service spec, it is not used directly when redirecting incoming connections. Instead, the selector is used to build a list of container endpoints (IP:port pairs), which is then stored in the associated [[Kubernetes API Resources Concepts#Endpoints|Kubernetes API]] Endpoints resource. The Endpoints instance has the same name as the service it is associated with. | |||

When a client connects to the ClusterIP service, the service randomly selects one of the endpoints and redirects the incoming connection to the containerized server listening at that location. The list is dynamic, as new pods may be deployed, or suddenly become unavailable. The Endpoints instances are updated by the [[#Endpoints_Controller|endpoints controller]]. Various factors are taken into consideration: label match, pod availability, the state of each of the pod's container [[#Readiness_Probe|readiness probe]], etc. There is a one-to-one association between a service and its Endpoints instance, but the Endpoints is a separate resource and not an attribute of the service. | |||

<br> | |||

::[[File:Learning_Kubernetes_KubernetesServices_Endpoints.png]] | |||

<br> | |||

An endpoint stays in the list as long as its corresponding readiness probe succeeds. If a readiness probe fails, the corresponding endpoint, and the endpoints for all containers from the same pod are removed. The relationship between Endpoints, containers, pods and readiness probes is explained in: {{Internal|Kubernetes_Container_Probes#Readiness_Probe_Operations|Readiness Probe Operations}} | |||

The endpoint is also removed if the pod is deleted. | |||

An Endpoints instance can be inspected with <code>kubectl get endpoints <name></code>: | |||

<syntaxhighlight lang='text'> | <syntaxhighlight lang='text'> | ||

| Line 181: | Line 218: | ||

protocol: TCP | protocol: TCP | ||

</syntaxhighlight> | </syntaxhighlight> | ||

If the service is created without a pod selector, the associated Endpoints won't even be created. However, it can be created, configured and updated manually. To create a service with manually managed endpoints, you need to create both the service and the Endpoints resource. They are connected via name: the name of the service and of the Endpoints instance must be the same. | |||

===Endpoints Controller=== | |||

The endpoints controller is part of the [[Kubernetes Control Plane and Data Plane Concepts#Controller_Manager|controller manager]]. | |||

==Readiness Probe== | ==Readiness Probe== | ||

By default, a container endpoint, which consists of the IP address and port pair, is added to the service's [[#Endpoints|Endpoints]] list only if its parent container is ready, as reflected by the result of all its containers' readiness probe. This behavior can be changed via configuration, as described in [[Kubernetes_Container_Probes#Container_and_Pod_Readiness_Check|Container and Pod Readiness Check]]. The probes are executed periodically and the container endpoint is taken out of the list if any of the probe fails - and possibly re-added if the probe starts succeeding again. Usually, there's just one container per pod, so "ready pod" and "ready container" are equivalent. More details about readiness probes: | |||

{{Internal|Kubernetes_Container_Probes#Container_and_Pod_Readiness_Check|Container and Pod Readiness Check}} | |||

=<span id='ExternalName'></span>ExternalName Service= | =<span id='Connecting_to_External_Services_from_inside_the_Cluster'></span><span id='ExternalName'></span><span id='ExternalName_Service'></span>Connecting to External Services from within the Cluster (ExternalName Service)= | ||

An ExternalName service is a service that proxies to an external service instead of a set of pods. | An ExternalName service is a service that proxies to an external service instead of a set of pods. | ||

<syntaxhighlight lang='yaml'> | <syntaxhighlight lang='yaml'> | ||

| Line 192: | Line 236: | ||

name: 'external-service' | name: 'external-service' | ||

spec: | spec: | ||

type: | type: ExternalName | ||

externalName: someservice.someserver.com | externalName: someservice.someserver.com | ||

ports: | ports: | ||

- port: 80 | - port: 80 | ||

</syntaxhighlight> | </syntaxhighlight> | ||

ExternalName services are implemented in DNS - a [[DNS_Concepts#CNAME_.28Alias.29|CNAME]] DNS record is created for the service. Therefore, clients connecting to the service will connect to the external service directly, bypassing the service proxy completely. For this reason, these type of service do not even get a cluster IP. | |||

The benefit of using an ExternalService is that it allows to modify the service definition and point to a different service any time later by only changing the <code>externalName</code>, or by changing the type back to ClusterIP and creating an Endpoints object for the service - either manually or via a selector. | The benefit of using an ExternalService is that it allows to modify the service definition and point to a different service any time later by only changing the <code>externalName</code>, or by changing the type back to ClusterIP and creating an Endpoints object for the service - either manually or via a selector. | ||

| Line 203: | Line 247: | ||

=Exposing Services outside the Cluster= | =Exposing Services outside the Cluster= | ||

==<span id='NodePort'></span>NodePort Service== | ==<span id='NodePort'></span>NodePort Service== | ||

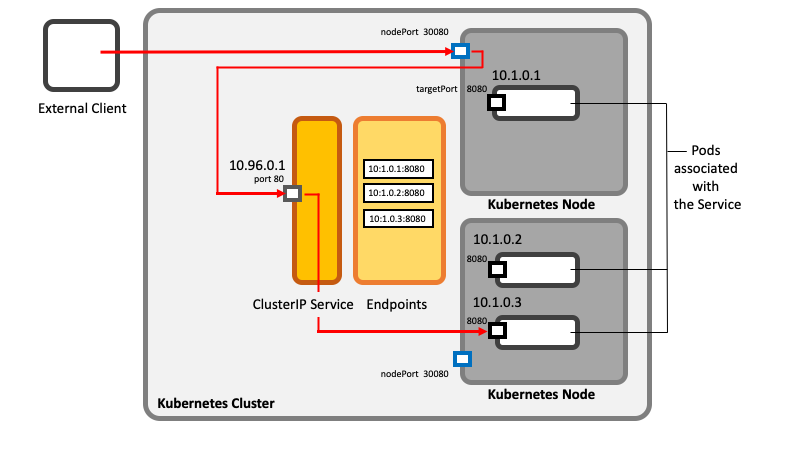

A NodePort service is a type of service that builds upon [[#Service|ClusterIP service]] functionality and provides an additional feature: it allows clients external to the [[Kubernetes_Control_Plane_and_Data_Plane_Concepts#Cluster|Kubernetes cluster]] to connect to the service's pods. The feature is implemented by having each [[Kubernetes_Control_Plane_and_Data_Plane_Concepts#Node|Kubernetes node]] open a port on the node itself, hence the name, and forwarding incoming node port connections to the underlying ClusterIP service. All features of the underlying ClusterIP service are also available on the NodePort service: internal clients can use the NodePort service as a ClusterIP service. | |||

The port reserved by a NodePort service has the same value on all nodes. | |||

<br> | |||

::[[File:Learning_Kubernetes_KubernetesServices_NodePort.png]] | |||

<br> | |||

A NodePort service can be created posting a manifest similar to: | |||

<syntaxhighlight lang='yaml'> | |||

apiVersion: v1 | |||

kind: Service | |||

metadata: | |||

name: example-nodeport | |||

spec: | |||

type: NodePort | |||

ports: | |||

- port: 80 | |||

targetPort: 8080 | |||

nodePort: 30080 | |||

selector: | |||

function: serves-http | |||

</syntaxhighlight> | |||

What makes the manifest different form a ClusterIP service manifest are the <code>[[Kubernetes_Service_Manifest#type|type]]</code> (<code>NodePort</code>) and the optional <code>[[Kubernetes_Service_Manifest#nodePort|nodePort]]</code> configuration elements. Everything else is the same. | |||

The valid range for node ports is 30000-32767. It is not mandatory to provide a value for <code>nodePort</code>. If not specified, a random port value will be chosen. | |||

More details about the service manifest are available in: {{Internal|Kubernetes_Service_Manifest#Example|Service Manifest}} | |||

Inspection of a NodePort service reveals that it also exposes a cluster IP address, as a ClusterIP service. The PORT(S) column reports both the internal port of the cluster IP (80) and the node port (30080). The service is available inside the cluster at 10.107.165.237:80 and externally, on any node, at <node-ip>:30080. | |||

<syntaxhighlight lang='text'> | |||

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE | |||

example NodePort 10.107.165.237 <none> 80:30080/TCP 6s | |||

</syntaxhighlight> | |||

===Playground=== | |||

{{External|https://github.com/ovidiuf/playground/tree/master/kubernetes/services/NodePort}} | |||

===Node/Pod Relationship=== | |||

Without additional configuration, a connection received on a particular node may or may not be forwarded to a pod deployed on that node, because the connection is forwarded to the ClusterIP service first, and the ClusterIP service chooses a pod randomly. | |||

An extra network hop may not be desirable, and it can be prevented by configuring the service to redirect external traffic only to pods running on the node that receives the connection. This is done by setting <code>[[Kubernetes_Service_Manifest#externalTrafficPolicy|externalTrafficPolicy]]</code> to <code>Local</code> in the service specification section. If <code>[[Kubernetes_Service_Manifest#externalTrafficPolicy|externalTrafficPolicy]]</code> is configured to <code>Local</code> and a connection is established with the service's node port, the service proxy will choose a locally running pod. ⚠️ If no local pod exists, the connection will hang, so only nodes that have qualified pods deploying on them must be chosen. Using this annotation has the additional drawback that will prevent the service to evenly spread load across pod, and some pods may be get more load than others. | |||

===Client IP Preservation=== | |||

When an internal cluster connection is handled by a [[#Service|ClusterIP service]], the pods backing the service can obtain the client IP address. However, when the connection is proxied by a Node Port service, the packets' source IP is changes by Source Network Address Translation (SNAT). The backing pod will not be able to see the client IP address anymore. This is not the case when <code>[[Kubernetes_Service_Manifest#externalTrafficPolicy|externalTrafficPolicy]]</code> is set to <code>Local</code>, because there is no additional hop between the node receiving the connection and the node hosting the target pod - SNAT is not performed. | |||

==<span id='LoadBalancer'></span>LoadBalancer Service== | ==<span id='LoadBalancer'></span>LoadBalancer Service== | ||

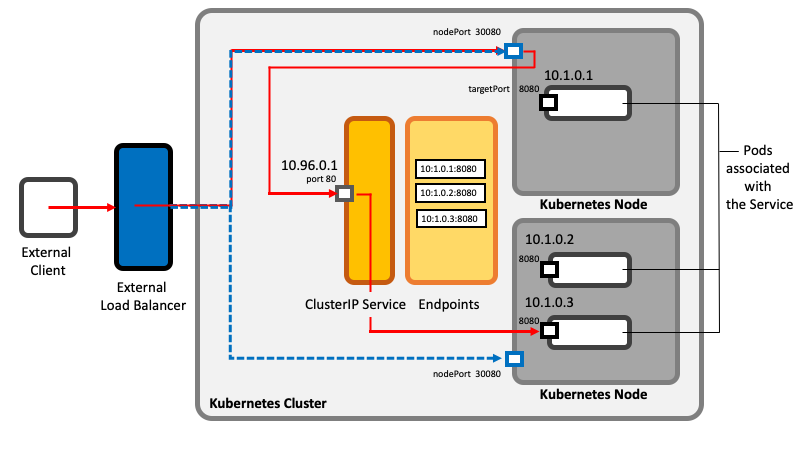

{{External|https://kubernetes.io/docs/concepts/services-networking/service/#loadbalancer}} | |||

A LoadBalancer service is a type of service that builds upon [[#NodePort_Service|NodePort service]] functionality and provides an additional feature: it interacts with the cloud infrastructure the [[Kubernetes_Control_Plane_and_Data_Plane_Concepts#Cluster|Kubernetes cluster]] is deployed on and triggers the provisioning of an external load balancer. The load balancer proxies traffic to the Kubernetes nodes, using the node port opened by the underlying NodeService. External clients connect to the service through the load balancer's IP. | |||

Note that each LoadBalancer service requires its own external load balancer. If this is a limitation, consider using an [[Kubernetes_Ingress_Concepts#Need_for_Ingress|ingress]]. | |||

<br> | |||

::[[File:Learning_Kubernetes_KubernetesServices_LoadBalancer.png]] | |||

<br> | |||

A LoadBalancer service can be created posting a manifest similar to the one below: | |||

<syntaxhighlight lang='yaml'> | |||

apiVersion: v1 | |||

kind: Service | |||

metadata: | |||

name: example-loadbalancer | |||

spec: | |||

type: LoadBalancer | |||

ports: | |||

- port: 80 | |||

targetPort: 8080 | |||

selector: | |||

function: serves-http | |||

</syntaxhighlight> | |||

It is similar to a NodePort manifest. | |||

If Kubernetes is running in an environment that does not support LoadBalancer services, the load balancer will not be provisioned, and the service will behave as NodePort service. | |||

===Playground=== | |||

{{External|https://github.com/ovidiuf/playground/tree/master/kubernetes/services/LoadBalancer}} | |||

===Limit Client IP Allowed to Access the Load Balancer=== | |||

Specify: | |||

<syntaxhighlight lang='yaml'> | |||

apiVersion: v1 | |||

kind: Service | |||

spec: | |||

type: LoadBalancer | |||

[...] | |||

loadBalancerSourceRanges: | |||

- "143.231.0.0/16" | |||

</syntaxhighlight> | |||

===LoadBalancers and Different Kubernetes Implementations=== | |||

====Docker Desktop Kubernetes==== | |||

A physical load balancer will not be provisioned. However, the service will become accessible on localhost (127.0.0.1) on the port declared as "port" (not nodePort) because Docker Desktop Kubernetes implementation will automatically tunnel to the node port, at the Docker Desktop Kubernetes VM's IP address: | |||

<syntaxhighlight lang='text'> | |||

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE | |||

example LoadBalancer 10.104.90.206 localhost 80:31353/TCP 10s | |||

curl http://localhost/ | |||

IP address: 10.1.0.75 | |||

</syntaxhighlight> | |||

====minikube==== | |||

<font color=darkkhaki> | |||

A physical load balancer will not be provisioned. However, the service will become accessible on localhost (127.0.0.1) because minikube implementation will automatically tunnel to the node port, at the minikube VM's IP address. | |||

</font> | |||

====kind==== | |||

====EKS==== | |||

{{Internal|Amazon_EKS_Concepts#Using_a_NLB|Using an AWS NLB as LoadBalancer}} | |||

==Ingress== | |||

An Ingress resource is a different mechanism for exposing multiple services through a single IP address. Unlike the services presented so far, which operate at layer 4, an Ingress operates at HTTP protocol layer (layer 7). | |||

{{Internal|Kubernetes Ingress Concepts|Ingress Concepts}} | |||

=Headless Service= | |||

A headless service is even a lower-level mechanism than a [[#Service|ClusterIP service]]. A headless service does not expose any stable Cluster IP address on its own, nor it directly load balances any requests. It simply encapsulates logic to publish the matching pods' IP addresses in the [[Kubernetes_DNS_Concepts#The_DNS_Service|internal DNS]], leaving the interested clients to look up the names in DNS, obtain the pod's IP addresses and connect to pods directly. The headless service facilitates load balancing across pods through the DNS round-robin mechanism. | |||

A headless service can be created posting a manifest similar to the one below - the key element is <code>clusterIP: None</code>: | |||

<syntaxhighlight lang='yaml'> | |||

apiVersion: v1 | |||

kind: Service | |||

metadata: | |||

name: example-headless | |||

spec: | |||

clusterIP: None | |||

ports: | |||

- port: 80 | |||

targetPort: 8080 | |||

selector: | |||

function: serves-http | |||

</syntaxhighlight> | |||

==Playground== | |||

{{External|https://github.com/ovidiuf/playground/tree/master/kubernetes/services/headless}} | |||

==How Headless Services Work== | |||

Upon service deployment, the associated [[#Endpoints|Endpoints]] instance builds the list of matching ready pods, and the pod IP addresses are added to DNS. Label matching and pod's [[Kubernetes_Service_Concepts#Readiness_Probe|container readiness probes]] are handled as for any other service. | |||

The DNS is updated to resolve the service name to multiple [[DNS_Concepts#A_.28Host.29|A records]] for the service, each pointing to the IP of an individual pod backing the service '''at the moment''': | |||

<syntaxhighlight lang='text'> | |||

nslookup example-headless.default.svc.cluster.local | |||

Server: 10.96.0.10 | |||

Address: 10.96.0.10#53 | |||

Name: example-headless.default.svc.cluster.local | |||

Address: 10.1.1.188 | |||

Name: example-headless.default.svc.cluster.local | |||

Address: 10.1.1.189 | |||

Name: example-headless.default.svc.cluster.local | |||

Address: 10.1.1.187 | |||

</syntaxhighlight> | |||

For pods deployed by [[Kubernetes StatefulSet|StatefulSets]], individual pods can be looked up by name - this is a feature particular to the StatefulSets. | |||

=Service Operations= | =Service Operations= | ||

* [[Kubernetes_Service_Operations#List_Services|List services]] | * [[Kubernetes_Service_Operations#List_Services|List services]] | ||

| Line 212: | Line 410: | ||

* [[Kubernetes_Service_Operations#With_CLI|Create a service with CLI]] | * [[Kubernetes_Service_Operations#With_CLI|Create a service with CLI]] | ||

* [[Kubernetes_Service_Operations#With_Metadata|Create a service with Metadata]] | * [[Kubernetes_Service_Operations#With_Metadata|Create a service with Metadata]] | ||

* [[Kubernetes_Service_Operations#Service_Troubleshooting|Service troubleshooting]] | |||

Latest revision as of 22:10, 22 March 2024

External

- https://kubernetes.io/docs/concepts/services-networking/service/

- https://www.ibm.com/support/knowledgecenter/en/SSBS6K_3.1.1/manage_network/kubernetes_types.html

Internal

Playground

Overview

A service is a Kubernetes resource that provides stable network access to a set of ephemeral pods. The need for such a resource is explained in the Need for Services section. The simplest kind of service provides a stable Cluster IP address. More complex services, such as NodePort and LoadBalancer services, use ClusterIP services and expand their functionality. The services and the pods they are exposed form a dynamic association labels and selectors. Once the logical association is declared, the Kubernetes cluster monitors the state of the pods and includes or excludes pods from the pod set exposed by the service. Other Kubernetes API resources, such as Endpoints, are involved in this process.

Need for Services

Most pods exist to serve requests sent over the network, so the pods' clients need to know how to open a network connection into the pod: they need an IP address and a port. This is true for clients external to the Kubernetes cluster, and also for pods running inside the cluster, which act as clients for other pods.

However, as described in the Pod Lifecycle section, the pods are ephemeral, they come and go. If a pod fails, it is not resurrected, but its failure is detected as result of lack of response from the liveness probe embedded within it and another pod is usually scheduled as replacement. The pod does not need to fail to be removed, the removal can be the result of a normal scale-down operation. In consequence, the IP address of an individual pod cannot be relied on.

First off, the IP address is dynamically allocated to the pod at the time of pod initialization, after the pod has been scheduled to a node and before the pod is started, and secondly, the IP address becomes obsolete the moment the pod disappears. As such, it is practically very difficult, if not impossible for application's clients running outside the Kubernetes cluster, or even for other pods acting as clients to keep track of ephemeral pod IP addresses.

Multiple pods may be providing the same service, and each of them has its own IP address. The client must not be put in the position to have to chose a specific IP address and pod, they should not even need to know there are multiple pods providing the service.

The solution to all these problems consists of maintaining an instance of a specialized resource, the service, for each of such group of pods. The service instance exposes a stable IP address and set of ports for the entire life of the service instance.

Service Manifest

Service (ClusterIP Service)

In its simplest form, a service is a Kubernetes API resource providing a single, stable Cluster IP address and a set of ports that serve as point of entry to a group of pods providing the same service. A service that does not specify a type is by default a "ClusterIP" service. The service provides connection forwarding and load balancing: each connection to the service is forwarded to a randomly selected backing pod. The service also provides discovery facilities, advertising itself via DNS and environment variables, as described in the Discovering ClusterIP Services within the Cluster section.

The IP address stays unchanged for the entire lifetime of the service. A client opens a network connection to the IP address and port, and the service load-balances the connection to one of the pods associated with it. Individual pods exposed by the service may come and go, and the service makes this transparent to the clients, as explained in the Associating a Service with Pods section.

Internal Kubernetes cluster clients, such as other pods, can use the service's IP address and ports to connect to the pods exposed by the service. This type of service is know as a ClusterIP service. Only internal clients can use a ClusterIP service, because the Cluster IP address is only routable from inside the Kubernetes cluster. Designating a service as a ClusterIP service by specifying spec.type=ClusterIP in the service's manifest is optional.

The pods may be scheduled on different physical Kubernetes nodes, but they are accessible to the service and each other via the virtual pod network.

The Cluster IP and the service's external port is reported by kubectl get svc:

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

httpd-svc ClusterIP 10.106.185.218 <none> 9898/TCP 43m

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 3h26m

Services operate at the TCP/UDP layer (level 4) and in consequence cannot provide application-layer routing. If such routing is needed, and Ingress must be used.

Playground

Service Port(s)

A service port designates a port exposed by the service externally. This is a port the service will be available on, and the value will be used by the external clients connecting to the service. It is declared with .spec.ports[*].port in the service manifest.

A service may expose more than one port, and the requests arrived on any of these ports will be forwarded to the corresponding configured target ports.

kind: Service

...

spec:

ports:

- name: http

port: 80

targetPort: 8080

- name: https

port: 443

targetPort: 8443

...

Note that the label selector applies to the service as a whole. It cannot be configured on a per-port basis. If different ports must map to a different subset of pods, different services must be created.

Port Name

When more than one port is specified for a service, a name must be specified for each port with .spec.ports[*].name, as shown in the above example. If there is just one port per service, the name element is optional.

Service Target Port

A target port represents the port exposed by a container from the pods associated with the service, as declared by the .spec.containers[*].ports array elements of the pod manifest. The service will forward requests over connections opened with this port as target. It is declared with .spec.ports[*].targetPort in the service manifest.

In most cases, an integral port value is used as .spec.ports[*].targetPort value, but it is also it is possible to name ports in the pod's manifest using .spec.containers[*].ports.name elements, and use those names as value for targetPort in the service definition:

kind: Service

...

spec:

ports:

- name: http

port: 80

targetPort: 'http'

The benefit of using names instead of port numbers is that the port numbers can be changed by the pods without changing the service spec.

Service Discovery - Discovering ClusterIP Services within the Cluster

A Kubernetes cluster automatically advertises its ClusterIP services throughout the cluster by publishing their name/IP address association as they come online. The potential clients can use two mechanism to learn the cluster IP address and ports associated with a specific service: environment variables and DNS.

Environment Variables

Any pod gets its environment automatically initialized by the cluster with special environment variables pointing to the IP address and ports for all services from the same namespace that exist at the moment of initialization.

⚠️ If a service comes on-line after a potential client pod has been deployed, the potential client pod's environment will not be updated.

The IP address of a service will be exposed as <SERVICE-NAME>_SERVICE_HOST and the port will be exposed as <SERVICE-NAME>_SERVICE_PORT, where all letters in the service name are uppercased and the dashes in the name are converted to underscores ("some-service" → "SOME_SERVICE"). If more than one port is declared for the service, the mandatory "name" element is uppercased and used to assemble the environment variable carrying the port value, following the pattern:

<SERVICE-NAME>_SERVICE_PORT_<PORT-NAME>:

EXAMPLE_SERVICE_HOST=10.103.254.75

EXAMPLE_SERVICE_PORT_A=80

EXAMPLE_SERVICE_PORT_B=81

DNS

During a service deployment, the Kubernetes API server updates the internal database of the cluster's DNS server so the name of the newly deployed service is mapped to its ClusterIP address. Each service gets a DNS entry in the internal DNS server. Any DNS query performed by a process running in a pod will be handled by the Kubernetes DNS server, which knows all services running in the cluster. This way, a client pod that knows the name of the service can access it through its fully qualified domain name (FQDN).

The FQDN of a service matches the following pattern:

<service-name>.<namespace>.svc.cluster.local

where <namespace> is the namespace the service was defined in and svc.cluster.local is a configurable suffix used in all local cluster service names. svc.cluster.local can be omitted, because all pods are configured to list svc.cluster.local in their /etc/resolv.conf search clause. If the client pod and the service are in the same namespace, the namespace name from the FQDN can also be omitted.

⚠️ Unlike in the case of environment variables, the service must still know the service port number, as that information is not disseminated with DNS. If the client pod and the service are deployed in the same namespace, the client pod can get the port number from the corresponding environment variable.

More details about DNS support in a Kubernetes cluster are available in:

Session Affinity

With no additional configuration, successive requests to a service, even if they are made by the same client, will be forwarded to different pods, randomly. By default, there is no session affinity, and this corresponds to a .spec.sessionAffinity configuration of "None".

However, even if session affinity is set to "None" but the client uses keep-alive connections, successive requests over the same connection will be sent to the same pod. This is because the services work at connection level, so when a connection to a service is first opened, it extends all the way to the target pod, and the same pod will be used until the connection is closed.

The only kind of session affinity that can be configured is "ClientIP": all requests made by a certain client, identified by its IP, are proxied to the same pod every time.

Services do not provide cookie-based session affinity because they operate at transport level (TCP/UDP) and do not inspect the payload carried by the packets. To enable cookie-based session affinity, the proxy must be capable of understanding HTTP, which is not the case for services.

Kubernetes API Server Service

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 7h47m

ClusterIP Service Implementation Details

Pinging ClusterIP Services

The cluster IP address associated with a service cannot be ping-ed. This is because the service's cluster IP is a virtual IP, and it only has meaning when combined with the service port.

Associating a Service with Pods

The pods are loosely associated with the service exposing them: a ClusterIP service identifies the pods to forward request to by declaring a selector:

kind: Service

spec:

...

selector:

key1: value1

key2: value2

...

The pods that intend to serve requests proxied by the service must declare all key/value pairs listed by the service selector as metadata labels to be considered:

kind: Pod

metadata:

...

labels:

key1: value1

key2: value2

...

The pod may declare extra labels in additions with those specified by the service selector, and those will not interfere with the service selection process. The label selector needs to match some of the labels declared by the pod, but for a pod to match a service, in must declare all of the labels the service is looking for. If the number of pods increases as result of scaling up the deployment, no service modification is required - the service dynamically identifies the new pods and starts to load-balance requests to them, as soon as they are ready to serve requests.

The list of active pods associated with the service is maintained by Endpoints, which is another Kubernetes API resource. All pods capable of handling requests for the service are represented by entries in the Endpoints instance associated with the service.

Endpoints

Although the pod selector is defined in the service spec, it is not used directly when redirecting incoming connections. Instead, the selector is used to build a list of container endpoints (IP:port pairs), which is then stored in the associated Kubernetes API Endpoints resource. The Endpoints instance has the same name as the service it is associated with.

When a client connects to the ClusterIP service, the service randomly selects one of the endpoints and redirects the incoming connection to the containerized server listening at that location. The list is dynamic, as new pods may be deployed, or suddenly become unavailable. The Endpoints instances are updated by the endpoints controller. Various factors are taken into consideration: label match, pod availability, the state of each of the pod's container readiness probe, etc. There is a one-to-one association between a service and its Endpoints instance, but the Endpoints is a separate resource and not an attribute of the service.

An endpoint stays in the list as long as its corresponding readiness probe succeeds. If a readiness probe fails, the corresponding endpoint, and the endpoints for all containers from the same pod are removed. The relationship between Endpoints, containers, pods and readiness probes is explained in:

The endpoint is also removed if the pod is deleted.

An Endpoints instance can be inspected with kubectl get endpoints <name>:

kubectl get endpoints example

NAME ENDPOINTS AGE

example 10.1.0.67:80,10.1.0.69:80 7m53s

kubectl -o yaml get endpoints example

apiVersion: v1

kind: Endpoints

metadata:

name: example

subsets:

- addresses:

- ip: 10.1.0.67

nodeName: docker-desktop

targetRef:

kind: Pod

name: httpd

- ip: 10.1.0.69

nodeName: docker-desktop

targetRef:

kind: Pod

name: httpd-3

ports:

- port: 80

protocol: TCP

If the service is created without a pod selector, the associated Endpoints won't even be created. However, it can be created, configured and updated manually. To create a service with manually managed endpoints, you need to create both the service and the Endpoints resource. They are connected via name: the name of the service and of the Endpoints instance must be the same.

Endpoints Controller

The endpoints controller is part of the controller manager.

Readiness Probe

By default, a container endpoint, which consists of the IP address and port pair, is added to the service's Endpoints list only if its parent container is ready, as reflected by the result of all its containers' readiness probe. This behavior can be changed via configuration, as described in Container and Pod Readiness Check. The probes are executed periodically and the container endpoint is taken out of the list if any of the probe fails - and possibly re-added if the probe starts succeeding again. Usually, there's just one container per pod, so "ready pod" and "ready container" are equivalent. More details about readiness probes:

Connecting to External Services from within the Cluster (ExternalName Service)

An ExternalName service is a service that proxies to an external service instead of a set of pods.

apiVersion: v1

kind: Service

metadata:

name: 'external-service'

spec:

type: ExternalName

externalName: someservice.someserver.com

ports:

- port: 80

ExternalName services are implemented in DNS - a CNAME DNS record is created for the service. Therefore, clients connecting to the service will connect to the external service directly, bypassing the service proxy completely. For this reason, these type of service do not even get a cluster IP.

The benefit of using an ExternalService is that it allows to modify the service definition and point to a different service any time later by only changing the externalName, or by changing the type back to ClusterIP and creating an Endpoints object for the service - either manually or via a selector.

Exposing Services outside the Cluster

NodePort Service

A NodePort service is a type of service that builds upon ClusterIP service functionality and provides an additional feature: it allows clients external to the Kubernetes cluster to connect to the service's pods. The feature is implemented by having each Kubernetes node open a port on the node itself, hence the name, and forwarding incoming node port connections to the underlying ClusterIP service. All features of the underlying ClusterIP service are also available on the NodePort service: internal clients can use the NodePort service as a ClusterIP service.

The port reserved by a NodePort service has the same value on all nodes.

A NodePort service can be created posting a manifest similar to:

apiVersion: v1

kind: Service

metadata:

name: example-nodeport

spec:

type: NodePort

ports:

- port: 80

targetPort: 8080

nodePort: 30080

selector:

function: serves-http

What makes the manifest different form a ClusterIP service manifest are the type (NodePort) and the optional nodePort configuration elements. Everything else is the same.

The valid range for node ports is 30000-32767. It is not mandatory to provide a value for nodePort. If not specified, a random port value will be chosen.

More details about the service manifest are available in:

Inspection of a NodePort service reveals that it also exposes a cluster IP address, as a ClusterIP service. The PORT(S) column reports both the internal port of the cluster IP (80) and the node port (30080). The service is available inside the cluster at 10.107.165.237:80 and externally, on any node, at <node-ip>:30080.

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

example NodePort 10.107.165.237 <none> 80:30080/TCP 6s

Playground

Node/Pod Relationship

Without additional configuration, a connection received on a particular node may or may not be forwarded to a pod deployed on that node, because the connection is forwarded to the ClusterIP service first, and the ClusterIP service chooses a pod randomly.

An extra network hop may not be desirable, and it can be prevented by configuring the service to redirect external traffic only to pods running on the node that receives the connection. This is done by setting externalTrafficPolicy to Local in the service specification section. If externalTrafficPolicy is configured to Local and a connection is established with the service's node port, the service proxy will choose a locally running pod. ⚠️ If no local pod exists, the connection will hang, so only nodes that have qualified pods deploying on them must be chosen. Using this annotation has the additional drawback that will prevent the service to evenly spread load across pod, and some pods may be get more load than others.

Client IP Preservation

When an internal cluster connection is handled by a ClusterIP service, the pods backing the service can obtain the client IP address. However, when the connection is proxied by a Node Port service, the packets' source IP is changes by Source Network Address Translation (SNAT). The backing pod will not be able to see the client IP address anymore. This is not the case when externalTrafficPolicy is set to Local, because there is no additional hop between the node receiving the connection and the node hosting the target pod - SNAT is not performed.

LoadBalancer Service

A LoadBalancer service is a type of service that builds upon NodePort service functionality and provides an additional feature: it interacts with the cloud infrastructure the Kubernetes cluster is deployed on and triggers the provisioning of an external load balancer. The load balancer proxies traffic to the Kubernetes nodes, using the node port opened by the underlying NodeService. External clients connect to the service through the load balancer's IP.

Note that each LoadBalancer service requires its own external load balancer. If this is a limitation, consider using an ingress.

A LoadBalancer service can be created posting a manifest similar to the one below:

apiVersion: v1

kind: Service

metadata:

name: example-loadbalancer

spec:

type: LoadBalancer

ports:

- port: 80

targetPort: 8080

selector:

function: serves-http

It is similar to a NodePort manifest.

If Kubernetes is running in an environment that does not support LoadBalancer services, the load balancer will not be provisioned, and the service will behave as NodePort service.

Playground

Limit Client IP Allowed to Access the Load Balancer

Specify:

apiVersion: v1

kind: Service

spec:

type: LoadBalancer

[...]

loadBalancerSourceRanges:

- "143.231.0.0/16"

LoadBalancers and Different Kubernetes Implementations

Docker Desktop Kubernetes

A physical load balancer will not be provisioned. However, the service will become accessible on localhost (127.0.0.1) on the port declared as "port" (not nodePort) because Docker Desktop Kubernetes implementation will automatically tunnel to the node port, at the Docker Desktop Kubernetes VM's IP address:

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

example LoadBalancer 10.104.90.206 localhost 80:31353/TCP 10s

curl http://localhost/

IP address: 10.1.0.75

minikube

A physical load balancer will not be provisioned. However, the service will become accessible on localhost (127.0.0.1) because minikube implementation will automatically tunnel to the node port, at the minikube VM's IP address.

kind

EKS

Ingress

An Ingress resource is a different mechanism for exposing multiple services through a single IP address. Unlike the services presented so far, which operate at layer 4, an Ingress operates at HTTP protocol layer (layer 7).

Headless Service

A headless service is even a lower-level mechanism than a ClusterIP service. A headless service does not expose any stable Cluster IP address on its own, nor it directly load balances any requests. It simply encapsulates logic to publish the matching pods' IP addresses in the internal DNS, leaving the interested clients to look up the names in DNS, obtain the pod's IP addresses and connect to pods directly. The headless service facilitates load balancing across pods through the DNS round-robin mechanism.

A headless service can be created posting a manifest similar to the one below - the key element is clusterIP: None:

apiVersion: v1

kind: Service

metadata:

name: example-headless

spec:

clusterIP: None

ports:

- port: 80

targetPort: 8080

selector:

function: serves-http

Playground

How Headless Services Work

Upon service deployment, the associated Endpoints instance builds the list of matching ready pods, and the pod IP addresses are added to DNS. Label matching and pod's container readiness probes are handled as for any other service.

The DNS is updated to resolve the service name to multiple A records for the service, each pointing to the IP of an individual pod backing the service at the moment:

nslookup example-headless.default.svc.cluster.local

Server: 10.96.0.10

Address: 10.96.0.10#53

Name: example-headless.default.svc.cluster.local

Address: 10.1.1.188

Name: example-headless.default.svc.cluster.local

Address: 10.1.1.189

Name: example-headless.default.svc.cluster.local

Address: 10.1.1.187

For pods deployed by StatefulSets, individual pods can be looked up by name - this is a feature particular to the StatefulSets.