Linux Logical Volume Management Concepts: Difference between revisions

| Line 63: | Line 63: | ||

<font color=red> | <font color=red> | ||

This allows you to create logical volumes that are larger than the available extents. Using thin provisioning, you can manage a storage pool of free space, known as a thin pool, which can be allocated to an arbitrary number of devices when needed by applications. You can then create devices that can be bound to the thin pool for later allocation when an application actually writes to the logical volume. The thin pool can be expanded dynamically when needed for cost-effective allocation of storage space. | This allows you to create logical volumes that are larger than the available extents. Using thin provisioning, you can manage a storage pool of free space, known as a <span id='thin_pool'></span>''thin pool'', which can be allocated to an arbitrary number of devices when needed by applications. You can then create devices that can be bound to the thin pool for later allocation when an application actually writes to the logical volume. The thin pool can be expanded dynamically when needed for cost-effective allocation of storage space. | ||

Thin volumes are not supported across the nodes in a cluster. The thin pool and all its thin volumes must be exclusively activated on only one cluster node. | Thin volumes are not supported across the nodes in a cluster. The thin pool and all its thin volumes must be exclusively activated on only one cluster node. | ||

Revision as of 00:43, 23 May 2017

External

- https://access.redhat.com/documentation/en-US/Red_Hat_Enterprise_Linux/7/html/Logical_Volume_Manager_Administration/index.html

- http://www.tldp.org/HOWTO/LVM-HOWTO/

Internal

Overview

LVM provides a higher-level view of the disk storage than the traditional view of disks and partitions. Storage volumes created under the control of the logical volume manager can be resized and moved around. The logical volume manager also allows management of storage volumes in user-defined groups, allowing the system administrator to deal with sensibly named volume groups such as "development" and "sales" rather than physical disk names such as "sda" and "sdb".

The whole disk can be allocated to a single volume group and logical volumes created to hold various file systems. If, for example the /home logical volume later filled up but there was still space available on /usr then it would be possible to shrink /usr by a few megabytes and reallocate that space to /home. When new drives are added to the system, it is no longer necessary to move users files around to make the best use of the new storage; simply add the new disk into an existing volume group or groups and extend the logical volumes as necessary.

The boot partition should not be included boot on the LV because some bootloaders don't understand LVM.

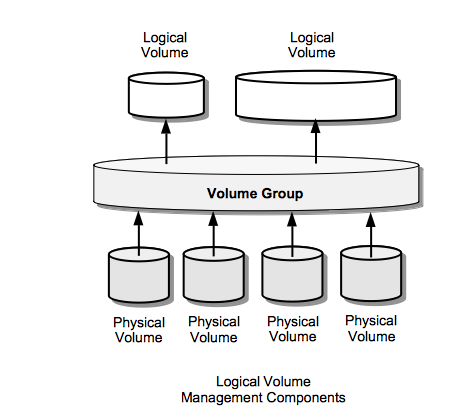

Physical Volumes (PVs)

Is typically a hard disk, or any device that looks like a hard disk. Physical volumes are allocated to a volume group (VG).

Physical Extent (PE ) / Logical Extents (LE)

Each physical volume is divided in chunks of data - they have the same size as the logical extents for the volume group. Each logical volume is also split into chunks of data with the same size for all logical volumes in the volume group.

Volume Group (VG)

A Volume Group (VG) gathers together a collection of Logical Volumes and Physical Volumes into one administrative unit.

The Volume Groups are mapped under /dev:

/dev/<VG-name>

Logical Volume (LV)

The equivalent of a partition on a non-LVM system. The LV is visible as a standard block device, it can contain a file system.

The LV is mapped under /dev/<VG-name>:

/dev/<VG-name>/<LV-name>

Linear Volumes

A linear volume aggregates space from one or more physical volumes into one logical volume. The physical storage is concatenated.The physical extents are assigned to an area of the logical volume in order. The physical volumes that make up a logical volume do not have to be the same size. More than one logical volume of whatever size can be configured from a pool of physical extents.

Striped Logical Volumes

For a striped logical volume the data is written across different underlying physical volumes, which, for large sequential reads and writes, can improve the efficiency of I/O: I/O can be done in parallel.

RAID Logical Volumes

LVM supports RAID0/1/4/5/6/10.

Thinly-Provisioned Logical Volumes (Thin Volumes)

This allows you to create logical volumes that are larger than the available extents. Using thin provisioning, you can manage a storage pool of free space, known as a thin pool, which can be allocated to an arbitrary number of devices when needed by applications. You can then create devices that can be bound to the thin pool for later allocation when an application actually writes to the logical volume. The thin pool can be expanded dynamically when needed for cost-effective allocation of storage space.

Thin volumes are not supported across the nodes in a cluster. The thin pool and all its thin volumes must be exclusively activated on only one cluster node.

By using thin provisioning, a storage administrator can overcommit the physical storage, often avoiding the need to purchase additional storage. For example, if ten users each request a 100GB file system for their application, the storage administrator can create what appears to be a 100GB file system for each user but which is backed by less actual storage that is used only when needed.

When using thin provisioning, it is important that the storage administrator monitor the storage pool and add more capacity if it starts to become full.

To make sure that all available space can be used, LVM supports data discard. This allows for re-use of the space that was formerly used by a discarded file or other block range.

Thin volumes provide support for a new implementation of copy-on-write (COW) snapshot logical volumes, which allow many virtual devices to share the same data in the thin pool.

Snapshot Volumes

The LVM snapshot feature provides the ability to create virtual images of a device at a particular instant without causing a service interruption. When a change is made to the original device (the origin) after a snapshot is taken, the snapshot feature makes a copy of the changed data area as it was prior to the change so that it can reconstruct the state of the device.

LVM supports thinly-provisioned snapshots.

LVM snapshots are not supported across the nodes in a cluster. You cannot create a snapshot volume in a clustered volume group.

Because a snapshot copies only the data areas that change after the snapshot is created, the snapshot feature requires a minimal amount of storage. For example, with a rarely updated origin, 3-5 % of the origin's capacity is sufficient to maintain the snapshot.

Snapshot copies of a file system are virtual copies, not an actual media backup for a file system. Snapshots do not provide a substitute for a backup procedure.

The size of the snapshot governs the amount of space set aside for storing the changes to the origin volume. For example, if you made a snapshot and then completely overwrote the origin the snapshot would have to be at least as big as the origin volume to hold the changes. You need to dimension a snapshot according to the expected level of change. So for example a short-lived snapshot of a read-mostly volume, such as /usr, would need less space than a long-lived snapshot of a volume that sees a greater number of writes, such as /home.

If a snapshot runs full, the snapshot becomes invalid, since it can no longer track changes on the origin volume. You should regularly monitor the size of the snapshot. Snapshots are fully resizeable, however, so if you have the storage capacity you can increase the size of the snapshot volume to prevent it from getting dropped. Conversely, if you find that the snapshot volume is larger than you need, you can reduce the size of the volume to free up space that is needed by other logical volumes. When you create a snapshot file system, full read and write access to the origin stays possible. If a chunk on a snapshot is changed, that chunk is marked and never gets copied from the original volume.

There are several uses for the snapshot feature:

- Most typically, a snapshot is taken when you need to perform a backup on a logical volume without halting the live system that is continuously updating the data.

- You can execute the fsck command on a snapshot file system to check the file system integrity and determine whether the original file system requires file system repair.

- Because the snapshot is read/write, you can test applications against production data by taking a snapshot and running tests against the snapshot, leaving the real data untouched.

- You can create LVM volumes for use with Red Hat Virtualization. LVM snapshots can be used to create snapshots of virtual guest images. These snapshots can provide a convenient way to modify existing guests or create new guests with minimal additional storage.

Thinly-Provisioned Snapshot Volumes

Thin snapshot volumes allow many virtual devices to be stored on the same data volume. This simplifies administration and allows for the sharing of data between snapshot volumes. As for all LVM snapshot volumes, as well as all thin volumes, thin snapshot volumes are not supported across the nodes in a cluster. The snapshot volume must be exclusively activated on only one cluster node.

Thin snapshot volumes provide the following benefits:

- A thin snapshot volume can reduce disk usage when there are multiple snapshots of the same origin volume.

- If there are multiple snapshots of the same origin, then a write to the origin will cause one COW operation to preserve the data. Increasing the number of snapshots of the origin should yield no major slowdown.

- Thin snapshot volumes can be used as a logical volume origin for another snapshot. This allows for an arbitrary depth of recursive snapshots (snapshots of snapshots of snapshots...).

- A snapshot of a thin logical volume also creates a thin logical volume. This consumes no data space until a COW operation is required, or until the snapshot itself is written.

- A thin snapshot volume does not need to be activated with its origin, so a user may have only the origin active while there are many inactive snapshot volumes of the origin.

- When you delete the origin of a thinly-provisioned snapshot volume, each snapshot of that origin volume becomes an independent thinly-provisioned volume. This means that instead of merging a snapshot with its origin volume, you may choose to delete the origin volume and then create a new thinly-provisioned snapshot using that independent volume as the origin volume for the new snapshot.

Although there are many advantages to using thin snapshot volumes, there are some use cases for which the older LVM snapshot volume feature may be more appropriate to your needs:

- You cannot change the chunk size of a thin pool. If the thin pool has a large chunk size (for example, 1MB) and you require a short-living snapshot for which a chunk size that large is not efficient, you may elect to use the older snapshot feature.

- You cannot limit the size of a thin snapshot volume; the snapshot will use all of the space in the thin pool, if necessary. This may not be appropriate for your needs.

Cache Volumes

Topology

Volume Groups present on a system are available as directories right under /dev. Each Volume Group directory contains "Logical Volumes", which are links to /dev/mapper block devices:

/dev

|

+ VolGroup00

|

+ LogVol00 -> /dev/mapper/VolGroup00-LogVol00

+ LogVol01 -> /dev/mapper/VolGroup00-LogVol01

+ borabora -> /dev/mapper/VolGroup00-borabora

+ centos55reference -> /dev/mapper/VolGroup00-centos55reference

+ raiatea

...