Kubernetes Horizontal Pod Autoscaler


Revision as of 19:28, 12 October 2020

External

- https://kubernetes.io/docs/tasks/run-application/horizontal-pod-autoscale/

- https://kubernetes.io/docs/tasks/run-application/horizontal-pod-autoscale-walkthrough/

- https://towardsdatascience.com/kubernetes-hpa-with-custom-metrics-from-prometheus-9ffc201991e

- https://github.com/kubernetes/community/blob/master/contributors/design-proposals/instrumentation/custom-metrics-api.md

Internal

Overview

Horizontal pod autoscaling is the automatic increase or decrease in the number of pod replicas managed by a higher-level controller that supports scaling, such as a deployment, replica set or stateful set. Increasing the number of pods should bring down the per-pod average of the metric on which autoscaling is based.

How it Works

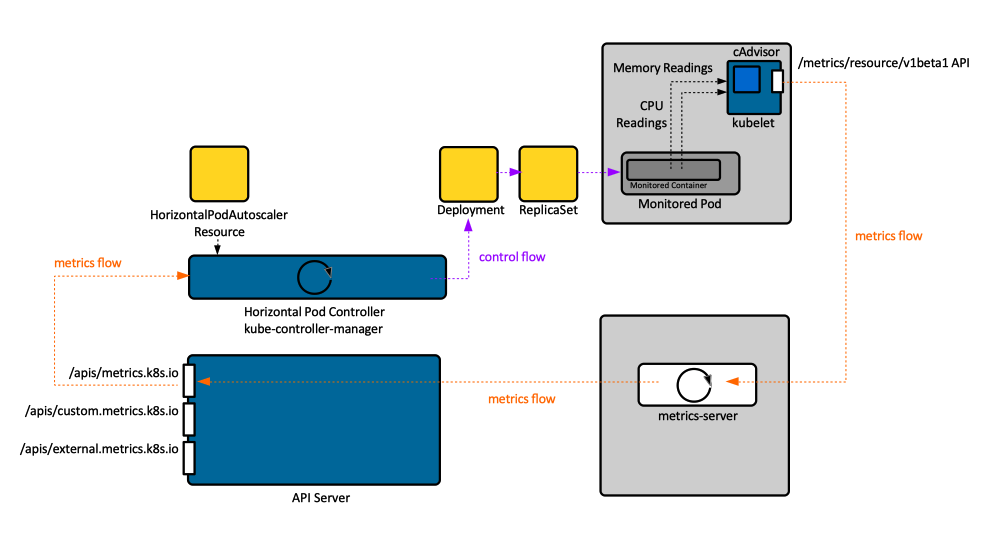

Scaling is performed by the horizontal pod autoscaler controller, which is enabled and configured through a HorizontalPodAutoscaler Kubernetes API resource. For a horizontal pod autoscaler to work correctly, a source of metrics, in particular resource metrics, must be deployed. The simplest source of resource metrics is the metrics server.

The controller periodically reads the appropriate metrics API to obtain metrics for the pods it monitors. The set of pods to watch is provided by the higher-level pod controller the autoscaler is associated with. The autoscaler calculates the number of replicas required to meet the target metric configured on the HorizontalPodAutoscaler resource, as described in the Autoscaling Algorithm section, below. If the calculated number differs from the current replica count, the controller adjusts the "replicas" field of the scaled resource through the Scale sub-resource. The target pod controller is not aware of the autoscaler. As far as it is concerned, anyone, including the autoscaler, may update the replica count.

Each component in this chain reads metrics from its source periodically. As a result, it takes a while for a change in a metric to propagate and for a rescaling action to be performed.

Horizontal Pod Autoscaler Controller

The horizontal pod autoscaler controller is part of the cluster's controller manager process.

Autoscaling Algorithm

Autoscaling can be performed for one metric or multiple metrics. If multiple metrics are involved, the controller computes the required number of pods for each metric, and picks the largest value.

For a specific metric, the goal of the algorithm is to compute the number of replicas that will bring the average value of the metric as close to the target value as possible. The input is a set of metrics, one for each pod, and the output is an integer, which represents the target number of pod replicas.
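The calculation described above can be sketched in a few lines. This is only an illustration, assuming the ceiling-of-ratio rule given in the upstream Kubernetes documentation (desired replicas = ceil(current replicas × current average / target)), which is the same as ceil(sum of per-pod values / target). The function names are hypothetical and not part of any Kubernetes API.

```python
import math


def replicas_for_metric(pod_values, target):
    """Replica count that brings the per-pod average down to `target`.

    `pod_values` holds one metric reading per running pod.
    """
    if target <= 0:
        raise ValueError("target must be positive")
    # ceil(sum / target) == ceil(current_replicas * average / target)
    return math.ceil(sum(pod_values) / target)


def desired_replicas(metrics):
    """`metrics` is a list of (pod_values, target) pairs, one per metric.

    With several metrics, the largest per-metric result wins.
    """
    return max(replicas_for_metric(values, target) for values, target in metrics)
```

For example, three pods reporting 60, 90 and 50 against a target of 50 yield four replicas, since the total of 200 divided by 50 is 4. The real controller additionally clamps the result to the configured minimum and maximum replica counts.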

The algorithm also needs to make sure that the autoscaler does not thrash around when the metric value is unstable and changes rapidly.
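One way to damp thrashing is to ignore small deviations from the target. The sketch below assumes a tolerance of 0.1 around the current-to-target ratio, which matches the documented default of the controller manager's `--horizontal-pod-autoscaler-tolerance` flag. The function name is hypothetical, and the real controller applies further safeguards (such as a stabilization window before scaling down) that are not modeled here.

```python
import math


def rescale_with_tolerance(current_replicas, current_avg, target, tolerance=0.1):
    """Return the new replica count, or the current one if the metric
    is within `tolerance` of the target (assumed default: 0.1)."""
    ratio = current_avg / target
    if abs(ratio - 1.0) <= tolerance:
        # Close enough to the target: skip rescaling to avoid flapping.
        return current_replicas
    return math.ceil(current_replicas * ratio)
```

With four replicas and a target of 50, an average of 52 leaves the count unchanged, while an average of 75 scales up to six.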

Horizontal Pod Autoscaler Resource

The HorizontalPodAutoscaler Kubernetes API resource is deployed like any other Kubernetes resource, by posting a manifest to the API server.

HorizontalPodAutoscaler Manifest
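As a rough illustration of what such a manifest contains, the following sketch builds one as a Python dictionary and prints it as JSON, which `kubectl apply -f -` accepts alongside YAML. It assumes the `autoscaling/v2` API version; clusters contemporary with this revision served `autoscaling/v2beta2` instead. The helper name `hpa_manifest` and the example values ("web", 2, 10, 50) are hypothetical.

```python
import json


def hpa_manifest(name, target_kind, target_name, min_replicas, max_replicas, cpu_percent):
    """Build a HorizontalPodAutoscaler manifest targeting average CPU utilization."""
    return {
        "apiVersion": "autoscaling/v2",  # assumption: older clusters use autoscaling/v2beta2
        "kind": "HorizontalPodAutoscaler",
        "metadata": {"name": name},
        "spec": {
            # The higher-level controller whose replica count is adjusted.
            "scaleTargetRef": {
                "apiVersion": "apps/v1",
                "kind": target_kind,
                "name": target_name,
            },
            "minReplicas": min_replicas,
            "maxReplicas": max_replicas,
            "metrics": [
                {
                    "type": "Resource",
                    "resource": {
                        "name": "cpu",
                        "target": {
                            "type": "Utilization",
                            "averageUtilization": cpu_percent,
                        },
                    },
                }
            ],
        },
    }


if __name__ == "__main__":
    # Example values are illustrative only.
    print(json.dumps(hpa_manifest("web", "Deployment", "web", 2, 10, 50), indent=2))
```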

CPU-based Scaling

The CPU-based scaling algorithm uses the guaranteed CPU amount (the CPU requests) when determining the CPU utilization of the pod.
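A small sketch of this relationship, assuming usage and request are both expressed in millicores. The function name is hypothetical.

```python
def cpu_utilization_percent(usage_millicores, request_millicores):
    """CPU utilization of a pod as a percentage of its CPU request."""
    if request_millicores <= 0:
        raise ValueError("pod must declare a positive CPU request")
    return 100.0 * usage_millicores / request_millicores
```

Because the denominator is the request and not a limit, utilization can exceed 100%: a pod that requests 100m and consumes 150m is at 150%.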