Kubernetes Horizontal Pod Autoscaler

| (59 intermediate revisions by the same user not shown) | |||

| Line 2: | Line 2: | ||

* https://kubernetes.io/docs/tasks/run-application/horizontal-pod-autoscale/ | * https://kubernetes.io/docs/tasks/run-application/horizontal-pod-autoscale/ | ||

* https://kubernetes.io/docs/tasks/run-application/horizontal-pod-autoscale-walkthrough/ | * https://kubernetes.io/docs/tasks/run-application/horizontal-pod-autoscale-walkthrough/ | ||

* https://github.com/kubernetes/community/blob/master/contributors/design-proposals/instrumentation/custom-metrics-api.md | |||

* https://github.com/kubernetes/community/blob/master/contributors/design-proposals/autoscaling/hpa-v2.md | |||

* https://towardsdatascience.com/kubernetes-hpa-with-custom-metrics-from-prometheus-9ffc201991e | * https://towardsdatascience.com/kubernetes-hpa-with-custom-metrics-from-prometheus-9ffc201991e | ||

=Internal= | =Internal= | ||

| Line 11: | Line 12: | ||

=Overview= | =Overview= | ||

One of the basic Kubernetes features is the ability to manually horizontally scale pods up and down by increasing or decreasing the desired replica count field on the pod controller. Automatic scaling is built in top of that. Horizontal pod autoscaling is the automatic increase or decrease the number of pod replicas managed by a higher level controller that supports scaling, such as [[Kubernetes_Deployments#Scaling|deployments]], replica sets and stateful sets. By increasing the number of pods, the average value per pod for the autoscaling target value should come down. Metrics appropriate for autoscaling are those metrics whose average value decreases linearly with the increase of pod replica count. | One of the basic Kubernetes features is the ability to manually horizontally scale pods up and down by increasing or decreasing the desired replica count field on the pod controller. Automatic scaling is built in top of that. Horizontal pod autoscaling is the automatic increase or decrease the number of pod replicas managed by a higher level controller that supports scaling, such as [[Kubernetes_Deployments#Scaling|deployments]], replica sets and stateful sets. The autoscaling mechanism aims for a target value for one or more [[Monitoring_Concepts#Metric|metrics]]. By increasing the number of pods, the average value per pod for the autoscaling target value should come down. Metrics appropriate for autoscaling are those metrics whose average value decreases linearly with the increase of pod replica count. | ||

=How it Works= | =How it Works= | ||

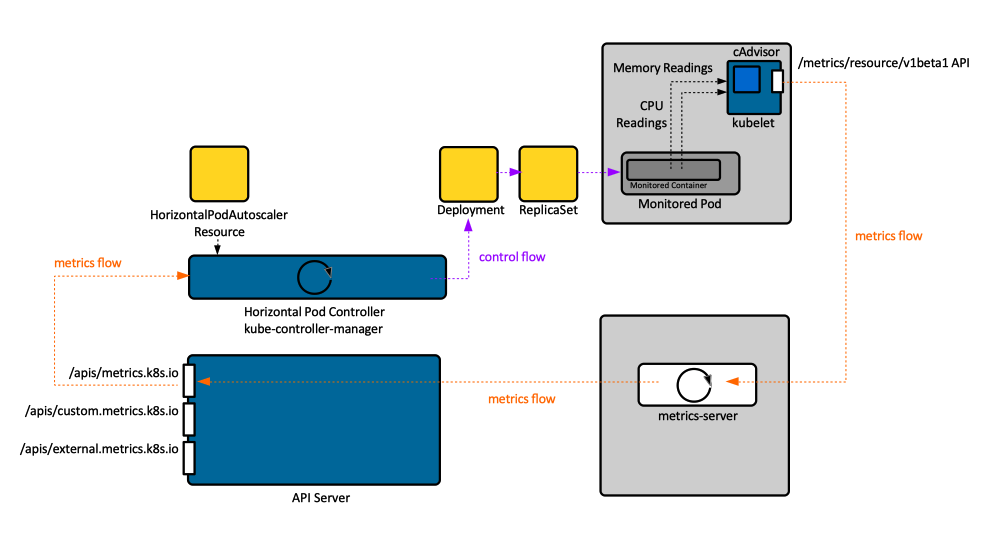

The scaling is performed by a [[#HorizontalPodAutoscaler_Controller|horizontal pod controller]] and it is | The scaling is performed by a [[#HorizontalPodAutoscaler_Controller|horizontal pod controller]] and it is configured by a [[#HorizontalPodAutoscaler_Resource|HorizontalPodAutoscaler Kubernetes API resource]], which enables and configures the horizontal pod autoscaler. For an horizontal pod autoscaler to work correctly, a source of metrics, most commonly [[Metrics_in_Kubernetes#Resource_Metrics|resource metrics]], must be deployed. In case of resource metrics, the autoscaler uses the corresponding resource request as a reference, so if some of the containers do not have the relevant resource request set, the autoscaler will not take any action for that metric. <font color=darkgray>This does not apply for custom and external metrics</font>. The simplest source of resource metrics is the [[Kubernetes Metrics Server|metrics server]]. | ||

The controller periodically reads the appropriate [[Metrics_in_Kubernetes#Metrics|metrics API]]s, as specified in the [[#Resource|HorizontalPodAutoscaler resource]] definition, and obtains metrics for the pods it monitors. To be able to access metrics APIs, [[Horizontal_Pod_Autoscaler_Operations#--horizontal-pod-autoscaler-use-rest-clients|--horizontal-pod-autoscaler-use-rest-clients]] must be set to true, the [[Kubernetes_Aggregation_Layer|API aggregation layer]] must be enabled, and the corresponding APIs ([[Metrics_in_Kubernetes#Resource_Metrics_API|resource metrics API]], [[Metrics_in_Kubernetes#Custom_Metrics_API|custom metrics API]] or [[Metrics_in_Kubernetes#External_Metrics_API|external metrics API]]) | The controller periodically reads the appropriate [[Metrics_in_Kubernetes#Metrics|metrics API]]s, as specified in the [[#Resource|HorizontalPodAutoscaler resource]] definition, and obtains metrics for the pods it monitors. To be able to access metrics APIs, [[Horizontal_Pod_Autoscaler_Operations#--horizontal-pod-autoscaler-use-rest-clients|--horizontal-pod-autoscaler-use-rest-clients]] must be set to true, the [[Kubernetes_Aggregation_Layer|API aggregation layer]] must be enabled, and the corresponding APIs ([[Metrics_in_Kubernetes#Resource_Metrics_API|resource metrics API]], [[Metrics_in_Kubernetes#Custom_Metrics_API|custom metrics API]] or [[Metrics_in_Kubernetes#External_Metrics_API|external metrics API]]) must be registered. The query cycle period is set with [[Horizontal_Pod_Autoscaler_Operations#--horizontal-pod-autoscaler-sync-period|--horizontal-pod-autoscaler-sync-period]]. | ||

The set of pods to watch is provided by the higher level pod controller the autoscaler is associated with. The autoscaler calculates the number of replicas required to meet the target metric | The set of pods to watch is provided by the higher level pod controller the autoscaler is associated with. The autoscaler calculates the number of replicas required to meet the target metric, as described in the [[#Autoscaling_Algorithm|Autoscaling Algorithm]] section, below. If there is a mismatch, the controller adjusts the "replicas" field through the Scale sub-resource of the pod controller. The target pod controller is not aware of the autoscaler. In what it is concerned, anybody, including the autoscaler, may update the replica count. | ||

Each component gets metrics from its source periodically. The end effect is that it takes a while for metrics to be propagated and a rescaling action to be performed. | Each component involved in autoscaling gets metrics from its source periodically. The end effect is that it takes a while for metrics to be propagated and a rescaling action to be performed. | ||

Autoscaling can be performed for one metric or multiple metrics. If multiple metrics are involved, the controller computes the required number of pods for each metric, and picks the larger value. | Autoscaling can be performed for one metric or multiple metrics. If multiple metrics are involved, the controller computes the required number of pods for each metric, and picks the larger value. | ||

'''Utilization vs. raw values'''. If a target utilization value is set, the controller calculates the utilization value as a percentage of the equivalent resource request on the containers in each pod. If a target raw value is set, the raw metric values are used directly. The controller then takes the mean of the utilization or the raw value across all targeted pods, and produces a ratio used to scale the number of desired replicas. | '''Utilization vs. raw values'''. If a target utilization value is set, the controller calculates the utilization value as a percentage of the equivalent resource request on the containers in each pod. Utilization values can only be used with resource metrics, because only for resource metrics we can set requests. If a target raw value is set, the raw metric values are used directly. The controller then takes the mean <font color=darkgray>or average(?)</font> of the utilization or the raw value across all targeted pods, and produces a ratio used to scale the number of desired replicas. If [[Metrics_in_Kubernetes#Pod_Metrics|per-pod custom metrics]] are used in autoscaling, the controller works with raw values, not utilization values. | ||

For [[Metrics_in_Kubernetes#Object_Metrics|object metrics]] and [[Metrics_in_Kubernetes#External_Metrics|external]] metrics, a single metric is fetched and it compared to the target value to produce a ratio. In [[Kubernetes_Horizontal_Pod_Autoscaler_Manifest_Version_2_beta_2|autoscaling/v2beta2]] API version, the value can optionally be divided by the number of pods before the comparison is made. | |||

Deployments should be preferred for autoscaling instead of ReplicaSets. This way, the desired replica count is preserved across application updates, because a Deployment creates a new ReplicaSet for each version. | |||

::[[File:Metrics_and_Autoscaling.png]] | ::[[File:Metrics_and_Autoscaling.png]] | ||

| Line 38: | Line 40: | ||

{{External|https://kubernetes.io/docs/tasks/run-application/horizontal-pod-autoscale/#algorithm-details}} | {{External|https://kubernetes.io/docs/tasks/run-application/horizontal-pod-autoscale/#algorithm-details}} | ||

For a specific metric, the goal of the algorithm is to compute the number of replicas that will bring the average value of the metric as close to the target value as possible. The tolerance can be set with [[Horizontal Pod Autoscaler Operations#--horizontal-pod-autoscaler-tolerance|--horizontal-pod-autoscaler-tolerance]]. The input is a set of metrics, one for each pod, | For a specific metric, the goal of the algorithm is to compute the number of replicas that will bring the average value of the metric as close to the target value as possible. The tolerance can be set with [[Horizontal Pod Autoscaler Operations#--horizontal-pod-autoscaler-tolerance|--horizontal-pod-autoscaler-tolerance]]. The input is a set of metrics, one for each pod in case of resource metrics or pod custom metrics, or just one metric for object or external metrics. The output is an integer, which represents the target number of pod replicas. | ||

The algorithm also needs to make sure that the autoscaler does not thrash around when the metric value is unstable and changes rapidly. One parameter that can be used to control this behavior is [[Horizontal_Pod_Autoscaler_Operations#--horizontal-pod-autoscaler-downscale-stabilization|--horizontal-pod-autoscaler-downscale-stabilization]]. | The algorithm also needs to make sure that the autoscaler does not thrash around when the metric value is unstable and changes rapidly. One parameter that can be used to control this behavior is [[Horizontal_Pod_Autoscaler_Operations#--horizontal-pod-autoscaler-downscale-stabilization|--horizontal-pod-autoscaler-downscale-stabilization]]. | ||

The autoscaler will at most double the number of replicas in a single operation, if more that two current replicas exist. If only one replica exists, there is no such limitation. The autoscaler also has a limit of how soon a subsequent autoscale operation can occur after the previous one. | The autoscaler will at most double the number of replicas in a single operation, if more that two current replicas exist. If only one replica exists, there is no such limitation. The autoscaler also has a limit of how soon a subsequent autoscale operation can occur after the previous one. By default, a scale-up will occur only if no rescaling event occurred in the last three minutes and a scale-down is performed every five minutes. | ||

<font color=darkgray>This makes sense if the autoscaler reads metrics from pods and average the value per pod - it will know how many target pods needs to start (or shut down) to reach the target value. How about a custom object metric, that is not correlated to pods?</font> | |||

=<span id='Resource'></span><span id='HorizontalPodAutoscaler_Resource'></span>Horizontal Pod Autoscaler Resource= | =<span id='Resource'></span><span id='HorizontalPodAutoscaler_Resource'></span>Horizontal Pod Autoscaler Resource= | ||

The HorizontalPodAutoscaler [[Kubernetes_API_Resources_Concepts#HorizontalPodAutoscaler|Kubernetes API resource]] is deployed as any other Kubernetes resource by posting a [[Kubernetes_Horizontal_Pod_Autoscaler_Manifest|manifest]] to the API server. The resource determines the behavior of the [[#Horizontal_Pod_Autoscaler_Controller|controller]]. The HorizontalPodAutoscaler minReplicas field cannot be set to zero, so the autoscaler will never scale down to zero pods | The HorizontalPodAutoscaler [[Kubernetes_API_Resources_Concepts#HorizontalPodAutoscaler|Kubernetes API resource]] is deployed as any other Kubernetes resource by posting a [[Kubernetes_Horizontal_Pod_Autoscaler_Manifest|manifest]] to the API server. The resource determines the behavior of the [[#Horizontal_Pod_Autoscaler_Controller|controller]]. The HorizontalPodAutoscaler minReplicas field cannot be set to zero, so the autoscaler will never scale down to zero pods, as currently idling and un-idling is not supported. The resource can be changed after deployment, the changes will be detected and acted upon. | ||

==<span id='HPA_Manifest'>HorizontalPodAutoscaler Manifest</span>== | ==<span id='HPA_Manifest'>HorizontalPodAutoscaler Manifest</span>== | ||

| Line 52: | Line 56: | ||

=CPU-based Scaling= | =CPU-based Scaling= | ||

The CPU-based scaling algorithm uses the guaranteed CPU amount (the [[Kubernetes_Resource_Management_Concepts#CPU_Request|CPU requests]]) when determining the CPU utilization of the pod. This means the pod needs to have the CPU requests set, either directly or through a [[Kubernetes_Resource_Management_Concepts#Limit_Ranges|LimitRange]] object, to be eligible for autoscaling. | The CPU-based scaling algorithm uses the guaranteed CPU amount (the [[Kubernetes_Resource_Management_Concepts#CPU_Request|CPU requests]]) when determining the CPU utilization of the pod. This means the pod needs to have the CPU requests set, either directly or through a [[Kubernetes_Resource_Management_Concepts#Limit_Ranges|LimitRange]] object, to be eligible for autoscaling. CPU average utilization is the container's actual CPU usage divided by its CPU request value. | ||

CPU average utilization is the container's actual CPU usage divided by its CPU request value | |||

Also see: | Also see: | ||

* [[Horizontal_Pod_Autoscaler_Operations#With_CLI|Create a CPU-based horizontal pod autoscaler with CLI]] | * [[Horizontal_Pod_Autoscaler_Operations#With_CLI|Create a CPU-based horizontal pod autoscaler with CLI]] | ||

* [[Horizontal_Pod_Autoscaler_Operations#With_Metadata|Create a CPU-based horizontal pod autoscaler with Metadata]] | * [[Horizontal_Pod_Autoscaler_Operations#With_Metadata|Create a CPU-based horizontal pod autoscaler with Metadata]] | ||

=Memory-based Scaling= | =Memory-based Scaling= | ||

Memory-based autoscaling is much more problematic than CPU-based autoscaling. The main reason is because after scaling up, the old pods would somehow need to be forced to release memory. This needs to be done by the application itself, it cannot be done by the system. All the system could do is to kill and restart the application, hoping that it would use less memory than before. | Memory-based autoscaling is much more problematic than CPU-based autoscaling. The main reason is because after scaling up, the old pods would somehow need to be forced to release memory. This needs to be done by the application itself, it cannot be done by the system. All the system could do is to kill and restart the application, hoping that it would use less memory than before. | ||

=Custom Metrics-based Scaling= | =Custom Metrics-based Scaling= | ||

Autoscaling solutions based on custom metrics are natively supported by [[Kubernetes_Horizontal_Pod_Autoscaler_Manifest_Version_2_beta_2|v2beta2]] Horizontal Pod Autoscalers. There are two types of custom metrics that can be used: [[#Custom_Pod_Metrics|custom pod metrics]] and [[#Custom_Object_Metrics|custom object metrics]]. In both cases, they are commonly collected with a Prometheus-based metric pipeline and exposed over [[Metrics_in_Kubernetes#Custom_Metrics_API|Custom Metrics API]] by the [[Prometheus_Adapter_for_Kubernetes_Metrics_APIs#Overview|Prometheus adapter for Kubernetes metrics API]]. The Prometheus adapter will act as an [[Kubernetes_Aggregation_Layer#Extension_API_Server|extension API server]], operating at the [[Kubernetes_Aggregation_Layer#Overview|Kubernetes aggregation layer]], so the aggregation layer needs to be enabled in the cluster for the solution to work. | |||

This article describes enabling custom metrics-based autoscaling, step-by-step: {{Internal|Kubernetes Horizontal Custom Metrics Autoscaling Walkthrough|Horizontal Custom Metrics Autoscaling Walkthrough}} | |||

{{External|https:// | |||

==Custom Pod Metrics== | |||

<syntaxhighlight lang='yaml'> | |||

apiVersion: autoscaling/v2beta2 | |||

kind: HorizontalPodAutoscaler | |||

spec: | |||

metrics: | |||

- type: Pods | |||

pods: | |||

metric: | |||

name: packets-per-second | |||

target: | |||

type: AverageValue | |||

averageValue: 1K | |||

</syntaxhighlight> | |||

For more details, see: {{Internal|Metrics_in_Kubernetes#Pod_Metrics|Pod Metrics}} | |||

==Custom Object Metrics== | |||

{{External|https://kubernetes.io/docs/reference/generated/kubernetes-api/v1.19/#objectmetricsource-v2beta2-autoscaling}} | |||

<syntaxhighlight lang='yaml'> | |||

apiVersion: autoscaling/v2beta2 | |||

kind: HorizontalPodAutoscaler | |||

... | |||

spec: | |||

metrics: | |||

metrics: | |||

- type: Object | |||

object: | |||

metric: | |||

name: synthetic_service_metric | |||

describedObject: | |||

kind: Service | |||

# the service does not actually need to exist | |||

name: synthetic-service | |||

target: | |||

type: Value | |||

value: 2000 | |||

</syntaxhighlight> | |||

This causes the autoscaler to invoke: | |||

<syntaxhighlight lang='yaml'> | |||

GET /apis/custom.metrics.k8s.io/v1beta1/namespaces/default/services/synthetic-service/synthetic_service_metric | |||

</syntaxhighlight> | |||

In return, gets something similar to: | |||

<syntaxhighlight lang='json'> | |||

{ | |||

"kind": "MetricValueList", | |||

"apiVersion": "custom.metrics.k8s.io/v1beta1", | |||

"metadata": { | |||

"selfLink": "/apis/custom.metrics.k8s.io/v1beta1/namespaces/default/services/synthetic-service/synthetic_service_metric" | |||

}, | |||

"items": [ | |||

{ | |||

"describedObject": { | |||

"kind": "Service", | |||

"namespace": "default", | |||

"name": "synthetic-service", | |||

"apiVersion": "/v1" | |||

}, | |||

"metricName": "synthetic_service_metric", | |||

"timestamp": "2020-10-15T22:25:36Z", | |||

"value": "1k", | |||

"selector": null | |||

} | |||

] | |||

} | |||

</syntaxhighlight> | |||

For more details, see: {{Internal|Metrics_in_Kubernetes#Object_Metrics|Object Metrics}} | |||

=External Metrics-based Scaling= | |||

{{External|https://kubernetes.io/docs/tasks/run-application/horizontal-pod-autoscale-walkthrough/#autoscaling-on-metrics-not-related-to-kubernetes-objects}} | |||

Autoscaling solutions based on external metrics are natively supported by [[Kubernetes_Horizontal_Pod_Autoscaler_Manifest_Version_2_beta_2|v2beta2]] Horizontal Pod Autoscalers. | |||

<syntaxhighlight lang='yaml'> | |||

apiVersion: autoscaling/v2beta2 | |||

kind: HorizontalPodAutoscaler | |||

spec: | |||

metrics: | |||

- type: External | |||

external: | |||

metric: | |||

name: queue_messages_ready | |||

selector: "queue=worker_tasks" | |||

target: | |||

type: AverageValue | |||

averageValue: 30 | |||

</syntaxhighlight> | |||

For more details, see: {{Internal|Metrics_in_Kubernetes#External_Metrics|External Metrics}} | |||

=Configurable Scaling Behavior= | =Configurable Scaling Behavior= | ||

{{External|https://kubernetes.io/docs/tasks/run-application/horizontal-pod-autoscale/#support-for-configurable-scaling-behavior}} | {{External|https://kubernetes.io/docs/tasks/run-application/horizontal-pod-autoscale/#support-for-configurable-scaling-behavior}} | ||

Scaling behavior can be configured with the HorizontalPodAutoscaler resource "behavior" field. Behaviors are specified separately for scaling up, in "scaleUp" section and scaling down, in "scaleDown" section. A stabilization window can be specified for both directions. | |||

<font color=darkgray> | |||

TODO: | |||

* https://kubernetes.io/docs/tasks/run-application/horizontal-pod-autoscale/#scaling-policies | |||

* https://kubernetes.io/docs/tasks/run-application/horizontal-pod-autoscale/#stabilization-window | |||

* https://kubernetes.io/docs/tasks/run-application/horizontal-pod-autoscale/#default-behavior | |||

</font> | |||

=Horizontal Pod Autoscaler Status Conditions= | |||

{{External|https://kubernetes.io/docs/tasks/run-application/horizontal-pod-autoscale-walkthrough/#appendix-horizontal-pod-autoscaler-status-conditions}} | |||

* AbleToScale | |||

* ScalingActive | |||

* ScalingLimited | |||

=Playground= | =Playground= | ||

| Line 76: | Line 176: | ||

* [[Horizontal_Pod_Autoscaler_Operations#With_CLI|Create a CPU-based horizontal pod autoscaler with CLI]] | * [[Horizontal_Pod_Autoscaler_Operations#With_CLI|Create a CPU-based horizontal pod autoscaler with CLI]] | ||

* [[Horizontal_Pod_Autoscaler_Operations#With_Metadata|Create a CPU-based horizontal pod autoscaler with Metadata]] | * [[Horizontal_Pod_Autoscaler_Operations#With_Metadata|Create a CPU-based horizontal pod autoscaler with Metadata]] | ||

* [[Horizontal_Pod_Autoscaler_Operations#Horizontal_Pod_Autoscaler_Controller_Troubleshooting|Horizontal Pod Autoscaler troubleshooting]] | |||

Latest revision as of 17:23, 23 October 2020

External

- https://kubernetes.io/docs/tasks/run-application/horizontal-pod-autoscale/

- https://kubernetes.io/docs/tasks/run-application/horizontal-pod-autoscale-walkthrough/

- https://github.com/kubernetes/community/blob/master/contributors/design-proposals/instrumentation/custom-metrics-api.md

- https://github.com/kubernetes/community/blob/master/contributors/design-proposals/autoscaling/hpa-v2.md

- https://towardsdatascience.com/kubernetes-hpa-with-custom-metrics-from-prometheus-9ffc201991e

Internal

Overview

One of the basic Kubernetes features is the ability to manually scale pods horizontally, up or down, by increasing or decreasing the desired replica count field on the pod controller. Automatic scaling is built on top of that. Horizontal pod autoscaling is the automatic increase or decrease of the number of pod replicas managed by a higher level controller that supports scaling, such as deployments, replica sets and stateful sets. The autoscaling mechanism aims for a target value for one or more metrics. As the number of pods increases, the average per-pod value of the autoscaled metric should come down. Metrics appropriate for autoscaling are those whose average value decreases linearly as the pod replica count increases.

How it Works

The scaling is performed by a horizontal pod autoscaler controller, which is enabled and configured by a HorizontalPodAutoscaler Kubernetes API resource. For a horizontal pod autoscaler to work correctly, a source of metrics, most commonly resource metrics, must be deployed. In the case of resource metrics, the autoscaler uses the corresponding resource request as a reference, so if some of the containers do not have the relevant resource request set, the autoscaler will not take any action for that metric. This does not apply to custom and external metrics. The simplest source of resource metrics is the metrics server.

The controller periodically reads the appropriate metrics APIs, as specified in the HorizontalPodAutoscaler resource definition, and obtains metrics for the pods it monitors. To be able to access metrics APIs, --horizontal-pod-autoscaler-use-rest-clients must be set to true, the API aggregation layer must be enabled, and the corresponding APIs (resource metrics API, custom metrics API or external metrics API) must be registered. The query cycle period is set with --horizontal-pod-autoscaler-sync-period.

The set of pods to watch is provided by the higher level pod controller the autoscaler is associated with. The autoscaler calculates the number of replicas required to meet the target metric, as described in the Autoscaling Algorithm section, below. If there is a mismatch, the controller adjusts the "replicas" field through the Scale sub-resource of the pod controller. The target pod controller is not aware of the autoscaler. As far as it is concerned, anybody, including the autoscaler, may update the replica count.

Each component involved in autoscaling gets metrics from its source periodically. The end effect is that it takes a while for metrics to be propagated and a rescaling action to be performed.

Autoscaling can be performed for one metric or multiple metrics. If multiple metrics are involved, the controller computes the required number of pods for each metric, and picks the larger value.
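The multi-metric rule can be illustrated with a minimal Python sketch. It assumes each metric proposes a replica count as ceil(currentReplicas * currentValue / targetValue); the function names and the sample readings are hypothetical and are not part of any Kubernetes API.

```python
import math
from typing import Dict, Tuple


def replicas_for_metric(current_replicas: int, current_value: float, target_value: float) -> int:
    """Replica count that a single metric, taken alone, would ask for."""
    return math.ceil(current_replicas * current_value / target_value)


def desired_replicas(current_replicas: int, metrics: Dict[str, Tuple[float, float]]) -> int:
    """Evaluate every metric independently and keep the largest proposal."""
    proposals = [
        replicas_for_metric(current_replicas, current, target)
        for current, target in metrics.values()
    ]
    return max(proposals)


# Hypothetical readings: (current average per pod, target average per pod).
metrics = {
    "cpu_utilization_percent": (90.0, 60.0),  # alone, asks for 6 replicas
    "packets_per_second": (1200.0, 1000.0),   # alone, asks for 5 replicas
}
print(desired_replicas(4, metrics))  # 6
```

Picking the largest proposal means that no metric is left above its target after the rescaling.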

Utilization vs. raw values. If a target utilization value is set, the controller calculates the utilization value as a percentage of the equivalent resource request on the containers in each pod. Utilization values can only be used with resource metrics, because requests can only be set for resources. If a target raw value is set, the raw metric values are used directly. The controller then takes the mean (the arithmetic average) of the utilization or the raw value across all targeted pods, and produces a ratio used to scale the number of desired replicas. If per-pod custom metrics are used in autoscaling, the controller works with raw values, not utilization values.
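A small Python sketch of the two cases, following the description above. The helper names and the sample numbers are hypothetical, and all pods are assumed to have the same CPU request.

```python
from statistics import mean


def mean_utilization_percent(usage_millicores, request_millicores):
    """Mean of per-pod utilization, each expressed as a percentage of the pod's request."""
    return mean(
        100.0 * used / requested
        for used, requested in zip(usage_millicores, request_millicores)
    )


def scaling_ratio(current_mean, target):
    """Ratio used to scale the replica count: above 1 scales up, below 1 scales down."""
    return current_mean / target


# Utilization target: three pods, each requesting 500 millicores, target 50%.
cpu_usage = [300, 500, 400]
cpu_request = [500, 500, 500]
utilization = mean_utilization_percent(cpu_usage, cpu_request)
print(utilization, scaling_ratio(utilization, 50))

# Raw value target: per-pod packets per second, target average of 500.
packets = [900, 1100, 1000]
print(mean(packets), scaling_ratio(mean(packets), 500))
```

In the first case the ratio is computed from percentages, in the second directly from the raw per-pod values.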

For object metrics and external metrics, a single metric is fetched and compared to the target value to produce a ratio. In the autoscaling/v2beta2 API version, the value can optionally be divided by the number of pods before the comparison is made.
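The single-metric case can be sketched in Python as follows. The function and parameter names are hypothetical; the per-pod variant relies on the observation that dividing the value by the replica count and then multiplying the resulting ratio by the replica count simplifies to value / target.

```python
import math


def replicas_for_single_metric(current_replicas, metric_value, target, per_pod_average=False):
    """Replica count for an object or external metric, which yields one value, not one per pod."""
    if per_pod_average:
        # (metric_value / replicas) / target * replicas == metric_value / target
        return math.ceil(metric_value / target)
    ratio = metric_value / target
    return math.ceil(current_replicas * ratio)


# Target type "Value": the metric reads 3000, the target is 2000, 2 replicas are running.
print(replicas_for_single_metric(2, 3000, 2000))  # 3

# Target type "AverageValue": 120 queued messages, target of 30 per pod.
print(replicas_for_single_metric(3, 120, 30, per_pod_average=True))  # 4
```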

Deployments should be preferred for autoscaling instead of ReplicaSets. This way, the desired replica count is preserved across application updates, because a Deployment creates a new ReplicaSet for each version.

Horizontal Pod Autoscaler Controller

The horizontal pod autoscaler controller is part of the cluster's controller manager process. The controller periodically adjusts the number of replicas.

Autoscaling Algorithm

For a specific metric, the goal of the algorithm is to compute the number of replicas that will bring the average value of the metric as close to the target value as possible. The tolerance can be set with --horizontal-pod-autoscaler-tolerance. The input is a set of metrics, one for each pod in case of resource metrics or pod custom metrics, or just one metric for object or external metrics. The output is an integer, which represents the target number of pod replicas.
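The upstream documentation gives the core formula as desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue), skipped when the ratio is within the tolerance of 1.0. Below is a minimal Python sketch of that rule; the function name is hypothetical, and the default tolerance of 0.1 is the documented default of the flag, assumed unchanged here.

```python
import math


def desired_replicas(current_replicas, current_value, target_value, tolerance=0.1):
    """Target replica count for one metric, or the current count if within tolerance."""
    ratio = current_value / target_value
    if abs(ratio - 1.0) <= tolerance:
        # Close enough to the target: no rescaling.
        return current_replicas
    return math.ceil(current_replicas * ratio)


print(desired_replicas(3, 200, 100))  # 6: the metric is twice the target
print(desired_replicas(3, 105, 100))  # 3: within tolerance, no change
print(desired_replicas(4, 50, 100))   # 2: the metric is half the target
```

Rounding up rather than down errs on the side of having slightly more capacity than strictly required.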

The algorithm also needs to make sure that the autoscaler does not thrash around when the metric value is unstable and changes rapidly. One parameter that can be used to control this behavior is --horizontal-pod-autoscaler-downscale-stabilization.
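A sketch of how downscale stabilization can work, assuming the controller remembers recent recommendations and acts on the highest one seen within the window. The class and method names are hypothetical; the 300 second window mirrors the documented five minute default.

```python
from collections import deque


class DownscaleStabilizer:
    """Keeps recent recommendations and returns the highest one within the window."""

    def __init__(self, window_seconds=300.0):
        self.window_seconds = window_seconds
        self._history = deque()  # (timestamp, recommended replica count)

    def stabilize(self, recommendation, now):
        self._history.append((now, recommendation))
        # Drop recommendations that fell out of the window.
        while self._history[0][0] < now - self.window_seconds:
            self._history.popleft()
        return max(replicas for _, replicas in self._history)


stabilizer = DownscaleStabilizer()
print(stabilizer.stabilize(10, now=0))    # 10
print(stabilizer.stabilize(4, now=60))    # 10: the load dropped, but 10 is still in the window
print(stabilizer.stabilize(4, now=240))   # 10
print(stabilizer.stabilize(4, now=301))   # 4: the old recommendation expired
```

A scale-up is not delayed by this scheme, because a higher recommendation immediately becomes the maximum.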

The autoscaler will at most double the number of replicas in a single operation, if more than two current replicas exist. If only one replica exists, there is no such limitation. The autoscaler also has a limit on how soon a subsequent autoscale operation can occur after the previous one. By default, a scale-up will occur only if no rescaling event occurred in the last three minutes and a scale-down is performed every five minutes.

This makes sense if the autoscaler reads metrics from pods and averages the value per pod: it will know how many target pods need to start (or shut down) to reach the target value. How about a custom object metric that is not correlated to pods?

Horizontal Pod Autoscaler Resource

The HorizontalPodAutoscaler Kubernetes API resource is deployed like any other Kubernetes resource, by posting a manifest to the API server. The resource determines the behavior of the controller. The HorizontalPodAutoscaler minReplicas field cannot be set to zero, so the autoscaler will never scale down to zero pods, as idling and un-idling are currently not supported. The resource can be changed after deployment; the changes will be detected and acted upon.
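The effect of the replica bounds can be sketched in Python as below. This is an illustration only: the function name is hypothetical, and it assumes the computed replica count is clamped between the minReplicas and maxReplicas fields of the resource.

```python
def clamp_replicas(desired, min_replicas, max_replicas):
    """Keep the computed replica count within the bounds declared on the resource."""
    if min_replicas < 1:
        raise ValueError("minReplicas cannot be set to zero")
    if max_replicas < min_replicas:
        raise ValueError("maxReplicas must not be lower than minReplicas")
    return max(min_replicas, min(desired, max_replicas))


print(clamp_replicas(0, min_replicas=1, max_replicas=10))   # 1: never scales to zero
print(clamp_replicas(25, min_replicas=1, max_replicas=10))  # 10: capped by maxReplicas
print(clamp_replicas(6, min_replicas=1, max_replicas=10))   # 6: within bounds
```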

HorizontalPodAutoscaler Manifest

CPU-based Scaling

The CPU-based scaling algorithm uses the guaranteed CPU amount (the CPU requests) when determining the CPU utilization of the pod. This means the pod needs to have the CPU requests set, either directly or through a LimitRange object, to be eligible for autoscaling. CPU average utilization is the container's actual CPU usage divided by its CPU request value.

Also see:

- Create a CPU-based horizontal pod autoscaler with CLI

- Create a CPU-based horizontal pod autoscaler with Metadata

Memory-based Scaling

Memory-based autoscaling is much more problematic than CPU-based autoscaling. The main reason is that after scaling up, the old pods would somehow need to be forced to release memory. This needs to be done by the application itself; it cannot be done by the system. All the system could do is kill and restart the application, hoping that it would use less memory than before.

Custom Metrics-based Scaling

Autoscaling solutions based on custom metrics are natively supported by v2beta2 Horizontal Pod Autoscalers. There are two types of custom metrics that can be used: custom pod metrics and custom object metrics. In both cases, they are commonly collected with a Prometheus-based metric pipeline and exposed over Custom Metrics API by the Prometheus adapter for Kubernetes metrics API. The Prometheus adapter will act as an extension API server, operating at the Kubernetes aggregation layer, so the aggregation layer needs to be enabled in the cluster for the solution to work.

This article describes enabling custom metrics-based autoscaling, step-by-step: Horizontal Custom Metrics Autoscaling Walkthrough

Custom Pod Metrics

```yaml
apiVersion: autoscaling/v2beta2
kind: HorizontalPodAutoscaler
spec:
  metrics:
  - type: Pods
    pods:
      metric:
        name: packets-per-second
      target:
        type: AverageValue
        # Kubernetes quantities use a lowercase "k" for thousands
        averageValue: 1k
```

For more details, see: Pod Metrics

Custom Object Metrics

```yaml
apiVersion: autoscaling/v2beta2
kind: HorizontalPodAutoscaler
...
spec:
  metrics:
  - type: Object
    object:
      metric:
        name: synthetic_service_metric
      describedObject:
        kind: Service
        # the service does not actually need to exist
        name: synthetic-service
      target:
        type: Value
        value: 2000
```

This causes the autoscaler to invoke:

```
GET /apis/custom.metrics.k8s.io/v1beta1/namespaces/default/services/synthetic-service/synthetic_service_metric
```

In return, it gets something similar to:

```json
{
  "kind": "MetricValueList",
  "apiVersion": "custom.metrics.k8s.io/v1beta1",
  "metadata": {
    "selfLink": "/apis/custom.metrics.k8s.io/v1beta1/namespaces/default/services/synthetic-service/synthetic_service_metric"
  },
  "items": [
    {
      "describedObject": {
        "kind": "Service",
        "namespace": "default",
        "name": "synthetic-service",
        "apiVersion": "/v1"
      },
      "metricName": "synthetic_service_metric",
      "timestamp": "2020-10-15T22:25:36Z",
      "value": "1k",
      "selector": null
    }
  ]
}
```

For more details, see: Object Metrics
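To show how such a response relates to the target value of 2000 in the manifest, here is a rough Python sketch. The parser is a simplified, hypothetical helper that handles only a few decimal suffixes of the Kubernetes quantity notation; the response is trimmed to the fields used, and the count of 4 running replicas is an assumption.

```python
import json
import math
from decimal import Decimal

# Only a subset of the decimal suffixes; binary suffixes (Ki, Mi, ...) are not handled.
_DECIMAL_SUFFIXES = {
    "m": Decimal("0.001"),
    "k": Decimal(1000),
    "M": Decimal(10) ** 6,
    "G": Decimal(10) ** 9,
}


def parse_quantity(text):
    """Convert a quantity string such as "1k" or "500m" into a Decimal."""
    text = str(text).strip()
    if text and text[-1] in _DECIMAL_SUFFIXES:
        return Decimal(text[:-1]) * _DECIMAL_SUFFIXES[text[-1]]
    return Decimal(text)


response = json.loads('{"items": [{"metricName": "synthetic_service_metric", "value": "1k"}]}')
current = parse_quantity(response["items"][0]["value"])
target = parse_quantity("2000")

ratio = current / target
current_replicas = 4  # assumed number of running replicas
print(ratio, math.ceil(current_replicas * ratio))
```

With a reading of 1k against a target of 2000 the ratio is 0.5, so the sketch proposes halving the replica count.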

External Metrics-based Scaling

Autoscaling solutions based on external metrics are natively supported by v2beta2 Horizontal Pod Autoscalers.

```yaml
apiVersion: autoscaling/v2beta2
kind: HorizontalPodAutoscaler
spec:
  metrics:
  - type: External
    external:
      metric:
        name: queue_messages_ready
        # in v2beta2 the selector is a label selector object, not a string
        selector:
          matchLabels:
            queue: worker_tasks
      target:
        type: AverageValue
        averageValue: 30
```

For more details, see: External Metrics

Configurable Scaling Behavior

Scaling behavior can be configured with the HorizontalPodAutoscaler resource "behavior" field. Behaviors are specified separately for scaling up, in the "scaleUp" section, and for scaling down, in the "scaleDown" section. A stabilization window can be specified for both directions.
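As an illustration of how scaling policies limit a scale-down, here is a Python sketch modeled on the example in the upstream documentation. The policy entries mirror the "type", "value" and "periodSeconds" fields of "behavior.scaleDown.policies"; the function name is hypothetical, and the sketch assumes all policies share the same period, so it ignores "periodSeconds".

```python
import math


def max_scale_down(current_replicas, policies, select_policy="Max"):
    """Largest number of pods that may be removed in one period under the given policies."""
    allowed = []
    for policy in policies:
        if policy["type"] == "Pods":
            allowed.append(policy["value"])
        elif policy["type"] == "Percent":
            # Percentages of the current replica count are rounded up.
            allowed.append(math.ceil(current_replicas * policy["value"] / 100))
        else:
            raise ValueError("unknown policy type: " + policy["type"])
    # "Max" picks the policy allowing the largest change, "Min" the smallest.
    return max(allowed) if select_policy == "Max" else min(allowed)


policies = [
    {"type": "Pods", "value": 4, "periodSeconds": 60},
    {"type": "Percent", "value": 10, "periodSeconds": 60},
]
print(max_scale_down(80, policies))  # 8: 10% of 80 exceeds the 4-pod policy
print(max_scale_down(72, policies))  # 8: 7.2 rounded up
print(max_scale_down(80, policies, select_policy="Min"))  # 4
```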

TODO:

- https://kubernetes.io/docs/tasks/run-application/horizontal-pod-autoscale/#scaling-policies

- https://kubernetes.io/docs/tasks/run-application/horizontal-pod-autoscale/#stabilization-window

- https://kubernetes.io/docs/tasks/run-application/horizontal-pod-autoscale/#default-behavior

Horizontal Pod Autoscaler Status Conditions

- AbleToScale

- ScalingActive

- ScalingLimited