Binary Search Trees: Difference between revisions

| (50 intermediate revisions by the same user not shown) | |||

| Line 1: | Line 1: | ||

=External= | |||

* https://www.coursera.org/learn/algorithms-graphs-data-structures/lecture/juAOg/balanced-search-trees-operations-and-applications | |||

* https://www.coursera.org/learn/algorithms-graphs-data-structures/lecture/1FBl2/binary-search-tree-basics-part-i | |||

* https://www.coursera.org/learn/algorithms-graphs-data-structures/lecture/cXMbA/binary-search-tree-basics-part-ii | |||

=Internal= | =Internal= | ||

* [[Tree_Concepts#Binary_Search_Tree|Tree Concepts]] | * [[Tree_Concepts#Binary_Search_Tree|Tree Concepts]] | ||

* [[Binary Search]] | |||

=Overview= | =Overview= | ||

A binary search tree is a [[Tree_Concepts#Binary_Tree|binary tree]] that has the Binary Search Tree Property. A binary search tree is represented in memory using three pointers: left child, right child and the parent. More details on binary tree representation in memory are available here: {{Internal|Tree_Representation_in_Memory#Binary_Trees|Binary Tree Representation in Memory}} | A binary search tree is a [[Tree_Concepts#Binary_Tree|binary tree]] that has the [[#Binary_Search_Tree_Property|Binary Search Tree Property]]. It supports insertion, deletions and searches and can be thought as the dynamic version of a [[Sorting_Algorithms#Static_Sorted_Array|sorted array]]: any [[Sorting_Algorithms#Static_Sorted_Array_Operations|operation exposed by a static sorted array]] is available, but additionally, we have access to insertion and deletion. | ||

While in general the running time of the insertion, deletion and search operations on a binary search tree is asymptotically bounded by the height of the tree, these operations become efficient when the binary search tree is kept balanced, and thus its height is the minimum possible. [[#Balanced_Binary_Search_Tree|Balanced binary search trees]] are addressed in detail below. | |||

A binary search tree is represented in memory using three pointers: left child, right child and the parent. More details on binary tree representation in memory are available here: {{Internal|Tree_Representation_in_Memory#Binary_Trees|Binary Tree Representation in Memory}} | |||

For a set of keys, there are many search tree corresponding to those keys | |||

=Binary Search Tree Property= | |||

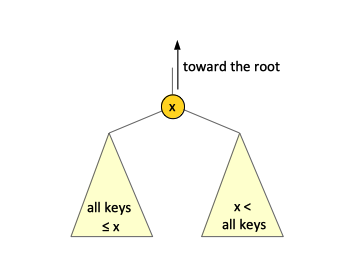

The fundamental property of the binary search tree, called the '''Binary Search Tree Property''': | |||

{{Note|For '''every single node''' of a binary search tree, if the node has a key value, then all of the keys stored in the '''left subtree''' should be less than the node's key value and all of the keys stored in the '''right subtree''' should be bigger than the node's key value. This property holds not only at the root, but in every single node of the three.}} | |||

<center>[[File:Binary_Search_Tree_Property.png|355px]]</center> | |||

The binary search tree property is preserved through [[Search Tree Rotation#Overview|rotations]]. | |||

Note that the Binary Search Tree Property is different from the [[Heap#The_Heap_Property|Heap Property]]. Search tree are designed so we can search easily through them, unlike heaps that are designed to find the minimum (or maximum) easily. | |||

<font color=darkkhaki>TODO [[CLRS]] Page 286.</font> | |||

=Canonical Use= | |||

A '''balanced''' binary search tree supports efficiently the same operations as a static [[Sorting_Algorithms#Static_Sorted_Array|sorted array]], but additionally allows for insertion and deletion. The same operations are exposed by unbalanced binary search trees, but their efficiency degrades in case of degraded binary search tree, so keeping the tree balanced is essential. For more details see [[#Balanced_Binary_Search_Trees|Balanced Binary Search Trees]] below. | |||

=Supported Operations= | |||

The binary search tree support the following operations: | |||

{| class="wikitable" style="text-align: left;" | |||

! Operation | |||

! Running Time | |||

! Notes | |||

|- | |||

| [[Data_Structures#INSERT.28X.29|INSERT(X)]] || Θ(tree_height), Θ(log n) for balanced trees|| [[#INSERT_Implementation|INSERT Implementation]] | |||

|- | |||

| [[Data_Structures#DELETE.28X.29|DELETE(X)]] ||Θ(tree_height), Θ(log n) for balanced trees || [[#DELETE_Implementation|DELETE Implementation]] | |||

|- | |||

| [[Data_Structures#SEARCH.28K.29|SEARCH(K)]] ||Θ(tree_height), Θ(log n) for balanced trees || [[#SEARCH_Implementation|SEARCH Implementation]] | |||

|- | |||

| [[Data_Structures#SELECT|SELECT(i<sup>th</sup> order statistics)]] ||Θ(tree_height), Θ(log n) for balanced trees || [[#SELECT_Implementation|SELECT Implementation]] | |||

|- | |||

| [[Data_Structures#MINIMUM.28.29|MINIMUM()]] ||Θ(tree_height), Θ(log n) for balanced trees || [[#MINIMUM_Implementation|MINIMUM Implementation]] | |||

|- | |||

| [[Data_Structures#MAXIMUM.28.29|MAXIMUM()]] || Θ(tree_height), Θ(log n) for balanced trees || [[#MAXIMUM_Implementation|MAXIMUM Implementation]] | |||

|- | |||

| [[Data_Structures#PREDECESSOR.28X.29|PREDECESSOR(X)]] || Θ(tree_height), Θ(log n) for balanced trees || [[#PREDECESSOR_Implementation|PREDECESSOR Implementation]] | |||

|- | |||

| [[Data_Structures#SUCCESSOR.28X.29|SUCCESSOR(X)]] || Θ(tree_height), Θ(log n) for balanced trees || [[#SUCCESSOR_Implementation|SUCCESSOR Implementation]] | |||

|- | |||

| [[Data_Structures#RANK|RANK(X)]] || Θ(tree_height), Θ(log n) for balanced trees || [[#RANK_Implementation|RANK Implementation]] | |||

|- | |||

|} | |||

==<span id='SEARCH_Implementation'></span><tt>SEARCH</tt>== | |||

The motivation for the search tree property is that searching in a search tree just involves "following your nose", like a [[Binary_Search#Sorted_Arrays|binary search]] in a sorted array. | |||

<font size=-1> | |||

SEARCH(root, X) | |||

</font> | |||

<font size=-1> | |||

SEARCH(n, X) | |||

if X == value(n) return n | |||

if X < value(n) | |||

if there is no left child return NULL | |||

else SEARCH(left_child(n), X) | |||

if X > value(n) | |||

if there is no right child return NULL | |||

else SEARCH(right_child(n), X) | |||

</font> | |||

The running time is Θ(tree_height). | |||

==<span id='INSERT_Implementation'></span><tt>INSERT</tt>== | |||

One implementation, that does not care about keeping the tree balanced, uses the [[#SEARCH_Implementation|recursive search procedure]] described above to keep searching for the key to be inserted until a NULL child pointer is encountered. At that point, the procedure wires a new tree node in that position: | |||

<font size=-1> | |||

INSERT(root, X) | |||

</font> | |||

<font size=-1> | |||

INSERT(n, X) | |||

if X <= value(n) | |||

if there is no left child wire X as left child | |||

else INSERT(left_child(n), X) | |||

if X > value(n) | |||

if there is no right child wire X as right child | |||

else INSERT(right_child(n), X) | |||

</font> | |||

The running time is Θ(tree_height). | |||

==<span id='DELETE_Implementation'></span><tt>DELETE</tt>== | |||

The <code>[[#DELETE|DELETE(X)]]</code> function gets as argument the node to delete. The <code>DELETE(K)</code> function gets as argument the key to delete and in this case we need to locate the node X corresponding to the given key K with <code>[[#SEARCH|SEARCH(K)]]</code>. If no such node exists, nothing is deleted. | |||

To continue, we assume the node X exists. | |||

There are three distinct cases that need to be handled: | |||

1. X has no children. This is the easiest case, the node is just simply deleted from the three. | |||

2. X has just one child - a left child or a right child. In this case, splice out the node to delete, which creates a hole in the tree, and then rewire its child into its parent. The unique child assumes the position of the deleted node. | |||

3. X has both the left and right child. Find the predecessor of the node to be deleted using the <code>[[#PREDECESSOR|PREDECESSOR()]]</code> function. Since X has both left and right subtrees, the predecessor will be the rightmost leaf of its left subtree, as explained in [[#PREDECESSOR_Implementation|PREDECESSOR Implementation]]. Then splice out the node to be deleted and wire the predecessor such found in its place. If the predecessor node has a left child, you may need to wire it into its parent. | |||

==<span id='MINIMUM_Implementation'></span><tt>MINIMUM</tt>== | |||

Search for -∞: Start with the root and follow the left child until the leftmost leaf. The running time is Θ(tree_height). | |||

==<span id='MAXIMUM_Implementation'></span><tt>MAXIMUM</tt>== | |||

Search for +∞: Start with the root and follow the right child until the rightmost leaf. The running time is Θ(tree_height). | |||

==<span id='PREDECESSOR_Implementation'></span><tt>PREDECESSOR</tt>== | |||

Computing the predecessor of a node X in a binary search tree involves finding the node whose key is the next smaller element relative to the X's key. | |||

If the argument to the <code>PREDECESSOR()</code> function is the key itself, the implementation first must search the tree and find the corresponding node. If no node is found, the problem has no solution. | |||

To continue, we assume that the node corresponding to the given key K is found in the tree, and it is X. Computing the predecessor of the key K reduces to two cases: | |||

1. The node X has a left subtree. In this case, the predecessor node is the node with the maximum key in the left subtree, which can be compute with <code>MAXIMUM(left_child(X))</code>, and it is the rightmost leaf. | |||

2. The node X has no left subtree. In this case, the predecessor node is found by walking up in the tree until we find an [[Tree_Concepts#Ancestors_and_Descendants|ancestor]] node with a key smaller than K. It is possible to not find a predecessor, if the node we start with is the minimum in the tree. | |||

==<span id='SUCCESSOR_Implementation'></span><tt>SUCCESSOR</tt>== | |||

<font color=darkkhaki>TODO</font> | |||

==<span id='SELECT_Implementation'></span><tt>SELECT</tt>== | |||

To implement <tt>SELECT</tt> efficiently, we need to [[Data_Structures#Augmenting_a_Data_Structure|augment]] the binary tree structure by storing extra information about the tree itself in each tree node. In this specific case, we maintain a "size" field (size(X)) which contains the number of tree nodes that exist in subtree rooted at X. | |||

<font size=-1> | |||

Start at root X with children Y and Z. | |||

Let a = size(Y) <font color=teal># a = 0 if X has no left child</font> | |||

if a = i - 1 return X's key | |||

if a ≥ i recursively compute i<sup>th</sup> order statistic of the search tree rooted at Y | |||

if a < i - 1 recursively compute (i - a - 1)<sup>th</sup> order statistic of the search tree rooted at Z | |||

</font> | |||

The running time is O(tree_height). | |||

Also see: {{Internal|Selection_Problem#Randomized_Selection|Randomized Selection in an Unsorted Array}} | |||

==<span id='RANK_Implementation'></span><tt>RANK</tt>== | |||

=Balanced vs. Unbalanced Binary Search Trees= | |||

A binary search tree can be unbalanced or [[#Balanced_Binary_Search_Tree|balanced]]. For the same set of key, the search tree can vary in height between log n and n, where n is the number of keys. Balanced trees are more useful for search because they yield better running times, so techniques to keep the tree balanced while inserting or deleting nodes have been developed. | |||

=<span id='Balanced_Binary_Search_Tree'></span>Balanced Binary Search Trees= | |||

The reason to exist for balanced binary search trees is to expose the [[Sorting_Algorithms#Static_Sorted_Array_Operations|same set of operations as a static sorted array]], while allowing dynamic insertion and deletions. Some of the operations won't be as fast as in the case of a static sorted array, though. Unlike generic binary search trees, the balanced binary search trees are guaranteed to stay balanced, which means their height is guaranteed to stay logarithmic, which means all of the operations search trees support will also have a running time logarithmic in the number of keys that they're storing. The height of a binary tree can't be better than the logarithm of its number of keys, so the balanced binary trees have the best performance of all binary trees. | |||

<span id='Computing_Optimal_Binary_Search_Trees'></span>If we know apriori the relative frequency of various search terms, a balanced search tree is not optimal relative to the total number of node traversals. Intuitively, higher in the tree we place the most frequently search terms, the fewer overall node traversals we get. Building '''optimal binary search trees''' can be approached using dynamic programming algorithms: | |||

{{Internal|Optimal Binary Search Trees#Overview|Computing Optimal Binary Search Trees with Dynamic Programming}} | |||

==Primitives== | |||

===Rotation=== | |||

All balanced binary search trees use this primitive to rebalance themselves. | |||

{{Internal|Search Tree Rotation|Search Tree Rotation}} | |||

==Balanced Binary Search Tree Types== | |||

===Red-Black Trees=== | |||

{{Internal|Red-black_Tree#Overview|Red-Black Trees}} | |||

===AVL Trees=== | |||

{{Internal|AVL Trees#Overview|AVL Trees}} | |||

===Splay Trees=== | |||

{{Internal|Splay Trees#Overview|Splay Trees}} | |||

===B-Trees=== | |||

B-trees are [[Tree_Concepts#K-ary_Tree|k-ary]], '''not''' binary, but they are search trees and they are balanced. | |||

{{Internal|B and B+ Trees|B and B+ Trees}} | |||

==Comparison between a Balanced Binary Search Tree and a Heap== | |||

A [[Heap#Overview|heap]] does not do as much as a balanced binary search tree, but what it does, it does very well. The heap is as dynamic as a search tree, in what insertion and deletion is concerned, it implements insertion and deletion in logarithmic time. It also keeps track of the minimum element (or maximum element, but not both). So if only [[Data_Structures#INSERT|INSERT()]], [[Data_Structures#DELETE|DELETE()]] and [[Data_Structures#MINIMUM|MINIMUM()]] (or [[Data_Structures#MAXIMUM|MAXIMUM()]]) are needed, a binary search tree is overkill, the heap implements these better, with smallest constant factors both in space and time. | |||

=Organizatorium= | |||

* https://github.com/jwasham/coding-interview-university#binary-search-trees-bsts | |||

Latest revision as of 20:21, 3 November 2021

External

- https://www.coursera.org/learn/algorithms-graphs-data-structures/lecture/juAOg/balanced-search-trees-operations-and-applications

- https://www.coursera.org/learn/algorithms-graphs-data-structures/lecture/1FBl2/binary-search-tree-basics-part-i

- https://www.coursera.org/learn/algorithms-graphs-data-structures/lecture/cXMbA/binary-search-tree-basics-part-ii

Internal

Overview

A binary search tree is a binary tree that has the Binary Search Tree Property. It supports insertion, deletions and searches and can be thought as the dynamic version of a sorted array: any operation exposed by a static sorted array is available, but additionally, we have access to insertion and deletion.

While in general the running time of the insertion, deletion and search operations on a binary search tree is asymptotically bounded by the height of the tree, these operations become efficient when the binary search tree is kept balanced, and thus its height is the minimum possible. Balanced binary search trees are addressed in detail below.

A binary search tree is represented in memory using three pointers: left child, right child and the parent. More details on binary tree representation in memory are available here:

For a set of keys, there are many search tree corresponding to those keys

Binary Search Tree Property

The fundamental property of the binary search tree, called the Binary Search Tree Property:

For every single node of a binary search tree, if the node has a key value, then all of the keys stored in the left subtree should be less than the node's key value and all of the keys stored in the right subtree should be bigger than the node's key value. This property holds not only at the root, but in every single node of the three.

The binary search tree property is preserved through rotations.

Note that the Binary Search Tree Property is different from the Heap Property. Search tree are designed so we can search easily through them, unlike heaps that are designed to find the minimum (or maximum) easily.

TODO CLRS Page 286.

Canonical Use

A balanced binary search tree supports efficiently the same operations as a static sorted array, but additionally allows for insertion and deletion. The same operations are exposed by unbalanced binary search trees, but their efficiency degrades in case of degraded binary search tree, so keeping the tree balanced is essential. For more details see Balanced Binary Search Trees below.

Supported Operations

The binary search tree support the following operations:

| Operation | Running Time | Notes |

|---|---|---|

| INSERT(X) | Θ(tree_height), Θ(log n) for balanced trees | INSERT Implementation |

| DELETE(X) | Θ(tree_height), Θ(log n) for balanced trees | DELETE Implementation |

| SEARCH(K) | Θ(tree_height), Θ(log n) for balanced trees | SEARCH Implementation |

| SELECT(ith order statistics) | Θ(tree_height), Θ(log n) for balanced trees | SELECT Implementation |

| MINIMUM() | Θ(tree_height), Θ(log n) for balanced trees | MINIMUM Implementation |

| MAXIMUM() | Θ(tree_height), Θ(log n) for balanced trees | MAXIMUM Implementation |

| PREDECESSOR(X) | Θ(tree_height), Θ(log n) for balanced trees | PREDECESSOR Implementation |

| SUCCESSOR(X) | Θ(tree_height), Θ(log n) for balanced trees | SUCCESSOR Implementation |

| RANK(X) | Θ(tree_height), Θ(log n) for balanced trees | RANK Implementation |

SEARCH

The motivation for the search tree property is that searching in a search tree just involves "following your nose", like a binary search in a sorted array.

SEARCH(root, X)

SEARCH(n, X)

if X == value(n) return n

if X < value(n)

if there is no left child return NULL

else SEARCH(left_child(n), X)

if X > value(n)

if there is no right child return NULL

else SEARCH(right_child(n), X)

The running time is Θ(tree_height).

INSERT

One implementation, that does not care about keeping the tree balanced, uses the recursive search procedure described above to keep searching for the key to be inserted until a NULL child pointer is encountered. At that point, the procedure wires a new tree node in that position:

INSERT(root, X)

INSERT(n, X)

if X <= value(n)

if there is no left child wire X as left child

else INSERT(left_child(n), X)

if X > value(n)

if there is no right child wire X as right child

else INSERT(right_child(n), X)

The running time is Θ(tree_height).

DELETE

The DELETE(X) function gets as argument the node to delete. The DELETE(K) function gets as argument the key to delete and in this case we need to locate the node X corresponding to the given key K with SEARCH(K). If no such node exists, nothing is deleted.

To continue, we assume the node X exists.

There are three distinct cases that need to be handled:

1. X has no children. This is the easiest case, the node is just simply deleted from the three.

2. X has just one child - a left child or a right child. In this case, splice out the node to delete, which creates a hole in the tree, and then rewire its child into its parent. The unique child assumes the position of the deleted node.

3. X has both the left and right child. Find the predecessor of the node to be deleted using the PREDECESSOR() function. Since X has both left and right subtrees, the predecessor will be the rightmost leaf of its left subtree, as explained in PREDECESSOR Implementation. Then splice out the node to be deleted and wire the predecessor such found in its place. If the predecessor node has a left child, you may need to wire it into its parent.

MINIMUM

Search for -∞: Start with the root and follow the left child until the leftmost leaf. The running time is Θ(tree_height).

MAXIMUM

Search for +∞: Start with the root and follow the right child until the rightmost leaf. The running time is Θ(tree_height).

PREDECESSOR

Computing the predecessor of a node X in a binary search tree involves finding the node whose key is the next smaller element relative to the X's key.

If the argument to the PREDECESSOR() function is the key itself, the implementation first must search the tree and find the corresponding node. If no node is found, the problem has no solution.

To continue, we assume that the node corresponding to the given key K is found in the tree, and it is X. Computing the predecessor of the key K reduces to two cases:

1. The node X has a left subtree. In this case, the predecessor node is the node with the maximum key in the left subtree, which can be compute with MAXIMUM(left_child(X)), and it is the rightmost leaf.

2. The node X has no left subtree. In this case, the predecessor node is found by walking up in the tree until we find an ancestor node with a key smaller than K. It is possible to not find a predecessor, if the node we start with is the minimum in the tree.

SUCCESSOR

TODO

SELECT

To implement SELECT efficiently, we need to augment the binary tree structure by storing extra information about the tree itself in each tree node. In this specific case, we maintain a "size" field (size(X)) which contains the number of tree nodes that exist in subtree rooted at X.

Start at root X with children Y and Z. Let a = size(Y) # a = 0 if X has no left child if a = i - 1 return X's key if a ≥ i recursively compute ith order statistic of the search tree rooted at Y if a < i - 1 recursively compute (i - a - 1)th order statistic of the search tree rooted at Z

The running time is O(tree_height).

Also see:

RANK

Balanced vs. Unbalanced Binary Search Trees

A binary search tree can be unbalanced or balanced. For the same set of key, the search tree can vary in height between log n and n, where n is the number of keys. Balanced trees are more useful for search because they yield better running times, so techniques to keep the tree balanced while inserting or deleting nodes have been developed.

Balanced Binary Search Trees

The reason to exist for balanced binary search trees is to expose the same set of operations as a static sorted array, while allowing dynamic insertion and deletions. Some of the operations won't be as fast as in the case of a static sorted array, though. Unlike generic binary search trees, the balanced binary search trees are guaranteed to stay balanced, which means their height is guaranteed to stay logarithmic, which means all of the operations search trees support will also have a running time logarithmic in the number of keys that they're storing. The height of a binary tree can't be better than the logarithm of its number of keys, so the balanced binary trees have the best performance of all binary trees.

If we know apriori the relative frequency of various search terms, a balanced search tree is not optimal relative to the total number of node traversals. Intuitively, higher in the tree we place the most frequently search terms, the fewer overall node traversals we get. Building optimal binary search trees can be approached using dynamic programming algorithms:

Primitives

Rotation

All balanced binary search trees use this primitive to rebalance themselves.

Balanced Binary Search Tree Types

Red-Black Trees

AVL Trees

Splay Trees

B-Trees

B-trees are k-ary, not binary, but they are search trees and they are balanced.

Comparison between a Balanced Binary Search Tree and a Heap

A heap does not do as much as a balanced binary search tree, but what it does, it does very well. The heap is as dynamic as a search tree, in what insertion and deletion is concerned, it implements insertion and deletion in logarithmic time. It also keeps track of the minimum element (or maximum element, but not both). So if only INSERT(), DELETE() and MINIMUM() (or MAXIMUM()) are needed, a binary search tree is overkill, the heap implements these better, with smallest constant factors both in space and time.