Concurrent (Parallel) Programming: Difference between revisions

| (116 intermediate revisions by the same user not shown) | |||

| Line 1: | Line 1: | ||

=External= | |||

* http://www.gotw.ca/publications/concurrency-ddj.htm | |||

=Internal= | =Internal= | ||

* [[Programming_Languages_Concepts#Concurrent_.28Parallel.29_Programming|Programming Languages Concepts]] | * [[Programming_Languages_Concepts#Concurrent_.28Parallel.29_Programming|Programming Languages Concepts]] | ||

| Line 7: | Line 9: | ||

=Overview= | =Overview= | ||

Concurrency | Concurrency, or concurrent programming, is the management of multiple tasks that exist, and possibly execute, at the same time. "asynchronous" and "threaded" are other terms that are also used to refer to concurrent programming. The difference between concurrency and parallelism is explained in the [[#Concurrency_vs._Parallelism|Concurrecy vs. Parallelism]] section. | ||

Concurrent programming | Concurrent programming is becoming more and more important as the hardware executing code comes in form of multiple cores that share the system memory. Moore Law stated that the transistor density on the chip doubles every two years. This observation was true for a while, but processor engineering has reached the limits of what is physically possible when it comes to shrinking transistors. The difficulties are mainly related to power consumptions and temperature dissipation, generically known as the “power wall”. | ||

Moore Law | |||

The relationship between dynamic power consumed by a transistor and percentage of time spent switching (α), capacitance (C), clock frequency (F) and voltage differential (V) is given by the following equation: | The relationship between dynamic power consumed by a transistor and percentage of time spent switching (α), capacitance (C), clock frequency (F) and voltage differential (V) is given by the following equation: | ||

| Line 19: | Line 17: | ||

P = αCFV<sup>2</sup> | P = αCFV<sup>2</sup> | ||

Smaller transistors have a lower capacitance, so C goes down as the transistor shrinks. | Smaller transistors have a lower capacitance, so C goes down as the transistor shrinks. Also, Dennard scaling law says that as transistors get smaller, both voltage and current scale downward, so the V<sup>2</sup> term scales down in the equation. For constant α, whose maximum and desired value is 1, the dynamic power consumption equation would allow increasing the clock frequency, hence the performance of the processor, as C and V decrease. | ||

Also, Dennard scaling law says that as transistors get smaller, both voltage and current scale downward, so the | |||

For constant α, whose maximum and desired value is 1, the dynamic power consumption equation would allow increasing the clock frequency, hence the performance of the processor, as C and V decrease. | |||

However, transistors cannot get smaller than a certain size. One of the reasons is that the voltage has to stay above a specific limit, otherwise the noise would become unmanageable. In consequence, the voltage swings cannot get infinitely small. Thinner insulator layers increase static power leakage. Increasing the clock frequency under these circumstances would mean that the dynamic power consumption goes up, the temperature of the circuit goes up, as not sufficient heat can be dissipated fast enough, and the circuit simply melts. | However, transistors cannot get smaller than a certain size. One of the reasons is that the voltage has to stay above a specific limit, otherwise the noise would become unmanageable. In consequence, the voltage swings cannot get infinitely small. Thinner insulator layers increase static power leakage. Increasing the clock frequency under these circumstances would mean that the dynamic power consumption goes up, the temperature of the circuit goes up, as not sufficient heat can be dissipated fast enough, and the circuit simply melts. | ||

| Line 29: | Line 23: | ||

There is another factor limits the overall system's performance even if the processor frequency could be increased further, and that his the Von Neumann bottleneck: the main memory access is orders of magnitude slower than the processor, so even if the processor is fast, it'll idle waiting for memory access. People work around this by onboarding fast caches on processors. | There is another factor limits the overall system's performance even if the processor frequency could be increased further, and that his the Von Neumann bottleneck: the main memory access is orders of magnitude slower than the processor, so even if the processor is fast, it'll idle waiting for memory access. People work around this by onboarding fast caches on processors. | ||

All these factors determined hardware | All these factors determined hardware designers to implement a different scaling strategy: not so much shrinking the transistors in a single but packing more cores per chip. Suddenly, there’s not just one single processor that executes instructions serially, one at a time, but more of them, which can execute instruction in a truly parallel fashion. | ||

This development is what makes concurrent programming very relevant. A core is essentially sequential, so in order to use the system effectively, concurrent programming is necessary. Programs must take advantage of parallel hardware, otherwise the majority of the cores in the processor would idle, so concurrent programming support in the language is very important. Even though there is research going on into parallel compilers, attempts to automatically parallelize a program are not going very well, which makes the programmer's job to decompose the task so it renders itself to parallel execution. | This development is what makes concurrent programming very relevant. A core is essentially sequential, so in order to use the system effectively, concurrent programming is necessary. Programs must take advantage of parallel hardware, otherwise the majority of the cores in the processor would idle, so concurrent programming support in the language is very important. Even though there is research going on into parallel compilers, attempts to automatically parallelize a program are not going very well, which makes the programmer's job to decompose the task so it renders itself to parallel execution. | ||

The performance of a program that executes on multiple core is governed by <span id='Amdahl'></span>[[Amdahl's Law#Overview|Amdahl's Law]], which says that the gains in performance of such a program are bounded by how much of the program must be written in sequential manner. | |||

= | =<span id='Parallel_vs._Concurrent'></span>Concurrency vs. Parallelism= | ||

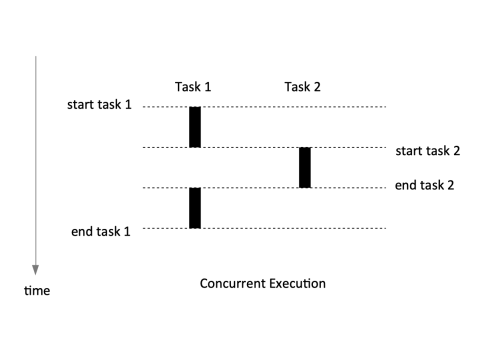

"Concurrent execution" of two tasks means that the tasks execution overlaps in time. This does not necessarily means that both tasks are executing at the same time | "Concurrent execution" of two tasks means that the tasks execution '''overlaps in time'''. This does not necessarily means that both tasks '''are executing at the same time'''. <span id='Concurrency'></span><span id='Concurrent'></span>Concurrency can be achieved with serial hardware, such as a processor that executes one instructions at the time. Concurrency can be of course achieved on parallel hardware too. | ||

:::[[File:Concurrent_Execution.png|500px]] | :::[[File:Concurrent_Execution.png|500px]] | ||

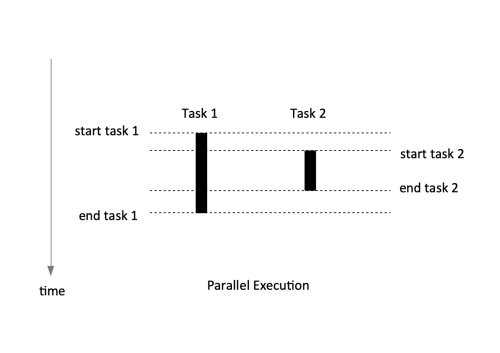

"Parallel execution" means executing two tasks '''at the same time'''. <span id='Parallelism'></span>Parallelism is only achievable with replicated hardware, usually a multi-core processor. Determining which hardware performs which task is called hardware mapping. | "Parallel execution" means '''executing''' two tasks '''at the same time'''. <span id='Parallelism'></span>Parallelism is only achievable with replicated hardware, usually a multi-core processor. Determining which hardware performs which task is called <span id='Hardware_Mapping'></span>'''hardware mapping'''. Hardware mapping is usually not under the control of the programmer. The completion time for parallel execution is usually shorter than for concurrent execution. | ||

:::[[File:Parallel_Execution.png|500px]] | :::[[File:Parallel_Execution.png|500px]] | ||

In concurrent programming, the programmer usually determines which tasks can be executed in parallel, and implements communication and synchronization between tasks, using programming languages primitives and patterns. The programmer does not decide which tasks will be performed in parallel and they don't specify the [[#Hardware_Mapping|hardware mapping]]. That is left to the programming language runtime scheduler and to the O/S. Internally, concurrency is implemented by interleaving instructions from different threads of executions. Note that the interleaving of the instructions happens at machine code level, not at the programming language statement level. | |||

From this perspective, '''concurrency is a property of the code'''. '''Parallelism is a property of the running program''': a concurrent program may or may not run in parallel mode. Programmers do not write parallel code, only concurrent code they hope will be run in parallel. | |||

Concurrency is | Concurrency improves performance even without parallelism. This is because tasks usually wait for some event (over network, for example) and concurrent programming helps to preempt that task and use the processor for other tasks that are ready for compute. Concurrency can help to "hide latency". Also, done correctly, concurrency provides ways to structure the program to make it easy to understand and scalable. | ||

For problems that can be resolved in concurrent manner, it is recommended to write the application so that it can '''scale horizontally''', which means running instances of the program, or subroutines, on more cores in parallel. If done correctly, this increases resource utilization and the performance of the program. | |||

= | |||

=Atomicity= | |||

When something is considered atomic, or has the property of '''atomicity''', this means that within the <span id='Concurrent_Context'></span>'''context''' that it is operating, it is indivisible or uninterruptible. These terms mean that within the defined context, something that is atomic will happen in its entirety without anything else happening in that context simultaneously. | |||

The context is important. Something that can be atomic in the context of a thread may not be atomic in the context of a process, which is executed on multiple threads. In other words, the atomicity of an operation ca change depending on the currently defined scope. Context examples: process, O/S thread, machine, data center. | |||

Combining atomic operations does not necessarily produce a larger atomic operation. If something is atomic, it is implicitly safe within concurrent contexts. Programming languages provide atomicity primitives, such as [[#Atomic_Data_Types|atomic data types]], or [[#Memory_Access_Synchronization_Primitives|atomic access to memory]], where only one thread is guaranteed exclusive access to shared data. | |||

=Critical Section= | |||

A '''critical section''' is a section of a program that needs '''exclusive''' access to a shared resource. Exclusive access is also referred to as <span id='Synchronized_Access'></span>'''synchronized''' access. [[#Mutual_Exclusion_(Mutex)_Locks|Mutual exclusion locks]] can be used to programmatically guard access to a program's critical sections. Since it is somewhat expensive to enter and exit a critical section if locking is used, people attempt to minimize the time spent in critical section. See [[#Memory_Access_Synchronization_Primitives|Memory Access Synchronization Primitives]] section below for a discussion on the tradeoffs. | |||

=<span id='Situations_that_Make_Concurrent_Programming_Hard'></span>Concurrent Programming is Hard= | |||

Writing concurrent code is hard, because it is difficult to maintain a mental model of the state of the program at any particular time. The problems a programmer typically runs into while writing concurrent code belong to several classes of problems: [[#Race_Conditions|race conditions]], [[#Deadlock|deadlocks]], [[#Livelock|livelocks]] and [[#Starvation|starvation]]. | |||

==Race Condition== | ==Race Condition== | ||

A race condition is a | A race condition is a class of concurrency problems that are caused by non-deterministic interleavings of machine code executed in different threads. The usually non-deterministic order in which the threads and instructions within a thread are scheduled for execution leads to different, unwelcome results of the program for subsequent executions. In other words, the outcome of the program depends on the interleaving, or scheduling details. The race conditions that involve access to shared data are called [[#Data_Race|data races]], but data races are not the only existing race conditions. | ||

Races are introduced because developers are thinking about the problem sequentially: they assume that because a line of code falls before another in the text of the program, that line will be executed first. This assumption true if those lines of code are executed on the same thread, but false if they are executed on different threads. In those situation, a helpful technique is to imagine that large amounts of time pass between different operations, and think about how the program would behave if those operations occur in different order. One naive approach to address race conditions is to sprinkle sleep statements throughout the code, in an attempt to "break the race". This does not solve the problem, it just modifies the timing. The program does not become '''logical correct''', and the downside is that this approach usually degrades the performance. | |||

Race conditions are an insidious type of concurrency bug, because they may not show up until years after the code has been placed in production. They are usually precipitated by an environmental change that modifies the execution timing. | |||

===Data Race=== | |||

Most of the time, race condition occur due to the fact that the threads share data. A very common example is a concurrent operation attempts to read a variable while at some undetermined time another concurrent operation is attempting to write the same variable. This is called a '''data race'''. If threads shared no data, then different interleavings should have no effect on the effect of the instructions on their particular threads. | |||

==Deadlock== | ==Deadlock== | ||

A deadlock is an abnormal condition of a program that appears when some or all threads are waiting on one another, due to circular dependencies on exclusively held shared resources. In this situation, the program will never recover without external intervention. | |||

===Coffman Deadlock Conditions=== | |||

Edgar Coffman published the [https://dl.acm.org/doi/10.1145/356586.356588 System Deadlocks] paper in 1971, which enumerates four conditions that must be present for a deadlock to arise: | |||

# Mutual Exclusion: A concurrent process holds exclusive access to a resource at any one time. | |||

# Wait for Condition: A concurrent process must simultaneously hold a resource and be waiting for an additional resource. | |||

# No Preemption: A resource held by a concurrent process can only be released by that process. | |||

# Circular Wait: A concurrent process P1 must be waiting on a chain of other concurrent processes (P2), which are in turn waiting on it (P1). | |||

To prevent deadlocks, ensure that at least one of these conditions is not true. | |||

==Livelock== | |||

A lovelock is a condition occurring in a concurrent program that is actively performing concurrent operations, but these operations do nothing to move the state of the program forward. This analogy gives an example of a lovelock: you are on the hallway moving towards another person. She moves to one side to let you pass, but you've just done the same. So you move to the other side, but she's also done the same. This goes on forever. | |||

Livelocks are more difficult to spot than deadlocks because it can appear as if the program is doing work. There's CPU utilization, and there is activity in the logs. | |||

==Starvation== | ==Starvation== | ||

Starvation is any situation where a concurrent process cannot get all the resources in needs to perform work. [[#Livelock|Livelocks]] are a special case of starvation because in a lovelock, all the concurrent processes are starved equally and no work is accomplished. More broadly, starvation usually implies that there are one or more greedy concurrent processes that are unfairly preventing one or more concurrent processes from accomplishing work as efficiently as possible, or maybe at all. Starvation is usually detected by analyzing metrics that reflect the amount of work performed. | |||

=<span id='Programming_Models'></span>Concurrency Programming Models= | =<span id='Programming_Models'></span>Concurrency Programming Models= | ||

The majority of languages address concurrency by providing a representation of O/S threads or green threads at language level, and exposing [[#Memory_Access_Synchronization_Primitives|memory access synchronization primitives]] to protect shared data in presence of concurrent access. An alternative model is based on Communicating Sequential Processes (CSP), a model introduced by A. Hoare in the "Communicating Sequential Processes" 1978 ACM paper. This concurrency programming model is centered around passing data between independent sequential processes. | |||

==<span id='Synchronization'></span>Memory Access Synchronization Primitives== | |||

'''Synchronization''' is the opposite of [[#Concurrency|concurrency]]. The advantages of [[#Concurrency|concurrency]] and [[#Parallelism|parallelism]] is that the instruction interleavings are arbitrary, and that gives the scheduler options that lead to efficient utilization of the processor, which ultimately speeds up the execution of the code. With synchronization, we restrict the scheduler's options and we force scheduling to happen in a certain way. Every time we use synchronization, we are reducing the efficiency, the amount of possible concurrency. Also, using synchronization does not automatically solve [[#Race_Condition|race conditions]]. However, there are cases when synchronization is necessary, when certain things in different threads of execution have to happen in a certain order. | |||

Memory access synchronization is expensive, so it may look that is advantageous to broaden your lock beyond the [[#Critical_Section|critical section]]. On the other hand, by doing so we run the risk of starving other concurrent processes, by preventing access to resources that might be otherwise available to them. If you use memory access synchronization, you will have to find a balance between preferring coarse-grained synchronization for performance, and fine-grained synchronization for fairness. You should start by constraining memory access synchronization only to critical sections; if the synchronization becomes a performance problem, you can always broaden the scope. It is much more difficult to go the other way. | |||

===<span id='Mutual_Exclusion'></span>Mutual Exclusion (Mutex) Locks=== | |||

==<span id=' | <span id='Concurrency_and_Locking'></span>One way to approach concurrent updates of shared state in concurrent programming is to use mutual exclusion locks. Mutual exclusion locks are programming primitives that ensure [[#Synchronized_Access|exclusive (synchronized) access]] to memory for the thread that holds the lock. The locks must be acquired and released. Go has <code>[[Go_Mutex_and_RWMutex#Mutex|sync.Mutex]]</code> and <code>[[Go_Mutex_and_RWMutex#RWMutex|sync.RWMutex]]</code>. | ||

Locks are problematic because they rely of the capacity of the programer to correctly identify [[#Critical_Section|critical sections]] that require concurrent access protection. As the complexity of the program increases, the reliance on this capacity may become problematic. Another problem that comes with using synchronized access with locks is that programmers rely on a convention for declaring they need exclusive access to memory. Conventions are easy to ignore. Locks negatively impact the performance of the program, as described in the [[#Memory_Access_Synchronization_Primitives|Memory Access Synchronization Primitives]] section. An alternative approach is to use communication, either in the context of [[#Communicating_Sequential_Processes_(CSP)|communicating sequential processes]] or [[Actor_Model#Overview|actor model]]. | |||

===Semaphore=== | ===Semaphore=== | ||

====Binary Semaphore==== | ====Binary Semaphore==== | ||

====Counting Semaphore==== | ====Counting Semaphore==== | ||

The Go implementation of a counting semaphore is <code>[[Go_WaitGroup#Overview|sync.WaitGroup]]</code>. | |||

==<span id='Communication'></span>Communicating Sequential Processes (CSP)== | ==<span id='Communication'></span>Communicating Sequential Processes (CSP)== | ||

Communicating Sequential Processes (CSP) is a theoretical concurrency model introduced by | Communicating Sequential Processes (CSP) is a theoretical concurrency model introduced by Anthony Hoare in the "[https://kb.novaordis.com/index.php/Concurrent_(Parallel)_Programming#Counting_Semaphore Communicating Sequential Processes]" 1978 ACM paper. Over the next six years, the idea of CSP was refined into a formal representation of something called process calculus. Process calculus is a way to mathematically model concurrent systems and provide algebraic laws to perform transformations on these systems to analyze their various properties, like efficiency and correctness. In this context, processes can also be thought of as "functions". | ||

Communicating sequential processes provide "synchronization via communicating", as opposite to synchronization with [[#Memory_Access_Synchronization_Primitives|memory access synchronization primitives]]. | |||

==Immutable Data== | ==Immutable Data== | ||

== | Immutable data is implicitly concurrent-safe. Each concurrent process may operate on the same data, but it may not modify it. If it wants to change the data, it must create a new copy of the data with the desired modifications. This programming model may be achieved by wiring code that utilizes copies of values instead of pointers to values in memory. | ||

==<span id='Data_Protected_by_Confinement'></span>Confinement== | |||

Confinement is a programming model that ensures information is only ever available from one concurrent process. When this is achieved, a concurrent program is implicitly safe and no synchronization is needed. There are two types of confinement: ad-hoc and lexical. Ad-hoc confinement is when you achieve confinement through a convention. However, sticking to a convention is difficult unless you have tools to perform static analysis on the code every time someone commits new code. Lexical confinement involves using lexical scope to expose only the correct data and concurrency primitives for multiple concurrent processes to use. | |||

=Commenting Concurrent Code= | |||

When writing concurrent functions and methods, it is helpful to document in the function's documentation the following: | |||

* Who is responsible for concurrency (does the function internally start multiple threads, or it is expecting to be called on multiple threads)? | |||

* How is the problem space mapped onto concurrency primitives? | |||

* Who is responsible for synchronization. | |||

=O/S Level Concurrency= | |||

==Process== | |||

A process is an instance of a running program. Each process has a context: its own memory, within an address space. It has code, stack, heap, registers, program counter, stack pointer, data registers. It may share libraries with other processes. | |||

===Process Context Switch=== | |||

When the operating system switches from one process to another, that is called a context switch. The process context must be swapped: saved and replaced with another's. | |||

==<span id='Thread'></span><span id='Threads'></span>O/S Threads== | |||

An O/S thread is similar conceptually with a process, but it has less context, and it shares some of its context with other threads in the process. A process can have multiple threads inside it. A thread's context includes unique stacks, data registers, code. The context that is shared between threads include the virtual memory, the file descriptors. | |||

Threads should be mostly independent of each other. The whole reasons there are different threads is because you feel that the tasks executed by the threads can be executed concurrently at the same time. However, they're not completely independent, they're part of the same process and they share information - heap memory space among other things. When threads communicate inappropriately, it is when [[#Race_Condition|race conditions]] occur. | |||

===Thread Context Switch=== | |||

When the operating system switches from one thread to another, that is called a thread context switch. Upon a context switch, the O/S must save register values, lookup tables and memory maps for the thread being preempted, and load the equivalent data for the thread being activated. | |||

===Green Threads=== | |||

Green threads are threads managed by a language's runtime. | |||

=Concurrency in Programming Languages= | =Concurrency in Programming Languages= | ||

== | ==<span id='Concurrency_in_Go'></span>Go== | ||

{{Internal|Go Concurrency#Overview|Go Concurrency}} | {{Internal|Go Concurrency#Overview|Go Concurrency}} | ||

== | ==<span id='Concurrency_in_Python'></span>Python== | ||

{{Internal|Python Threads and Concurrency|Python Threads and Concurrency}} | {{Internal|Python Threads and Concurrency|Python Threads and Concurrency}} | ||

Latest revision as of 01:52, 24 January 2024

External

Internal

- Programming Languages Concepts

- Programming Languages Concepts

- Actor Model

- Concurrency in Go

- Amdahl's Law

Overview

Concurrency, or concurrent programming, is the management of multiple tasks that exist, and possibly execute, at the same time. "asynchronous" and "threaded" are other terms that are also used to refer to concurrent programming. The difference between concurrency and parallelism is explained in the Concurrecy vs. Parallelism section.

Concurrent programming is becoming more and more important as the hardware executing code comes in form of multiple cores that share the system memory. Moore Law stated that the transistor density on the chip doubles every two years. This observation was true for a while, but processor engineering has reached the limits of what is physically possible when it comes to shrinking transistors. The difficulties are mainly related to power consumptions and temperature dissipation, generically known as the “power wall”.

The relationship between dynamic power consumed by a transistor and percentage of time spent switching (α), capacitance (C), clock frequency (F) and voltage differential (V) is given by the following equation:

P = αCFV2

Smaller transistors have a lower capacitance, so C goes down as the transistor shrinks. Also, Dennard scaling law says that as transistors get smaller, both voltage and current scale downward, so the V2 term scales down in the equation. For constant α, whose maximum and desired value is 1, the dynamic power consumption equation would allow increasing the clock frequency, hence the performance of the processor, as C and V decrease.

However, transistors cannot get smaller than a certain size. One of the reasons is that the voltage has to stay above a specific limit, otherwise the noise would become unmanageable. In consequence, the voltage swings cannot get infinitely small. Thinner insulator layers increase static power leakage. Increasing the clock frequency under these circumstances would mean that the dynamic power consumption goes up, the temperature of the circuit goes up, as not sufficient heat can be dissipated fast enough, and the circuit simply melts.

There is another factor limits the overall system's performance even if the processor frequency could be increased further, and that his the Von Neumann bottleneck: the main memory access is orders of magnitude slower than the processor, so even if the processor is fast, it'll idle waiting for memory access. People work around this by onboarding fast caches on processors.

All these factors determined hardware designers to implement a different scaling strategy: not so much shrinking the transistors in a single but packing more cores per chip. Suddenly, there’s not just one single processor that executes instructions serially, one at a time, but more of them, which can execute instruction in a truly parallel fashion.

This development is what makes concurrent programming very relevant. A core is essentially sequential, so in order to use the system effectively, concurrent programming is necessary. Programs must take advantage of parallel hardware, otherwise the majority of the cores in the processor would idle, so concurrent programming support in the language is very important. Even though there is research going on into parallel compilers, attempts to automatically parallelize a program are not going very well, which makes the programmer's job to decompose the task so it renders itself to parallel execution.

The performance of a program that executes on multiple core is governed by Amdahl's Law, which says that the gains in performance of such a program are bounded by how much of the program must be written in sequential manner.

Concurrency vs. Parallelism

"Concurrent execution" of two tasks means that the tasks execution overlaps in time. This does not necessarily means that both tasks are executing at the same time. Concurrency can be achieved with serial hardware, such as a processor that executes one instructions at the time. Concurrency can be of course achieved on parallel hardware too.

"Parallel execution" means executing two tasks at the same time. Parallelism is only achievable with replicated hardware, usually a multi-core processor. Determining which hardware performs which task is called hardware mapping. Hardware mapping is usually not under the control of the programmer. The completion time for parallel execution is usually shorter than for concurrent execution.

In concurrent programming, the programmer usually determines which tasks can be executed in parallel, and implements communication and synchronization between tasks, using programming languages primitives and patterns. The programmer does not decide which tasks will be performed in parallel and they don't specify the hardware mapping. That is left to the programming language runtime scheduler and to the O/S. Internally, concurrency is implemented by interleaving instructions from different threads of executions. Note that the interleaving of the instructions happens at machine code level, not at the programming language statement level.

From this perspective, concurrency is a property of the code. Parallelism is a property of the running program: a concurrent program may or may not run in parallel mode. Programmers do not write parallel code, only concurrent code they hope will be run in parallel.

Concurrency improves performance even without parallelism. This is because tasks usually wait for some event (over network, for example) and concurrent programming helps to preempt that task and use the processor for other tasks that are ready for compute. Concurrency can help to "hide latency". Also, done correctly, concurrency provides ways to structure the program to make it easy to understand and scalable.

For problems that can be resolved in concurrent manner, it is recommended to write the application so that it can scale horizontally, which means running instances of the program, or subroutines, on more cores in parallel. If done correctly, this increases resource utilization and the performance of the program.

Atomicity

When something is considered atomic, or has the property of atomicity, this means that within the context that it is operating, it is indivisible or uninterruptible. These terms mean that within the defined context, something that is atomic will happen in its entirety without anything else happening in that context simultaneously.

The context is important. Something that can be atomic in the context of a thread may not be atomic in the context of a process, which is executed on multiple threads. In other words, the atomicity of an operation ca change depending on the currently defined scope. Context examples: process, O/S thread, machine, data center.

Combining atomic operations does not necessarily produce a larger atomic operation. If something is atomic, it is implicitly safe within concurrent contexts. Programming languages provide atomicity primitives, such as atomic data types, or atomic access to memory, where only one thread is guaranteed exclusive access to shared data.

Critical Section

A critical section is a section of a program that needs exclusive access to a shared resource. Exclusive access is also referred to as synchronized access. Mutual exclusion locks can be used to programmatically guard access to a program's critical sections. Since it is somewhat expensive to enter and exit a critical section if locking is used, people attempt to minimize the time spent in critical section. See Memory Access Synchronization Primitives section below for a discussion on the tradeoffs.

Concurrent Programming is Hard

Writing concurrent code is hard, because it is difficult to maintain a mental model of the state of the program at any particular time. The problems a programmer typically runs into while writing concurrent code belong to several classes of problems: race conditions, deadlocks, livelocks and starvation.

Race Condition

A race condition is a class of concurrency problems that are caused by non-deterministic interleavings of machine code executed in different threads. The usually non-deterministic order in which the threads and instructions within a thread are scheduled for execution leads to different, unwelcome results of the program for subsequent executions. In other words, the outcome of the program depends on the interleaving, or scheduling details. The race conditions that involve access to shared data are called data races, but data races are not the only existing race conditions.

Races are introduced because developers are thinking about the problem sequentially: they assume that because a line of code falls before another in the text of the program, that line will be executed first. This assumption true if those lines of code are executed on the same thread, but false if they are executed on different threads. In those situation, a helpful technique is to imagine that large amounts of time pass between different operations, and think about how the program would behave if those operations occur in different order. One naive approach to address race conditions is to sprinkle sleep statements throughout the code, in an attempt to "break the race". This does not solve the problem, it just modifies the timing. The program does not become logical correct, and the downside is that this approach usually degrades the performance.

Race conditions are an insidious type of concurrency bug, because they may not show up until years after the code has been placed in production. They are usually precipitated by an environmental change that modifies the execution timing.

Data Race

Most of the time, race condition occur due to the fact that the threads share data. A very common example is a concurrent operation attempts to read a variable while at some undetermined time another concurrent operation is attempting to write the same variable. This is called a data race. If threads shared no data, then different interleavings should have no effect on the effect of the instructions on their particular threads.

Deadlock

A deadlock is an abnormal condition of a program that appears when some or all threads are waiting on one another, due to circular dependencies on exclusively held shared resources. In this situation, the program will never recover without external intervention.

Coffman Deadlock Conditions

Edgar Coffman published the System Deadlocks paper in 1971, which enumerates four conditions that must be present for a deadlock to arise:

- Mutual Exclusion: A concurrent process holds exclusive access to a resource at any one time.

- Wait for Condition: A concurrent process must simultaneously hold a resource and be waiting for an additional resource.

- No Preemption: A resource held by a concurrent process can only be released by that process.

- Circular Wait: A concurrent process P1 must be waiting on a chain of other concurrent processes (P2), which are in turn waiting on it (P1).

To prevent deadlocks, ensure that at least one of these conditions is not true.

Livelock

A lovelock is a condition occurring in a concurrent program that is actively performing concurrent operations, but these operations do nothing to move the state of the program forward. This analogy gives an example of a lovelock: you are on the hallway moving towards another person. She moves to one side to let you pass, but you've just done the same. So you move to the other side, but she's also done the same. This goes on forever.

Livelocks are more difficult to spot than deadlocks because it can appear as if the program is doing work. There's CPU utilization, and there is activity in the logs.

Starvation

Starvation is any situation where a concurrent process cannot get all the resources in needs to perform work. Livelocks are a special case of starvation because in a lovelock, all the concurrent processes are starved equally and no work is accomplished. More broadly, starvation usually implies that there are one or more greedy concurrent processes that are unfairly preventing one or more concurrent processes from accomplishing work as efficiently as possible, or maybe at all. Starvation is usually detected by analyzing metrics that reflect the amount of work performed.

Concurrency Programming Models

The majority of languages address concurrency by providing a representation of O/S threads or green threads at language level, and exposing memory access synchronization primitives to protect shared data in presence of concurrent access. An alternative model is based on Communicating Sequential Processes (CSP), a model introduced by A. Hoare in the "Communicating Sequential Processes" 1978 ACM paper. This concurrency programming model is centered around passing data between independent sequential processes.

Memory Access Synchronization Primitives

Synchronization is the opposite of concurrency. The advantages of concurrency and parallelism is that the instruction interleavings are arbitrary, and that gives the scheduler options that lead to efficient utilization of the processor, which ultimately speeds up the execution of the code. With synchronization, we restrict the scheduler's options and we force scheduling to happen in a certain way. Every time we use synchronization, we are reducing the efficiency, the amount of possible concurrency. Also, using synchronization does not automatically solve race conditions. However, there are cases when synchronization is necessary, when certain things in different threads of execution have to happen in a certain order.

Memory access synchronization is expensive, so it may look that is advantageous to broaden your lock beyond the critical section. On the other hand, by doing so we run the risk of starving other concurrent processes, by preventing access to resources that might be otherwise available to them. If you use memory access synchronization, you will have to find a balance between preferring coarse-grained synchronization for performance, and fine-grained synchronization for fairness. You should start by constraining memory access synchronization only to critical sections; if the synchronization becomes a performance problem, you can always broaden the scope. It is much more difficult to go the other way.

Mutual Exclusion (Mutex) Locks

One way to approach concurrent updates of shared state in concurrent programming is to use mutual exclusion locks. Mutual exclusion locks are programming primitives that ensure exclusive (synchronized) access to memory for the thread that holds the lock. The locks must be acquired and released. Go has sync.Mutex and sync.RWMutex.

Locks are problematic because they rely of the capacity of the programer to correctly identify critical sections that require concurrent access protection. As the complexity of the program increases, the reliance on this capacity may become problematic. Another problem that comes with using synchronized access with locks is that programmers rely on a convention for declaring they need exclusive access to memory. Conventions are easy to ignore. Locks negatively impact the performance of the program, as described in the Memory Access Synchronization Primitives section. An alternative approach is to use communication, either in the context of communicating sequential processes or actor model.

Semaphore

Binary Semaphore

Counting Semaphore

The Go implementation of a counting semaphore is sync.WaitGroup.

Communicating Sequential Processes (CSP)

Communicating Sequential Processes (CSP) is a theoretical concurrency model introduced by Anthony Hoare in the "Communicating Sequential Processes" 1978 ACM paper. Over the next six years, the idea of CSP was refined into a formal representation of something called process calculus. Process calculus is a way to mathematically model concurrent systems and provide algebraic laws to perform transformations on these systems to analyze their various properties, like efficiency and correctness. In this context, processes can also be thought of as "functions".

Communicating sequential processes provide "synchronization via communicating", as opposite to synchronization with memory access synchronization primitives.

Immutable Data

Immutable data is implicitly concurrent-safe. Each concurrent process may operate on the same data, but it may not modify it. If it wants to change the data, it must create a new copy of the data with the desired modifications. This programming model may be achieved by wiring code that utilizes copies of values instead of pointers to values in memory.

Confinement

Confinement is a programming model that ensures information is only ever available from one concurrent process. When this is achieved, a concurrent program is implicitly safe and no synchronization is needed. There are two types of confinement: ad-hoc and lexical. Ad-hoc confinement is when you achieve confinement through a convention. However, sticking to a convention is difficult unless you have tools to perform static analysis on the code every time someone commits new code. Lexical confinement involves using lexical scope to expose only the correct data and concurrency primitives for multiple concurrent processes to use.

Commenting Concurrent Code

When writing concurrent functions and methods, it is helpful to document in the function's documentation the following:

- Who is responsible for concurrency (does the function internally start multiple threads, or it is expecting to be called on multiple threads)?

- How is the problem space mapped onto concurrency primitives?

- Who is responsible for synchronization.

O/S Level Concurrency

Process

A process is an instance of a running program. Each process has a context: its own memory, within an address space. It has code, stack, heap, registers, program counter, stack pointer, data registers. It may share libraries with other processes.

Process Context Switch

When the operating system switches from one process to another, that is called a context switch. The process context must be swapped: saved and replaced with another's.

O/S Threads

An O/S thread is similar conceptually with a process, but it has less context, and it shares some of its context with other threads in the process. A process can have multiple threads inside it. A thread's context includes unique stacks, data registers, code. The context that is shared between threads include the virtual memory, the file descriptors.

Threads should be mostly independent of each other. The whole reasons there are different threads is because you feel that the tasks executed by the threads can be executed concurrently at the same time. However, they're not completely independent, they're part of the same process and they share information - heap memory space among other things. When threads communicate inappropriately, it is when race conditions occur.

Thread Context Switch

When the operating system switches from one thread to another, that is called a thread context switch. Upon a context switch, the O/S must save register values, lookup tables and memory maps for the thread being preempted, and load the equivalent data for the thread being activated.

Green Threads

Green threads are threads managed by a language's runtime.