Jenkins Pipeline Syntax: Difference between revisions


* [[Jenkins_Concepts#Pipeline|Jenkins Concepts]]

* [[Writing a Jenkins Pipeline]]

* [[Jenkins Simple Pipeline Configuration|Simple Pipeline Configuration]]

=Scripted Pipeline=

{{External|https://www.jenkins.io/doc/book/pipeline/syntax/#scripted-pipeline}} | |||

Scripted Pipeline is the classical way of declaring a Jenkins Pipeline, preceding [[#Declarative_Pipeline|Declarative Pipeline]]. Unlike the Declarative Pipeline, the Scripted Pipeline is a general-purpose DSL built with [[Groovy]]. The pipelines are declared in [[Jenkins_Concepts#Jenkinsfile|Jenkinsfile]]s and executed from the top of the Jenkinsfile downwards, like most traditional scripts in Groovy. Groovy syntax is available directly in the Scripted Pipeline declaration. The flow control can be declared with <code>if</code>/<code>else</code> conditionals or via Groovy's exception handling support with <code>try</code>/<code>catch</code>/<code>finally</code>.

The simplest pipeline declaration: | |||

<syntaxhighlight lang='groovy'>

echo 'pipeline started' | |||

</syntaxhighlight> | |||

A more complex one: | |||

<syntaxhighlight lang='groovy'> | |||

node('some-worker-label') { | |||

echo 'Pipeline logic starts' | |||

stage('Build') { | |||

if (env.BRANCH_NAME == 'master') { | |||

echo 'this is only executed on master' | |||

} | |||

else { | |||

echo 'this is executed elsewhere' | |||

} | |||

} | |||

stage('Test') { | |||

// ... | |||

} | |||

stage('Deploy') { | |||

// ... | |||

} | |||

stage('Example') { | |||

try { | |||

sh 'exit 1' | |||

} | |||

catch(ex) { | |||

echo 'something failed' | |||

throw ex // rethrow the caught exception so the build still fails

} | |||

} | |||

} | |||

</syntaxhighlight>

The basic building block of the Scripted Pipeline syntax is the step. The Scripted Pipeline does not introduce any steps that are specific to its syntax. The generic pipeline steps, such as [[#node|node]], [[#stage|stage]], [[#parallel|parallel]], etc. are available here: [[#Pipeline_Steps|Pipeline Steps]].

==Scripted Pipeline at Runtime== | |||

When the Jenkins server starts to execute the pipeline, it pulls the Jenkinsfile either [[Jenkins_Simple_Pipeline_Configuration#Pipeline_Script_from_SCM|from a repository]], following a checkout sequence similar to the one shown [[Jenkins_Simple_Pipeline_Configuration#Checkout_Sequence|here]], or from the pipeline configuration, if it is specified in-line. Then the Jenkins instance instantiates a WorkflowScript ([https://github.com/jenkinsci/workflow-cps-plugin/blob/master/src/main/java/org/jenkinsci/plugins/workflow/cps/CpsScript.java org.jenkinsci.plugins.workflow.cps.CpsScript.java]) instance. The "script" instance can be used to access the following state elements: | |||

* <span id='Pipeline_Parameters'></span>[[Jenkins_Pipeline_Parameters#Overview|pipeline parameters]], with <code>this.params</code>, which is a Map. | |||
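As a minimal sketch of reading a parameter through the script instance, assuming a hypothetical string parameter named <code>TARGET_ENV</code> has been declared for the job (the parameter name and the fallback value are illustrative, they do not come from this page):

<syntaxhighlight lang='groovy'>
// 'TARGET_ENV' is a hypothetical parameter name, used only for illustration.
// params behaves like a Map, so a missing key yields null and the
// Elvis operator supplies a fallback.
String targetEnv = this.params.TARGET_ENV ?: 'dev'
echo "target environment: ${targetEnv}"
</syntaxhighlight>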

==Scripted Pipeline Failure Handling== | |||

A Scripted Pipeline fails when an exception is thrown and reaches the pipeline layer. The pipeline code can use <code>try/catch/finally</code> semantics to control this behavior, by catching the exceptions and preventing them from reaching the pipeline layer.

<syntaxhighlight lang='groovy'>

stage('Some Stage') { | |||

try { | |||

throw new Exception ("the build has failed") | |||

} | |||

catch(Exception e) { | |||

// squelch the exception, the pipeline will not fail | |||

} | |||

} | |||

</syntaxhighlight> | |||

The pipeline also fails when a command invoked with [[#sh|sh]] exits with a non-zero exit code. The underlying implementation throws an exception and that makes the build fail. It is possible to configure sh to not fail the build automatically on non-zero exit code, with its [[#returnStatus|returnStatus]] option. | |||

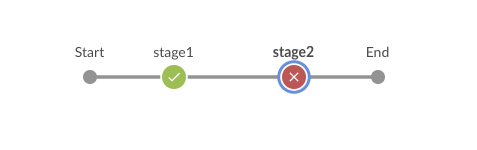

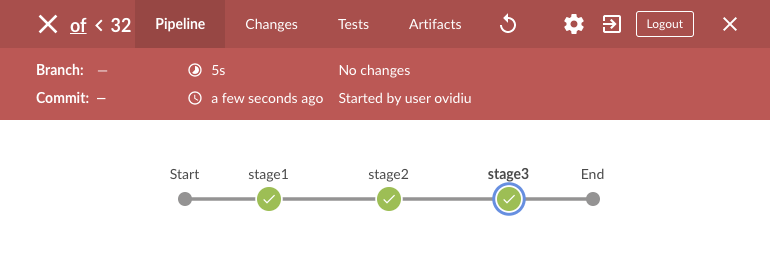

The first failure in a sequential execution will stop the build, no subsequent stages will be executed. The stage that caused the failure will be shown in red in Blue Ocean (in the example below, there were three sequential stages but stage3 did not get executed): | |||

[[Image:Failed_Jenkins_Stage.png|477px]] | |||

In this case the corresponding stage and the entire build will be marked as 'FAILURE'. | |||

A build can be programmatically marked as failed by setting the value of the [[Jenkins_currentBuild#result|currentBuild.result]] variable:

<syntaxhighlight lang='groovy'> | |||

currentBuild.result = 'FAILURE' | |||

</syntaxhighlight> | |||

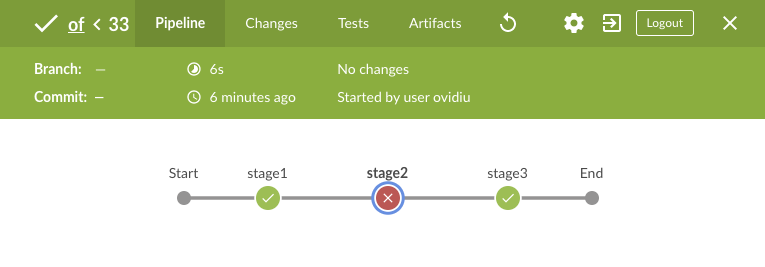

The entire build will be marked as failed ('FAILURE', 'red' build), but the stage in which the variable assignment was done, stage2 in this case, will not show as failed: | |||

:[[Image:Programmatic_Jenkins_Red_Build.png|770px]] | |||

<span id='catchError_Usage'></span>The opposite behavior of marking a specific stage as failed ('FAILURE'), but allowing the overall build to be successful can be obtained by using the [[#catchError|catchError]] basic step: | |||

<syntaxhighlight lang='groovy'> | |||

stage('stage2') { | |||

catchError(buildResult: 'SUCCESS', stageResult: 'FAILURE') { | |||

throw new RuntimeException("synthetic") | |||

} | |||

}

</syntaxhighlight> | |||

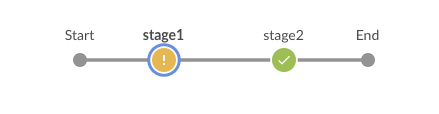

The result is a failed 'stage2' but a successful build: | |||

:[[Image:Programmatic_Jenkins_Red_Stage.png|765px]] | |||

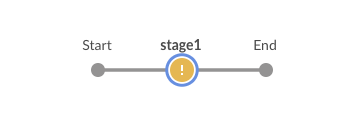

A stage result and the entire build result may also be influenced by the JUnit test report produced for the stage, if any. If the test report exists and is processed by the [[Jenkins_Pipeline_Syntax#junit|junit]] step, and the report contains test errors or failures, both are handled as "instability" of the build: the corresponding stage and the entire build are marked as UNSTABLE. The classic view and Blue Ocean render a yellow stage and build:

:[[Image:Unstable_and_Stable_Jenkins_Stages.png|439px]] | |||

Also see [[#junit|junit]] below and: {{Internal|Jenkins_currentBuild#result|currentBuild.result}}{{Internal|Jenkins_currentBuild#currentResult|currentBuild.currentResult}} | |||

=Declarative Pipeline=

{{External|https://www.jenkins.io/doc/book/pipeline/syntax/#declarative-pipeline}} | |||

Declarative Pipeline is a newer way of declaring Jenkins pipelines, and consists of a simpler, more opinionated syntax. Declarative Pipeline is an alternative to [[#Scripted_Pipeline|Scripted Pipeline]].

<syntaxhighlight lang='groovy'>

| Line 78: | Line 137: | ||

</syntaxhighlight>
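For orientation, a minimal Declarative Pipeline might look like the sketch below. The stage name and the message are placeholders, and <code>agent any</code> is only one of several possible agent choices:

<syntaxhighlight lang='groovy'>
pipeline {
    // run on any available executor
    agent any
    stages {
        stage('Build') {
            steps {
                // steps are the same pipeline steps used by Scripted Pipeline
                echo 'building'
            }
        }
    }
}
</syntaxhighlight>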

==Declarative Pipeline Directives==

====environment==== | |||

{{External|https://jenkins.io/doc/book/pipeline/syntax/#environment}} | |||

See: {{Internal|Jenkins_Pipeline_Environment_Variables#Declarative_Pipeline|Jenkins Pipeline Environment Variables}} | |||

====parameters==== | |||

{{External|https://jenkins.io/doc/book/pipeline/syntax/#parameters}} | |||

See: {{Internal|Jenkins_Pipeline_Parameters#Declarative_Pipeline_Paramenter_Declaration|Jenkins Pipeline Parameters}} | |||

==Declarative Pipeline Failure Handling== | |||

<font color=darkgray>TODO: https://www.jenkins.io/doc/book/pipeline/jenkinsfile/#handling-failure</font> | |||
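Declarative Pipeline usually reacts to failures in a <code>post</code> section rather than with <code>try</code>/<code>catch</code>. The following is a sketch only: the <code>./bin/build</code> script is hypothetical, and the messages are placeholders.

<syntaxhighlight lang='groovy'>
pipeline {
    agent any
    stages {
        stage('Build') {
            steps {
                // hypothetical build script; a non-zero exit code fails the stage
                sh './bin/build'
            }
        }
    }
    post {
        // executed only when the build result is FAILURE
        failure {
            echo 'the build failed'
        }
        // executed regardless of the build result
        always {
            echo 'cleanup goes here'
        }
    }
}
</syntaxhighlight>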

=<span id='Parameters_Types'></span><span id='String'></span><span id='Multi-Line_String_Parameter'></span><span id='File_Parameter'></span>Parameters= | |||

{{Internal|Jenkins Pipeline Parameters|Jenkins Pipeline Parameters}} | |||

=<span id='BUILD_TAG'></span><span id='JOB_NAME'></span><span id='JOB_BASE_NAME'></span>Environment Variables= | |||

{{Internal|Jenkins Pipeline Environment Variables|Jenkins Pipeline Environment Variables}} | |||

=Pipeline Steps=

{{External|https://jenkins.io/doc/pipeline/steps/}} | |||

====node==== | |||

{{External|https://jenkins.io/doc/pipeline/steps/workflow-durable-task-step/#-node-allocate-node}} | |||

Allocates an executor or a node, typically a worker, and runs the enclosed code in the context of the workspace of that worker. Node may take a label name, computer name or an expression. The labels are declared on workers when they are defined in the master configuration, in their respective "clouds". | |||

<syntaxhighlight lang='groovy'>

String NODE_LABEL = 'infra-worker' | |||

node(NODE_LABEL) { | |||

sh 'uname -a' | |||

} | |||

</syntaxhighlight> | |||

====stage==== | |||

{{External|https://www.jenkins.io/doc/pipeline/steps/pipeline-stage-step/#stage-stage}} | |||

The <code>stage</code> step defines a logical [[Jenkins_Concepts#Stage|stage]] of the pipeline. The <code>stage</code> creates a labeled block in the pipeline and allows executing a closure in the context of that block: | |||

<syntaxhighlight lang='groovy'> | |||

stage('stage A') { | |||

print 'pipeline> in stage A' | |||

} | |||

stage('stage B') { | |||

print 'pipeline> in stage B' | |||

} | |||

</syntaxhighlight> | |||

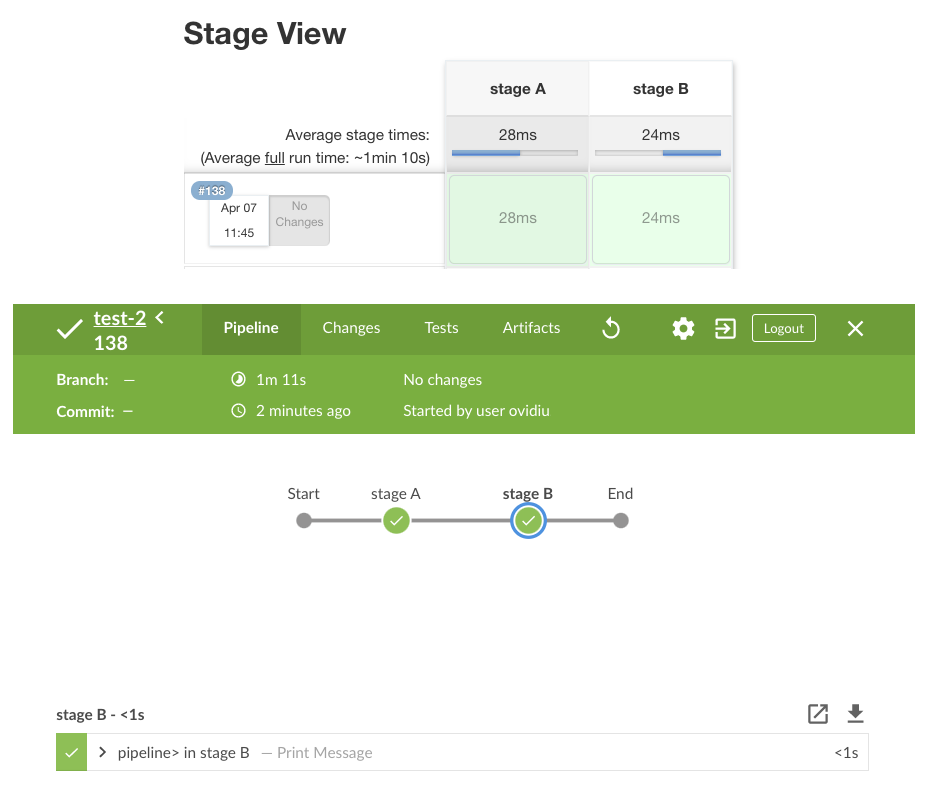

[[Image:Jenkins_Pipeline_Stages.png|925px]] | |||

Embedded stages as in this example are possible, and they will execute correctly, but they do not render well in Blue Ocean (Stage A.1 and Stage A.2 are not represented, just Stage A and B): | |||

<syntaxhighlight lang='groovy'> | |||

stage('stage A') { | |||

print('pipeline> in stage A') | |||

stage('stage A.1') { | |||

print('pipeline> in stage A.1') | |||

} | |||

stage('stage A.2') { | |||

print('pipeline> in stage A.2')

} | |||

} | |||

stage('stage B') { | |||

print('pipeline> in stage B') | |||

} | |||

</syntaxhighlight>

To control failure behavior at stage level, use the [[#catchError|catchError]] step, described below.

====<span id='Parallel_Stages'></span>parallel==== | |||

{{External|https://www.jenkins.io/doc/pipeline/steps/workflow-cps/#parallel-execute-in-parallel}} | |||

Takes a map from branch names to closures and an optional argument <code>failFast</code>, and executes the closure code in parallel. | |||

<syntaxhighlight lang='groovy'> | |||

parallel firstBranch: { | |||

// do something | |||

}, secondBranch: { | |||

// do something else | |||

}, | |||

failFast: true|false | |||

</syntaxhighlight> | |||

<syntaxhighlight lang='groovy'>

stage("tests") { | |||

parallel( | |||

"unit tests": { | |||

// run unit tests | |||

}, | |||

"coverage tests": { | |||

// run coverage tests | |||

} | |||

) | |||

} | |||

</syntaxhighlight> | |||

Allocation to different nodes can be performed inside the closure: | |||

<syntaxhighlight lang='groovy'> | |||

def tasks = [:] | |||

tasks["branch-1"] = { | |||

stage("task-1") { | |||

node('node_1') { | |||

sh 'echo $NODE_NAME' | |||

} | |||

} | |||

} | |||

tasks["branch-2"] = { | |||

stage("task-2") { | |||

node('node_2') {

sh 'echo $NODE_NAME' | |||

} | |||

} | |||

} | |||

parallel tasks | |||

</syntaxhighlight>
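When the branches are collected in a map, as above, <code>failFast</code> can be supplied as one more entry of the same map. This is a sketch of a commonly used pattern; the branch names and messages are placeholders:

<syntaxhighlight lang='groovy'>
def tasks = [:]

tasks['unit tests'] = {
    echo 'running unit tests'
}
tasks['coverage tests'] = {
    echo 'running coverage tests'
}

// failFast travels in the same map as the branch closures;
// when one branch fails, the remaining branches are aborted
tasks.failFast = true

parallel tasks
</syntaxhighlight>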

====sh==== | |||

{{External|https://www.jenkins.io/doc/pipeline/steps/workflow-durable-task-step/#sh-shell-script}} | |||

{{External|[https://github.com/ovidiuf/playground/tree/master/jenkins/pipelines/sh Playground sh]}} | |||

Execute a shell script command, or multiple commands, on multiple lines. It can be specified in-line or it can refer to a file available on the filesystem exposed to the Jenkins node. It needs to be enclosed by a [[#node|node]] to work. | |||

The metacharacter $ must be escaped: <code> \${LOGDIR}</code>, unless it refers to a variable from the Groovy context.

Example:

<syntaxhighlight lang="groovy"> | |||

stage.sh """ | |||

LOGDIR=${fileName}-logs | |||

mkdir -p \${LOGDIR}/something | |||

""".stripIndent() | |||

</syntaxhighlight> | |||

<syntaxhighlight lang="groovy"> | |||

stage.sh ''' | |||

LOGDIR=some-logs | |||

mkdir -p ${LOGDIR}/something | |||

'''.stripIndent() | |||

</syntaxhighlight> | |||

Both <code>"""..."""</code> and <code>'''...'''</code> Groovy constructs can be used. For more details on representing multi-line strings with <code>"""</code> or <code>'''</code>, see: {{Internal|Groovy#Multi-Line_Strings|Groovy | Multi-Line_Strings}}

=====<span id='returnStatus'></span>sh - Script Return Status===== | |||

By default, a script that exits with a non-zero return code causes the step and the pipeline [[Jenkins_Pipeline_Syntax#Scripted_Pipeline_Failure_Handling|to fail with an exception]]:

<syntaxhighlight lang='text'> | |||

ERROR: script returned exit code 1 | |||

</syntaxhighlight> | |||

To prevent that, set <code>returnStatus</code> to true, and the step will return the exit value of the script, instead of failing on a non-zero exit value. You may then compare it to zero and decide whether to fail the pipeline (throw an exception) or not from the Groovy layer that invoked <code>sh</code>.

<syntaxhighlight lang="groovy"> | |||

int exitCode = sh(returnStatus: true, script: './bin/do-something') | |||

if (exitCode != 0) throw new RuntimeException('my script failed') | |||

</syntaxhighlight> | |||

The pipeline log result on failure looks similar to: | |||

<syntaxhighlight lang='text'> | |||

[Pipeline] End of Pipeline | |||

java.lang.RuntimeException: my script failed | |||

at WorkflowScript.run(WorkflowScript:17) | |||

... | |||

</syntaxhighlight> | |||

Also see [[#Scripted_Pipeline_Failure_Handling|Scripted Pipeline Failure Handling]] section above. | |||

=====sh - Script stdout=====

By default, the standard output of the script is sent to the log. If <code>returnStdout</code> is set to true, the script's standard output is returned as a String as the step value. Call <code>trim()</code> to strip off the trailing newline.

The script's stderr is always sent to the log. | |||

<syntaxhighlight lang="groovy"> | |||

String result = sh(returnStdout: true, script: './bin/do-something').trim() | |||

</syntaxhighlight> | |||

=====sh - Obtaining both the Return Status and stdout===== | |||

If both <code>returnStatus</code> and <code>returnStdout</code> are turned on, <code>returnStatus</code> takes priority and the function returns the exit code. ⚠️ The stdout is discarded. | |||
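When both values are needed, one possible workaround is to redirect the script's stdout to a file and read it back with <code>readFile</code>. This is a sketch, assuming the script runs in the workspace; the file name <code>do-something.out</code> is an arbitrary choice for illustration:

<syntaxhighlight lang='groovy'>
node {
    // returnStatus keeps the step from failing on a non-zero exit code;
    // stdout is redirected to a file in the current directory instead
    int exitCode = sh(returnStatus: true, script: './bin/do-something > do-something.out')

    // read the captured stdout back into the pipeline
    String stdout = readFile('do-something.out').trim()

    echo "exit code: ${exitCode}, stdout: ${stdout}"
}
</syntaxhighlight>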

=====sh - Obtaining stdout and Preventing the Pipeline to Fail on Error=====

In case you want to use the external shell command to return a result to the pipeline, but not fail the pipeline when the external command fails, use this pattern: | |||

<syntaxhighlight lang='groovy'> | |||

try { | |||

String stdout = sh(returnStdout: true, returnStatus: false, script: 'my-script') | |||

// use the content returned by stdout in the pipeline | |||

print "we got this as result of sh invocation: ${stdout.trim()}" | |||

} | |||

catch(Exception e) { | |||

// catching the error will prevent pipeline failure, both stdout and stderr are captured in the pipeline log | |||

print "the invocation failed" | |||

} | |||

</syntaxhighlight> | |||

If the command fails, both its stdout and stderr are captured in the pipeline log. | |||

=====sh - Label===== | |||

If a "label" argument is specified, the stage will render that label in the Jenkins and Blue Ocean logs: | |||

<syntaxhighlight lang="groovy"> | |||

sh(script: './bin/do-something', label: 'this will show in logs') | |||

</syntaxhighlight> | |||

====ws====

| Line 170: | Line 338: | ||

<font color=darkgray>This is how we may be able to return the result: https://support.cloudbees.com/hc/en-us/articles/218554077-How-to-set-current-build-result-in-Pipeline</font>

====junit==== | |||

{{External|https://jenkins.io/doc/pipeline/steps/junit/#-junit-archive-junit-formatted-test-results}} | |||

Jenkins understands the [[JUnit#JUnit_XML_Reporting_File_Format|JUnit test report XML format]] (which is also used by TestNG). To use this feature, set up the build to run tests, which will generate their test reports into a local agent directory, then specify the path to the test reports in [http://ant.apache.org/manual/Types/fileset.html Ant glob syntax] to the JUnit plugin pipeline step <code>junit</code>: | |||

<syntaxhighlight lang='groovy'> | |||

stage.junit '**/target/*-report/TEST-*.xml' | |||

</syntaxhighlight> | |||

⚠️ Do not specify the path to a single test report file. The <code>junit</code> step will not load the file, even if it exists and it is a valid report, and will print an error message similar to: | |||

<syntaxhighlight lang='text'> | |||

[Pipeline] junit | |||

Recording test results | |||

No test report files were found. Configuration error? | |||

</syntaxhighlight> | |||

Always use Ant glob syntax to specify how the report(s) are to be located.

Jenkins uses this step to ingest the test results, process them and provide historical test result trends, a web UI for viewing test reports, tracking failures, etc. | |||
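A common way to wire the step into a stage is to call it from a <code>finally</code> block, so the reports are recorded even when the test command exits with a non-zero code. This is a sketch; <code>./bin/run-tests</code> is a hypothetical script assumed to write its reports where the glob can find them:

<syntaxhighlight lang='groovy'>
node {
    stage('Test') {
        try {
            // hypothetical test runner; a non-zero exit code throws an exception
            sh './bin/run-tests'
        }
        finally {
            // record whatever reports were produced, using an Ant glob
            junit '**/target/*-report/TEST-*.xml'
        }
    }
}
</syntaxhighlight>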

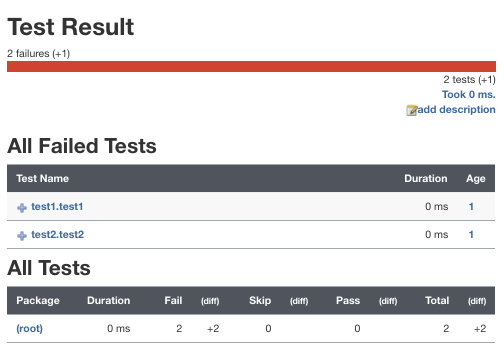

Both JUnit errors and failures are reported by the <code>junit</code> step as "failures", even if the JUnit XML report indicates both errors and failures. The following JUnit report: | |||

<syntaxhighlight lang='xml'> | |||

<testsuite name="testsuite1" tests="2" errors="1" failures="1"> | |||

<testcase name="test1" classname="test1"> | |||

<error message="I have errored out"></error> | |||

</testcase> | |||

<testcase name="test2" classname="test2"> | |||

<failure message="I have failed"></failure> | |||

</testcase> | |||

</testsuite> | |||

</syntaxhighlight> | |||

produces this Jenkins report: | |||

::::[[Image:Jenkins_JUnit_Errors_and_Failures.png|504px]] | |||

The presence of at least one JUnit failure marks the corresponding stage, and the entire build as "UNSTABLE". The stage is rendered in the classical view and also in Blue Ocean in yellow: | |||

[[Image:Unstable_Jenkins_Stage.png|349px]] | |||

Also see: [[#Scripted_Pipeline_Failure_Handling|Scripted Pipeline Failure Handling]] above. | |||

====checkout==== | |||

{{External|https://www.jenkins.io/doc/pipeline/steps/workflow-scm-step/}} | |||

The "checkout" step is provided by the pipeline "SCM" plugin. | |||

=====Git Plugin===== | |||

{{External|https://plugins.jenkins.io/git/}} | |||

<syntaxhighlight lang='groovy'> | |||

checkout([ | |||

$class: 'GitSCM', | |||

branches: [[name: 'develop']], | |||

doGenerateSubmoduleConfigurations: false, | |||

extensions: [ | |||

[$class: 'GitLFSPull'], | |||

[$class: 'CloneOption', noTags: true, reference: '', timeout: 40, depth: 1], | |||

[$class: 'PruneStaleBranch'] | |||

], | |||

submoduleCfg: [], | |||

userRemoteConfigs: [ | |||

[url: 'git@github.com:some-org/some-project.git', | |||

credentialsId: 'someCredId'] | |||

] | |||

]) | |||

</syntaxhighlight> | |||

The simplest configuration that works: | |||

<syntaxhighlight lang='groovy'> | |||

checkout([ | |||

$class: 'GitSCM', | |||

branches: [[name: 'master']], | |||

userRemoteConfigs: [ | |||

[url: 'https://github.com/ovidiuf/playground.git'] | |||

] | |||

]) | |||

</syntaxhighlight> | |||

The step checks out the repository into the current directory; it does not create a top-level directory ('some-project' in this case). .git will be created in the current directory. If the current directory is the workspace, .git will be created in the workspace root.

====withCredentials==== | |||

A step that allows using credentials defined in the Jenkins server. See: {{Internal|Jenkins Credentials Binding Plugin#Overview|Jenkins Credentials Binding Plugin}} | |||
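A sketch of the step with a username/password binding follows. The credentials ID, the variable names and the URL are placeholders chosen for illustration; the credential itself is assumed to already exist in the Jenkins server:

<syntaxhighlight lang='groovy'>
node {
    withCredentials([usernamePassword(
            credentialsId: 'some-cred-id',   // hypothetical credentials ID
            usernameVariable: 'SVC_USER',
            passwordVariable: 'SVC_PASS')]) {
        // single quotes: the shell, not Groovy, expands the variables,
        // so the secret is not interpolated into the script text
        sh 'curl -u "$SVC_USER:$SVC_PASS" https://example.com/api'
    }
}
</syntaxhighlight>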

==Basic Steps==

| Line 175: | Line 419: | ||

These basic steps are invoked on <code>stage.</code>. In a Jenkinsfile, inside a stage, invoke them on <code>this.</code> or simply invoke them directly, without a qualifier.

====echo====

<syntaxhighlight lang='groovy'>

echo "pod memory limit: ${params.POD_MEMORY_LIMIT_Gi}" | |||

</syntaxhighlight> | |||

<syntaxhighlight lang='groovy'> | |||

echo """ | |||

Run Configuration: | |||

something: ${SOMETHING} | |||

something else: ${SOMETHING_ELSE} | |||

""" | |||

</syntaxhighlight> | |||

====error====

{{External|https://jenkins.io/doc/pipeline/steps/workflow-basic-steps/#error-error-signal}}

This step signals an error and fails the pipeline. | |||

<syntaxhighlight lang='groovy'> | |||

error 'some message' | |||

</syntaxhighlight> | |||

Alternatively, you can simply: | |||

<syntaxhighlight lang='groovy'>

throw new Exception("some message")

</syntaxhighlight>

| Line 230: | Line 487: | ||

}

</syntaxhighlight>

====withEnv==== | |||

{{External|https://jenkins.io/doc/pipeline/steps/workflow-basic-steps/#withenv-set-environment-variables}} | |||

Sets one or more environment variables within a block, making them available to any external process initiated within that scope. If a variable value contains spaces, it does not need extra quoting inside the <code>'NAME=value'</code> string, as shown below:

<syntaxhighlight lang='groovy'> | |||

node { | |||

withEnv(['VAR_A=something', 'VAR_B=something else']) { | |||

sh 'echo "VAR_A: ${VAR_A}, VAR_B: ${VAR_B}"' | |||

} | |||

} | |||

</syntaxhighlight> | |||

====catchError==== | |||

{{External|https://www.jenkins.io/doc/pipeline/steps/workflow-basic-steps/#catcherror-catch-error-and-set-build-result-to-failure}} | |||

<syntaxhighlight lang='groovy'> | |||

catchError { | |||

sh 'some-command-that-might-fail' | |||

} | |||

</syntaxhighlight> | |||

If the body throws an exception, mark the build as a failure, but continue to execute the pipeline from the statement following <code>catchError</code> step. If an exception is thrown, the behavior can be configured to: | |||

* print a message | |||

* set the build result other than failure | |||

* change the [[#stage|stage]] result | |||

* ignore certain kinds of exceptions that are used to interrupt the build | |||

If <code>catchError</code> is used, there's no need for <code>finally</code>, as the exception is caught and does not propagate up.

The alternative is to use plain <code>try/catch/finally</code> blocks. | |||

Configuration: | |||

<syntaxhighlight lang='groovy'> | |||

catchError(message: 'some message', stageResult: 'FAILURE'|'SUCCESS'|... , buildResult: 'FAILURE'|'SUCCESS'|..., catchInterruptions: true) { | |||

sh 'some-command-that-might-fail' | |||

} | |||

</syntaxhighlight> | |||

* '''message''' an optional String message that will be logged to the console. If the stage result is specified, the message will also be associated with that result and may be shown in visualizations.

* '''stageResult''' an optional String that will be set as the stage result when an error is caught. Use SUCCESS or null to keep the stage result from being set when an error is caught.

* '''buildResult''' an optional String that will be set as overall build result when an error is caught. Note that the build result can only get worse, so you cannot change the result to SUCCESS if the current result is UNSTABLE or worse. Use SUCCESS or null to keep the build result from being set when an error is caught. | |||

* '''catchInterruptions''' If true, certain types of exceptions that are used to interrupt the flow of execution for Pipelines will be caught and handled by the step. If false, those types of exceptions will be caught and immediately rethrown. Examples of these types of exceptions include those thrown when a build is manually aborted through the UI and those thrown by the timeout step. | |||
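To make the options above concrete, the following sketch marks the stage as failed and the build as unstable, then lets the next stage run. The stage names and the <code>./bin/lint</code> script are hypothetical:

<syntaxhighlight lang='groovy'>
node {
    stage('Lint') {
        catchError(message: 'lint failed', buildResult: 'UNSTABLE', stageResult: 'FAILURE') {
            // hypothetical script; a non-zero exit code throws an exception
            sh './bin/lint'
        }
    }
    stage('Package') {
        // still executed, because catchError did not let the exception propagate
        echo 'packaging'
    }
}
</syntaxhighlight>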

The default behavior for <code>catchInterruptions</code> is "true": the code executing inside <code>catchError()</code> will be interrupted, whether it is an external command or pipeline code, and the code immediately following <code>catchError()</code> closure '''is executed'''. | |||

<syntaxhighlight lang='groovy'> | |||

stage('stage1') { | |||

catchError() { | |||

sh 'jenkins/pipelines/failure/long-running' | |||

} | |||

print ">>>> post catchError()" | |||

} | |||

</syntaxhighlight> | |||

<syntaxhighlight lang='text'> | |||

[Pipeline] stage | |||

[Pipeline] { (stage1) | |||

[Pipeline] catchError | |||

[Pipeline] { | |||

[Pipeline] sh | |||

entering long running .... | |||

sleeping for 60 secs | |||

Aborted by ovidiu | |||

Sending interrupt signal to process | |||

jenkins/pipelines/failure/long-running: line 6: 5147 Terminated sleep ${sleep_secs} | |||

done sleeping, exiting long running .... | |||

[Pipeline] } | |||

[Pipeline] // catchError | |||

[Pipeline] echo | |||

>>>> post catchError() | |||

</syntaxhighlight> | |||

<font color=darkgray>However, it seems that the same behavior occurs if <code>catchError()</code> is invoked with <code>catchInterruptions: true</code>, so it is not clear what the difference is.</font>

Probably the safest way to invoke it is to use the following pattern: this way, even if some exceptions bubble up, the cleanup work will still be performed:

<syntaxhighlight lang='groovy'> | |||

stage('stage1') { | |||

try { | |||

catchError() { | |||

sh 'jenkins/pipelines/failure/long-running' | |||

} | |||

} | |||

finally { | |||

print ">>>> execute mandatory cleanup code" | |||

} | |||

} | |||

</syntaxhighlight> | |||

Also see [[#catchError_Usage|Scripted Pipeline Failure Handling]] section above. | |||

==Basic Steps that Deal with Files== | |||

====dir==== | |||

{{External|https://www.jenkins.io/doc/pipeline/steps/workflow-basic-steps/#dir-change-current-directory}} | |||

Change current directory, on the node, while the pipeline code runs on the master. | |||

If the <code>dir()</code> argument is a relative directory, the new directory available to the code in the closure is relative to the current directory before the call, obtained with <code>pwd()</code>: | |||

<syntaxhighlight lang='groovy'> | |||

dir("dirA") { | |||

// execute in the context of "pwd()/dirA", where pwd()

// is the current directory before the call, a subdirectory of the workspace

} | |||

</syntaxhighlight> | |||

If the <code>dir()</code> argument is an absolute directory, the new directory available to the code in the closure is the absolute directory specified as argument: | |||

<syntaxhighlight lang='groovy'> | |||

dir("/tmp") { | |||

// execute in /tmp | |||

} | |||

</syntaxhighlight> | |||

In both cases, the current directory is restored upon closure exit. | |||
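The restore-on-exit behavior can be observed with <code>pwd()</code>. A small sketch, using a placeholder directory name:

<syntaxhighlight lang='groovy'>
node {
    echo "before: ${pwd()}"

    dir('dirA') {
        // the current directory is now <previous directory>/dirA
        echo "inside: ${pwd()}"
    }

    // back to the directory that was current before dir() was called
    echo "after: ${pwd()}"
}
</syntaxhighlight>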

Also see: {{Internal|Jenkins_Concepts#Pipeline_and_Files|Pipeline and Files}} | |||

====deleteDir==== | |||

{{External|https://www.jenkins.io/doc/pipeline/steps/workflow-basic-steps/#deletedir-recursively-delete-the-current-directory-from-the-workspace}} | |||

To recursively delete a directory and all its contents, step into the directory with <code>dir()</code> and then use <code>deleteDir()</code>: | |||

<syntaxhighlight lang='groovy'> | |||

dir('tmp') { | |||

// ... | |||

deleteDir() | |||

} | |||

</syntaxhighlight> | |||

This will delete ./tmp content and the ./tmp directory itself. | |||

====pwd==== | |||

{{External|https://www.jenkins.io/doc/pipeline/steps/workflow-basic-steps/#pwd-determine-current-directory}} | |||

Return the current directory path on node as a string, while the pipeline code runs on the master. | |||

Parameters: | |||

'''tmp''' (boolean, optional) If selected, return a temporary directory associated with the workspace rather than the workspace itself. This is an appropriate place to put temporary files which should not clutter a source checkout; local repositories or caches; etc. | |||

<syntaxhighlight lang='groovy'> | |||

println "current directory: ${pwd()}" | |||

</syntaxhighlight> | |||

<syntaxhighlight lang='groovy'> | |||

println "temporary directory: ${pwd(tmp: true)}" | |||

</syntaxhighlight> | |||

Also see: {{Internal|Jenkins_Concepts#Pipeline_and_Files|Pipeline and Files}} | |||

====readFile==== | |||

{{External|https://jenkins.io/doc/pipeline/steps/workflow-basic-steps/#readfile-read-file-from-workspace}} | |||

Read a file from the workspace, '''on the node this operation is made in context of'''. | |||

<syntaxhighlight lang='groovy'> | |||

String versionFile = readFile("${stage.WORKSPACE}/terraform/my-module/VERSION") | |||

</syntaxhighlight> | |||

If the file does not exist, the step throws <code>java.nio.file.NoSuchFileException: /no/such/file.txt</code> | |||
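To avoid the exception, the read can be guarded with <code>fileExists</code>. This is a sketch; the relative path and the <code>'unknown'</code> fallback are illustrative choices:

<syntaxhighlight lang='groovy'>
node {
    // path relative to the current directory on the node
    String path = 'terraform/my-module/VERSION'

    // fall back to a placeholder when the file is missing
    String version = fileExists(path) ? readFile(path).trim() : 'unknown'

    echo "version: ${version}"
}
</syntaxhighlight>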

====writeFile==== | |||

{{External|https://jenkins.io/doc/pipeline/steps/workflow-basic-steps/#writefile-write-file-to-workspace}} | |||

writeFile will create any intermediate directory if necessary. | |||
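A short sketch of writing into a nested path and reading the content back; the path and the text are placeholders:

<syntaxhighlight lang='groovy'>
node {
    // 'out' and 'out/reports' are created if they do not exist yet
    writeFile(file: 'out/reports/summary.txt', text: 'all good')

    // read the file back to confirm it was written
    echo readFile('out/reports/summary.txt')
}
</syntaxhighlight>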

To create a directory, <code>dir()</code> into the nonexistent directory, then create a dummy file with <code>writeFile(file: '.dummy', text: '')</code>:

<syntaxhighlight lang='groovy'> | |||

dir('tmp') { | |||

writeFile(file: '.dummy', text: '') | |||

} | |||

</syntaxhighlight> | |||

Alternatively, the directory can be created with a shell command: | |||

<syntaxhighlight lang='groovy'> | |||

sh 'mkdir ./tmp' | |||

</syntaxhighlight> | |||

Also see: {{Internal|Jenkins_Concepts#Pipeline_and_Files|Pipeline and Files}} | |||

====fileExists==== | |||

{{External|https://www.jenkins.io/doc/pipeline/steps/workflow-basic-steps/#fileexists-verify-if-file-exists-in-workspace}} | |||

Also see: {{Internal|Jenkins_Concepts#Pipeline_and_Files|Pipeline and Files}} | |||

fileExists can be used on directories as well. This is how to check whether a directory exists: | |||

<syntaxhighlight lang='groovy'> | |||

dir('dirA') { | |||

if (fileExists('/')) { | |||

println "directory exists" | |||

} | |||

else { | |||

println "directory does not exist" | |||

} | |||

} | |||

</syntaxhighlight> | |||

<code>fileExists('/')</code>, <code>fileExists('.')</code> and <code>fileExists('')</code> are equivalent: they all check for the existence of the directory that the last <code>dir()</code> stepped into. The last form, <code>fileExists('')</code>, issues a warning, so it is not preferred:

<syntaxhighlight lang='text'> | |||

The fileExists step was called with a null or empty string, so the current directory will be checked instead. | |||

</syntaxhighlight> | |||

====findFiles==== | |||

{{External|https://www.jenkins.io/doc/pipeline/steps/pipeline-utility-steps/#findfiles-find-files-in-the-workspace}} | |||

Find files in workspace: | |||

<syntaxhighlight lang='groovy'> | |||

def files = findFiles(glob: '**/Test-*.xml', excludes: '') | |||

</syntaxhighlight> | |||

Uses Ant style pattern: https://ant.apache.org/manual/dirtasks.html#patterns | |||

Returns an array of instances for which the following attributes are available:

* name: the name of the file and extension, without any path component (e.g. "test1.bats") | |||

* path: the relative path to the current directory set with [[#dir|dir()]], including the name of the file (e.g. "dirA/subDirA/test1.bats") | |||

* directory: a boolean which is true if the file is a directory, false otherwise. | |||

* length: length in bytes. | |||

* lastModified: 1617772442000 | |||

Example:
<syntaxhighlight lang='groovy'>
dir('test') {
    def files = findFiles(glob: '**/*.bats')
    for(def f: files) {
        print "name: ${f.name}, path: ${f.path}, directory: ${f.directory}, length: ${f.length}, lastModified: ${f.lastModified}"
    }
}
</syntaxhighlight>
Playground: {{External|[https://github.com/ovidiuf/playground/tree/master/jenkins/pipelines/findFiles playground/jenkins/pipelines/findFiles]}}

==Core==

===archiveArtifacts===
{{External|https://jenkins.io/doc/pipeline/steps/core/#-archiveartifacts-archive-the-artifacts}}

Archives the build artifacts (for example, distribution zip files or jar files) so that they can be downloaded later. Archived files will be accessible from the Jenkins webpage. Normally, Jenkins keeps artifacts for a build as long as a build log itself is kept. Note that the Maven job type automatically archives any produced Maven artifacts. Any artifacts configured here will be archived on top of that. Automatic artifact archiving can be disabled under the advanced Maven options.

<syntaxhighlight lang='groovy'>
</syntaxhighlight>

===fingerprint===

=@NonCPS=
{{Internal|Jenkins_Concepts#CPS|Jenkins Concepts | CPS}}

=Build Summary=
<syntaxhighlight lang='groovy'>
//
// write /tmp/summary-section-1.html
//
def summarySection1 = util.catFile('/tmp/summary-section-1.html')
if (summarySection1) {
    def summary = manager.createSummary('document.png')
    summary.appendText(summarySection1, false)
}

//
// write /tmp/summary-section-2.html
//
def summarySection2 = util.catFile('/tmp/summary-section-2.html')
if (summarySection2) {
    def summary = manager.createSummary('document.png')
    summary.appendText(summarySection2, false)
}
</syntaxhighlight>

=Dynamically Loaded Classes and Constructors=

If classes are loaded dynamically in the Jenkinsfile, do not use constructors and <code>new</code>. Use MyClass.newInstance(...).

=Fail a Build=

See [[#error|error]] above.

=Dynamically Loading Groovy Code from Repository into a Pipeline=

This playground example shows how to dynamically load Groovy classes stored in a GitHub repository into a pipeline.

<font color=darkgray>The example is not complete, in that invocation of a static method from Jenkinsfile does not work yet.</font>

{{External|https://github.com/ovidiuf/playground/tree/master/jenkins/pipelines/dynamic-groovy-loader}}

=Groovy on Jenkins Idiosyncrasies=

==Prefix Static Method Invocations with Declaring Class Name when Calling from Subclass==

Prefix the static method calls with the class name that declares them when calling from a subclass, otherwise you'll get a:
<syntaxhighlight lang='text'>
hudson.remoting.ProxyException: groovy.lang.MissingMethodException: No signature of method: java.lang.Class.locateOverlay() is applicable for argument types: (WorkflowScript, playground.jenkins.kubernetes.KubernetesCluster, playground.jenkins.PlatformVersion, java.lang.String, java.lang.String) values: [WorkflowScript@1db9ab90, <playground.jenkins.kubernetes.KubernetesCluster@376dc438>, ...]
</syntaxhighlight>

Latest revision as of 22:16, 15 May 2021

External

- https://jenkins.io/doc/book/pipeline/syntax/

- https://jenkins.io/doc/pipeline/steps/

- https://jenkins.io/doc/pipeline/steps/core/

Internal

Scripted Pipeline

Scripted Pipeline is the classical way of declaring a Jenkins Pipeline, preceding Declarative Pipeline. Unlike the Declarative Pipeline, the Scripted Pipeline is a general-purpose DSL built with Groovy. The pipelines are declared in Jenkinsfiles and executed from the top of the Jenkinsfile downwards, like most traditional scripts in Groovy. Groovy syntax is available directly in the Scripted Pipeline declaration. The flow control can be declared with if/else conditionals or via Groovy's exception handling support with try/catch/finally.

The simplest pipeline declaration:

echo 'pipeline started'

A more complex one:

node('some-worker-label') {

echo 'Pipeline logic starts'

stage('Build') {

if (env.BRANCH_NAME == 'master') {

echo 'this is only executed on master'

}

else {

echo 'this is executed elsewhere'

}

}

stage('Test') {

// ...

}

stage('Deploy') {

// ...

}

stage('Example') {

try {

sh 'exit 1'

}

catch(ex) {

echo 'something failed'

throw ex

}

}

}

The basic building block of the Scripted Pipeline syntax is the step. The Scripted Pipeline does not introduce any steps that are specific to its syntax. The generic pipeline steps, such as node, stage, parallel, etc. are available here: Pipeline Steps.

Scripted Pipeline at Runtime

When the Jenkins server starts to execute the pipeline, it pulls the Jenkinsfile either from a repository, following a checkout sequence similar to the one shown here, or from the pipeline configuration, if it is specified in-line. Then the Jenkins instance instantiates a WorkflowScript (org.jenkinsci.plugins.workflow.cps.CpsScript.java) instance. The "script" instance can be used to access the following state elements:

- pipeline parameters, with this.params, which is a Map.
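As a minimal sketch of reading parameters through the script instance, assuming a hypothetical string parameter named DEPLOY_ENV has been defined on the job:

```groovy
// DEPLOY_ENV is a hypothetical parameter name, used for illustration only.
// params is exposed on the script instance as a Map of parameter names to values.
String deployEnv = params.DEPLOY_ENV ?: 'staging'
echo "deploying to: ${deployEnv}"

// this.params and params refer to the same Map inside the Jenkinsfile
echo "all parameter names: ${this.params.keySet()}"
```

The fallback value guards against the parameter being undefined, which can happen on the first run of a job whose parameters are declared by the pipeline itself.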

Scripted Pipeline Failure Handling

A Scripted Pipeline fails when an exception is thrown and reaches the pipeline layer. The pipeline code can use try/catch/finally semantics to control this behavior, by catching the exceptions and preventing them from reaching the pipeline layer.

stage('Some Stage') {

try {

throw new Exception ("the build has failed")

}

catch(Exception e) {

// squelch the exception, the pipeline will not fail

}

}

The pipeline also fails when a command invoked with sh exits with a non-zero exit code. The underlying implementation throws an exception and that makes the build fail. It is possible to configure sh to not fail the build automatically on non-zero exit code, with its returnStatus option. The first failure in a sequential execution will stop the build, no subsequent stages will be executed. The stage that caused the failure will be shown in red in Blue Ocean (in the example below, there were three sequential stages but stage3 did not get executed):

In this case the corresponding stage and the entire build will be marked as 'FAILURE'.

A build can be programmatically marked as failed by setting the value of the currentBuild.result variable:

currentBuild.result = 'FAILURE'

The entire build will be marked as failed ('FAILURE', 'red' build), but the stage in which the variable assignment was done, stage2 in this case, will not show as failed:

The opposite behavior of marking a specific stage as failed ('FAILURE'), but allowing the overall build to be successful can be obtained by using the catchError basic step:

stage('stage2') {

catchError(buildResult: 'SUCCESS', stageResult: 'FAILURE') {

throw new RuntimeException("synthetic")

}

}

The result is a failed 'stage2' but a successful build:

A stage result and the entire build result may also be influenced by the JUnit test report produced for the stage, if any. If the test report exists and it is processed by the junit step, and if the report contains test errors and failures, they're both handled as "instability" of the build and the corresponding stage and the entire build will be marked as UNSTABLE. The classic view and Blue Ocean will render a yellow stage and build:

Also see junit below and:

Declarative Pipeline

Declarative Pipeline is a newer way of declaring Jenkins pipelines, and consists of a simpler and more opinionated syntax. Declarative Pipeline is an alternative to Scripted Pipeline.

pipeline {

agent any

options {

skipStagesAfterUnstable()

}

stages {

stage('Build') {

steps {

sh 'make'

}

}

stage('Test'){

steps {

sh 'make check'

junit 'reports/**/*.xml'

}

}

stage('Deploy') {

steps {

sh 'make publish'

}

}

}

}

Declarative Pipeline Directives

environment

See:

parameters

See:

Declarative Pipeline Failure Handling

TODO: https://www.jenkins.io/doc/book/pipeline/jenkinsfile/#handling-failure
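Until this section is filled in, the following is a minimal sketch of the post section that Declarative Pipeline offers for reacting to the build outcome. The stage content and the messages are illustrative assumptions, not taken from an actual project:

```groovy
pipeline {
    agent any
    stages {
        stage('Build') {
            steps {
                sh 'make'
            }
        }
    }
    post {
        failure {
            // runs only if the build result is FAILURE
            echo 'the build failed'
        }
        unstable {
            // runs only if the build result is UNSTABLE, for example after junit recorded test failures
            echo 'the build is unstable'
        }
        always {
            // runs regardless of the build result
            echo 'cleanup goes here'
        }
    }
}
```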

Parameters

Environment Variables

Pipeline Steps

node

Allocates an executor or a node, typically a worker, and runs the enclosed code in the context of the workspace of that worker. Node may take a label name, computer name or an expression. The labels are declared on workers when they are defined in the master configuration, in their respective "clouds".

String NODE_LABEL = 'infra-worker'

node(NODE_LABEL) {

sh 'uname -a'

}

stage

The stage step defines a logical stage of the pipeline. The stage creates a labeled block in the pipeline and allows executing a closure in the context of that block:

stage('stage A') {

print 'pipeline> in stage A'

}

stage('stage B') {

print 'pipeline> in stage B'

}

Embedded stages as in this example are possible, and they will execute correctly, but they do not render well in Blue Ocean (Stage A.1 and Stage A.2 are not represented, just Stage A and B):

stage('stage A') {

print('pipeline> in stage A')

stage('stage A.1') {

print('pipeline> in stage A.1')

}

stage('stage A.2') {

print('pipeline> in stage A.2')

}

}

stage('stage B') {

print('pipeline> in stage B')

}


To control failure behavior at stage level, use catchError step, described below.

parallel

Takes a map from branch names to closures and an optional argument failFast, and executes the closure code in parallel.

parallel firstBranch: {

// do something

}, secondBranch: {

// do something else

},

failFast: true|false

stage("tests") {

parallel(

"unit tests": {

// run unit tests

},

"coverage tests": {

// run coverage tests

}

)

}

Allocation to different nodes can be performed inside the closure:

def tasks = [:]

tasks["branch-1"] = {

stage("task-1") {

node('node_1') {

sh 'echo $NODE_NAME'

}

}

}

tasks["branch-2"] = {

stage("task-2") {

node('node_2') {

sh 'echo $NODE_NAME'

}

}

}

parallel tasks
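When the branches are collected in a map, failFast can be passed as one more map entry. The following is a sketch under that assumption; the branch names and shell commands are hypothetical:

```groovy
def branches = [:]

branches['quick-failure'] = {
    node {
        sh 'exit 1'      // this branch fails almost immediately
    }
}

branches['slow-branch'] = {
    node {
        sh 'sleep 60'    // with failFast, this branch is aborted once the other one fails
    }
}

// failFast sits in the same map as the branch closures
branches.failFast = true

parallel branches
```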

sh

Execute a shell script command, or multiple commands, on multiple lines. It can be specified in-line or it can refer to a file available on the filesystem exposed to the Jenkins node. It needs to be enclosed by a node to work.

Inside double-quoted Groovy strings, the metacharacter $ must be escaped: \${LOGDIR}, unless it refers to a variable from the Groovy context.

Example:

stage.sh """

LOGDIR=${fileName}-logs

mkdir -p \${LOGDIR}/something

""".stripIndent()

stage.sh '''

LOGDIR=some-logs

mkdir -p ${LOGDIR}/something

'''.stripIndent()

Both """...""" and '''...''' Groovy constructs can be used. For more details on representing multi-line strings with """ or ''', see:

sh - Script Return Status

By default, a script that exits with a non-zero return code causes the step and the pipeline to fail with an exception:

ERROR: script returned exit code 1

To prevent that, set returnStatus to true, and the step will return the exit value of the script instead of failing on a non-zero exit value. You may then compare it to zero and decide whether to fail the pipeline (throw an exception) or not from the Groovy layer that invoked sh.

int exitCode = sh(returnStatus: true, script: './bin/do-something')

if (exitCode != 0) throw new RuntimeException('my script failed')

The pipeline log result on failure looks similar to:

[Pipeline] End of Pipeline

java.lang.RuntimeException: my script failed

at WorkflowScript.run(WorkflowScript:17)

...

Also see Scripted Pipeline Failure Handling section above.

sh - Script stdout

By default, the standard output of the script is sent to the log. If returnStdout is set to true, the script's standard output is returned as a String as the step value. Call trim() to strip off the trailing newline.

The script's stderr is always sent to the log.

String result = sh(returnStdout: true, script: './bin/do-something').trim()

sh - Obtaining both the Return Status and stdout

If both returnStatus and returnStdout are turned on, returnStatus takes priority and the function returns the exit code. ⚠️ The stdout is discarded.
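One possible workaround, sketched here as an assumption rather than an officially recommended pattern, is to keep returnStatus and redirect the script's stdout to a file that is read back afterwards. The file name do-something.out is hypothetical:

```groovy
node {
    // the exit code is returned by the step; stdout goes to a file in the workspace
    int exitCode = sh(returnStatus: true, script: './bin/do-something > do-something.out')

    // read the captured stdout back into the pipeline
    String stdout = readFile('do-something.out').trim()

    echo "exit code: ${exitCode}, stdout: ${stdout}"
}
```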

sh - Obtaining stdout and Preventing the Pipeline to Fail on Error

In case you want to use the external shell command to return a result to the pipeline, but not fail the pipeline when the external command fails, use this pattern:

try {

String stdout = sh(returnStdout: true, returnStatus: false, script: 'my-script')

// use the content returned by stdout in the pipeline

print "we got this as result of sh invocation: ${stdout.trim()}"

}

catch(Exception e) {

// catching the error will prevent pipeline failure, both stdout and stderr are captured in the pipeline log

print "the invocation failed"

}

If the command fails, both its stdout and stderr are captured in the pipeline log.

sh - Label

If a "label" argument is specified, the stage will render that label in the Jenkins and Blue Ocean logs:

sh(script: './bin/do-something', label: 'this will show in logs')

ws

Allocate workspace.
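A minimal sketch of the step, assuming /tmp/custom-workspace is an acceptable (hypothetical) location on the agent:

```groovy
node {
    // the path is hypothetical; it is resolved on the node that runs the enclosing block
    ws('/tmp/custom-workspace') {
        // steps in this closure run with the custom directory as their workspace
        echo "current directory: ${pwd()}"
        sh 'ls -la'
    }
}
```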

build

This step is how a main pipeline launches a subordinate pipeline.

This is how we may be able to return the result: https://support.cloudbees.com/hc/en-us/articles/218554077-How-to-set-current-build-result-in-Pipeline
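The following sketch launches a downstream job and inspects its result. The job name downstream-job and the VERSION parameter are hypothetical:

```groovy
// 'downstream-job' and VERSION are hypothetical names, used for illustration only.
def downstream = build(
    job: 'downstream-job',
    parameters: [string(name: 'VERSION', value: '1.0.0')],
    propagate: false,   // do not fail this build automatically if the downstream build fails
    wait: true          // block until the downstream build completes
)

echo "downstream build #${downstream.number} finished with result ${downstream.result}"

// decide in the upstream pipeline how a downstream failure is reflected
if (downstream.result != 'SUCCESS') {
    currentBuild.result = 'UNSTABLE'
}
```

With propagate: false, the decision on how the downstream outcome affects the upstream build stays in the upstream pipeline code.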

junit

Jenkins understands the JUnit test report XML format (which is also used by TestNG). To use this feature, set up the build to run tests, which will generate their test reports into a local agent directory, then specify the path to the test reports in Ant glob syntax to the JUnit plugin pipeline step junit:

stage.junit '**/target/*-report/TEST-*.xml'

⚠️ Do not specify the path to a single test report file. The junit step will not load the file, even if it exists and it is a valid report, and will print an error message similar to:

[Pipeline] junit

Recording test results

No test report files were found. Configuration error?

Always use Ant glob syntax to specify how the report(s) are to be located:
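A minimal sketch of the glob form, assuming the reports are written under a reports directory in the workspace. The allowEmptyResults argument is optional and is shown only as one way to avoid failing the step when no report is found:

```groovy
node {
    // locate the report(s) with an Ant glob, even when a single file is expected
    junit(testResults: 'reports/**/*.xml', allowEmptyResults: true)
}
```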

Jenkins uses this step to ingest the test results, process them and provide historical test result trends, a web UI for viewing test reports, tracking failures, etc.

Both JUnit errors and failures are reported by the junit step as "failures", even if the JUnit XML report indicates both errors and failures. The following JUnit report:

<testsuite name="testsuite1" tests="2" errors="1" failures="1">

<testcase name="test1" classname="test1">

<error message="I have errored out"></error>

</testcase>

<testcase name="test2" classname="test2">

<failure message="I have failed"></failure>

</testcase>

</testsuite>

produces this Jenkins report:

The presence of at least one JUnit failure marks the corresponding stage, and the entire build as "UNSTABLE". The stage is rendered in the classical view and also in Blue Ocean in yellow:

Also see: Scripted Pipeline Failure Handling above.

checkout

The "checkout" step is provided by the pipeline "SCM" plugin.

Git Plugin

checkout([

$class: 'GitSCM',

branches: [[name: 'develop']],

doGenerateSubmoduleConfigurations: false,

extensions: [

[$class: 'GitLFSPull'],

[$class: 'CloneOption', noTags: true, reference: '', timeout: 40, depth: 1],

[$class: 'PruneStaleBranch']

],

submoduleCfg: [],

userRemoteConfigs: [

[url: 'git@github.com:some-org/some-project.git',

credentialsId: 'someCredId']

]

])

The simplest configuration that works:

checkout([

$class: 'GitSCM',

branches: [[name: 'master']],

userRemoteConfigs: [

[url: 'https://github.com/ovidiuf/playground.git']

]

])

The step checks out the repository into the current directory; it does not create a top-level directory ('some-project' in this case). .git will be created in the current directory. If the current directory is the workspace, .git will be created in the workspace root.

withCredentials

A step that allows using credentials defined in the Jenkins server. See:
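A sketch of a username/password binding, assuming a credentials entry with the hypothetical ID some-credentials-id exists on the Jenkins server and that the URL is a placeholder:

```groovy
node {
    // 'some-credentials-id' is a hypothetical credentials ID defined in Jenkins
    withCredentials([usernamePassword(
            credentialsId: 'some-credentials-id',
            usernameVariable: 'REPO_USER',
            passwordVariable: 'REPO_PASSWORD')]) {
        // single quotes: the shell, not Groovy, expands the variables,
        // which keeps the secret out of the interpolated Groovy string
        sh 'curl -u "$REPO_USER:$REPO_PASSWORD" -o artifact.zip https://example.com/artifact.zip'
    }
}
```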

Basic Steps

These basic steps are used by invoking them on stage. (as in stage.sh). In a Jenkinsfile, and inside a stage, invoke them on this. or simply invoke them directly, without a qualifier.

echo

echo "pod memory limit: ${params.POD_MEMORY_LIMIT_Gi}"

echo """

Run Configuration:

something: ${SOMETHING}

something else: ${SOMETHING_ELSE}

"""

error

This step signals an error and fails the pipeline.

error 'some message'

Alternatively, you can simply:

throw new Exception("some message")

stash
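A minimal sketch of stash and its counterpart unstash, used to move files between nodes. The node labels, the stash name and the target directory are hypothetical:

```groovy
node('builder') {
    sh 'make'
    // save files from this workspace under a name
    stash(name: 'build-output', includes: 'target/**')
}

node('tester') {
    // restore the stashed files into the current workspace, possibly on another node
    unstash 'build-output'
    sh 'ls target'
}
```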

input

In its basic form, renders a "Proceed"/"Abort" input box with a custom message. Selecting "Proceed" passes the control to the next step in the pipeline. Selecting "Abort" throws an org.jenkinsci.plugins.workflow.steps.FlowInterruptedException, which produces "gray" pipelines.

input(

id: 'Proceed1',

message: 'If the manual test is successful, select \'Proceed\'. Otherwise, you can abort the pipeline.'

)
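The step can also collect a value from the user. The sketch below assumes a single hypothetical choice parameter named TARGET_ENV; with exactly one parameter, the step returns the selected value directly:

```groovy
// TARGET_ENV and its choices are hypothetical, used for illustration only.
def targetEnv = input(
    id: 'ChooseEnv',
    message: 'Select the environment to deploy to',
    parameters: [
        choice(name: 'TARGET_ENV', choices: 'staging\nproduction', description: 'Deployment target')
    ]
)

echo "selected environment: ${targetEnv}"
```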

timeout

Upon timeout, an org.jenkinsci.plugins.workflow.steps.FlowInterruptedException is thrown from the closure that is being executed, and not from the timeout() invocation. The code shown below prints "A", "B", "D":

timeout(time: 5, unit: 'SECONDS') {

echo "A"

try {

echo "B"

doSomething(); // this step takes a very long time and will time out

echo "C"

}

catch(org.jenkinsci.plugins.workflow.steps.FlowInterruptedException e) {

// if this exception propagates up without being caught, the pipeline gets aborted

echo "D"

}

}

withEnv

Sets one or more environment variables within a block, making them available to any external process initiated within that scope. If a variable value contains spaces, it does not need to be quoted inside the sequence, as shown below:

node {

withEnv(['VAR_A=something', 'VAR_B=something else']) {

sh 'echo "VAR_A: ${VAR_A}, VAR_B: ${VAR_B}"'

}

}

catchError

catchError {

sh 'some-command-that-might-fail'

}

If the body throws an exception, mark the build as a failure, but continue to execute the pipeline from the statement following catchError step. If an exception is thrown, the behavior can be configured to:

- print a message

- set the build result other than failure

- change the stage result

- ignore certain kinds of exceptions that are used to interrupt the build

If catchError is used, there's no need for finally, as the exception is caught and does not propagate up.

The alternative is to use plain try/catch/finally blocks.

Configuration:

catchError(message: 'some message', stageResult: 'FAILURE'|'SUCCESS'|... , buildResult: 'FAILURE'|'SUCCESS'|..., catchInterruptions: true) {

sh 'some-command-that-might-fail'

}

- message: an optional String message that will be logged to the console. If the stage result is specified, the message will also be associated with that result and may be shown in visualizations.

- stageResult: an optional String that will be set as the stage result when an error is caught. Use SUCCESS or null to keep the stage result from being set when an error is caught.

- buildResult: an optional String that will be set as the overall build result when an error is caught. Note that the build result can only get worse, so you cannot change the result to SUCCESS if the current result is UNSTABLE or worse. Use SUCCESS or null to keep the build result from being set when an error is caught.

- catchInterruptions: if true, certain types of exceptions that are used to interrupt the flow of execution for Pipelines will be caught and handled by the step. If false, those types of exceptions will be caught and immediately rethrown. Examples of these types of exceptions include those thrown when a build is manually aborted through the UI and those thrown by the timeout step.

The default behavior for catchInterruptions is "true": the code executing inside catchError() will be interrupted, whether it is an external command or pipeline code, and the code immediately following catchError() closure is executed.

stage('stage1') {

catchError() {

sh 'jenkins/pipelines/failure/long-running'

}

print ">>>> post catchError()"

}

[Pipeline] stage

[Pipeline] { (stage1)

[Pipeline] catchError

[Pipeline] {

[Pipeline] sh

entering long running ....

sleeping for 60 secs

Aborted by ovidiu

Sending interrupt signal to process

jenkins/pipelines/failure/long-running: line 6: 5147 Terminated sleep ${sleep_secs}

done sleeping, exiting long running ....

[Pipeline] }

[Pipeline] // catchError

[Pipeline] echo

>>>> post catchError()

However, it seems that the same behavior occurs if catchError() is invoked with catchInterruptions: true, so it is not clear what the difference is.

Probably the safest way to invoke it is to use this pattern; this way we're sure that even if some exceptions bubble up, the cleanup work will be performed:

stage('stage1') {

try {

catchError() {

sh 'jenkins/pipelines/failure/long-running'

}

}

finally {

print ">>>> execute mandatory cleanup code"

}

}

Also see Scripted Pipeline Failure Handling section above.

Basic Steps that Deal with Files

dir

Change current directory, on the node, while the pipeline code runs on the master.

If the dir() argument is a relative directory, the new directory available to the code in the closure is relative to the current directory before the call, obtained with pwd():

dir("dirA") {

// execute in the context of pwd()/dirA, where pwd() is the current

// directory before the call and a subdirectory of the workspace

}

If the dir() argument is an absolute directory, the new directory available to the code in the closure is the absolute directory specified as argument:

dir("/tmp") {

// execute in /tmp

}

In both cases, the current directory is restored upon closure exit.

Also see:

deleteDir

To recursively delete a directory and all its contents, step into the directory with dir() and then use deleteDir():

dir('tmp') {

// ...

deleteDir()

}

This will delete ./tmp content and the ./tmp directory itself.

pwd

Return the current directory path on node as a string, while the pipeline code runs on the master.

Parameters:

tmp (boolean, optional) If selected, return a temporary directory associated with the workspace rather than the workspace itself. This is an appropriate place to put temporary files which should not clutter a source checkout; local repositories or caches; etc.

println "current directory: ${pwd()}"

println "temporary directory: ${pwd(tmp: true)}"

Also see:

readFile

Read a file from the workspace, on the node this operation is made in context of.

String versionFile = readFile("${stage.WORKSPACE}/terraform/my-module/VERSION")

If the file does not exist, the step throws java.nio.file.NoSuchFileException: /no/such/file.txt
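To avoid the exception, the read can be guarded with fileExists. This is a sketch that assumes the file path is relative to the current directory on the node:

```groovy
node {
    String versionPath = 'terraform/my-module/VERSION'

    if (fileExists(versionPath)) {
        // the file is present, so readFile will not throw NoSuchFileException
        String version = readFile(versionPath).trim()
        echo "version: ${version}"
    }
    else {
        // fail explicitly, with a message that names the missing file
        error "missing version file: ${versionPath}"
    }
}
```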

writeFile

writeFile will create any intermediate directory if necessary.

To create a directory, dir() into the nonexistent directory, then create a dummy file with writeFile(file: '.dummy', text: ''):

dir('tmp') {

writeFile(file: '.dummy', text: '')

}

Alternatively, the directory can be created with a shell command:

sh 'mkdir ./tmp'

Also see:

fileExists

Also see:

fileExists can be used on directories as well. This is how to check whether a directory exists:

dir('dirA') {

if (fileExists('/')) {

println "directory exists"

}

else {

println "directory does not exist"

}

}

fileExists('/'), fileExists('.') and fileExists('') are equivalent: they all check for the existence of the directory into which the last dir() stepped. The last form, fileExists(''), issues a warning, so it is not preferred:

The fileExists step was called with a null or empty string, so the current directory will be checked instead.

findFiles

Find files in workspace:

def files = findFiles(glob: '**/Test-*.xml', excludes: '')

Uses Ant style pattern: https://ant.apache.org/manual/dirtasks.html#patterns

Returns an array of instances for which the following attributes are available:

- name: the name of the file and extension, without any path component (e.g. "test1.bats")

- path: the relative path to the current directory set with dir(), including the name of the file (e.g. "dirA/subDirA/test1.bats")

- directory: a boolean which is true if the file is a directory, false otherwise.

- length: length in bytes.

- lastModified: the last modification time, in milliseconds since the Unix epoch (e.g. 1617772442000)

Example:

dir('test') {

def files = findFiles(glob: '**/*.bats')

for(def f: files) {

print "name: ${f.name}, path: ${f.path}, directory: ${f.directory}, length: ${f.length}, lastModified: ${f.lastModified}"

}

}

Playground:

Core

archiveArtifacts

Archives the build artifacts (for example, distribution zip files or jar files) so that they can be downloaded later. Archived files will be accessible from the Jenkins webpage. Normally, Jenkins keeps artifacts for a build as long as a build log itself is kept. Note that the Maven job type automatically archives any produced Maven artifacts. Any artifacts configured here will be archived on top of that. Automatic artifact archiving can be disabled under the advanced Maven options.
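A minimal sketch of the step, assuming the build writes jar files under a hypothetical target directory:

```groovy
node {
    sh 'make'

    archiveArtifacts(
        artifacts: 'target/*.jar',   // Ant-style pattern; the location is hypothetical
        fingerprint: true,           // also record fingerprints of the archived files
        allowEmptyArchive: false     // do not tolerate a pattern that matches nothing
    )
}
```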

fingerprint

Obtaining the Current Pipeline Build Number

def buildNumber = currentBuild.rawBuild.getNumber()
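As an alternative sketch, the build number is also exposed without going through rawBuild, whose use typically requires script approval when the pipeline runs in the Groovy sandbox:

```groovy
// currentBuild.number is the numeric build number of the current run
int buildNumber = currentBuild.number

// the same value is available as a String in the environment
echo "build number: ${buildNumber}, from environment: ${env.BUILD_NUMBER}"
```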

FlowInterruptedException

throw new FlowInterruptedException(Result.ABORTED)

String branch="..."

String projectName = JOB_NAME.substring(0, JOB_NAME.size() - JOB_BASE_NAME.size() - 1)

WorkflowMultiBranchProject project = Jenkins.instance.getItemByFullName("${projectName}")

if (project == null) {

...

}

WorkflowJob job = project.getBranch(branch)

if (job == null) {

...

}

WorkflowRun run = job.getLastSuccessfulBuild()

if (run == null) {

...

}

List<Run.Artifact> artifacts = run.getArtifacts()

...

Passing an Environment Variable from Downstream Build to Upstream Build

Upstream build:

...

def result = build(job: jobName, parameters: params, quietPeriod: 0, propagate: true, wait: true);

result.getBuildVariables()["SOME_VAR"]

...

Downstream build:

env.SOME_VAR = "something"

@NonCPS
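A minimal sketch of a method annotated with @NonCPS. The method name extractVersion and the version= text format are hypothetical. The annotation is commonly used for code that handles non-serializable objects, such as java.util.regex.Matcher, and the annotated method should not call pipeline steps:

```groovy
// extractVersion and the 'version=' format are hypothetical, for illustration only.
@NonCPS
String extractVersion(String text) {
    // java.util.regex.Matcher is not Serializable, so it is kept inside a
    // method that is not CPS-transformed and never has to be saved mid-execution
    def matcher = (text =~ /version=(\S+)/)
    return matcher.find() ? matcher.group(1) : null
}

echo "version: ${extractVersion('name=app version=1.2.3')}"
```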

Build Summary

//

// write /tmp/summary-section-1.html

//

def summarySection1 = util.catFile('/tmp/summary-section-1.html')

if (summarySection1) {

def summary = manager.createSummary('document.png')

summary.appendText(summarySection1, false)

}

//

// write /tmp/summary-section-2.html

//

def summarySection2 = util.catFile('/tmp/summary-section-2.html')

if (summarySection2) {

def summary = manager.createSummary('document.png')

summary.appendText(summarySection2, false)

}

Dynamically Loaded Classes and Constructors

If classes are loaded dynamically in the Jenkinsfile, do not use constructors and new. Use MyClass.newInstance(...).

Fail a Build

See error above.

Dynamically Loading Groovy Code from Repository into a Pipeline

This playground example shows how to dynamically load Groovy classes stored in a GitHub repository into a pipeline.

The example is not complete, in that invocation of a static method from Jenkinsfile does not work yet.

Groovy on Jenkins Idiosyncrasies

Prefix Static Method Invocations with Declaring Class Name when Calling from Subclass

Prefix the static method calls with the class name that declares them when calling from a subclass, otherwise you'll get a:

hudson.remoting.ProxyException: groovy.lang.MissingMethodException: No signature of method: java.lang.Class.locateOverlay() is applicable for argument types: (WorkflowScript, playground.jenkins.kubernetes.KubernetesCluster, playground.jenkins.PlatformVersion, java.lang.String, java.lang.String) values: [WorkflowScript@1db9ab90, <playground.jenkins.kubernetes.KubernetesCluster@376dc438>, ...]
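The following sketch illustrates the prefixing rule. OverlayLocator and PlatformOverlay are hypothetical classes, not the ones from the stack trace above:

```groovy
// OverlayLocator and PlatformOverlay are hypothetical classes, for illustration only.
class OverlayLocator {
    static String locateOverlay(String name) {
        return "overlays/${name}"
    }
}

class PlatformOverlay extends OverlayLocator {
    String resolve(String name) {
        // qualified with the declaring class, instead of a bare locateOverlay(name) call
        return OverlayLocator.locateOverlay(name)
    }
}
```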