Kubernetes Control Plane and Data Plane Concepts

| (55 intermediate revisions by the same user not shown) | |||

| Line 3: | Line 3: | ||

=Internal= | =Internal= | ||

* [[Kubernetes Concepts# | * [[Kubernetes Concepts#Subjects|Kubernetes Concepts]] | ||

=Overview= | =Overview= | ||

When Kubernetes | When you deploy Kubernetes, you get a [[#Cluster|cluster]]. | ||

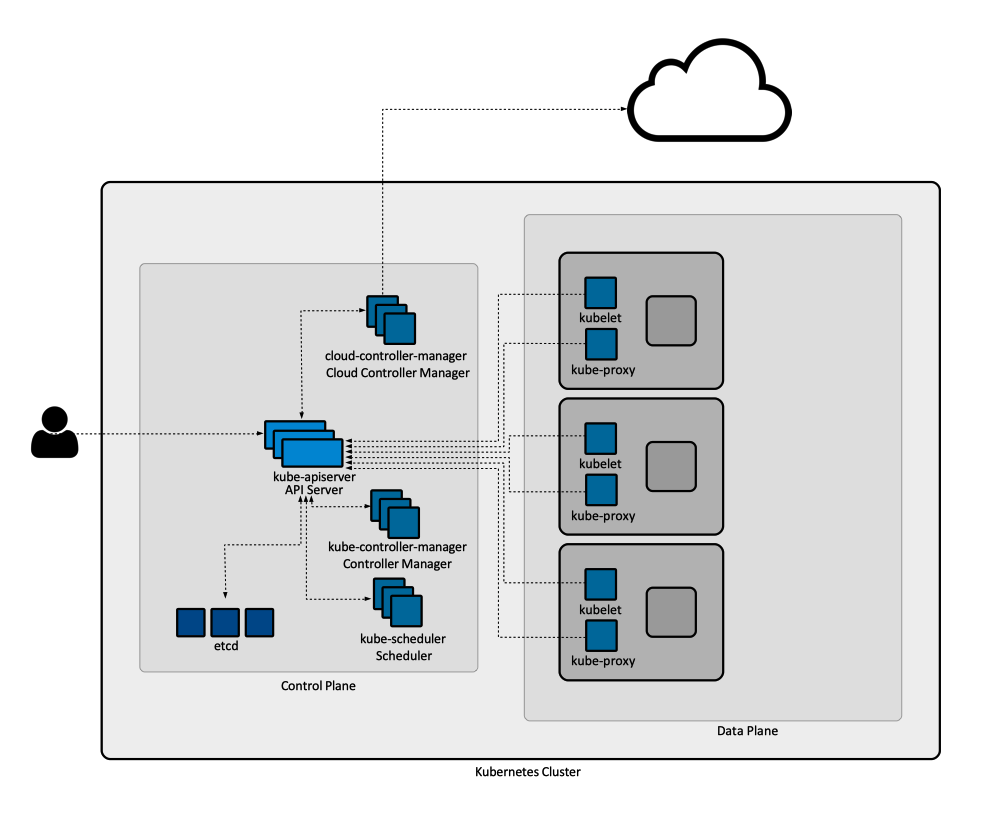

::::[[File:Kubernetes_Cluster.png]] | ::::[[File:Kubernetes_Cluster.png]] | ||

| Line 13: | Line 13: | ||

=Cluster= | =Cluster= | ||

A Kubernetes cluster consists of a set of [[#Node|nodes]], which all run containerized applications. Of those, a small number are running applications that manage the cluster. They are referred to as [[#Master_Node|master nodes]], also collectively known as the [[#Control_Plane|control plane]]. The rest of the nodes, a potentially relatively larger number, but at least one, are the [[#Worker_Node|worker nodes]]. | A Kubernetes cluster consists of a set of [[#Node|nodes]], which all run containerized applications. Of those, a small number are running applications that manage the cluster. They are referred to as [[#Master_Node|master nodes]], also collectively known as the [[#Control_Plane|control plane]]. The rest of the nodes, a potentially relatively larger number, but at least one, are the [[#Worker_Node|worker nodes]]. Kubernetes cluster with zero workers nodes are possible, and had been seen, but they are quite useless. The worker nodes run the cluster's workload and are collectively known as the [[#Data_Plane|data plane]]. | ||

=Node= | =Node= | ||

{{External|https://kubernetes.io/docs/concepts/architecture/nodes/}} | {{External|https://kubernetes.io/docs/concepts/architecture/nodes/}} | ||

A node is a Linux host that can run as a VM, a bare-metal device or an instance in a private or public cloud. A node can be a [[#Master_Node|master]] or [[#Worker_Node|worker]]. In most cases, the term "node" means [[#Worker_Node|worker node]]. | A node is a Linux host that can run as a VM, a bare-metal device or an instance in a private or public cloud. A node can be a [[#Master_Node|master]] or [[#Worker_Node|worker]]. In most cases, the term "node" means [[#Worker_Node|worker node]]. The [[#Controller_Manager|controller manager]] includes a [[#Node_Controller|node controller]]. [[Kubernetes_Pod_and_Container_Concepts#Pods_and_Nodes|Pods]] are scheduled on nodes. Each Kubernetes node runs a standard set of node components: the [[#kubelet|kubelet]], the [[#kube-proxy|kube-proxy]] and the [[#Container_Runtime|container runtime]]. | ||

====kubelet==== | ====kubelet==== | ||

Each node runs an agent called kubelet: {{Internal|kubelet|kubelet}} | |||

Each node runs | ====<span id='Kube-proxy'></span>kube-proxy==== | ||

Each node runs a process called kube-proxy that is involved in establishing a cluster-wide virtual network across nodes: {{Internal|kube-proxy|kube-proxy}} | |||

====Container Runtime==== | ====Container Runtime==== | ||

{{Internal|Kubernetes_Container_Runtime_Concepts#Container_Runtime|Container Runtime}} | {{Internal|Kubernetes_Container_Runtime_Concepts#Container_Runtime|Container Runtime}} | ||

=Control Plane= | =Control Plane= | ||

The control plane is the collective name for a cluster's [[#Master_Node|master nodes]]. The control plane consists of cluster control components that expose APIs and interfaces to define, deploy, and manage the lifecycle of containers. The control plane exposes the API via the [[#API_Server|API Server]] and contains the [[#Cluster_Store|cluster store]], which stores state in [[Etcd|etcd]], the [[#Controller_Manager|controller manager]], the [[#Cloud_Controller_Manager|cloud controller manager]], the [[#Scheduler|scheduler]] and other management components. The control plane makes workload scheduling decisions, performs monitoring and responds to external and internal events. These components can be run as traditional operating system services (daemons) or as containers. In production environments, the control plane usually runs across multiple computers, providing fault-tolerance and high availability. | |||

The control plane is the collective name for a cluster's [[#Master_Node|master nodes]]. The control plane consists of cluster control components. The control plane exposes the API via the [[#API_Server|API Server]] and contains the [[#Cluster_Store|cluster store]], which stores state in [[Etcd|etcd]], [[#Controller_Manager|controller manager]], [[#Cloud_Controller_Manager|cloud controller manager]], [[#Scheduler|scheduler]] and other management components. The control plane makes workload scheduling decisions, performs monitoring and responds to external and internal events. These components can be run as traditional operating system services (daemons) or as containers. In production environments, the control plane usually runs across multiple computers, providing fault-tolerance and high availability. | |||

==<span id='Master'></span>Master Node== | ==<span id='Master'></span>Master Node== | ||

A master node is a collection of system services that manage the Kubernetes cluster. The master nodes are sometimes called heads or head nodes, and most often simply masters. Collectively, they represent the [[#Control_Plane|control plane]]. While it is possible to execute user workloads on the master node, this is generally not recommended. This approach frees up the master nodes' resources to be exclusively used for cluster management activities. | A master [[#Node|node]] is a collection of system services that manage the Kubernetes cluster. The master nodes are sometimes called heads or head nodes, and most often simply masters or control plane nodes. Collectively, they represent the [[#Control_Plane|control plane]]. While it is possible to execute user workloads on the master node, this is generally not recommended. This approach frees up the master nodes' resources to be exclusively used for cluster management activities. | ||

===HA Master Nodes=== | ===HA Master Nodes=== | ||

| Line 47: | Line 41: | ||

==<span id='Control_Plane_Components'></span>Control Plane System Services== | ==<span id='Control_Plane_Components'></span>Control Plane System Services== | ||

===API Server=== | ===<span id='API_Server'></span>API Server <tt>kube-apiserver</tt>=== | ||

{{External| | {{External|https://kubernetes.io/docs/concepts/overview/components/#kube-apiserver}} | ||

The API server is the control plane front-end service. All components | The API server is the control plane front-end service. All components - internal system components and external user components - communicate exclusively via the API Server and use the same API. The most common operation is to POST a [[Kubernetes Manifests|manifest]] as part of a REST API invocation - once the invocation is authenticated and authorized, the manifest content is validated, then persisted into the [[#Cluster_Store|cluster store]] and various controllers kick in to insure that the cluster state matches the desired state expressed in the manifest. The API Server runtime is represented by the <code>kube-apiserver</code> binary. The API server has [[#Admission_Controllers|admission controllers]] compiled into its binary. The clients sending API requests into the server can use different [[Kubernetes_Security_Concepts#API_Authentication_Strategies|authentication strategies]]. The API server can be accessed from inside pods as https://kubernetes.default. | ||

====Admission Controllers==== | ====Admission Controllers==== | ||

An admission controller is a piece of code that intercepts requests to the API Server prior to persistence of the object, but after the request is authenticated and authorized. Some of these controllers allow dynamic admission control, implemented as extensible functionality that can run as webhooks configured at runtime. More details: {{Internal|Kubernetes Admission Controller Concepts#Overview|Admission Controller Concepts}} | |||

====API Server Endpoints==== | |||

{{Internal|Kubernetes API Server Endpoints|API Server Endpoints}} | |||

===Cluster Store=== | ===Cluster Store=== | ||

| Line 61: | Line 57: | ||

The cluster store is the service that persistently stores the entire configuration and state of the [[#Cluster|cluster]]. The cluster store is the only stateful part of the [[#Control_Plane|control plane]], and the single source of truth for the cluster. The current implementation is based on [[Etcd Concepts#Overview|etcd]]. Productions deployments run in a [[#HA_Master_Nodes|HA configuration]], with 3 - 5 replicas. etcd prefers consistence over availability, and it will halt updates to the cluster in split brain situations to maintain consistency. However, if etcd becomes unavailable, the applications running on the cluster will continue to work. | The cluster store is the service that persistently stores the entire configuration and state of the [[#Cluster|cluster]]. The cluster store is the only stateful part of the [[#Control_Plane|control plane]], and the single source of truth for the cluster. The current implementation is based on [[Etcd Concepts#Overview|etcd]]. Productions deployments run in a [[#HA_Master_Nodes|HA configuration]], with 3 - 5 replicas. etcd prefers consistence over availability, and it will halt updates to the cluster in split brain situations to maintain consistency. However, if etcd becomes unavailable, the applications running on the cluster will continue to work. | ||

===Controller Manager=== | Also see: {{Internal|etcd|etcd}} | ||

===<span id='Controller_Manager'></span>Controller Manager <tt>kube-controller-manager</tt>=== | |||

{{External|https://kubernetes.io/docs/reference/command-line-tools-reference/kube-controller-manager}} | {{External|https://kubernetes.io/docs/reference/command-line-tools-reference/kube-controller-manager}} | ||

{{External|https://kubernetes.io/docs/concepts/architecture/controller/}} | {{External|https://kubernetes.io/docs/concepts/architecture/controller/}} | ||

The controller manager implements multiple specialized and independent control loops that monitor the state of the cluster and respond to events, insured that the current state of the cluster matches the desired state, as declared to the API server, thus implementing the [[Kubernetes_Concepts#Declarative_versus_Imperative_Approach|declarative approach]] to operations. The controller manager is shipped as a monolithic binary, usually named <code>kube-controller-manager</code>, which exists either as a pod in the "kube-system" namespace or a process on one of the control plane nodes. The controller manager is a "controller of controllers", providing the functionality of a [[#Node_Controller|node controller]], [[#Endpoints_Controller|endpoints controller]], a [[#Replicaset_Controller|replicaset controller]], a [[#Persistent_Volume_Controller|persistent volume controller]] and a [[#Horizontal_Pod_Autoscaler_Controller|horizontal pod autoscaler controller]]. <span id='Control_Loop'></span>The logic implemented by each [[Kubernetes_Concepts#Control_Loop|control loop]] consists of obtaining the desired state, observing the current state, determining differences and, if differences exist, reconciling differences. | |||

====Node Controller==== | |||

====Endpoints Controller==== | |||

{{Internal|Kubernetes_Service_Concepts#Endpoints_Controller|Endpoints Controller}} | |||

< | ====Replicaset Controller==== | ||

{{Internal|Kubernetes_ReplicaSet#Replicaset_Controller|Replicaset Controller}} | |||

====Persistent Volume Controller==== | |||

{{Internal|Kubernetes_Storage_Concepts#Persistent_Volume_Controller|Persistent Volume Controller}} | |||

====Horizontal Pod Autoscaler Controller==== | |||

{{Internal|Kubernetes_Horizontal_Pod_Autoscaler#Horizontal_Pod_Autoscaler_Controller|Horizontal Pod Autoscaler Controller}} | |||

====Ingress Controller==== | |||

<font color=darkgray>Does it belong here?</font> | |||

===Cloud Controller Manager=== | ===Cloud Controller Manager=== | ||

{{External| | {{External|https://kubernetes.io/docs/concepts/architecture/cloud-controller/}} | ||

The cloud controller manager is a | The cloud controller manager is a Kubernetes control plane component that embeds cloud-specific control logic and manages integration with the underlying cloud technology and services such as storage and load balancers. It is only present if Kubernetes runs on a cloud like AWS, Azure or GCP. The cloud controller manager lets you link the Kubernetes cluster into the cloud provider's API, and separates out the components that interact with that cloud platform from components that only interact with your cluster. By decoupling the interoperability logic between Kubernetes and the underlying cloud infrastructure, the cloud controller manager component enables cloud providers to release features at a different pace compared to the main Kubernetes project. The cloud controller manager is structured using a plugin mechanism that allows different cloud providers to integrate their platforms with Kubernetes. | ||

===Scheduler=== | ===Scheduler=== | ||

The scheduler is a system service whose job is to distribute [[Kubernetes_Pod_and_Container_Concepts#Deployment|pods]] to [[#Worker_Node|nodes]] for execution. For more details see: {{Internal|Kubernetes_Scheduling,_Preemption_and_Eviction_Concepts#Kubernetes_Scheduler|Kubernetes Scheduling, Preemption and Eviction Concepts | Kubernetes Scheduler}} | |||

The scheduler is a system service whose job is to distribute [[Kubernetes_Pod_and_Container_Concepts#Deployment|pods]] to [[#Worker_Node|nodes]] for execution. | |||

=Data Plane= | =Data Plane= | ||

The data plane, also known as the application plane, is the layer that provides capacity such as CPU, memory, network, and storage so that the containers can run and connect to a network. It consists in a set of [[#Worker_Node|worker nodes]]. | |||

==Worker Node== | ==Worker Node== | ||

A worker node, most often referred simply as "node" (as opposite to [[#Master|master]]), is where the application services run. Collectively, the worker nodes make up the [[#Data_Plane|data plane]]. A worker node constantly watches for new work assignments, which materialize in the form of [[Kubernetes_Pod_and_Container_Concepts#Pod|pods]], which are the components of the application workload. | A worker [[#Node|node]], most often referred simply as "node" (as opposite to control plane node or [[#Master|master]]), is where the application services run. Collectively, the worker nodes make up the [[#Data_Plane|data plane]]. A worker node constantly watches for new work assignments, which materialize in the form of [[Kubernetes_Pod_and_Container_Concepts#Pod|pods]], which are the components of the application workload. A Kubernetes cluster can be operational, in the sense that its API server can answer requests, without any worker node. However, that cluster will not be very useful, as it will not be capable of scheduling workloads. | ||

Latest revision as of 19:22, 6 December 2023

External

Internal

Kubernetes Concepts

Overview

When you deploy Kubernetes, you get a cluster.

[Figure: Kubernetes cluster (Kubernetes_Cluster.png)]

Cluster

A Kubernetes cluster consists of a set of nodes, all of which run containerized applications. A small number of them run the applications that manage the cluster. These are referred to as master nodes, and they are collectively known as the control plane. The rest of the nodes, usually a larger number, are the worker nodes. A cluster with zero worker nodes is possible, and such clusters do exist, but they are of little use, since the worker nodes are where the workload normally runs. The worker nodes run the cluster's workload and are collectively known as the data plane.
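The split between control plane and worker nodes is visible on the Node objects themselves. The sketch below groups nodes into the two planes. It assumes the Node objects are available as plain dictionaries (for example, parsed from `kubectl get nodes -o json`) and that control plane nodes carry the `node-role.kubernetes.io/control-plane` label, as kubeadm applies it; older clusters may use `node-role.kubernetes.io/master`, and other installers may label nodes differently.

```python
# Role labels assumed to mark control plane nodes (kubeadm convention;
# other installers may use different labels or none at all).
CONTROL_PLANE_LABELS = (
    "node-role.kubernetes.io/control-plane",
    "node-role.kubernetes.io/master",
)


def split_planes(nodes: list[dict]) -> tuple[list[str], list[str]]:
    """Return (control_plane_node_names, worker_node_names)."""
    control_plane, workers = [], []
    for node in nodes:
        meta = node.get("metadata", {})
        labels = meta.get("labels", {})
        # A node counts as control plane if it carries any of the role labels.
        if any(label in labels for label in CONTROL_PLANE_LABELS):
            control_plane.append(meta["name"])
        else:
            workers.append(meta["name"])
    return control_plane, workers


if __name__ == "__main__":
    nodes = [
        {"metadata": {"name": "cp-1", "labels": {"node-role.kubernetes.io/control-plane": ""}}},
        {"metadata": {"name": "worker-1", "labels": {"kubernetes.io/os": "linux"}}},
        {"metadata": {"name": "worker-2"}},
    ]
    print(split_planes(nodes))  # (['cp-1'], ['worker-1', 'worker-2'])
```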

Node

External: https://kubernetes.io/docs/concepts/architecture/nodes/

A node is a host, usually running Linux, that can be a VM, a bare-metal machine or an instance in a private or public cloud (worker nodes can also run Windows). A node can be a master or a worker. In most cases, the term "node" means worker node. Pods are scheduled onto nodes, and the controller manager includes a node controller that monitors them. Each Kubernetes node runs a standard set of node components: the kubelet, kube-proxy and the container runtime.
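The kubelet reports each node's health back to the API server as conditions in the status of the Node object, and the `Ready` condition summarizes whether the node can accept pods. A minimal sketch of checking it, assuming the Node object is a parsed JSON dictionary:

```python
def is_node_ready(node: dict) -> bool:
    """Return True if the node reports a Ready condition with status "True"."""
    for condition in node.get("status", {}).get("conditions", []):
        if condition.get("type") == "Ready":
            # The status is the string "True", "False" or "Unknown".
            return condition.get("status") == "True"
    # No Ready condition reported yet: treat the node as not ready.
    return False


if __name__ == "__main__":
    node = {
        "metadata": {"name": "worker-1"},
        "status": {"conditions": [
            {"type": "MemoryPressure", "status": "False"},
            {"type": "Ready", "status": "True"},
        ]},
    }
    print(is_node_ready(node))  # True
```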

kubelet

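Each node runs an agent called the kubelet, which registers the node with the API server, watches for the pods assigned to that node and makes sure their containers are running and healthy. More details: kubelet.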

kube-proxy

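Each node runs a network proxy called kube-proxy, which maintains network rules on the node (for example, iptables or IPVS rules) so that traffic sent to a Service's virtual IP address reaches one of the pods backing that Service. Pod-to-pod connectivity across nodes is provided by the cluster's network (CNI) plugin rather than by kube-proxy. More details: kube-proxy.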
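What kube-proxy maintains can be pictured as a per-node table from a Service's virtual IP and port to the current set of backend pod addresses. The toy model below is only an illustration (the `ServiceRules` class is hypothetical); real kube-proxy programs kernel rules such as iptables or IPVS and does not pick backends in user space in those modes.

```python
import random
from dataclasses import dataclass, field


@dataclass
class ServiceRules:
    """Toy model of the forwarding table kube-proxy derives from Services
    and their endpoints on one node."""
    # (service virtual IP, port) -> list of "podIP:port" backends
    table: dict[tuple[str, int], list[str]] = field(default_factory=dict)

    def sync(self, vip: str, port: int, endpoints: list[str]) -> None:
        """Replace the backends of one Service port with the current endpoints."""
        if endpoints:
            self.table[(vip, port)] = list(endpoints)
        else:
            # In this toy model, a Service without endpoints has no entry.
            self.table.pop((vip, port), None)

    def route(self, vip: str, port: int) -> str:
        """Pick the backend that a new connection to vip:port is sent to."""
        backends = self.table.get((vip, port))
        if not backends:
            raise LookupError(f"no endpoints for {vip}:{port}")
        # Uniform random choice, similar in spirit to the iptables mode.
        return random.choice(backends)


if __name__ == "__main__":
    rules = ServiceRules()
    rules.sync("10.96.0.10", 80, ["10.244.1.5:8080", "10.244.2.7:8080"])
    print(rules.route("10.96.0.10", 80))
```

The addresses in the usage example are made up; every call to `sync` mirrors kube-proxy reacting to a change in a Service's endpoints.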

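Container Runtime

The container runtime is the software that actually runs the containers, for example containerd or CRI-O. The kubelet drives it through the Container Runtime Interface (CRI). More details: Container Runtime.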

Control Plane

The control plane is the collective name for a cluster's master nodes. It consists of the cluster control components, which expose APIs and interfaces to define, deploy and manage the lifecycle of containers. The control plane exposes the Kubernetes API through the API Server and also contains the cluster store (which keeps state in etcd), the controller manager, the cloud controller manager, the scheduler and other management components. The control plane makes workload scheduling decisions, monitors the cluster and responds to external and internal events. Its components can run as traditional operating system services (daemons) or as containers. In production environments, the control plane usually runs across multiple machines, which provides fault tolerance and high availability.

Master Node

A master node is a node that runs the system services that manage the Kubernetes cluster. Master nodes are sometimes called heads or head nodes, most often simply masters, and, in current Kubernetes documentation, control plane nodes. Collectively, they make up the control plane. While it is possible to run user workloads on master nodes, this is generally not recommended: keeping user workloads off them leaves their resources exclusively for cluster management activities.
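Clusters built with kubeadm typically keep user workloads off control plane nodes by tainting them with `node-role.kubernetes.io/control-plane:NoSchedule`, so that only pods carrying a matching toleration can be scheduled there. The sketch below is a simplified model of the documented taint and toleration matching rules, written for illustration only: it is not the scheduler's code, and it ignores `PreferNoSchedule` and toleration timeouts.

```python
def toleration_matches(toleration: dict, taint: dict) -> bool:
    """Simplified taint/toleration matching."""
    operator = toleration.get("operator", "Equal")  # Equal is the default operator
    key = toleration.get("key", "")
    effect = toleration.get("effect", "")
    # An empty effect in the toleration matches every effect.
    if effect and effect != taint.get("effect"):
        return False
    # An empty key with operator Exists tolerates every taint.
    if not key:
        return operator == "Exists"
    if key != taint.get("key"):
        return False
    if operator == "Exists":
        return True
    return toleration.get("value", "") == taint.get("value", "")


def can_schedule(pod_tolerations: list[dict], node_taints: list[dict]) -> bool:
    """A pod fits only if every NoSchedule/NoExecute taint is tolerated."""
    return all(
        any(toleration_matches(tol, taint) for tol in pod_tolerations)
        for taint in node_taints
        if taint.get("effect") in ("NoSchedule", "NoExecute")
    )


if __name__ == "__main__":
    cp_taints = [{"key": "node-role.kubernetes.io/control-plane", "effect": "NoSchedule"}]
    print(can_schedule([], cp_taints))  # False: ordinary pods stay off
    tolerations = [{"key": "node-role.kubernetes.io/control-plane",
                    "operator": "Exists", "effect": "NoSchedule"}]
    print(can_schedule(tolerations, cp_taints))  # True
```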

HA Master Nodes

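The recommended configuration includes 3 or 5 replicated masters. An odd number is preferred because etcd needs a majority (quorum) of its members to accept writes: going from 3 to 4 members does not increase the number of member failures etcd can survive.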
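The arithmetic behind the 3-or-5 recommendation follows from etcd's majority rule: a cluster of n members needs floor(n/2) + 1 of them to make progress, and it tolerates the failure of the rest. A minimal sketch of that calculation:

```python
def quorum(members: int) -> int:
    """Smallest majority of an etcd cluster with the given number of members."""
    return members // 2 + 1


def failure_tolerance(members: int) -> int:
    """How many members can fail while a majority is still available."""
    return members - quorum(members)


if __name__ == "__main__":
    for n in range(1, 8):
        print(f"{n} members: quorum {quorum(n)}, tolerates {failure_tolerance(n)} failure(s)")
```

With 3 members the quorum is 2 and one failure is tolerated; with 4 members the quorum rises to 3 but still only one failure is tolerated; 5 members tolerate two.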

Control Plane System Services

API Server kube-apiserver

External: https://kubernetes.io/docs/concepts/overview/components/#kube-apiserver

The API server is the control plane front-end service. All components, both internal system components and external user components, communicate exclusively through the API Server and use the same API. The most common operation is to POST a manifest as part of a REST API invocation. Once the invocation is authenticated and authorized, the request passes through admission control, the manifest content is validated and the resulting object is persisted into the cluster store; various controllers then kick in to ensure that the cluster state matches the desired state expressed in the manifest. The API Server runtime is the kube-apiserver binary, which has the built-in admission controllers compiled into it. Clients sending API requests to the server can use different authentication strategies. From inside pods, the API server can be reached at https://kubernetes.default.
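The order in which a create request is processed (authentication, authorization, mutating admission, validation, validating admission, persistence) can be sketched as a chain of stages, any of which may abort the request. Everything in this sketch is hypothetical: `handle_create`, `Rejected` and the stage callables are illustrative stand-ins, and the `store` dictionary plays the role of the cluster store.

```python
from typing import Callable


class Rejected(Exception):
    """Raised when a stage refuses the request."""


def handle_create(request: dict,
                  authenticate: Callable[[dict], str],
                  authorize: Callable[[str, dict], bool],
                  mutating_admission: list[Callable[[dict], dict]],
                  validate: Callable[[dict], None],
                  validating_admission: list[Callable[[dict], None]],
                  store: dict) -> dict:
    """Run a create request through the stages in order; any stage may abort it."""
    user = authenticate(request)             # 1. who is calling?
    if not authorize(user, request):         # 2. may this user do this?
        raise Rejected(f"{user} is not allowed to create this object")
    obj = request["body"]
    for plugin in mutating_admission:        # 3. mutating admission may modify the object
        obj = plugin(obj)
    validate(obj)                            # 4. schema and field validation
    for plugin in validating_admission:      # 5. validating admission may only accept or reject
        plugin(obj)
    key = (obj["kind"], obj["metadata"]["name"])
    store[key] = obj                         # 6. persist to the cluster store
    return obj
```

Keeping mutation before validation means that whatever the mutating plugins change is still checked before it is stored.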

Admission Controllers

An admission controller is a piece of code that intercepts requests to the API Server after the request has been authenticated and authorized, but before the object is persisted. Some admission controllers enable dynamic admission control: extensible functionality implemented as webhooks (MutatingAdmissionWebhook and ValidatingAdmissionWebhook) that are configured at runtime. More details: Admission Controller Concepts.
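A dynamic admission webhook is an HTTPS service that the API server calls with an `AdmissionReview` object. The sketch below covers only the decision logic of a mutating webhook (no HTTP server or TLS): it allows every object and adds a label through a base64-encoded JSON Patch. The label name `example.com/mutated` is hypothetical.

```python
import base64
import json


def mutate(review: dict) -> dict:
    """Build an admission.k8s.io/v1 AdmissionReview response that allows
    the object and adds a (hypothetical) label via JSON Patch."""
    request = review["request"]
    labels = request["object"].get("metadata", {}).get("labels")
    if labels is None:
        # JSON Patch "add" cannot create intermediate paths, so add the whole map.
        patch = [{"op": "add", "path": "/metadata/labels",
                  "value": {"example.com/mutated": "true"}}]
    else:
        # A "/" inside a key is escaped as "~1" in a JSON Pointer.
        patch = [{"op": "add", "path": "/metadata/labels/example.com~1mutated",
                  "value": "true"}]
    return {
        "apiVersion": "admission.k8s.io/v1",
        "kind": "AdmissionReview",
        "response": {
            "uid": request["uid"],  # must echo the uid of the request
            "allowed": True,
            "patchType": "JSONPatch",
            "patch": base64.b64encode(json.dumps(patch).encode()).decode(),
        },
    }
```

A validating webhook would return the same envelope without `patch` and `patchType`, setting `allowed` to false to reject the object.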

API Server Endpoints

More details: Kubernetes API Server Endpoints.

Cluster Store

The cluster store is the service that persistently stores the entire configuration and state of the cluster. It is the only stateful part of the control plane and the single source of truth for the cluster. The current implementation is based on etcd. Production deployments run it in an HA configuration, with 3 to 5 replicas. etcd prefers consistency over availability: in a split-brain situation it halts updates to the cluster rather than risk inconsistent state. However, if etcd becomes unavailable, the applications already running on the cluster continue to work, although no changes can be made to the cluster state until etcd recovers.

Also see: etcd.

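Controller Manager kube-controller-manager

External: https://kubernetes.io/docs/reference/command-line-tools-reference/kube-controller-manager

External: https://kubernetes.io/docs/concepts/architecture/controller/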

The controller manager implements multiple specialized and independent control loops that monitor the state of the cluster and respond to events, ensuring that the current state of the cluster matches the desired state declared to the API server. This is how Kubernetes implements the declarative approach to operations. The controller manager is shipped as a single monolithic binary, usually named kube-controller-manager, which runs either as a pod in the "kube-system" namespace or as a process on the control plane nodes. It is a "controller of controllers", providing the functionality of a node controller, an endpoints controller, a replicaset controller, a persistent volume controller and a horizontal pod autoscaler controller, among others. The logic implemented by each control loop consists of obtaining the desired state, observing the current state, determining the differences and, if differences exist, reconciling them.
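The four steps of a control loop (obtain desired state, observe current state, determine differences, reconcile) can be sketched generically as below. This is a simplified polling illustration with hypothetical function names; real Kubernetes controllers are driven by watch events and work queues rather than a fixed polling interval.

```python
import time
from typing import Callable

State = dict[str, dict]   # object name -> spec
Action = tuple[str, str]  # (verb, object name)


def diff_states(desired: State, observed: State) -> list[Action]:
    """Determine the actions needed to move observed state toward desired state."""
    actions = []
    for name, spec in desired.items():
        if name not in observed:
            actions.append(("create", name))
        elif observed[name] != spec:
            actions.append(("update", name))
    for name in observed:
        if name not in desired:
            actions.append(("delete", name))
    return actions


def control_loop(get_desired: Callable[[], State],
                 get_observed: Callable[[], State],
                 apply: Callable[[Action], None],
                 interval: float = 1.0,
                 iterations: int = 3) -> None:
    for _ in range(iterations):
        desired = get_desired()    # 1. obtain the desired state
        observed = get_observed()  # 2. observe the current state
        for action in diff_states(desired, observed):  # 3. determine differences
            apply(action)          # 4. reconcile
        time.sleep(interval)
```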

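Node Controller

The node controller monitors node heartbeats, marks a node as not ready or unreachable when its kubelet stops reporting, and triggers the eviction of the pods running on that node.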

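Endpoints Controller

The endpoints controller keeps the Endpoints object of each Service in sync with the IP addresses of the pods selected by the Service. More details: Endpoints Controller.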
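The endpoints controller's job, keeping a Service's endpoint list in sync with the ready pods its selector matches, can be sketched as a pure function over pod objects. This is a simplification under stated assumptions: pods are parsed JSON dictionaries, only ready pods are returned (the real controller also tracks not-ready addresses separately), and ports are ignored.

```python
def compute_endpoints(selector: dict[str, str], pods: list[dict]) -> list[str]:
    """Return the IPs of ready pods whose labels match the Service selector."""
    if not selector:
        # Services without a selector are not managed by the endpoints controller.
        return []
    ips = []
    for pod in pods:
        labels = pod.get("metadata", {}).get("labels", {})
        # A pod matches when it carries every key/value pair of the selector.
        if not all(labels.get(k) == v for k, v in selector.items()):
            continue
        status = pod.get("status", {})
        ready = any(c.get("type") == "Ready" and c.get("status") == "True"
                    for c in status.get("conditions", []))
        if ready and status.get("podIP"):
            ips.append(status["podIP"])
    return sorted(ips)
```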

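Replicaset Controller

The replicaset controller creates or deletes pods so that the number of pods owned by each ReplicaSet matches its declared replica count. More details: Replicaset Controller.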
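The reconciliation step of the replicaset controller reduces to comparing a desired count with the pods that actually exist. A minimal sketch with a hypothetical `replicaset_actions` helper; the real controller ranks deletion candidates (for example, preferring pods that are not ready) instead of simply taking the last ones.

```python
def replicaset_actions(desired_replicas: int, pod_names: list[str]) -> tuple[int, list[str]]:
    """Return (number_of_pods_to_create, names_of_pods_to_delete)."""
    if desired_replicas < 0:
        raise ValueError("replica count cannot be negative")
    missing = desired_replicas - len(pod_names)
    if missing > 0:
        return missing, []
    # Zero or more surplus pods: this sketch deletes the ones past the desired count.
    return 0, pod_names[desired_replicas:]
```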

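Persistent Volume Controller

The persistent volume controller binds PersistentVolumeClaims to matching PersistentVolumes and handles volumes once their claims are released. More details: Persistent Volume Controller.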
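The binding step can be sketched as picking, among unbound volumes of the same storage class that offer the requested size and access modes, the smallest one, so that large volumes are not wasted on small claims. The `Volume` and `Claim` classes are hypothetical simplifications of the real API objects, and dynamic provisioning is left out.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Volume:
    name: str
    capacity_bytes: int
    access_modes: frozenset[str]
    storage_class: str = ""
    bound: bool = False


@dataclass
class Claim:
    name: str
    request_bytes: int
    access_modes: frozenset[str]
    storage_class: str = ""


def find_best_match(claim: Claim, volumes: list[Volume]) -> Volume | None:
    candidates = [
        v for v in volumes
        if not v.bound
        and v.storage_class == claim.storage_class
        and v.capacity_bytes >= claim.request_bytes
        and claim.access_modes <= v.access_modes  # volume offers every requested mode
    ]
    # Prefer the smallest volume that satisfies the claim.
    return min(candidates, key=lambda v: v.capacity_bytes, default=None)


def bind(claim: Claim, volumes: list[Volume]) -> Volume | None:
    volume = find_best_match(claim, volumes)
    if volume is not None:
        volume.bound = True
    return volume
```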

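Horizontal Pod Autoscaler Controller

The horizontal pod autoscaler controller periodically adjusts the replica count of a scalable workload, such as a Deployment, based on observed metrics such as CPU utilization. More details: Horizontal Pod Autoscaler Controller.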
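For a single metric, the Kubernetes documentation gives the scaling rule as desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue), with no scaling when the ratio is within a tolerance (0.1 by default) of 1.0. A minimal sketch of that rule; the real controller additionally clamps the result to the configured minimum and maximum replicas and applies stabilization windows.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, tolerance: float = 0.1) -> int:
    """Single-metric HPA scaling rule, without min/max clamping."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # close enough to the target: do not scale
    return math.ceil(current_replicas * ratio)
```

For example, 2 replicas averaging 200m CPU against a 100m target give a ratio of 2.0, so the controller would aim for 4 replicas.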

Ingress Controller

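Unlike the controllers above, an ingress controller is not part of kube-controller-manager; it is deployed separately, as a cluster add-on (ingress-nginx, for example).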

Cloud Controller Manager

External: https://kubernetes.io/docs/concepts/architecture/cloud-controller/

The cloud controller manager is a Kubernetes control plane component that embeds cloud-specific control logic and manages the integration with the underlying cloud technology and services, such as nodes, network routes and load balancers. It is only present when Kubernetes runs on a cloud provider such as AWS, Azure or GCP. The cloud controller manager links the Kubernetes cluster to the cloud provider's API, and separates the components that interact with that cloud platform from the components that only interact with the cluster. By decoupling the interoperability logic between Kubernetes and the underlying cloud infrastructure, the cloud controller manager enables cloud providers to release features at a different pace from the main Kubernetes project. It is structured around a plugin mechanism that allows different cloud providers to integrate their platforms with Kubernetes.
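The plugin mechanism can be illustrated by a common interface that each provider implements and registers under a name, so the core logic never depends on a specific cloud. In the real project this is a Go interface; the Python sketch below is hypothetical throughout: `CloudProvider`, `ensure_load_balancer`, `register`, `get_provider` and `FakeCloud` are illustrative names, not the actual API.

```python
from abc import ABC, abstractmethod


class CloudProvider(ABC):
    """Hypothetical interface that every cloud plugin implements."""

    @abstractmethod
    def ensure_load_balancer(self, service_name: str, node_ips: list[str]) -> str:
        """Create or update a load balancer and return its external address."""


_REGISTRY: dict[str, type[CloudProvider]] = {}


def register(name: str):
    """Class decorator that makes a provider available under a name."""
    def decorator(cls: type[CloudProvider]) -> type[CloudProvider]:
        _REGISTRY[name] = cls
        return cls
    return decorator


def get_provider(name: str) -> CloudProvider:
    cls = _REGISTRY.get(name)
    if cls is None:
        raise ValueError(f"unknown cloud provider: {name}")
    return cls()


@register("fake")
class FakeCloud(CloudProvider):
    """In-memory stand-in for a real cloud API."""

    def __init__(self) -> None:
        self.load_balancers: dict[str, list[str]] = {}

    def ensure_load_balancer(self, service_name: str, node_ips: list[str]) -> str:
        self.load_balancers[service_name] = list(node_ips)
        return f"lb-{service_name}.fake.example"
```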

Scheduler

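The scheduler is a system service whose job is to assign pods to nodes for execution. It watches for newly created pods that have no node assigned, filters out the nodes that cannot run the pod, scores the remaining ones and binds the pod to the highest-scoring node. For more details see: Kubernetes Scheduling, Preemption and Eviction Concepts (Kubernetes Scheduler).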
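The filter-then-score approach can be sketched in a few lines. This is a deliberately small model under stated assumptions: the `NodeInfo` class and `schedule` function are hypothetical, only readiness and CPU/memory requests are filtered on, and the score is a simple "most free resources left" heuristic, similar in spirit to least-allocated scoring. The real scheduler runs many filter and score plugins and breaks ties randomly rather than by name.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class NodeInfo:
    name: str
    ready: bool
    allocatable_cpu: float      # cores
    allocatable_mem: int        # bytes
    requested_cpu: float = 0.0  # sum of requests of pods already on the node
    requested_mem: int = 0


def schedule(pod_cpu: float, pod_mem: int, nodes: list[NodeInfo]) -> str | None:
    # Filter: keep ready nodes that have room for the pod's requests.
    feasible = [
        n for n in nodes
        if n.ready and n.allocatable_cpu > 0 and n.allocatable_mem > 0
        and n.requested_cpu + pod_cpu <= n.allocatable_cpu
        and n.requested_mem + pod_mem <= n.allocatable_mem
    ]
    if not feasible:
        return None  # no feasible node: the pod stays Pending

    # Score: average fraction of CPU and memory left free after placing the pod.
    def score(n: NodeInfo) -> float:
        cpu_free = (n.allocatable_cpu - n.requested_cpu - pod_cpu) / n.allocatable_cpu
        mem_free = (n.allocatable_mem - n.requested_mem - pod_mem) / n.allocatable_mem
        return (cpu_free + mem_free) / 2

    # Highest score wins; this sketch breaks ties by node name.
    return max(feasible, key=lambda n: (score(n), n.name)).name
```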

Data Plane

The data plane, also known as the application plane, is the layer that provides capacity such as CPU, memory, network and storage, so that containers can run and connect to a network. It consists of a set of worker nodes.
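The capacity a worker node contributes is expressed as allocatable resources, written as Kubernetes quantity strings such as `500m` (half a CPU core) or `128Mi` (128 × 2^20 bytes). The sketch below parses only a subset of the quantity format (no exponent notation, and only suffixes up to tera) and checks whether a set of pod requests fits on a node; `parse_quantity` and `fits` are hypothetical helpers.

```python
from decimal import Decimal

# Subset of the Kubernetes quantity suffixes: milli, decimal and binary multiples.
_SUFFIXES = {
    "m": Decimal("0.001"),
    "k": Decimal(10) ** 3, "M": Decimal(10) ** 6, "G": Decimal(10) ** 9, "T": Decimal(10) ** 12,
    "Ki": Decimal(2) ** 10, "Mi": Decimal(2) ** 20, "Gi": Decimal(2) ** 30, "Ti": Decimal(2) ** 40,
}


def parse_quantity(text: str) -> Decimal:
    """Parse quantities such as "500m", "2", "1G" or "128Mi"."""
    # Try two-letter suffixes first so that "Mi" is not mistaken for "M".
    for suffix in sorted(_SUFFIXES, key=len, reverse=True):
        if text.endswith(suffix):
            return Decimal(text[: -len(suffix)]) * _SUFFIXES[suffix]
    return Decimal(text)


def fits(allocatable: dict[str, str], requests: list[dict[str, str]]) -> bool:
    """True if the summed requests stay within the node's allocatable capacity."""
    for resource, capacity in allocatable.items():
        used = sum((parse_quantity(r.get(resource, "0")) for r in requests), Decimal(0))
        if used > parse_quantity(capacity):
            return False
    return True
```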

Worker Node

A worker node, most often referred to simply as a "node" (as opposed to a control plane node, or master), is where the application services run. Collectively, the worker nodes make up the data plane. A worker node, through its kubelet, constantly watches for new work assignments, which materialize in the form of pods, the units of the application workload. A Kubernetes cluster can be operational without any worker node, in the sense that its API server answers requests. However, such a cluster is not very useful: unless its control plane nodes are configured to accept them, it has nowhere to schedule workloads.