Software Testing Concepts: Difference between revisions


| (152 intermediate revisions by the same user not shown) | |||

| Line 3: | Line 3: | ||

* [[Software Engineering#Subjects|Software Engineering]] | * [[Software Engineering#Subjects|Software Engineering]] | ||

* [[System_Design#Correctness|System Design]] | * [[System_Design#Correctness|System Design]] | ||

* [[Designing_Modular_Systems#Use_Testing_to_Drive_Design_Decisions|Designing Modular Systems]] | |||

=Overview= | =Overview= | ||

[[#Automated_Testing|Automated testing]] provides an effective mechanism for catching regressions, especially when combined with [[#Test-Driven_Development|test-driven development]]. Well written tests can also act as a form of a system documentation but if not used carefully, writing tests may produce meaningless boilerplate test cases. Automated testing is also valuable for covering corner cases that rarely arise in normal operations. Thinking about writing the tests improves the understanding of the problem and in some cases leads to better solutions. | [[#Automated_Testing|Automated testing]] provides an effective mechanism for catching regressions, especially when combined with [[#Test-Driven_Development|test-driven development]]. Well written tests can also act as a form of a system documentation but if not used carefully, writing tests may produce meaningless boilerplate test cases. Automated testing is also valuable for covering corner cases that rarely arise in normal operations. Thinking about writing the tests improves the understanding of the problem and in some cases leads to better solutions. | ||

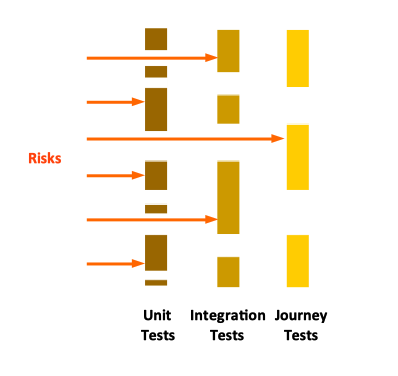

Testing is ultimately about managing risks. | |||

=<span id='Test-Driven_Development'></span>Test-Driven Development (TDD)= | =<span id='Test-Driven_Development'></span>Test-Driven Development (TDD)= | ||

| Line 13: | Line 16: | ||

* Write the functional code until the test passes. | * Write the functional code until the test passes. | ||

* Refactor both new and old code to make it well structured. | * Refactor both new and old code to make it well structured. | ||

Writing the tests first and exercising them in process of writing the code makes the code more modular, and also prevents it to break if changed later - the tests become part of the code base. This is referring to as "building quality in" instead of "testing quality in". | This is another set of three rules that aims to define TDD: | ||

# Write production code only to pass a failing test | |||

# Write no more of a unit test than is sufficient to fail | |||

# Write no more production code than necessary to pass one failing unit test | |||

Writing the tests first and exercising them in the process of writing the code [[Designing_Modular_Systems#Use_Testing_to_Drive_Design_Decisions|makes the code more modular]], and also protects it from breaking when it is changed later - the tests become part of the code base. This is referred to as "building quality in" instead of "testing quality in". Writing tests while designing and building the system, rather than afterward, forces you to improve the design. Tests shape the design and act as contracts that the code must meet. A component that is difficult to test in isolation is a symptom of design issues. A well-designed system should have loosely coupled components. Clean design and loosely coupled code are byproducts of making a system testable. | |||

TDD was created by Kent Beck. | |||

Other articles on this subject: | |||

* https://medium.com/@kyodo-tech/tdd-and-behavioral-testing-in-go-mocking-considered-harmful-f9d621487f6 | |||

=<span id='Automated_Testing'></span>Automated Test= | =<span id='Automated_Testing'></span>Automated Test= | ||

| Line 19: | Line 31: | ||

=Continuous Testing= | =Continuous Testing= | ||

Continuous testing means the test suite is run as often as possible, ideally after each commit. The [[Continuous Integration#Overview|CI]] build and the [[Continuous Delivery#Overview|CD]] pipeline should run as soon as someone pushes a change to the codebase. Continuous testing provides continuous feedback. Quick feedback, ideally immediately after a change was committed, allows developers to respond with as little interruption to their flow as possible. Additionally, the more often the tests run, the smaller the scope of change examined in each run, so it is easier to figure out where problems, if any, were introduced. | |||

=Test Categories= | |||

==Test Pyramid== | |||

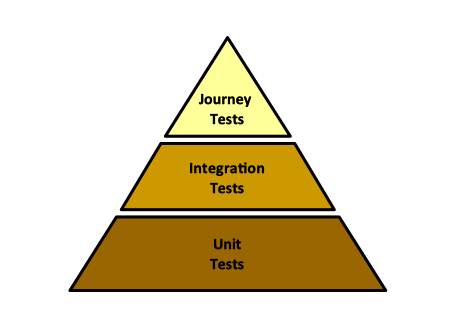

The test pyramid is a well-known model for software testing. It prescribes more of the "cheaper" tests at the lower levels, and fewer, more expensive tests at the higher levels. The lowest level of the pyramid contains [[#Unit_Test|unit tests]]. The middle layer contains [[#Integration_Test|integration tests]], which cover collections of components assembled together. The highest level contains [[#Journey_Test|journey tests]], driven through the UI, which test the application as a whole. | |||

:::[[File:Test_Pyramid.png|463px]] | |||

The tests in the higher levels of the pyramid cover the same code base as the tests in the lower levels, which means they can be fewer and less comprehensive. They only need to test the functionality that emerges from the interaction of components, in a way the tests at the lower levels can't. They don't need to prove the behavior of the lower-level components; that is already done by the lower-level tests. | |||

=Unit Test= | <font color=darkkhaki>TO PROCESS: [https://martinfowler.com/articles/practical-test-pyramid.html The Practical Test Pyramid] by Ham Vocke | ||

Unit testing is the testing of the smallest possible part of | </font> | ||

Note that the "classical" test pyramid does not apply entirely to infrastructure software. The model for infrastructure code testing is the "[[Infrastructure_Code_Testing_Concepts#The_Infrastructure_Test_Diamond|infrastructure test diamond]]". | |||

==Functional Tests== | |||

===Unit Test=== | |||

{{External|[https://martinfowler.com/bliki/UnitTest.html Unit Test] by Martin Fowler}} | |||

{{External|[http://www.extremeprogramming.org/rules/unittests.html extremeprogramming.org Definition of a Unit Test]}} | |||

Unit testing is the testing of the smallest possible part of the logic, such as a single method, a small set of related methods or a class. In reality, we test logical units - which can extend to a method, a single class or multiple classes. A unit test has the following characteristics: | |||

* It should be '''[[#Automated_Test|automated]]'''. Continually verified assumptions encourage refactoring. Code should not be refactored without proper automated test coverage. | * It should be '''[[#Automated_Test|automated]]'''. Continually verified assumptions encourage refactoring. Code should not be refactored without proper automated test coverage. | ||

* It should be '''reliable''' - it should fail if, and only if, the production code is broken. If the test starts failing for some other reason, for example if the internet connection is not available, that implies the unit testing code is broken. | * It should be '''reliable''' - it should fail if, and only if, the production code is broken. If the test starts failing for some other reason, for example if the internet connection is not available, that implies the unit testing code is broken. | ||

* It should be '''fast''' | * It should be '''small''' and '''fast''', and inexpensive to run, not more than a few milliseconds to finish execution. | ||

* It should be '''self-contained''' and runnable in isolation, and then in any order as part of the test suite. The unit test should not depend on the result of another test or on the execution order, or external state (such as configuration files stored on the developer's machine). One should understand what is going on in a unit test without having to look at other parts of the code. | * It should be '''self-contained''' and runnable in isolation, and then in any order as part of the [[#Test_Suite|test suite]]. The unit test should not depend on the result of another test or on the execution order, or external state (such as configuration files stored on the developer's machine). One should understand what is going on in a unit test without having to look at other parts of the code. | ||

* It should not depend on database access, or any long running task. | * It should not depend on database access, in general any '''external dependency''' or any long running task. | ||

* It should not use '''real''' [[#External_Dependency|external dependencies]]. A test that calls into the database is '''not''' a unit test. If external dependencies are necessary to test the logic, they should be provided as [[#Test_Double|test doubles]]. Using mocks with unit tests is acceptable, but the moment mocks enter the scene, the test begins to straddle the unit/integration test boundary. | |||

* It should be '''time (time of the day, timezone) and location independent'''. | * It should be '''time (time of the day, timezone) and location independent'''. | ||

* It should be meaningful. Getter or setter testing is not meaningful. | * It should be meaningful. Getter or setter testing is not meaningful. | ||

* It should be usable as '''documentation''': readable and expressive. | * It should be usable as '''documentation''': readable and expressive. | ||
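The time-independence and self-containment characteristics above can be illustrated with a minimal sketch. The `greeting` function and the `fixed_clock` helper are hypothetical names introduced only for this example; the idea is that the unit receives its clock as a parameter, so the test never reads the wall clock.

```python
from datetime import datetime, timezone
from typing import Callable


def greeting(now: Callable[[], datetime]) -> str:
    """Hypothetical unit under test: the clock is injected, not read globally."""
    return "Good morning" if now().hour < 12 else "Good afternoon"


def fixed_clock(hour: int) -> Callable[[], datetime]:
    """Test helper (an assumption of this sketch): a clock frozen at a given UTC hour."""
    return lambda: datetime(2024, 1, 1, hour, 0, tzinfo=timezone.utc)


def test_greeting_is_independent_of_wall_clock() -> None:
    # The result depends only on the injected clock, so the test gives the same
    # answer at any time of day and in any timezone.
    assert greeting(fixed_clock(9)) == "Good morning"
    assert greeting(fixed_clock(15)) == "Good afternoon"
```

Because the test builds all of its own inputs, it can run alone or in any order within the suite.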

=External Dependency= | Also see: {{Internal|Go_Testing#Unit_Tests|Unit Tests in Go}} | ||

The following are examples of situations when the test relies on external dependencies: | ====External Dependency==== | ||

* The test | The following are examples of situations when the test relies on external dependencies (which should not be the case for unit tests): | ||

* The test acquires a database connection and fetches/updates data. | |||

* The test connects to the internet and downloads files. | * The test connects to the internet and downloads files. | ||

* The test interacts with a mail server to | * The test interacts with a mail server to send an e-mail. | ||

* The test relies on the presence of an external tool in the testing environment and uses it as part of the testing logic. | |||

* The test looks up JNDI objects. | * The test looks up JNDI objects. | ||

* The test invokes a web service. | * The test invokes a web service. | ||

* ... and more. | |||

===Integration Test=== | |||

{{External|https://martinfowler.com/bliki/IntegrationTest.html}} | |||

Integration testing is a software testing phase in which individual software components are combined and tested as a group, and aims to prove compliance with functional requirements. Integration testing occurs after [[#Unit_Test|unit testing]] and before [[#System_Test|system testing]]. Also see [[#Progressive_Testing|Progressive Testing]]. | |||

An integration test covers a collection of components, assembled together and interacting with each other via their interfaces. The components have been previously unit tested. Integration tests depend on live (real) [[#External_Dependency|external dependencies]], such as databases, and they are inherently slower than the [[#Unit_Test|unit tests]]. Integration tests should be automated, but they should run outside the continuous unit test feedback loop. | |||

Integration tests '''may''' be written in such a way that they can use in-memory mocks if the test environment is appropriately configured, turning into "unit tests with mocks", which are a lot less expensive to run. This is especially useful if running the test with a live external dependency is expensive and is best left to an overnight CI pipeline. Writing tests this way opens up both possibilities: it makes it feasible to run those tests in the local development environment, using mocks, and also as part of a continuous delivery pipeline, with real dependencies. | |||

Writing an integration test to work both with mocks and with a live dependency is a bit more complex than simply writing the straightforward integration test, but not by much. The decision is left to the developer. What you're getting is a more opaque [[#Swiss_Cheese_Testing_Model|Swiss cheese]]. | |||
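A minimal sketch of such a dual-mode test is shown below. All names are assumptions made for illustration: the `TEST_DB_PATH` environment variable, the two store classes and the `make_user_store` factory are hypothetical, and SQLite merely stands in for whatever live dependency a real system would use.

```python
import os
import sqlite3
from typing import Dict, Optional


class InMemoryUserStore:
    """In-memory double used for fast local runs."""

    def __init__(self) -> None:
        self._users: Dict[int, str] = {}

    def add(self, user_id: int, name: str) -> None:
        self._users[user_id] = name

    def get(self, user_id: int) -> Optional[str]:
        return self._users.get(user_id)


class SqliteUserStore:
    """Stand-in for the live dependency (a real setup would target a shared database)."""

    def __init__(self, path: str) -> None:
        self._conn = sqlite3.connect(path)
        self._conn.execute(
            "CREATE TABLE IF NOT EXISTS users (id INTEGER PRIMARY KEY, name TEXT)"
        )

    def add(self, user_id: int, name: str) -> None:
        self._conn.execute(
            "INSERT OR REPLACE INTO users (id, name) VALUES (?, ?)", (user_id, name)
        )
        self._conn.commit()

    def get(self, user_id: int) -> Optional[str]:
        row = self._conn.execute(
            "SELECT name FROM users WHERE id = ?", (user_id,)
        ).fetchone()
        return row[0] if row else None


def make_user_store():
    """Pick the live store when TEST_DB_PATH is set, the in-memory double otherwise."""
    path = os.environ.get("TEST_DB_PATH")
    return SqliteUserStore(path) if path else InMemoryUserStore()


def check_user_round_trip(store) -> None:
    """The test body is identical for both modes; only the store differs."""
    store.add(1, "alice")
    assert store.get(1) == "alice"
    assert store.get(2) is None
```

Locally, `check_user_round_trip(make_user_store())` runs against the in-memory double; a pipeline that exports `TEST_DB_PATH` runs the same check against the real store.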

Also see: {{Internal|Go_Testing#Integration_Tests|Integration Tests in Go}} | |||

====Broad Integration Tests==== | |||

A '''broad integration test''' is a test that requires live versions of all run-time dependencies. Such tests are expensive, because they require a full-featured test environment with all the necessary infrastructure, data and services. Managing the right versions of all those dependencies requires significant coordination overhead, which tends to slow down the release cycles. The tests themselves are often fragile and unhelpful. For example, it takes effort to determine if a test failed because of the new code, mismatched version dependencies or the environment. | |||

Thoughtworks classified broad integration tests as a "hold" technique. While they don't have an issue with automated "black box" integration testing in general, they find that a more helpful approach is one that balances the need for confidence with release frequency. This can be done in two stages by first validating the behavior of the system under test assuming a certain set of responses from runtime dependencies, and then validating the assumptions. The first stage uses service virtualization to create test doubles of run-time dependencies and validates the behavior of the system under test. This simplifies test data management concerns and allows for deterministic tests. The second stage uses [[#Contract_Testing|contract tests]] to validate those environmental assumptions with real dependencies. Full justification [https://www.thoughtworks.com/radar/techniques/summary/broad-integration-tests here]. | |||

===System Test=== | |||

A system test exercises individual features in a setup as close to production as possible. It involves building a complete application binary, then running the binary on a set of representative inputs, or under load similar to production load, if it is a server, to ensure that all components are working correctly together, and with real external dependencies. Real, authenticated HTTP invocations are sent by a remote client, the service talks to a real database, etc. These tests are expensive to run locally, so usually they run as part of a dedicated continuous delivery pipeline. | |||

Also see: {{Internal|Go_Testing#System_Tests|System Tests in Go}} | |||

===Smoke Test=== | |||

See [[#Smoke_Testing|Smoke Testing]] below. | |||

===<span id='Journey_Test'></span>UI-Driven Journey Test=== | |||

The journey tests, driven through the UI, test the application as a whole. | |||

==Acceptance Test== | |||

{{External|http://wiki.c2.com/?AcceptanceTest}} | |||

Acceptance tests are written by analysts and other stakeholders, in a [[Agile_Software_Development#User_Story|user story]] manner. | |||

==Other Kinds of Tests== | |||

When people think about automated testing, they generally think about [[#Functional_Tests|functional tests]] like [[#Unit_Test|unit tests]], [[#Integration_Test|integration tests]] or [[#UI-Driven_Journey_Test|UI-driven journey tests]]. However, the scope of risks is broader than functional defects, so the scope of validation should be broader as well. Constraints and requirements beyond the purely functional ones are called Non-Functional Requirements (NFR) or Cross-Functional Requirements (CFR) (see [https://sarahtaraporewalla.com/agile/design/decade_of_cross_functional_requirements_cfrs A Decade of Cross Functional Requirements (CFRs)] by Sarah Taraporewalla): | |||

===Code Quality=== | |||

* Test coverage | |||

* Amount of duplicated code | |||

* Cyclomatic complexity https://www.ibm.com/docs/en/raa/6.1?topic=metrics-cyclomatic-complexity | |||

* Afferent and efferent coupling | |||

* Number of warnings | |||

* Code style | |||

===Security=== | |||

===Compliance=== | |||

===Performance=== | |||

===Scalability=== | |||

Automated tests can prove that scaling works correctly. | |||

===Load=== | |||

{{Internal|Performance_Concepts#Load_Testing|Load Testing}} | |||

===Availability=== | |||

Automated tests can prove that failover works. | |||

===Smoke Testing=== | |||

{{External|https://en.wikipedia.org/wiki/Smoke_testing_(software)}} | |||

Also see: {{Internal|Infrastructure_Code_Testing_Concepts#Sample_Application|Infrastructure Code Testing Concepts | Sample Application}} | |||

=Test Suite= | |||

A test suite is a collection of automated tests that are run as a group. | |||

=Test Double= | =Test Double= | ||

A test double is meant to replace a real [[#External_Dependency|external dependency]] in the unit test cycle, isolating it from the real dependency and allowing the test to run in standalone mode. This may be necessary either because the external dependency is unavailable, or the interaction with it is slow. The term was introduced by Gerard Meszaros in his [https://www.amazon.com/xUnit-Test-Patterns-Refactoring-Addison-Wesley-ebook/dp/B004X1D36K/ref=sr_1_3 xUnit Test Patterns] book. | A test double is meant to replace a real [[#External_Dependency|external dependency]] in the unit test cycle, isolating it from the real dependency and allowing the test to run in standalone mode. This may be necessary either because the external dependency is unavailable, or the interaction with it is slow. The term was introduced by Gerard Meszaros in his [https://www.amazon.com/xUnit-Test-Patterns-Refactoring-Addison-Wesley-ebook/dp/B004X1D36K/ref=sr_1_3 xUnit Test Patterns] book. Modular systems encourage [[Designing_Modular_Systems#Testability|testability]] by design. | ||

==Dummy== | ==Dummy== | ||

A '''dummy object''' is passed as a mandatory parameter object but is not directly used in the test code or the code under test. The dummy object is required for the creation of another object required in the code under test. When implemented as a class, all methods should throw a "Not Implemented" runtime exception. | A '''dummy object''' is passed as a mandatory parameter object but is not directly used in the test code or the code under test. The dummy object is required for the creation of another object required in the code under test. When implemented as a class, all methods should throw a "Not Implemented" runtime exception. | ||

==Stub== | ==Stub== | ||

A '''stub''' delivers indirect inputs to the caller when the stub's methods are invoked. Stubs are programmed only for the test scope. Stubs' methods can be programmed to return hardcoded results or to throw specific exceptions. Stubs are useful in impersonating error conditions in [[#External_Dependency|external dependencies]]. The same result can be achieved with a [[#Mock|mock]]. | A '''stub''' delivers indirect inputs to the caller when the stub's methods are invoked. Stubs are programmed only for the test scope. Stubs' methods can be programmed to return hardcoded results or to throw specific exceptions. Stubs are useful in impersonating error conditions in [[#External_Dependency|external dependencies]]. The same result can be achieved with a [[#Mock|mock]]. | ||

==Spy== | ==Spy== | ||

A '''spy''' is a variation of a [[#Stub|stub]] but instead of only <font color= | A '''spy''' is a variation of a [[#Stub|stub]] but instead of only <font color=darkkhaki>setting the expectation</font>, a spy records the method calls made to the collaborator. A spy can act as an indirect output of the unit under test and can also act as an audit log. | ||

==Mock== | ==Mock== | ||

A '''mock''' object is a combination of a [[#Stub|stub]] and a [[#Spy|spy]]. It stubs methods to return values and throw exceptions, like a stub, but it also acts as an indirect output for the code under test, like a spy. A mock object fails a test if an expected method is not invoked, or if the parameters of the method do not match. The [[Mockito#Overview|Mockito framework]] provides an API to mock objects. | A '''mock''' object is a combination of a [[#Stub|stub]] and a [[#Spy|spy]]. It stubs methods to return values and throw exceptions, like a stub, but it also acts as an indirect output for the code under test, like a spy. A mock object fails a test if an expected method is not invoked, or if the parameters of the method do not match. The [[Mockito#Overview|Mockito framework]] provides an API to mock objects. | ||
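The stub, spy and mock roles can be sketched in a few lines. This example uses Python's standard `unittest.mock` module rather than Mockito, and the `notify_overdue` function, the `mailer` collaborator and the invoice data are hypothetical names invented for the illustration.

```python
from unittest.mock import Mock


def notify_overdue(invoices, mailer) -> int:
    """Hypothetical unit under test: e-mails the owner of every overdue invoice."""
    sent = 0
    for invoice in invoices:
        if invoice["overdue"]:
            mailer.send(invoice["email"], "Your invoice is overdue")
            sent += 1
    return sent


def test_sends_one_mail_per_overdue_invoice() -> None:
    mailer = Mock()                   # records every call, like a spy
    mailer.send.return_value = True   # canned answer, like a stub
    invoices = [
        {"email": "a@example.com", "overdue": True},
        {"email": "b@example.com", "overdue": False},
    ]

    assert notify_overdue(invoices, mailer) == 1
    # The verification step is what makes this a mock: the test fails if the
    # expected call did not happen or happened with different arguments.
    mailer.send.assert_called_once_with("a@example.com", "Your invoice is overdue")


def test_stubbed_error_condition_propagates() -> None:
    mailer = Mock()
    mailer.send.side_effect = ConnectionError("mail server down")  # stubbed failure
    try:
        notify_overdue([{"email": "a@example.com", "overdue": True}], mailer)
    except ConnectionError:
        return
    raise AssertionError("expected ConnectionError to propagate")
```

The second test shows the stub use case described above: impersonating an error condition in an external dependency without needing a real mail server.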

==Fake== | ==Fake== | ||

A '''fake''' object is a [[#Test_Double|test double]] with real logic, unlike [[#Stub|stubs]], but it is much more simplified and cheaper than the real [[#External_Dependency|external dependency]]. This way, the external dependencies of the unit <font color= | A '''fake''' object is a [[#Test_Double|test double]] with real logic, unlike [[#Stub|stubs]], but it is much more simplified and cheaper than the real [[#External_Dependency|external dependency]]. This way, the external dependencies of the unit <font color=darkkhaki>are mocked or stubbed</font> so that the output of the dependent objects can be controlled and observed from the test. A classic example is a database fake, which is an entirely in-memory non-persistent database that otherwise is fully functional. By contrast, a database stub would return a fixed value. More generically, a fake is a full implementation of an interface, and mimics real-world behavior, whereas mocks typically simulate responses for specific method calls without encompassing full behavior. Fakes don’t require setting expectations, and are not designed to verify interactions. | ||

Fake objects are extensively used in legacy code, in scenarios like: | Fake objects are extensively used in legacy code, in scenarios like: | ||

| Line 64: | Line 148: | ||

Fake objects are working implementations. Fake classes extend the original classes, but they usually perform some sort of hack which makes them unsuitable for production. | Fake objects are working implementations. Fake classes extend the original classes, but they usually perform some sort of hack which makes them unsuitable for production. | ||
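As a rough illustration of the difference between a fake and a stub, the sketch below implements a hypothetical key-value store interface twice. The class names and the `rename_key` function are assumptions made for this example only.

```python
from typing import Dict, Optional


class InMemoryKeyValueStore:
    """Fake: real put/get/delete logic, but no persistence and no network."""

    def __init__(self) -> None:
        self._data: Dict[str, str] = {}

    def put(self, key: str, value: str) -> None:
        self._data[key] = value

    def get(self, key: str) -> Optional[str]:
        return self._data.get(key)

    def delete(self, key: str) -> None:
        self._data.pop(key, None)


class StubKeyValueStore:
    """Stub, for contrast: every lookup returns the same fixed value."""

    def get(self, key: str) -> Optional[str]:
        return "fixed"


def rename_key(store, old: str, new: str) -> bool:
    """Hypothetical code under test: moves a value from one key to another."""
    value = store.get(old)
    if value is None:
        return False
    store.put(new, value)
    store.delete(old)
    return True


def test_rename_key_with_fake() -> None:
    store = InMemoryKeyValueStore()
    store.put("a", "1")
    assert rename_key(store, "a", "b") is True
    # State is observed through the fake itself; no expectations were set.
    assert store.get("b") == "1"
    assert store.get("a") is None
    assert rename_key(store, "missing", "x") is False
```

The fake needs no per-test programming, whereas the stub can only answer the one question it was hardcoded for.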

=Testing and | ="Given, When, Then" Testing= | ||

{{Internal| | <font color=darkkhaki>TO PROCESS: https://archive.is/20140814232159/http://www.jroller.com/perryn/entry/given_when_then_and_how#selection-149.0-149.17</font> | ||

=Swiss Cheese Testing Model= | |||

{{External|https://en.wikipedia.org/wiki/Swiss_cheese_model}} | |||

The Swiss cheese testing model is a risk management model juxtaposed on the typical software test layers. The idea is that a given layer of testing, or a test mesh, may have holes, like one slice of Swiss cheese, and those holes might miss a defect, or fail to uncover a risk. But when multiple layers (meshes) are combined, the totality of tests looks more like a block of Swiss cheese, with no holes that go all the way through, so all the risks are properly managed. | |||

:::[[File:Swiss_Cheese_Model.png|398px]] | |||

=Strategies to Improve Testing Speed and Efficiency= | |||

==<span id='Components'></span>Divide the System into Small, Tractable Components== | |||

Design the system as a set of small, loosely coupled components that can be tested independently. Testing a component in isolation with unit tests at the lower level of the test hierarchy is faster and less expensive. A smaller component has a smaller surface area of risk. Testing component interaction is still needed, but with fewer of the more complex and expensive tests. | |||

==<span id='Use_Test_Doubles'></span>Test Doubles== | |||

[[#External_Dependency|External dependencies]] impact testing, especially if you need to rely on someone else to provide instances to support the tests. Even if they are available, they may be slow, expensive, unreliable and might have inconsistent state, especially if they are shared in testing. A strategy to speed up testing and make it more deterministic is to use [[#Test_Double|test doubles]] at the unit and integration test level, as part of the [[#Progressive_Testing|progressive testing]] strategy. Actual dependencies might be used for testing in the later stages, but the number of high level tests that use them should be minimal. | |||

==<span id='Use_Progressive_Testing'></span>Progressive Testing== | |||

Progressive testing means running the unit tests first, because they are cheaper, faster, narrower in scope and have fewer dependencies, to get quicker feedback if they fail, then running the integration tests, and only running the journey tests if all lower-level tests pass. The [[#Swiss_Cheese_Testing_Model|Swiss cheese model]] provides the intuition of why this approach makes sense: the failures that are detected most cheaply are detected first. They can be quickly fixed and the tests repeated. Given that all tests need to pass, starting with the fastest and cheapest tests makes economic sense. | |||
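The ordering can be sketched as a small runner that executes stages from cheapest to most expensive and stops at the first failure. The `run_progressively` function and the stage names are hypothetical; a real pipeline would usually express the same idea in its CI configuration.

```python
from typing import Callable, List, Tuple

# A stage is a name plus a callable that returns True when the stage passes.
Stage = Tuple[str, Callable[[], bool]]


def run_progressively(stages: List[Stage]) -> List[str]:
    """Run stages in the given order and stop at the first failing one.

    Returns the names of the stages that were actually executed.
    """
    executed: List[str] = []
    for name, run in stages:
        executed.append(name)
        if not run():
            break  # more expensive stages are skipped until this one is fixed
    return executed


# Example: the integration stage fails, so the journey tests never run.
stages = [
    ("unit", lambda: True),
    ("integration", lambda: False),
    ("journey", lambda: True),
]
executed = run_progressively(stages)
```

In this example `executed` contains only the unit and integration stages, which is the economic point of the strategy: the expensive journey tests are not paid for while a cheaper stage is still failing.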

==Add Narrower Scope Tests for Failures Detected by the Broader Scope Tests== | |||

If a broader scope test, like an integration test or a journey test, finds a problem, add a unit test that would catch the problem earlier, if possible, and also try to remove the specific part from the broader test, again if possible. This is to avoid duplicating tests at different levels. | |||

=Testing Infrastructure Code= | |||

{{Internal|Infrastructure Code Testing Concepts#Overview|Testing Infrastructure Code}} | |||

=Testing in Production= | |||

<font color=darkkhaki>TO PROCESS: [https://www.infoq.com/presentations/testing-production-2018/ Yes, I Test in Production (And So Do You)] by Charity Majors</font> | |||

This section is by no means an argument against pre-production testing. Done properly, pre-production testing will identify most of the defects before they reach production, saving time and revenue. Pre-production testing categorically helps eliminate issues caused by code that does not compile and run, guards against predictable failures and makes sure that problems identified before do not show up again. | |||

However, it is possible to reach a point of diminishing returns where further investments in pre-production testing do not yield valuable results. Production provides conditions that cannot be replicated in a testing environment: | |||

* '''Data'''. The production system may have larger data sets than can be replicated in a testing environment, and will have unexpected data values and combinations. | |||

* '''Users'''. Due to their sheer numbers, users will be far more creative at doing strange things than the testing staff. | |||

* '''Traffic'''. Production traffic is generally higher than what can be achieved in testing. A week-long soak test is trivial compared to a year of running in production. | |||

* '''Concurrency'''. Testing tools can emulate multiple users using the system at the same time, but they can't replicate the combinations of things that users can do. | |||

Testing in production takes advantage of the unique conditions that exist there. The risks of testing in production can be managed by ensuring that [[Monitoring Concepts|monitoring tools]] can detect problems caused by testing, so tests can be stopped quickly. Better system [[Monitoring_Concepts#Observability|observability]] helps with that. The capability for zero-downtime deployments helps with quick rollbacks if necessary. Production tests should not make inappropriate changes to data or expose sensitive data. A set of test data records, such as test users and test credit card numbers, which will not trigger real-world actions, can be maintained in production to address this issue. Techniques like [[Blue-Green_Deployments#Overview|blue-green deployments]] and [[Canary_Release#Overview|canary releases]] have been developed to help with testing in production. | |||

=Monitoring as Testing= | |||

Monitoring can be seen as passive testing in production, and it should form a part of the testing strategy. | |||

=Staging Environment= | |||

An environment that is as close to production as possible. This is where the final testing phase takes place, before deploying in production. Also known as "pre-production". | |||

=Contract Testing= | |||

<font color=darkkhaki>TODO: https://martinfowler.com/bliki/ContractTest.html</font> | |||

=To Process= | |||

<font color=darkkhaki> | |||

* [https://martinfowler.com/articles/mocksArentStubs.html Mocks Aren't Stubs] by Martin Fowler | |||

</font> | |||

Latest revision as of 00:39, 1 August 2024

Internal

Overview

Automated testing provides an effective mechanism for catching regressions, especially when combined with test-driven development. Well-written tests can also act as a form of system documentation, but if not approached carefully, writing tests may produce meaningless boilerplate test cases. Automated testing is also valuable for covering corner cases that rarely arise in normal operations. Thinking about writing the tests improves the understanding of the problem and in some cases leads to better solutions.

Testing is ultimately about managing risks.

Test-Driven Development (TDD)

Test-Driven Development (TDD) is an agile software development technique that guides development by writing tests. It consists of the following steps, applied repeatedly:

- Write a test for the next bit of functionality you want to add.

- Write the functional code until the test passes.

- Refactor both new and old code to make it well structured.

This is another set of three rules that aims to define TDD:

- Write production code only to pass a failing test

- Write no more of a unit test than is sufficient to fail

- Write no more production code than necessary to pass one failing unit test

Writing the tests first and exercising them in the process of writing the code makes the code more modular, and also protects it from breaking when it is changed later - the tests become part of the code base. This is referred to as "building quality in" instead of "testing quality in". Writing tests while designing and building the system, rather than afterward, forces you to improve the design. Tests shape the design and act as contracts that the code must meet. A component that is difficult to test in isolation is a symptom of design issues. A well-designed system should have loosely coupled components. Clean design and loosely coupled code are byproducts of making a system testable.
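One iteration of the cycle can be sketched as follows. The `slugify` function is a hypothetical example, not something taken from this article: the two tests are written first and fail, and the function body is then filled in with just enough code to make them pass.

```python
import unittest


def slugify(title: str) -> str:
    """Hypothetical function under test: the minimum code that passes the tests below."""
    return "-".join(title.lower().split())


class SlugifyTest(unittest.TestCase):
    # These tests are written before slugify has a body; running them at that
    # point fails, which is what drives the implementation above.
    def test_lowercases_and_joins_words_with_dashes(self) -> None:
        self.assertEqual(slugify("Hello World"), "hello-world")

    def test_collapses_repeated_whitespace(self) -> None:
        self.assertEqual(slugify("  Test   Driven  "), "test-driven")
```

The tests can be run with `python -m unittest`. Once they pass, the code is refactored with the tests acting as a safety net, and the next failing test is written.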

TDD was created by Kent Beck.

Other articles on this subject:

Automated Test

An automated test verifies an assumption about the behavior of the system and provides a safety mesh that is exercised continuously, in an automated fashion, in most cases on each commit to the repository. The main benefit of automated testing is that the software is continuously verified, which maintains its quality. Another benefit is that tests serve as documentation for the code. Yet another benefit is that they enable refactoring.

Continuous Testing

Continuous testing means the test suite is run as often as possible, ideally after each commit. The CI build and the CD pipeline should run as soon as someone pushes a change to the codebase. Continuous testing provides continuous feedback. Quick feedback, ideally immediately after a change was committed, allows developers to respond with as little interruption to their flow as possible. Additionally, the more often the tests run, the smaller the scope of change examined in each run, so it is easier to figure out where problems, if any, were introduced.

Test Categories

Test Pyramid

The test pyramid is a well-known model for software testing. It prescribes more of the "cheaper" tests at the lower levels, and fewer, more expensive tests at the higher levels. The lowest level of the pyramid contains unit tests. The middle layer contains integration tests, which cover collections of components assembled together. The highest level contains journey tests, driven through the UI, which test the application as a whole.

The tests in the higher levels of the pyramid cover the same code base as the tests in the lower levels, which means they can be fewer and less comprehensive. They only need to test the functionality that emerges from the interaction of components, in a way the tests at the lower levels can't. They don't need to prove the behavior of the lower-level components; that is already done by the lower-level tests.

TO PROCESS: The Practical Test Pyramid by Ham Vocke

Note that the "classical" test pyramid does not apply entirely to infrastructure software. The model for infrastructure code testing is the "infrastructure test diamond".

Functional Tests

Unit Test

- Unit Test by Martin Fowler

Unit testing is the testing of the smallest possible part of the logic, such as a single method, a small set of related methods or a class. In reality, we test logical units, which can extend to a method, a single class or multiple classes. A unit test has the following characteristics:

- It should be automated. Continually verified assumptions encourage refactoring. Code should not be refactored without proper automated test coverage.

- It should be reliable - it should fail if, and only if, the production code is broken. If the test starts failing for some other reason, for example if the internet connection is not available, that implies the unit testing code is broken.

- It should be small, fast and inexpensive to run, taking no more than a few milliseconds to finish execution.

- It should be self-contained and runnable in isolation, and then in any order as part of the test suite. The unit test should not depend on the result of another test or on the execution order, or external state (such as configuration files stored on the developer's machine). One should understand what is going on in a unit test without having to look at other parts of the code.

- It should not depend on database access or, in general, on any external dependency or long-running task.

- It should not use real external dependencies. A test that calls into the database is not a unit test. If the presence of external dependencies is necessary to test the logic, they should be provided as test doubles. Using mocks with unit tests is acceptable, but the moment mocks enter the scene, the test begins to straddle the unit/integration test boundary.

- It should be time (time of the day, timezone) and location independent.

- It should be meaningful. Getter or setter testing is not meaningful.

- It should be usable as documentation: readable and expressive.
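Several of the characteristics above can be illustrated with a minimal sketch. The `greeting` function and its `now` parameter are hypothetical names chosen for illustration; the point is that passing the time in as an argument keeps the test fast, self-contained and independent of the time of day.

```python
from datetime import datetime


def greeting(now: datetime) -> str:
    """Return a greeting for the given moment (hypothetical unit under test)."""
    return "Good morning" if now.hour < 12 else "Good afternoon"


def test_greeting_before_noon():
    # The time is passed in, so the result does not depend on when
    # or where the test runs.
    assert greeting(datetime(2024, 1, 1, 9, 0)) == "Good morning"


def test_greeting_after_noon():
    assert greeting(datetime(2024, 1, 1, 15, 0)) == "Good afternoon"
```

Each test function reads as a small piece of documentation and can run in any order, because neither shares state with the other.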

Also see:

External Dependency

The following are examples of situations when the test relies on external dependencies (which should not be the case for unit tests):

- The test acquires a database connection and fetches/updates data.

- The test connects to the internet and downloads files.

- The test interacts with a mail server to send an e-mail.

- The test relies on the presence of an external tool in the testing environment, and uses it as part of the testing logic.

- The test looks up JNDI objects.

- The test invokes a web service.

- ... and more.

Integration Test

Integration testing is a software testing phase in which individual software components are combined and tested as a group, and aims to prove compliance with functional requirements. Integration testing occurs after unit testing and before system testing. Also see Progressive Testing.

An integration test covers a collection of components, assembled together and interacting with each other via their interfaces. The components have been previously unit tested. Integration tests depend on live (real) external dependencies, such as databases, and they are inherently slower than the unit tests. Integration tests should be automated, but they should run outside the continuous unit test feedback loop.

Integration tests may be written in such a way that they can use in-memory mocks if the test environment is appropriately configured, turning into "unit tests with mocks", which are a lot less expensive to run. This is especially useful if running the test with a live external dependency is expensive, and is best left to an overnight CI pipeline. Writing tests this way opens up both possibilities: it makes it feasible to run those tests in the local development environment, using mocks, and also as part of a continuous delivery pipeline, with real dependencies.

Writing an integration test to work both with mocks and with a live dependency is a bit more complex than simply writing the straightforward integration test, but not by much. The decision is left to the developer. What you're getting is a more opaque Swiss cheese.
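One possible way to write such a dual-mode test is sketched below. All names are hypothetical: `TEST_DB_PATH` is an assumed environment variable, and an SQLite file stands in for the live database, since the real dependency depends on the project.

```python
import os
import sqlite3


class InMemoryUserStore:
    """In-memory stand-in, used when no live dependency is configured."""

    def __init__(self):
        self._users = {}

    def save(self, user_id, name):
        self._users[user_id] = name

    def find(self, user_id):
        return self._users.get(user_id)


class SqlUserStore:
    """Store backed by a database connection (stands in for the live dependency)."""

    def __init__(self, connection):
        self._conn = connection
        self._conn.execute(
            "CREATE TABLE IF NOT EXISTS users (id INTEGER PRIMARY KEY, name TEXT)"
        )

    def save(self, user_id, name):
        self._conn.execute(
            "INSERT OR REPLACE INTO users (id, name) VALUES (?, ?)", (user_id, name)
        )

    def find(self, user_id):
        row = self._conn.execute(
            "SELECT name FROM users WHERE id = ?", (user_id,)
        ).fetchone()
        return row[0] if row else None


def make_store():
    # TEST_DB_PATH is a hypothetical environment variable: when set, the test
    # runs against a real database file; otherwise it falls back to the
    # in-memory stand-in.
    path = os.environ.get("TEST_DB_PATH")
    if path:
        return SqlUserStore(sqlite3.connect(path))
    return InMemoryUserStore()


def test_saved_user_can_be_found():
    # The same test body runs in both modes.
    store = make_store()
    store.save(1, "alice")
    assert store.find(1) == "alice"
    assert store.find(2) is None
```

The extra cost is the factory and the stand-in class; the test body itself is written once.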

Also see:

Broad Integration Tests

A broad integration test is a test that requires live versions of all run-time dependencies. Such tests are expensive, because they require a full-featured test environment with all the necessary infrastructure, data and services. Managing the right versions of all those dependencies requires significant coordination overhead, which tends to slow down the release cycles. The tests themselves are often fragile and unhelpful. For example, it takes effort to determine if a test failed because of the new code, mismatched version dependencies or the environment.

Thoughtworks classified broad integration tests as a "hold" technique. While they don't have an issue with automated "black box" integration testing in general, they find that a more helpful approach is one that balances the need for confidence with release frequency. This can be done in two stages by first validating the behavior of the system under test assuming a certain set of responses from runtime dependencies, and then validating the assumptions. The first stage uses service virtualization to create test doubles of run-time dependencies and validates the behavior of the system under test. This simplifies test data management concerns and allows for deterministic tests. The second stage uses contract tests to validate those environmental assumptions with real dependencies. Full justification here.

System Test

A system test exercises individual features in a setup as close to production as possible. It involves building a complete application binary, then running the binary on a set of representative inputs, or under load similar to production load, if it is a server, to ensure that all components are working correctly together, and with real external dependencies. Real, authenticated HTTP invocations are sent by a remote client, the service talks to a real database, etc. These tests are expensive to run locally, so usually they run as part of a dedicated continuous delivery pipeline.

Also see:

Smoke Test

See Smoke Testing below.

UI-Driven Journey Test

The journey tests, driven through the UI, test the application as a whole.

Acceptance Test

Acceptance tests are written by analysts and other stakeholders, in a user story manner.

Other Kinds of Tests

When people think about automated testing, they generally think about functional tests like unit tests, integration tests or UI-driven journey tests. However, the scope of risks is broader than functional defects, so the scope of validation should be broader as well. Constraints and requirements beyond the purely functional ones are called Non-Functional Requirements (NFR) or Cross-Functional Requirements (CFR) (see A Decade of Cross Functional Requirements (CFRs) by Sarah Taraporewalla):

Code Quality

- Test coverage

- Amount of duplicated code

- Cyclomatic complexity https://www.ibm.com/docs/en/raa/6.1?topic=metrics-cyclomatic-complexity

- Afferent and efferent coupling

- Number of warnings

- Code style

Security

Compliance

Performance

Scalability

Automated tests can prove that scaling works correctly.

Load

Availability

Automated tests can prove that failover works.

Smoke Testing

Also see:

Test Suite

A test suite is a collection of automated tests that are run as a group.
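As a minimal sketch, Python's standard `unittest` module can group tests into a suite explicitly. The two test classes and the `build_suite` helper are hypothetical examples.

```python
import unittest


class AdditionTest(unittest.TestCase):
    def test_add(self):
        self.assertEqual(1 + 1, 2)


class ConcatenationTest(unittest.TestCase):
    def test_concat(self):
        self.assertEqual("a" + "b", "ab")


def build_suite():
    """Collect the tests of both classes into one suite run as a group."""
    loader = unittest.TestLoader()
    suite = unittest.TestSuite()
    suite.addTests(loader.loadTestsFromTestCase(AdditionTest))
    suite.addTests(loader.loadTestsFromTestCase(ConcatenationTest))
    return suite
```

The suite can then be handed to a runner, for example `unittest.TextTestRunner().run(build_suite())`.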

Test Double

A test double is meant to replace a real external dependency in the unit test cycle, isolating it from the real dependency and allowing the test to run in standalone mode. This may be necessary either because the external dependency is unavailable, or the interaction with it is slow. The term was introduced by Gerard Meszaros in his xUnit Test Patterns book. Modular systems encourage testability by design.

Dummy

A dummy object is passed as a mandatory parameter object but is not directly used in the test code or the code under test. The dummy object is required for the creation of another object required in the code under test. When implemented as a class, all methods should throw a "Not Implemented" runtime exception.
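A minimal sketch of a dummy follows. `DummyAuditLog` and `PriceCalculator` are hypothetical names; the dummy only exists to satisfy the constructor, and it raises if anything actually calls it.

```python
class DummyAuditLog:
    """Dummy: satisfies a mandatory parameter but must never be used."""

    def record(self, message):
        raise NotImplementedError("dummy object should not be called")


class PriceCalculator:
    """Hypothetical unit under test; requires an audit log at construction."""

    def __init__(self, audit_log):
        self._audit_log = audit_log

    def total(self, prices):
        # This code path never touches the audit log.
        return sum(prices)


def test_total_sums_prices():
    calculator = PriceCalculator(DummyAuditLog())
    assert calculator.total([1, 2, 3]) == 6
```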

Stub

A stub delivers indirect inputs to the caller when the stub's methods are invoked. Stubs are programmed only for the test scope. Stubs' methods can be programmed to return hardcoded results or to throw specific exceptions. Stubs are useful in impersonating error conditions in external dependencies. The same result can be achieved with a mock.
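The sketch below shows two hand-written stubs, one returning a hardcoded result and one impersonating an error condition. The gateway classes and the `checkout` function are hypothetical.

```python
class ApprovingPaymentGatewayStub:
    """Stub: always returns a hardcoded result."""

    def charge(self, amount):
        return "approved"


class FailingPaymentGatewayStub:
    """Stub: programmed to impersonate an error in the external dependency."""

    def charge(self, amount):
        raise ConnectionError("gateway unreachable")


def checkout(gateway, amount):
    """Hypothetical unit under test: falls back when the gateway is down."""
    try:
        return gateway.charge(amount)
    except ConnectionError:
        return "retry-later"


def test_checkout_handles_gateway_outage():
    assert checkout(ApprovingPaymentGatewayStub(), 10) == "approved"
    assert checkout(FailingPaymentGatewayStub(), 10) == "retry-later"
```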

Spy

A spy is a variation of a stub but instead of only setting the expectation, a spy records the method calls made to the collaborator. A spy can act as an indirect output of the unit under test and can also act as an audit log.
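A hand-written spy might look like the following sketch. `MailerSpy` and `notify_signup` are hypothetical names; the recorded `calls` list plays the role of the audit log.

```python
class MailerSpy:
    """Spy: returns a canned value and records every call it receives."""

    def __init__(self):
        self.calls = []

    def send(self, recipient, subject):
        self.calls.append((recipient, subject))
        return True


def notify_signup(mailer, email):
    """Hypothetical unit under test."""
    return mailer.send(email, "Welcome")


def test_notify_signup_sends_one_welcome_mail():
    spy = MailerSpy()
    assert notify_signup(spy, "a@example.com") is True
    # The recorded calls are the indirect output of the unit under test.
    assert spy.calls == [("a@example.com", "Welcome")]
```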

Mock

A mock object is a combination of a stub and a spy. It stubs methods to return values and throw exceptions, like a stub, but it also acts as an indirect output for the code under test, like a spy. A mock object fails a test if an expected method is not invoked, or if the parameters of the method do not match. The Mockito framework provides an API to mock objects.
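The text names Mockito for Java; the sketch below uses Python's standard `unittest.mock` module instead to show the same idea. The `notify_signup` function is a hypothetical unit under test.

```python
from unittest.mock import Mock


def notify_signup(mailer, email):
    """Hypothetical unit under test."""
    return mailer.send(email, "Welcome")


def test_notify_signup_with_mock():
    mailer = Mock()
    mailer.send.return_value = True  # stub-like: canned return value

    assert notify_signup(mailer, "a@example.com") is True

    # spy-like: raises AssertionError if the call did not happen exactly
    # once, or if the arguments differ.
    mailer.send.assert_called_once_with("a@example.com", "Welcome")
```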

Fake

A fake object is a test double with real logic, unlike stubs, but it is much simpler and cheaper than the real external dependency. This way, the external dependencies of the unit are mocked or stubbed so that the output of the dependent objects can be controlled and observed from the test. A classic example is a database fake, which is an entirely in-memory non-persistent database that otherwise is fully functional. By contrast, a database stub would return a fixed value. More generically, a fake is a full implementation of an interface, and mimics real-world behavior, whereas mocks typically simulate responses for specific method calls without encompassing full behavior. Fakes don’t require setting expectations, and are not designed to verify interactions.

Fake objects are extensively used in legacy code, in scenarios like:

- the real object cannot be instantiated (such as when the constructor reads a file or performs a JNDI lookup).

- the real object has slow methods, such as a calculate() method that invokes a load() method that reads from a database.

Fake objects are working implementations. Fake classes extend the original classes, but they usually perform some sort of hack that makes them unsuitable for production.
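The slow-method scenario can be sketched as follows. `Ledger` is a hypothetical production class whose `load()` would read from a database; `FakeLedger` extends it and overrides only the slow part, so the real `calculate()` logic is still exercised.

```python
class Ledger:
    """Hypothetical production class whose load() is slow (reads a database)."""

    def load(self):
        raise RuntimeError("would read from a real database")

    def calculate(self):
        # Real logic that the test wants to exercise.
        return sum(self.load())


class FakeLedger(Ledger):
    """Fake: overrides the slow method with in-memory data."""

    def __init__(self, entries):
        self._entries = list(entries)

    def load(self):
        return self._entries


def test_calculate_sums_entries():
    assert FakeLedger([10, -3, 5]).calculate() == 12
```

Overriding `load()` this way is the kind of shortcut that makes the fake unsuitable for production while keeping it a working implementation.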

"Given, When, Then" Testing

Swiss Cheese Testing Model

The Swiss cheese testing model is a risk management model juxtaposed on the typical software test layers. The idea is that a given layer of testing, or a test mesh, may have holes, like one slice of Swiss cheese, and those holes might miss a defect, or fail to uncover a risk. But when multiple layers (meshes) are combined, the totality of tests looks more like a block of Swiss cheese, with no holes that go all the way through, so all the risks are properly managed.

Strategies to Improve Testing Speed and Efficiency

Divide the System into Small, Tractable Components

Design the system as a set of small, loosely coupled components that can be tested independently. Testing a component in isolation with unit tests at the lower level of the test hierarchy is faster and less expensive. A smaller component has a smaller surface area of risk. Testing component interaction is still needed, but it requires fewer of the more complex and expensive tests.

Test Doubles

External dependencies impact testing, especially if you need to rely on someone else to provide instances to support the tests. Even if they are available, they may be slow, expensive, unreliable and might have inconsistent state, especially if they are shared in testing. A strategy to speed up testing and make it more deterministic is to use test doubles at the unit and integration test level, as part of the progressive testing strategy. Actual dependencies might be used for testing in the later stages, but the number of high level tests that use them should be minimal.

Progressive Testing

Progressive testing means running the unit tests first, because they are cheaper, faster, narrower in scope and have fewer dependencies, so they give quicker feedback if they fail. Integration tests run next, and journey tests run only if all lower-level tests pass. The Swiss cheese model provides the intuition for why this approach makes sense: the failures that are detected most cheaply are detected first. They can be quickly fixed and the tests repeated. Given that all tests need to pass, starting with the fastest and cheapest tests makes economic sense.
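The ordering can be sketched as a small runner that stops at the first failing stage. The function `run_progressively` and the shape of its `stages` argument are assumptions made for illustration; real pipelines usually express this as dependent CI stages.

```python
def run_progressively(stages):
    """Run stages in order and stop at the first stage with a failing test.

    `stages` is a list of (name, tests) pairs, cheapest first. Each test is
    a zero-argument callable that raises AssertionError on failure.
    Returns the names of the stages that passed.
    """
    passed = []
    for name, tests in stages:
        for test in tests:
            try:
                test()
            except AssertionError:
                # Later, more expensive stages are skipped.
                return passed
        passed.append(name)
    return passed
```

A call such as `run_progressively([("unit", unit_tests), ("integration", integration_tests), ("journey", journey_tests)])`, with hypothetical test lists, would never start the journey tests if an integration test fails.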

Add Narrower Scope Tests for Failures Detected by the Broader Scope Tests

If a broader scope test, like an integration test or a journey test, finds a problem, add a unit test that would catch the problem earlier, if possible, and also try to remove the specific part from the broader test, also if possible. This is to avoid duplicating tests at different levels.

Testing Infrastructure Code

Testing in Production

TO PROCESS: Yes, I Test in Production (And So Do You) by Charity Majors

This section is by no means an argument against pre-production testing. Done properly, pre-production testing will identify most of the defects before they reach production, saving time and revenue. Pre-production testing categorically helps eliminate issues caused by code that does not compile and run, guards against predictable failures and makes sure that problems identified before do not show up again.

However, it is possible to reach a point of diminishing returns where further investments in pre-production testing do not yield valuable results. Production provides conditions that cannot be replicated in a testing environment:

- Data. The production system may have larger data sets than can be replicated in a testing environment, and will have unexpected data values and combinations.

- Users. Due to their sheer numbers, users will be far more creative at doing strange things than the testing staff.

- Traffic. Production traffic is generally higher than what can be achieved in testing. A week-long soak test is trivial compared to a year of running in production.

- Concurrency. Testing tools can emulate multiple users using the system at the same time, but they can't replicate the combinations of things that users can do.

Testing in production takes advantage of the unique conditions that exist there. The risks of testing in production can be managed by ensuring that monitoring tools can detect the problems caused by testing, so tests can be stopped quickly. Better system observability helps with that. The capability for zero-downtime deployments helps with quick rollbacks if necessary. Production tests should not make inappropriate changes to data or expose sensitive data. A set of test data records, such as test users and test credit card numbers, which will not trigger real-world actions, can be maintained in production to address this issue. Techniques like blue-green deployments and canary releases have been developed to help with testing in production.

Monitoring as Testing

Monitoring can be seen as passive testing in production, and it should form a part of the testing strategy.

Staging Environment

An environment that is as close to production as possible. This is where the final testing phase takes place, before deploying in production. Also known as "pre-production".

Contract Testing

TODO: https://martinfowler.com/bliki/ContractTest.html

To Process

- Mocks Aren't Stubs by Martin Fowler