Datadog Concepts

| (83 intermediate revisions by the same user not shown) | |||

| Line 3: | Line 3: | ||

=Overview= | =Overview= | ||

Datadog is an observability platform that includes products for monitoring, alerting, metrics, dashboard, big logs, <font color=darkkhaki>synthetics</font>, user monitoring, <font color=darkkhaki>CI/CD (how?)</font>. Datadog is API driven. | Datadog is an observability platform that includes products for monitoring, alerting, metrics, dashboard, big logs, <font color=darkkhaki>synthetics</font>, user monitoring, <font color=darkkhaki>CI/CD (how?)</font>. Datadog is API driven. | ||

=Organization= | |||

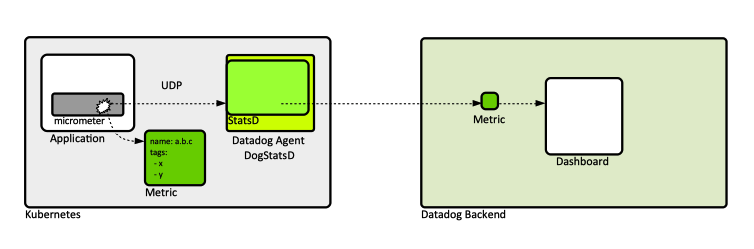

=Route of a Metric from Application to Dashboard= | =Route of a Metric from Application to Dashboard= | ||

[[#DogStatsD|DogStatsD]] → [[# | Application (specialized library) → [[#Metric|metric]] → [[#DogStatsD|DogStatsD]] → Datadog Backend → [[#Metric|metric]] → [[Datadog Dashboard#Overview|Dashboard]] | ||

::::[[File:Datadog_Metric_Propagation.png|754px]] | |||

The [[#Metric|metrics]] are generated by an application-level library, such as [[Micrometer]]. For more details, see [[#Metric_Lifecycle|Metric Lifecycle]] below. The Datadog agent annotates the metric with additional tags (cluster name, pod name, etc.) | |||

=Metrics= | |||

{{External|https://docs.datadoghq.com/metrics/}} | |||

Metrics are numerical values that can track anything about your environment over time. Example: latency, error rates, user signups. Metric data is ingested and stored as a datapoint with a value and a timestamp. The timestamp is rounded to the nearest second. If there is more than one value with the same timestamp, the latest received value overwrites the previous one. A sequence of metrics is stored as a timeseries. There are standard metrics, such as CPU, memory, etc, but metrics specific to business can be defined. Those are [[#Custom_Metrics|custom metrics]]. Metrics can be visualized in [[Datadog Dashboard#Overview|dashboards]], [[#Metrics_Explorer|Metrics Explorer]] and [[#Notebook|Notebooks]] | |||

==Metric Name== | |||

<font color=darkkhaki>Valid characters?</font> | |||

==Metric Tags== | |||

==Metric Lifecycle== | |||

Metrics are created by application-level specialized libraries, such as [[Micrometer#Overview|Micrometer]]. For example, Micrometer creates [[Micrometer#Measurement|measurements]] <font color=darkkhaki>, which are semantically equivalent</font>. The metric has a [[#Metric_Name|name]], and it can optionally have one or more [[#Metric_Tags|tags]]. | |||

Metrics can be sent to Datadog from: | |||

* Datadog-supported integrations. <font color=darkkhaki>More: https://docs.datadoghq.com/integrations/</font>. | |||

* Directly from the Datadog platform, for example counting errors showing up in the logs and publishing that as a new metric. <font color=darkkhaki>More: https://docs.datadoghq.com/logs/logs_to_metrics/</font>. | |||

* [[#Custom_Metrics|Custom metrics]] generators. | |||

* [[#Agent|Datadog Agent]], which automatically sends standard metrics as CPU and disk usage. | |||

==Metric Types== | |||

{{External|https://docs.datadoghq.com/metrics/types/}} | |||

Metric types determine which graphs and functions are available to use with the metric. | |||

===Count=== | |||

A count metric adds up all the submitted values in a time interval. This would be suitable for a metric tracking the number of website hits, for example. | |||

===Rate=== | |||

The rate metric takes the count and divides it by the length of the type interval (example: hits per second). | |||

===Gauge=== | |||

A gauge metric takes the last value reported during the interval. This could be used to track values such as CPU or memory, where taking the last value provides a representative picture of the host's behavior during the time interval. | |||

===Histogram=== | |||

A histogram reports five different values summarizing the submitted values: the average, count, median, 95th percentile, and max. This produces five different timeseries. This type of metric is suitable for things like latency, for which were is not enough to know the average value. Histograms allow you to understand how your data was spread out without recording every single data point. | |||

===Distribution=== | |||

{{External|https://docs.datadoghq.com/metrics/distributions/}} | |||

A distribution is similar to a [[#Histogram|histogram]] but it summarizes values submitted during a time interval across all hosts in your environment. | |||

===Set=== | |||

==<span id='Custom_Metric'></span>Custom Metrics== | |||

{{External|https://docs.datadoghq.com/metrics/custom_metrics/}} | |||

=Metric Query= | |||

{{External|https://docs.datadoghq.com/metrics/#querying-metrics}} | |||

<font size=-1> | |||

<font color=burgundy>space-aggregation</font>:<font color=skyblue>metric.name</font>{filter/scope} <font color=burgundy>by {space_aggregation_grouping_by_tag}</font>.time-aggregation | |||

</font> | |||

<font size=-1> | |||

<font color=burgundy>avg</font>:<font color=skyblue>system.io.r_s</font>{app:myapp} <font color=burgundy>by {host}</font>.rollup(avg, 3600) | |||

</font> | |||

<font color=darkkhaki>TO PROCESS: | |||

* https://docs.datadoghq.com/metrics/advanced-filtering | |||

</font> | |||

==Metric Query Elements== | |||

===Metric Name in Metric Query=== | |||

<code>system.io.r_s</code> | |||

See [[#Metric_Name|Metric Name]] above. | |||

===Filter/Scope=== | |||

The query metric values can be filtered based on tags. The <code>{...}</code> section contains a comma-separated list of <code>tag-name:tag-value</code> pairs. It is said that the list of <code>tag-name:tag-value</code> pairs, also known as the '''query filter''', scope the query. Example: | |||

<syntaxhighlight lang='text'> | |||

{app:myapp, something:somethingelse} | |||

</syntaxhighlight> | |||

<syntaxhighlight lang='text'> | |||

{status:true,!condition:ready,cluster_name:my-cluster} | |||

</syntaxhighlight> | |||

<syntaxhighlight lang='text'> | |||

{table_name:*event_f_incomplete AND time_hours:0 AND time_interval:0 AND time_unit:hour AND target_hour:-2 AND environment:myenv AND component:mycomp AND NOT (table_name:*tier1_event_f_incomplete)} | |||

</syntaxhighlight> | |||

The curly braces <code>{...}</code> must always be present in the metric query definition <code>{...}</code>. If there are no particular tags to filter by, use the <code>{*}</code> syntax. | |||

For more details on tags, see [[#Tag|Tags]]. | |||

===Space Aggregation=== | |||

"Space" refers to the way metrics are distributed over hosts and tags. There are two different aspects of "space" that can be controlled when aggregating metrics: [[#Grouping|grouping]] and the [[#Space_Aggregator|space aggregator]]. | |||

====<span id='Grouping'></span><span id='Space_Aggregator_Tags'></span>Grouping or Space Aggregator Tags==== | |||

Grouping defines what constitutes a line on the graph. Grouping splits a single metric into multiple timeseries by tags such as host, container, and region. For example, if you have hundreds of hosts spread across four regions, grouping by region allows you to graph one line for every region. This would reduce the number of timeseries to four. | |||

<font size=-1> | |||

... by {host} ... | |||

</font> | |||

When more that one value is available for a group such defined, we need to instruct Datadog how to combine such values, with the [[#Space_Aggregator|space aggregator]]. | |||

====Space Aggregator==== | |||

The space aggregator defines how the metrics in each group are combined. There are four main aggregations types available: <code>sum</code>, <code>min</code>, <code>max</code>, and <code>avg</code>. There are also <code>count</code>, <code>p50</code>, <code>p75</code>, <code>p95</code>, <code>p99</code>, <code>0-sum</code>, <code>1-avg</code>, <code>100-avg</code>. | |||

===Time Aggregation=== | |||

Datadog stores a large volume of points, and in most cases it’s not possible to display all of them on a graph. There would be more datapoints than pixels. Datadog uses time aggregation to solve this problem by combining data points into time buckets. For example, when examining four hours, data points are combined into two-minute buckets. This is called a '''rollup'''. As the time interval you’ve defined for your query increases, the granularity of your data becomes coarser. | |||

There are five aggregations you can apply to combine your data in each time bucket: <code>sum</code>, <code>min</code>, <code>max</code>, <code>avg</code>, and <code>count</code>. By default, <code>avg</code> is applied, in which case <code>rollup(...)</code> does not show up in the metric query. | |||

<font size=-1> | |||

space_agg:metric{...}.rollup(max, 60) | |||

</font> | |||

Time aggregation is always applied in every query you make. | |||

===Operations=== | |||

===Functions=== | |||

Graph values can be modified with mathematical functions. This can mean performing arithmetic between an integer and a metric (for example, multiplying a metric by 2). Or performing arithmetic between two metrics (for example, creating a new timeseries for the memory utilization rate like this: jvm.heap_memory / jvm.heap_memory_max). Functions are optional. | |||

===Rollup=== | |||

<code>rollup(avg, 3600)</code> | |||

==Other Examples== | |||

<font size=-1> | |||

avg:myapp.smoketest.run_time{$cluster_name}/1000 | |||

</font> | |||

=<span id='Tag'>Tags</span>= | |||

{{External|https://docs.datadoghq.com/getting_started/tagging/using_tags/}} | |||

=<span id='Event'></span>Events= | |||

{{External|https://docs.datadoghq.com/events/}} | |||

Events are records of notable changes relevant for managing and troubleshooting IT operations, such as code deployments, service health, configuration changes or monitoring alerts. | |||

<font color=darkkhaki> | |||

TO PROCESS: | |||

* https://docs.datadoghq.com/events/ | |||

* https://docs.datadoghq.com/events/guides/ | |||

</font> | |||

=Agent= | =Agent= | ||

| Line 16: | Line 131: | ||

DogStatsD accepts [[#Custom_Metric|custom metrics]],[[#Event|events]] and service checks over UDP and periodically aggregates them and forwards them to Datadog. | DogStatsD accepts [[#Custom_Metric|custom metrics]],[[#Event|events]] and service checks over UDP and periodically aggregates them and forwards them to Datadog. | ||

=<span id='Monitor'></span>Monitors and Alerting= | |||

{{ | {{Internal|Datadog Concepts Monitors and Alerting#Overivew|Monitors and Alerting}} | ||

=Unified Service Tagging= | =Unified Service Tagging= | ||

There are three reserved tags: "env", "service", "version". | There are three reserved tags: "env", "service", "version". | ||

=Dashboard= | =Dashboard= | ||

{{Internal|Datadog Dashboard#Overview|Dashboard}} | {{Internal|Datadog Dashboard#Overview|Dashboard}} | ||

=Metrics Explorer= | |||

Tool to browse arbitrary metrics, by selecting their [[#Metric_Name|name]]. | |||

=Notebook= | |||

=Security= | =Security= | ||

| Line 41: | Line 152: | ||

An API key is required by the [[#Agent|Datadog Agent]] to submit metrics and events to Datadog. The API keys are also used by other third-party clients, such as, for example, the [[Managing_Datadog_with_Pulumi#Datadog_Resource_Provider|Pulumi Datadog resource provider]], which provisions infrastructure on the Datadog backend. API keys are unique to an organization. To see API Keys: Console → Hover over the user name at the bottom of the left side menu → Organization Settings → API Keys. | An API key is required by the [[#Agent|Datadog Agent]] to submit metrics and events to Datadog. The API keys are also used by other third-party clients, such as, for example, the [[Managing_Datadog_with_Pulumi#Datadog_Resource_Provider|Pulumi Datadog resource provider]], which provisions infrastructure on the Datadog backend. API keys are unique to an organization. To see API Keys: Console → Hover over the user name at the bottom of the left side menu → Organization Settings → API Keys. | ||

To invoke into the API, the client expects the environment variable <code>DATADOG_API_KEY</code> to be set. | |||

An API key is unique to an [[#Organization|organization]]. | |||

==Application Key== | ==Application Key== | ||

{{External|https://docs.datadoghq.com/account_management/api-app-keys/#application-keys}} | {{External|https://docs.datadoghq.com/account_management/api-app-keys/#application-keys}} | ||

Application keys, in conjunction with the organization’s [[#API_Key|API key]], give users access to Datadog’s programmatic API. Application keys are associated with the user account that created them and by default have the permissions and scopes of the user who created them. To see or create Application Keys: Console → Hover over the user name at the bottom of the left side menu → Organization Settings → Application Keys. | Application keys, in conjunction with the organization’s [[#API_Key|API key]], give users access to Datadog’s programmatic API. Application keys are associated with the user account that created them and by default have the permissions and scopes of the user who created them. To see or create Application Keys: Console → Hover over the user name at the bottom of the left side menu → Organization Settings → Application Keys. | ||

=API= | |||

{{Internal|Datadog API|Datadog API}} | |||

=Kubernetes Support= | |||

<font color=darkkhaki>Understand this:</font> | |||

<syntaxhighlight lang='yaml'> | |||

apiVersion: apps/v1 | |||

kind: Deployment | |||

metadata: | |||

name: myapp | |||

spec: | |||

template: | |||

metadata: | |||

annotations | |||

ad.datadoghq.com/myapp.check_names: '["myapp"]' | |||

ad.datadoghq.com/myapp.init_configs: '[{"is_jmx": true, "collect_default_metrics": true}]' | |||

ad.datadoghq.com/myapp.instances: '[{"host": "%%host%%","port":"19081"}]' | |||

spec: | |||

[...] | |||

</syntaxhighlight> | |||

Latest revision as of 20:47, 25 May 2022

Internal

Overview

Datadog is an observability platform that includes products for monitoring, alerting, metrics, dashboards, logs, synthetics, user monitoring, and CI/CD (how?). Datadog is API-driven.

Organization

Route of a Metric from Application to Dashboard

Application (specialized library) → metric → DogStatsD → Datadog Backend → metric → Dashboard

The metrics are generated by an application-level library, such as Micrometer. For more details, see Metric Lifecycle below. The Datadog agent annotates the metric with additional tags (cluster name, pod name, etc.).

Metrics

Metrics are numerical values that can track anything about your environment over time. Examples: latency, error rates, user signups. Metric data is ingested and stored as a datapoint with a value and a timestamp. The timestamp is rounded to the nearest second. If there is more than one value with the same timestamp, the latest received value overwrites the previous one. A sequence of datapoints is stored as a timeseries. There are standard metrics, such as CPU and memory, but business-specific metrics can also be defined. Those are custom metrics. Metrics can be visualized in dashboards, Metrics Explorer and Notebooks.
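The rounding and overwrite behavior described above can be illustrated with a minimal sketch. The function name store_datapoint and the in-memory dictionary are hypothetical and only model the described semantics; they are not how Datadog stores data.

```python
def store_datapoint(series: dict, timestamp: float, value: float) -> None:
    """Store a value under its timestamp rounded to the nearest second.

    A later value with the same rounded timestamp overwrites the earlier one.
    """
    series[round(timestamp)] = value


series = {}
store_datapoint(series, 1653511620.2, 10.0)
store_datapoint(series, 1653511620.4, 12.0)  # same second: replaces 10.0
store_datapoint(series, 1653511621.1, 7.0)   # next second: new datapoint
```

After these three calls the series holds two datapoints, because the first two submissions round to the same second.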

Metric Name

Valid characters?

Metric Tags

Metric Lifecycle

Metrics are created by application-level specialized libraries, such as Micrometer. For example, Micrometer creates measurements, which are semantically equivalent. The metric has a name, and it can optionally have one or more tags.

Metrics can be sent to Datadog from:

- Datadog-supported integrations. More: https://docs.datadoghq.com/integrations/.

- Directly from the Datadog platform, for example counting errors showing up in the logs and publishing that as a new metric. More: https://docs.datadoghq.com/logs/logs_to_metrics/.

- Custom metrics generators.

- Datadog Agent, which automatically sends standard metrics such as CPU and disk usage.

Metric Types

Metric types determine which graphs and functions are available to use with the metric.

Count

A count metric adds up all the submitted values in a time interval. This would be suitable for a metric tracking the number of website hits, for example.

Rate

The rate metric takes the count and divides it by the length of the time interval (example: hits per second).

Gauge

A gauge metric takes the last value reported during the interval. This could be used to track values such as CPU or memory, where taking the last value provides a representative picture of the host's behavior during the time interval.
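As a rough illustration of the three types above, the sketch below reduces the values submitted during one interval in the three ways described. The function names and the 10-second interval are assumptions made for the example, not Datadog identifiers.

```python
def count(values):
    """Count: add up all values submitted during the interval."""
    return sum(values)


def rate(values, interval_seconds):
    """Rate: the count divided by the length of the interval."""
    return sum(values) / interval_seconds


def gauge(values):
    """Gauge: the last value reported during the interval."""
    return values[-1]


submitted = [1, 1, 3]  # values received during one hypothetical 10-second interval
hits = count(submitted)
hits_per_second = rate(submitted, 10)
last_value = gauge(submitted)
```

The same three submissions produce a different datapoint depending on the metric type, which is why the type has to match what the metric measures.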

Histogram

A histogram reports five different values summarizing the submitted values: the average, count, median, 95th percentile, and max. This produces five different timeseries. This type of metric is suitable for things like latency, for which it is not enough to know the average value. Histograms allow you to understand how your data was spread out without recording every single data point.
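The five summary values can be sketched as follows. The dictionary keys are illustrative names, and the 95th percentile uses a simple nearest-rank method, which is an assumption: the source does not say how Datadog computes percentiles.

```python
import statistics


def summarize(values):
    """Reduce the values of one interval to the five histogram summaries."""
    ordered = sorted(values)
    # Nearest-rank 95th percentile, computed with integer arithmetic (assumed method).
    rank = max(1, (95 * len(ordered) + 99) // 100)
    return {
        "avg": statistics.mean(ordered),
        "count": len(ordered),
        "median": statistics.median(ordered),
        "p95": ordered[rank - 1],
        "max": ordered[-1],
    }


latencies_ms = [12, 15, 11, 240, 14]  # one slow request among fast ones
summary = summarize(latencies_ms)
```

With this sample the average is 58.4 ms while the median is 14 ms, which shows why the average alone is not enough for latency.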

Distribution

A distribution is similar to a histogram but it summarizes values submitted during a time interval across all hosts in your environment.

Set

Custom Metrics

Metric Query

space-aggregation:metric.name{filter/scope} by {space_aggregation_grouping_by_tag}.time-aggregation

avg:system.io.r_s{app:myapp} by {host}.rollup(avg, 3600)
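The query shape above can be assembled programmatically. The helper below is a hypothetical sketch (build_query is not part of any Datadog client) that concatenates the elements in the order shown and falls back to the {*} scope when no filter is given.

```python
def build_query(space_agg, metric, scope=None, group_by=None, rollup=None):
    """Assemble a metric query string from its elements.

    scope and group_by are lists of strings; rollup is a (method, seconds) pair.
    """
    scope_part = ",".join(scope) if scope else "*"
    query = f"{space_agg}:{metric}{{{scope_part}}}"
    if group_by:
        query += " by {" + ",".join(group_by) + "}"
    if rollup:
        method, seconds = rollup
        query += f".rollup({method}, {seconds})"
    return query


full_query = build_query("avg", "system.io.r_s", ["app:myapp"], ["host"], ("avg", 3600))
bare_query = build_query("avg", "system.io.r_s")
```

The first call reproduces the example query above; the second yields a query with only a space aggregator, a metric name and the {*} scope.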

TO PROCESS:

- https://docs.datadoghq.com/metrics/advanced-filtering

Metric Query Elements

Metric Name in Metric Query

system.io.r_s

See Metric Name above.

Filter/Scope

Metric values returned by the query can be filtered by tags. The {...} section contains a comma-separated list of tag-name:tag-value pairs. This list, also known as the query filter, scopes the query. Examples:

{app:myapp, something:somethingelse}

{status:true,!condition:ready,cluster_name:my-cluster}

{table_name:*event_f_incomplete AND time_hours:0 AND time_interval:0 AND time_unit:hour AND target_hour:-2 AND environment:myenv AND component:mycomp AND NOT (table_name:*tier1_event_f_incomplete)}

The curly braces {...} must always be present in the metric query definition. If there are no particular tags to filter by, use the {*} syntax.
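A simplified model of how a comma-separated filter scopes a query is sketched below. It assumes that every listed pair must match and that a leading ! negates a pair, as in the second example above; wildcards and the AND / NOT forms of the third example are not handled. The function name matches is hypothetical.

```python
def matches(scope, tags):
    """Return True if a timeseries with the given tags falls inside the scope.

    scope is a list of "name:value" terms, optionally prefixed with "!".
    tags is a set of "name:value" strings attached to the timeseries.
    An empty scope models {*} and matches everything.
    """
    for term in scope:
        negated = term.startswith("!")
        pair = term[1:] if negated else term
        if (pair in tags) == negated:
            return False
    return True


scope = ["status:true", "!condition:ready", "cluster_name:my-cluster"]
pending = {"status:true", "condition:pending", "cluster_name:my-cluster"}
ready = {"status:true", "condition:ready", "cluster_name:my-cluster"}
```

Here matches(scope, pending) is true and matches(scope, ready) is false, because the second timeseries carries the excluded tag.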

For more details on tags, see Tags.

Space Aggregation

"Space" refers to the way metrics are distributed over hosts and tags. There are two different aspects of "space" that can be controlled when aggregating metrics: grouping and the space aggregator.

Grouping or Space Aggregator Tags

Grouping defines what constitutes a line on the graph. Grouping splits a single metric into multiple timeseries by tags such as host, container, and region. For example, if you have hundreds of hosts spread across four regions, grouping by region allows you to graph one line for every region. This would reduce the number of timeseries to four.

... by {host} ...

When more than one value is available for a group defined this way, Datadog must be told how to combine the values, using the space aggregator.

Space Aggregator

The space aggregator defines how the metrics in each group are combined. There are four main aggregation types available: sum, min, max, and avg. There are also count, p50, p75, p95, p99, 0-sum, 1-avg, 100-avg.
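Grouping and the space aggregator can be pictured together with a small sketch. It covers only the four main aggregators, and the names space_aggregate and AGGREGATORS, as well as the sample hosts and regions, are made up for the example.

```python
from collections import defaultdict
from statistics import mean

AGGREGATORS = {"sum": sum, "min": min, "max": max, "avg": mean}


def space_aggregate(points, group_tag, aggregator):
    """Group values reported at the same moment by one tag, then combine each group."""
    groups = defaultdict(list)
    for tags, value in points:
        groups[tags[group_tag]].append(value)
    combine = AGGREGATORS[aggregator]
    return {group: combine(values) for group, values in groups.items()}


points = [
    ({"host": "a", "region": "us"}, 10.0),
    ({"host": "b", "region": "us"}, 30.0),
    ({"host": "c", "region": "eu"}, 5.0),
]
by_region = space_aggregate(points, "region", "avg")
```

Three hosts collapse into two values, one per region, which corresponds to one line per region on a graph.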

Time Aggregation

Datadog stores a large volume of points, and in most cases it’s not possible to display all of them on a graph. There would be more datapoints than pixels. Datadog uses time aggregation to solve this problem by combining data points into time buckets. For example, when examining four hours, data points are combined into two-minute buckets. This is called a rollup. As the time interval you’ve defined for your query increases, the granularity of your data becomes coarser.

There are five aggregations you can apply to combine your data in each time bucket: sum, min, max, avg, and count. By default, avg is applied, in which case rollup(...) does not show up in the metric query.

space_agg:metric{...}.rollup(max, 60)

Time aggregation is always applied in every query you make.
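A rollup can be sketched as bucketing points by time and reducing each bucket. The bucket alignment (bucket start = timestamp minus timestamp modulo interval) is an assumption of this sketch, and the function name is only a local stand-in for the query function of the same name.

```python
def rollup(points, method, interval):
    """Combine (timestamp, value) points into buckets of `interval` seconds."""
    reducers = {
        "sum": sum,
        "min": min,
        "max": max,
        "avg": lambda values: sum(values) / len(values),
        "count": len,
    }
    buckets = {}
    for timestamp, value in points:
        start = timestamp - timestamp % interval  # assumed bucket alignment
        buckets.setdefault(start, []).append(value)
    combine = reducers[method]
    return [(start, combine(values)) for start, values in sorted(buckets.items())]


points = [(0, 1.0), (30, 5.0), (60, 2.0), (90, 4.0), (120, 9.0)]
per_minute_max = rollup(points, "max", 60)
```

Five points become three, one per 60-second bucket, each holding the maximum of its bucket.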

Operations

Functions

Graph values can be modified with mathematical functions. This can mean performing arithmetic between an integer and a metric (for example, multiplying a metric by 2), or performing arithmetic between two metrics (for example, creating a new timeseries for the memory utilization rate like this: jvm.heap_memory / jvm.heap_memory_max). Functions are optional.
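Arithmetic between two metrics amounts to combining their timeseries point by point. The sketch below assumes both series share timestamps and simply skips points where the denominator is missing or zero; the function name and sample values are hypothetical.

```python
def divide_series(numerator, denominator):
    """Divide two timeseries point by point, keyed by timestamp."""
    return {
        timestamp: numerator[timestamp] / denominator[timestamp]
        for timestamp in numerator
        if timestamp in denominator and denominator[timestamp] != 0
    }


heap_memory = {0: 256.0, 60: 384.0}      # stands in for jvm.heap_memory
heap_memory_max = {0: 512.0, 60: 512.0}  # stands in for jvm.heap_memory_max
utilization = divide_series(heap_memory, heap_memory_max)
```

The result is a new timeseries with one utilization ratio per timestamp.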

Rollup

rollup(avg, 3600)

Other Examples

avg:myapp.smoketest.run_time{$cluster_name}/1000

Tags

Events

Events are records of notable changes relevant for managing and troubleshooting IT operations, such as code deployments, service health, configuration changes or monitoring alerts.

TO PROCESS:

- https://docs.datadoghq.com/events/

- https://docs.datadoghq.com/events/guides/

Agent

The Datadog agent has a built-in StatsD server, exposed over a configurable port. It's written in Go.

Agent and Kubernetes

TO CONTINUE: https://docs.datadoghq.com/developers/dogstatsd/?tab=kubernetes#

DogStatsD

DogStatsD is a metrics aggregation service bundled with the Datadog agent. DogStatsD implements the StatsD protocol and a few extensions (histogram metric type, service checks, events and tagging).

DogStatsD accepts custom metrics, events and service checks over UDP, periodically aggregates them, and forwards them to Datadog.
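To make the UDP path concrete, the sketch below formats a metric as a StatsD-style datagram with the DogStatsD tag extension and sends it with a plain socket. The helper names are hypothetical, the metric name and tag are made up, and the host and port (127.0.0.1:8125, the commonly used default) are assumptions, since the port is configurable. In practice a client library would normally do this.

```python
import socket


def format_datagram(name, value, metric_type, tags=None):
    """Build a datagram of the form name:value|type|#tag1,tag2."""
    datagram = f"{name}:{value}|{metric_type}"
    if tags:
        datagram += "|#" + ",".join(tags)
    return datagram


def send_metric(datagram, host="127.0.0.1", port=8125):
    """Send one datagram over UDP; fire-and-forget, no reply is expected."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(datagram.encode("utf-8"), (host, port))


page_view = format_datagram("myapp.page.views", 1, "c", ["env:dev"])
```

Calling send_metric(page_view) would submit a count of 1 to an agent listening on the assumed address. Because UDP is connectionless, the call does not report whether anything received it.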

Monitors and Alerting

Unified Service Tagging

There are three reserved tags: "env", "service", "version".

Dashboard

Metrics Explorer

Tool to browse arbitrary metrics, by selecting their name.

Notebook

Security

User

Service Account

API Key

An API key is required by the Datadog Agent to submit metrics and events to Datadog. API keys are also used by third-party clients, such as the Pulumi Datadog resource provider, which provisions infrastructure on the Datadog backend. API keys are unique to an organization. To see API Keys: Console → Hover over the user name at the bottom of the left side menu → Organization Settings → API Keys.

To invoke the API, the client expects the environment variable DATADOG_API_KEY to be set.
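A client can read the key from the environment and fail early when it is missing. The sketch below only builds a request and does not send it. The site URL, the /api/v1/validate path and the DD-API-KEY header are assumptions not taken from this page, and build_validate_request is a hypothetical helper.

```python
import os
import urllib.request


def build_validate_request(site="https://api.datadoghq.com"):
    """Build (but do not send) a key-validation request using DATADOG_API_KEY."""
    api_key = os.environ.get("DATADOG_API_KEY")
    if not api_key:
        raise RuntimeError("DATADOG_API_KEY is not set")
    # Endpoint path and header name are assumptions of this sketch.
    return urllib.request.Request(
        f"{site}/api/v1/validate",
        headers={"DD-API-KEY": api_key},
    )
```

Passing the returned object to urllib.request.urlopen would perform the call; keeping construction separate makes the environment check easy to test without network access.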


Application Key

Application keys, in conjunction with the organization’s API key, give users access to Datadog’s programmatic API. Application keys are associated with the user account that created them and by default have the permissions and scopes of the user who created them. To see or create Application Keys: Console → Hover over the user name at the bottom of the left side menu → Organization Settings → Application Keys.

API

Kubernetes Support

Understand this:

apiVersion: apps/v1
kind: Deployment
metadata:
  name: myapp
spec:
  template:
    metadata:
      annotations:
        ad.datadoghq.com/myapp.check_names: '["myapp"]'
        ad.datadoghq.com/myapp.init_configs: '[{"is_jmx": true, "collect_default_metrics": true}]'
        ad.datadoghq.com/myapp.instances: '[{"host": "%%host%%","port":"19081"}]'
    spec:
      [...]