Lakehouse


=External=

* https://www.cidrdb.org/cidr2021/papers/cidr2021_paper17.pdf Lakehouse: A New Generation of Open Platforms that Unify Data Warehousing and Advanced Analytics by Michael Armbrust, Ali Ghodsi, Reynold Xin, Matei Zaharia

=Internal=

* [[Data Lake]]

* [[Data Warehouse]]

* [[Machine Learning]]

=Overview=

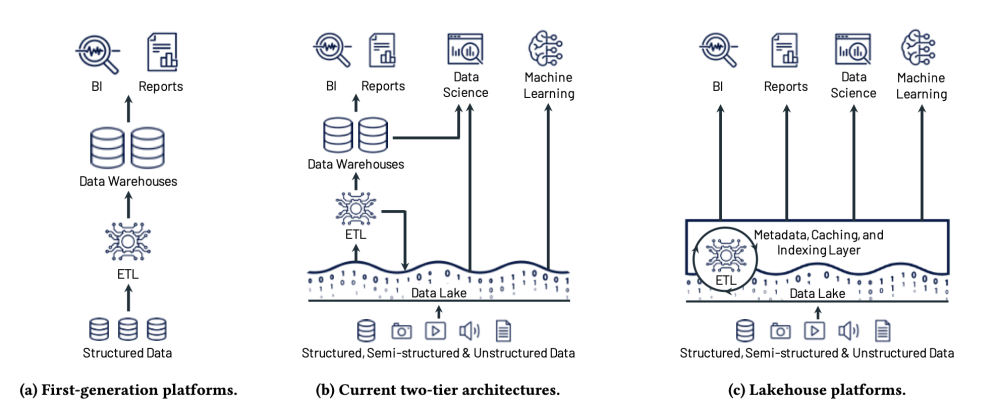

An architectural pattern for data access that is based on open, direct-access [[Data Formats|data formats]] (such as Apache Parquet and ORC), supports machine learning and data science workloads, and offers state-of-the-art performance. An implementation of the Lakehouse pattern is a data management system that provides the management and performance features of a traditional analytical DBMS, such as ACID transactions, data versioning, auditing, indexing, caching and query optimization. It sits on top of a [[Data Lake]] and combines the key benefits of a data lake with those of a [[Data Warehouse|data warehouse]]. It works well in cloud environments where compute and storage are separated.
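One common way to get index-like speedups over plain files is data skipping: per-file statistics, such as the minimum and maximum value of a column, are recorded alongside the data, and the query engine skips any file whose range cannot satisfy the filter. The Python sketch below illustrates only that idea under stated assumptions; the `FileStats` class, the file names and the `event_id` column are hypothetical and do not reflect the API of any particular lakehouse system.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FileStats:
    """Hypothetical per-file statistics recorded by the metadata layer."""
    path: str
    min_value: int  # smallest value of the filtered column in this file
    max_value: int  # largest value of the filtered column in this file


def files_to_scan(files, low, high):
    """Return paths of files whose [min_value, max_value] range overlaps [low, high].

    Any other file cannot contain a matching row, so it is skipped without
    being opened.
    """
    return [f.path for f in files if f.max_value >= low and f.min_value <= high]


files = [
    FileStats("part-000.parquet", 1, 100),
    FileStats("part-001.parquet", 101, 200),
    FileStats("part-002.parquet", 201, 300),
]

# Hypothetical query: ... WHERE event_id BETWEEN 150 AND 210
print(files_to_scan(files, 150, 210))  # ['part-001.parquet', 'part-002.parquet']
```

Here `part-000.parquet` is pruned using only its statistics. Parquet itself can store min/max statistics in its column-chunk metadata; where a given lakehouse keeps such statistics (file footers, the metadata layer, or both) varies by implementation.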

A lakehouse stores data in low-cost object stores (such as Amazon S3) using a standard file format such as [[Apache Parquet]], and also implements a transactional metadata layer on top of the object store that defines which objects belong to each table version.
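The transactional metadata layer can be sketched as an append-only log of numbered commit records stored next to the data files. Each record lists the files added and removed, replaying records 0..N yields the set of files in table version N, and a writer commits by creating the next record only if it does not already exist. The Python sketch below assumes an object store that offers an atomic put-if-absent operation, simulated here in memory; the class names, key layout and JSON record format are hypothetical, not the API of any particular system.

```python
import json


class InMemoryObjectStore:
    """Hypothetical in-memory stand-in for an object store such as S3."""

    def __init__(self):
        self._objects = {}

    def put_if_absent(self, key, data):
        """Write data only if key does not exist yet; return True on success."""
        if key in self._objects:
            return False
        self._objects[key] = data
        return True

    def get(self, key):
        return self._objects[key]

    def exists(self, key):
        return key in self._objects


class TransactionLog:
    """Hypothetical metadata layer mapping table versions to data files."""

    def __init__(self, store, table="events"):
        self.store = store
        self.prefix = f"{table}/_log/"  # hypothetical key layout

    def _key(self, version):
        return f"{self.prefix}{version:020d}.json"

    def latest_version(self):
        """Return the newest committed version, or -1 for an empty table."""
        version = -1
        while self.store.exists(self._key(version + 1)):
            version += 1
        return version

    def commit(self, read_version, add=(), remove=()):
        """Publish version read_version + 1 atomically, or fail on conflict."""
        record = json.dumps({"add": list(add), "remove": list(remove)})
        new_version = read_version + 1
        if not self.store.put_if_absent(self._key(new_version), record):
            raise RuntimeError(f"conflict: version {new_version} already committed")
        return new_version

    def files_at(self, version):
        """Replay records 0..version to get the files in that table version."""
        files = set()
        for v in range(version + 1):
            record = json.loads(self.store.get(self._key(v)))
            files.difference_update(record["remove"])
            files.update(record["add"])
        return sorted(files)


store = InMemoryObjectStore()
log = TransactionLog(store)
v0 = log.commit(log.latest_version(), add=["part-000.parquet", "part-001.parquet"])
v1 = log.commit(v0, add=["part-002.parquet"], remove=["part-000.parquet"])
print(log.files_at(v0))  # ['part-000.parquet', 'part-001.parquet']
print(log.files_at(v1))  # ['part-001.parquet', 'part-002.parquet']

# A writer that still believes v0 is the latest version loses the race.
try:
    log.commit(v0, add=["part-003.parquet"])
except RuntimeError as err:
    print(err)  # conflict: version 1 already committed
```

Because a table version is only a list of immutable files, reading an older version (data versioning) means replaying fewer records, and the log doubles as an audit trail. A production system would typically retry a failed commit after checking whether the concurrent change actually conflicts, rather than failing outright, and real object stores differ in which atomic operations they provide.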

[[File:Lakehouse.png|1000px]]

=Related Concepts=

[[Data Warehouse]]. Schema-on-write. Business Intelligence (BI). Unstructured data. [[Data Lake]]. Schema-on-read. ETL. ELT. [[Machine Learning]]. Data management. Zero-copy cloning. DataFrame and declarative DataFrame API. Data pipeline. Batch job. Streaming pipeline. SQL engines (Spark SQL, [[PrestoDB]], [[Hive]], AWS Athena). Data layout optimizations.

=Implementations=

* [[Apache Iceberg]] | |||

Latest revision as of 23:17, 18 May 2023
