Prometheus Adapter for Kubernetes Metrics APIs

Revision as of 21:42, 15 October 2020

External

- https://github.com/DirectXMan12/k8s-prometheus-adapter

- https://github.com/prometheus-community/helm-charts/tree/main/charts/prometheus-adapter

Internal

Overview

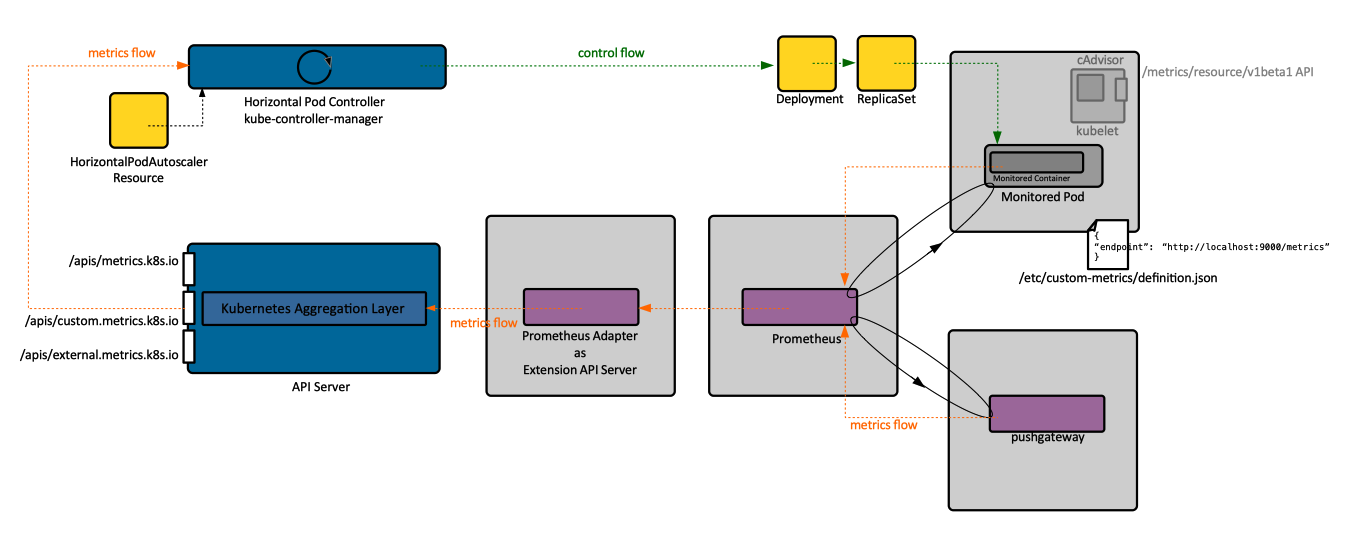

The Prometheus adapter is a Kubernetes API aggregation layer extension. It runs as an extension API server, and the Kubernetes API server proxies the requests for its API to it. It communicates with both Kubernetes and Prometheus and acts as a translator between the two. The API it serves is registered as "custom.metrics.k8s.io/v1beta1".

How it Works

The adapter processes the metrics coming from Prometheus in four steps:

- Discovery: it discovers the available metrics.
- Association: it determines which Kubernetes resource each metric is associated with.
- Naming: it determines how to expose each metric in the custom metrics API.
- Querying: it determines how to query Prometheus to get the actual values.

The adapter performs these steps for every metric. The steps are described declaratively in a rule, and the configuration is a list of rules:

```yaml
rules:
- {...}
- {...}
```

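The discovery step is defined by a "seriesQuery", a Prometheus series selector that returns the matching series (metric names with their label sets), not sample values. The selector below finds http_requests_total series that carry non-empty namespace and pod labels:

```yaml
rules:
- seriesQuery: 'http_requests_total{kubernetes_namespace!="",kubernetes_pod_name!=""}'
```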

The association step maps Prometheus labels to Kubernetes resources. It is configured under the "resources" key, here with an "overrides" map from label names to resources:

```yaml
rules:
- seriesQuery: 'http_requests_total{kubernetes_namespace!="",kubernetes_pod_name!=""}'
  resources:
    overrides:
      kubernetes_namespace: {resource: "namespace"}
      kubernetes_pod_name: {resource: "pod"}
```

This says that the value of each label names an object of the corresponding resource: kubernetes_namespace holds a namespace name and kubernetes_pod_name holds a pod name. For resources in the core Kubernetes API group, the group does not need to be specified. The adapter handles pluralization, so "pod" is equivalent to "pods". Any resource available in the cluster can be used, as long as the series carry a corresponding label.

If the labels follow a consistent pattern, such as "kubernetes_<resource>", the mapping can be expressed with a template instead of an overrides map:

```yaml
resources: {template: "kubernetes_<<.Resource>>"}
```

The available resources, including custom resources, are listed by kubectl api-resources.
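The association step can be sketched in bash (4 or later, for associative arrays) as the reverse lookup the adapter conceptually needs when it builds a query: given a resource, find the label that holds the object names. The overrides map becomes an associative array and the template becomes a plain string substitution. This is an illustration under those assumptions, not the adapter's Go implementation; the function name label_for_resource is hypothetical, and checking the overrides before falling back to the template is a choice made only for this sketch.

```bash
# Hypothetical sketch of the association step (requires bash 4+).
# Overrides from the rule above: label name -> resource.
declare -A overrides=(
  [kubernetes_namespace]=namespace
  [kubernetes_pod_name]=pod
)

# Template from the rule above; <<.Resource>> stands for the resource name.
resource_template='kubernetes_<<.Resource>>'

# Print the label that carries object names for the given resource.
label_for_resource() {
  local resource="$1" label out
  # Use an explicit override if one maps to this resource.
  for label in "${!overrides[@]}"; do
    if [[ ${overrides[$label]} == "$resource" ]]; then
      printf '%s\n' "$label"
      return 0
    fi
  done
  # Otherwise expand the template by plain string substitution.
  out=${resource_template//"<<.Resource>>"/"$resource"}
  printf '%s\n' "$out"
}
```

With these definitions, label_for_resource pod prints kubernetes_pod_name, while label_for_resource service has no override and falls back to the template, printing kubernetes_service. The template alone would map pods to kubernetes_pod, not kubernetes_pod_name, so it only fits when the labels really follow the pattern.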

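The name under which a metric is exposed can be changed with a "name" rule. "matches" is a regular expression applied to the series name, and "as" builds the exposed name from its capture groups. Here http_requests_total is exposed as http_requests_per_second, which fits because the metrics query computes a per-second rate:

```yaml
rules:
- ...
  name:
    matches: "^(.*)_total"
    as: "${1}_per_second"
```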
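To preview the effect of a name rule, the same regular expression can be applied with sed. This is a rough approximation, not the adapter's behavior: the adapter evaluates "matches" with Go's regexp package (RE2 syntax), which is close to but not identical to POSIX extended regular expressions, and ${1} is written \1 in sed. The helper name preview_metric_name is hypothetical.

```bash
# Hypothetical helper approximating the "name" rule above:
#   matches: "^(.*)_total"   as: "${1}_per_second"
# sed -E uses POSIX extended regexps, and \1 plays the role of ${1}.
preview_metric_name() {
  local out
  out=$(printf '%s\n' "$1" | sed -nE 's/^(.*)_total/\1_per_second/p')
  # Print nothing and fail when the series name does not match.
  [[ -n $out ]] || return 1
  printf '%s\n' "$out"
}

preview_metric_name http_requests_total   # prints http_requests_per_second
```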

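The last part specifies the query that produces the numeric values, as a template:

```yaml
rules:
- ...
  metricsQuery: 'sum(rate(<<.Series>>{<<.LabelMatchers>>}[2m])) by (<<.GroupBy>>)'
```

<<.Series>> is replaced with the series name, <<.LabelMatchers>> with label matchers that select the requested objects (the resource label, plus the namespace label for namespaced resources), and <<.GroupBy>> with the label to group by (the resource label).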
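The adapter fills in these placeholders itself for every API request. A minimal bash sketch of that substitution follows, assuming a request for two pods in the default namespace under the overrides rule shown earlier. The helper name render_metrics_query and the pod names sample-app-1 and sample-app-2 are hypothetical, and the matcher string (a regex match on the pod names joined with |) approximates what the adapter generates rather than reproducing its exact output. <<.Series>> receives the original series name, http_requests_total, because that is the name Prometheus knows, not the name exposed by the "name" rule.

```bash
# Hypothetical helper: fill in a metricsQuery template by plain string
# substitution of <<.Series>>, <<.LabelMatchers>> and <<.GroupBy>>.
render_metrics_query() {
  local out="$1" series="$2" matchers="$3" group_by="$4"
  out=${out//"<<.Series>>"/"$series"}
  out=${out//"<<.LabelMatchers>>"/"$matchers"}
  out=${out//"<<.GroupBy>>"/"$group_by"}
  printf '%s\n' "$out"
}

metrics_query='sum(rate(<<.Series>>{<<.LabelMatchers>>}[2m])) by (<<.GroupBy>>)'

# Example: two pods in the default namespace, labels from the overrides rule.
render_metrics_query "$metrics_query" http_requests_total \
  'kubernetes_namespace="default",kubernetes_pod_name=~"sample-app-1|sample-app-2"' \
  kubernetes_pod_name
# prints: sum(rate(http_requests_total{kubernetes_namespace="default",kubernetes_pod_name=~"sample-app-1|sample-app-2"}[2m])) by (kubernetes_pod_name)
```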

Helm Installation

The adapter will be installed in the same namespace as Prometheus, prom:

```bash
helm repo add prometheus-community https://prometheus-community.github.io/helm-charts
helm search repo prometheus-community
helm install -n prom prometheus-adapter prometheus-community/prometheus-adapter -f ./prometheus-adapter-overlay.yaml
```

See Configuration below for prometheus-adapter-overlay.yaml.

Test the installation:

```bash
kubectl get --raw /apis/custom.metrics.k8s.io/v1beta1 | jq
```

```json
{
  "kind": "APIResourceList",
  "apiVersion": "v1",
  "groupVersion": "custom.metrics.k8s.io/v1beta1",
  "resources": [
    {
      "name": "jobs.batch/kubernetes_build_info",
      "singularName": "",
      "namespaced": true,
      "kind": "MetricValueList",
      "verbs": [
        "get"
      ]
    },
    {
      "name": "services/kube_deployment_spec_strategy_rollingupdate_max_surge",
      "singularName": "",
      "namespaced": true,
      "kind": "MetricValueList",
      "verbs": [
        "get"
      ]
    },
    ...
  ]
}
```

Configuration

Start prometheus-adapter-overlay.yaml from the chart's default values:

```bash
helm show values prometheus-community/prometheus-adapter > ~/tmp/prometheus-adapter-overlay.yaml
```

Then point the adapter at the Prometheus service:

```yaml
prometheus:
  url: http://prometheus-kube-prometheus-prometheus.prom.svc
```

Increase the log level when installing the chart; the more detailed adapter logs help with troubleshooting.

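Test by querying a metric for all pods in the default namespace that match the label selector app=sample-app (URL-encoded as app%3Dsample-app):

```bash
kubectl get --raw "/apis/custom.metrics.k8s.io/v1beta1/namespaces/default/pods/*/http_requests?selector=app%3Dsample-app"
```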
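Paths in this API follow the pattern /apis/custom.metrics.k8s.io/v1beta1/namespaces/<namespace>/<resource>/<name>/<metric>, where * stands for all objects of the resource and the optional label selector is passed URL-encoded. A hypothetical bash helper, custom_metric_path, that assembles such paths is sketched below; it encodes only = and , which is enough for simple equality selectors like app=sample-app but not for arbitrary selectors.

```bash
# Hypothetical helper: build a custom metrics API path for namespaced objects.
# Arguments: namespace, resource (plural), object name or '*', metric, [selector]
custom_metric_path() {
  local namespace="$1" resource="$2" name="$3" metric="$4" selector="${5:-}"
  local path="/apis/custom.metrics.k8s.io/v1beta1/namespaces/$namespace/$resource/$name/$metric"
  if [[ -n $selector ]]; then
    # Minimal URL encoding: only '=' and ',' are escaped.
    selector=${selector//=/%3D}
    selector=${selector//,/%2C}
    path+="?selector=$selector"
  fi
  printf '%s\n' "$path"
}

# Usage (requires cluster access):
#   kubectl get --raw "$(custom_metric_path default pods '*' http_requests app=sample-app)"
```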

Configuration Resources:

- Configuration Reference: https://github.com/DirectXMan12/k8s-prometheus-adapter/blob/master/docs/config.md

- Walkthrough for setting up the Prometheus Adapter (the full walkthrough): https://github.com/DirectXMan12/k8s-prometheus-adapter/blob/master/docs/walkthrough.md

- Configuration walkthrough: https://github.com/DirectXMan12/k8s-prometheus-adapter/blob/master/docs/config-walkthrough.md

- Default configuration: https://github.com/DirectXMan12/k8s-prometheus-adapter/blob/master/deploy/manifests/custom-metrics-config-map.yaml

- Chart README configuration section: https://github.com/prometheus-community/helm-charts/tree/main/charts/prometheus-adapter#configuration

- Boilerplate guide: https://github.com/kubernetes-sigs/custom-metrics-apiserver/blob/master/docs/getting-started.md#kinds-resources-and-scopes

Troubleshooting

Tail the adapter logs: errors such as "connection refused", reported when the adapter cannot reach Prometheus, show up there. The horizontal pod autoscaler is the component that calls the metrics APIs, so its logs are useful as well; see Horizontal Pod Autoscaler Controller Troubleshooting.
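The log check can be narrowed to connection problems with a small filter. This is a sketch under assumptions: the deployment name prometheus-adapter and the namespace prom follow from the Helm release installed earlier (verify with kubectl -n prom get deployments), the helper name adapter_connection_errors is hypothetical, and the patterns are common Go network error strings rather than a list of messages the adapter is known to emit.

```bash
# Hypothetical filter: keep log lines that look like failed connections to
# Prometheus. The patterns are common Go network error strings.
adapter_connection_errors() {
  grep -iE 'connection refused|no such host|i/o timeout'
}

# Usage (requires cluster access; names assume the Helm release above):
#   kubectl -n prom logs -f deployment/prometheus-adapter | adapter_connection_errors
```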

To check what the adapter exposes, run:

```bash
kubectl get --raw "/apis/custom.metrics.k8s.io/v1beta1/"
# Show only the resources whose name contains action_queue_depth
kubectl get --raw /apis/custom.metrics.k8s.io/v1beta1 | jq '.resources[] | select(.name | contains("action_queue_depth"))'
```