Software Testing Concepts

| Line 107: | Line 107: | ||

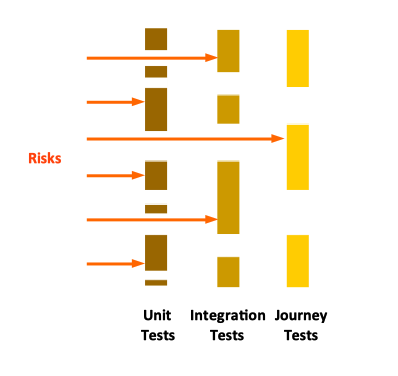

The Swiss cheese testing model is a risk management model juxtaposed on the typical structure of software tests. The idea is that a given layer of testing, or a test mesh, may have holes, like one slice of Swiss cheese, and those holes might miss a defect, or fail to uncover a risk. But when multiple layers (meshes) are combined, the totality of tests look more like a block of Swiss cheese, with no holes that go all the way through, so all the risks are properly managed. | The Swiss cheese testing model is a risk management model juxtaposed on the typical structure of software tests. The idea is that a given layer of testing, or a test mesh, may have holes, like one slice of Swiss cheese, and those holes might miss a defect, or fail to uncover a risk. But when multiple layers (meshes) are combined, the totality of tests look more like a block of Swiss cheese, with no holes that go all the way through, so all the risks are properly managed. | ||

:::[[File:Swiss_Cheese_Model.png]] | :::[[File:Swiss_Cheese_Model.png|398px]] | ||

=Strategies to Improve Testing Speed and Efficiency= | =Strategies to Improve Testing Speed and Efficiency= | ||

Revision as of 21:45, 1 January 2022

External

- xUnit Test Patterns by Gerard Meszaros.

Overview

Automated testing provides an effective mechanism for catching regressions, especially when combined with test-driven development. Well-written tests can also act as a form of system documentation, but tests written carelessly degenerate into meaningless boilerplate. Automated testing is also valuable for covering corner cases that rarely arise in normal operations. Thinking about how to test the code improves the understanding of the problem and in some cases leads to better solutions.

Testing is ultimately about managing risks.

Test-Driven Development (TDD)

Test-Driven Development (TDD) is an agile software development technique in which writing tests guides the development of the functional code. It consists of the following steps, applied repeatedly:

- Write a test for the next bit of functionality you want to add.

- Write the functional code until the test passes.

- Refactor both new and old code to make it well structured.

Writing the tests first and exercising them while writing the code makes the code more modular, and also guards it against breaking when it is changed later: the tests become part of the code base. This is referred to as "building quality in" instead of "testing quality in".
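The cycle described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the slugify function and its test are hypothetical names invented for the example, and the three steps are shown in one file for brevity although in practice they happen one after another.

```python
import re

# Step 1: the test is written first and describes the next bit of behavior.
def test_slugify_replaces_spaces_and_lowercases():
    assert slugify("Hello World") == "hello-world"
    assert slugify("  Swiss  Cheese ") == "swiss-cheese"

# Step 2: just enough functional code to make the test pass.
# Step 3: refactoring (here, giving the pattern a name) is done with the
# test still passing, so the behavior stays protected.
_WHITESPACE = re.compile(r"\s+")

def slugify(title: str) -> str:
    """Hypothetical function under development."""
    return _WHITESPACE.sub("-", title.strip()).lower()

test_slugify_replaces_spaces_and_lowercases()
```

Running the test before slugify exists fails with a NameError, which is the expected "red" state that starts each iteration.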

Automated Test

An automated test verifies an assumption about the behavior of the system and provides a safety mesh that is exercised continuously, in an automated fashion, in most cases on each commit to the repository. The main benefit of automated testing is that the software is continuously verified, which maintains its quality. Another benefit is that tests serve as documentation for the code. Yet another is that they enable refactoring.

Continuous Testing

Continuous testing means the test suite is run as often as possible, ideally after each commit. The CI build and the CD pipeline should run as soon as someone pushes a change to the codebase. Continuous testing provides continuous feedback. Quick feedback, ideally immediately after a change is committed, allows developers to respond with as little interruption to their flow as possible. Additionally, the more often the tests run, the smaller the scope of change examined in each run, so it is easier to figure out where a problem was introduced.

Test Categories

Test Pyramid

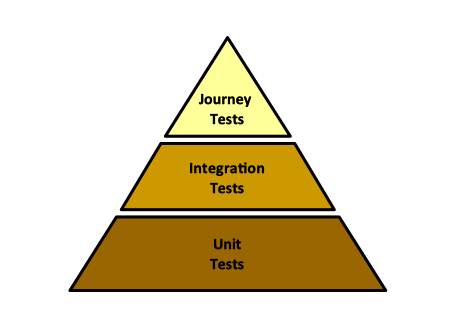

The test pyramid is a well-known model for software testing. It prescribes more, cheaper tests at the lower levels, and fewer, more expensive tests at the higher levels. The lowest level of the pyramid contains unit tests. The middle layer contains integration tests, which cover collections of components assembled together. The highest level contains journey tests, driven through the UI, which test the application as a whole.

The tests in the higher levels of the pyramid cover the same code base as the tests in the lower levels, which means they can be fewer and less comprehensive. They only need to test the functionality that emerges from the interaction of components, in a way the tests at the lower levels cannot. They do not need to prove the behavior of the lower-level components, as that is already done by the lower-level tests.

TO PROCESS: The Practical Test Pyramid by Ham Vocke

Note that the "classical" test pyramid does not apply entirely to infrastructure software. The model for infrastructure code testing is the "infrastructure test diamond".

Unit Test

- Unit Test by Martin Fowler

Unit testing is the testing of the smallest possible part of software, such as a single method, a small set of related methods or a class. In practice we test logical units, which can be a method, a single class or multiple classes. A unit test has the following characteristics:

- It should be automated. Continually verified assumptions encourage refactoring. Code should not be refactored without proper automated test coverage.

- It should be reliable - it should fail if, and only if, the production code is broken. If the test starts failing for some other reason, for example if the internet connection is not available, that implies the unit testing code is broken.

- It should be fast - not more than a few milliseconds to finish execution.

- It should be self-contained and runnable in isolation, and then in any order as part of the test suite. The unit test should not depend on the result of another test or on the execution order, or external state (such as configuration files stored on the developer's machine). One should understand what is going on in a unit test without having to look at other parts of the code.

- It should not depend on database access, or any long running task. If external dependencies are necessary to test the logic, they should be provided as test doubles.

- It should be time (time of the day, timezone) and location independent.

- It should be meaningful. Getter or setter testing is not meaningful.

- It should be usable as documentation: readable and expressive.
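Several of these characteristics (fast, self-contained, time independent, readable) can be illustrated together. The sketch below is a hypothetical example, not taken from any particular code base: the greeting function receives the current time as a parameter instead of reading the system clock, so the tests give the same result at any time of day, in any timezone, and in any order.

```python
from datetime import datetime, timezone

def greeting(now: datetime) -> str:
    """Hypothetical unit under test; the caller supplies the time."""
    return "Good morning" if now.hour < 12 else "Good afternoon"

def test_greeting_before_noon():
    # A fixed instant makes the test independent of when and where it runs.
    fixed = datetime(2022, 1, 1, 9, 0, tzinfo=timezone.utc)
    assert greeting(fixed) == "Good morning"

def test_greeting_after_noon():
    fixed = datetime(2022, 1, 1, 15, 0, tzinfo=timezone.utc)
    assert greeting(fixed) == "Good afternoon"
```

Neither test shares state with the other, so each can run in isolation or as part of a suite.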

External Dependency

The following are examples of situations when the test relies on external dependencies:

- The test acquires a database connection and fetches/updates data.

- The test connects to the internet and downloads files.

- The test interacts with a mail server to send an e-mail.

- The test looks up JNDI objects.

- The test invokes a web service.

Integration Test

Integration tests cover collections of components, assembled together and interacting with each other via their interfaces. Integration tests depend on real external dependencies, such as databases, and they are inherently slower than unit tests. Integration tests should be automated, but they should run outside the continuous unit test feedback loop.

UI-Driven Journey Test

The journey tests, driven through the UI, test the application as a whole.

Acceptance Test

Acceptance tests are written by analysts and other stakeholders, in a user story manner.

Other Kinds of Tests

When people think about automated testing, they generally think about functional tests like unit tests, integration tests or UI-driven journey tests. However, the scope of risks is broader than functional defects, so the scope of validation should be broader as well. Constraints and requirements beyond the purely functional ones are called Non-Functional Requirements (NFR) or Cross-Functional Requirements (CFR) (see A Decade of Cross Functional Requirements (CFRs) by Sarah Taraporewalla):

Code Quality

Security

Compliance

Performance

Scalability

Automated tests can prove that scaling works correctly.

Availability

Automated tests can prove that failover works.

Test Suite

A test suite is a collection of automated tests that are run as a group.

Test Double

A test double is meant to replace a real external dependency in the unit test cycle, isolating it from the real dependency and allowing the test to run in standalone mode. This may be necessary either because the external dependency is unavailable, or the interaction with it is slow. The term was introduced by Gerard Meszaros in his xUnit Test Patterns book.

Dummy

A dummy object is passed as a mandatory parameter object but is not directly used in the test code or the code under test. The dummy object is required for the creation of another object required in the code under test. When implemented as a class, all methods should throw a "Not Implemented" runtime exception.
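A minimal sketch of a dummy, assuming a hypothetical PriceCalculator whose constructor requires a logger that the tested code path never touches. All names are invented for the illustration.

```python
class DummyLogger:
    """Dummy: fills a mandatory parameter and is never expected to be used."""

    def log(self, message: str) -> None:
        # Fails loudly if the code under test unexpectedly relies on it.
        raise NotImplementedError("DummyLogger must not be called")

class PriceCalculator:
    """Hypothetical code under test."""

    def __init__(self, logger, tax_rate: float):
        self._logger = logger      # required, but unused on the tested path
        self._tax_rate = tax_rate

    def gross(self, net: float) -> float:
        return round(net * (1 + self._tax_rate), 2)

def test_gross_price():
    calculator = PriceCalculator(DummyLogger(), tax_rate=0.25)
    assert calculator.gross(100.0) == 125.0
```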

Stub

A stub delivers indirect inputs to the caller when the stub's methods are invoked. Stubs are programmed only for the test scope. Stubs' methods can be programmed to return hardcoded results or to throw specific exceptions. Stubs are useful in impersonating error conditions in external dependencies. The same result can be achieved with a mock.
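As a hedged illustration, the stub below impersonates a hypothetical exchange-rate service. It can be programmed either with a hardcoded rate or with an exception, which makes it possible to exercise the error-handling path of the code under test without a real outage. The names RateServiceStub and convert are assumptions made for the example.

```python
class RateServiceStub:
    """Stub: returns a hardcoded rate or raises a preprogrammed error."""

    def __init__(self, rate=None, error=None):
        self._rate = rate
        self._error = error

    def current_rate(self, currency: str) -> float:
        if self._error is not None:
            raise self._error
        return self._rate

def convert(amount: float, currency: str, rate_service) -> float:
    """Hypothetical code under test: returns the amount unconverted
    when the rate service cannot be reached."""
    try:
        return amount * rate_service.current_rate(currency)
    except ConnectionError:
        return amount

def test_convert_uses_rate():
    assert convert(10.0, "EUR", RateServiceStub(rate=2.0)) == 20.0

def test_convert_survives_outage():
    stub = RateServiceStub(error=ConnectionError("down"))
    assert convert(10.0, "EUR", stub) == 10.0
```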

Spy

A spy is a variation of a stub but instead of only setting the expectation, a spy records the method calls made to the collaborator. A spy can act as an indirect output of the unit under test and can also act as an audit log.
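A spy can be sketched as a hand-written class that keeps a record of the calls it receives. In the hypothetical example below, the register function produces no return value, so the recorded calls are the only way for the test to observe what happened.

```python
class MailerSpy:
    """Spy: records every call so the test can inspect it afterwards."""

    def __init__(self):
        self.sent = []   # acts as the audit log

    def send(self, to: str, subject: str) -> None:
        self.sent.append((to, subject))

def register(email: str, mailer) -> None:
    """Hypothetical code under test: its only effect is an outgoing mail."""
    mailer.send(email, "Welcome")

def test_register_sends_welcome_mail():
    spy = MailerSpy()
    register("a@example.com", spy)
    assert spy.sent == [("a@example.com", "Welcome")]
```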

Mock

A mock object is a combination of a stub and a spy. It stubs methods to return values and throw exceptions, like a stub, but it also acts as an indirect output for the code under test, like a spy. A mock object fails a test if an expected method is not invoked, or if the parameters of the method do not match. The Mockito framework provides an API to mock objects.
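The same idea can be sketched in Python with the standard library's unittest.mock module, used here in place of Mockito purely for illustration. The checkout function and the gateway collaborator are hypothetical.

```python
from unittest.mock import Mock

def checkout(cart_total: float, gateway) -> str:
    """Hypothetical code under test: charges the gateway, reports the outcome."""
    return "paid" if gateway.charge(cart_total) else "declined"

def test_checkout_charges_gateway():
    gateway = Mock()
    gateway.charge.return_value = True      # stub side: canned return value

    assert checkout(42.0, gateway) == "paid"

    # Verification side: raises AssertionError unless charge() was called
    # exactly once with this argument.
    gateway.charge.assert_called_once_with(42.0)

def test_checkout_propagates_gateway_errors():
    gateway = Mock()
    gateway.charge.side_effect = TimeoutError("gateway timeout")  # stubbed exception
    try:
        checkout(42.0, gateway)
    except TimeoutError:
        pass
    else:
        raise AssertionError("expected TimeoutError to propagate")
```

If checkout stopped calling charge, or called it with a different amount, the first test would fail on the verification line even though no return value changed.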

Fake

A fake object is a test double with real logic, unlike a stub, but it is much simpler and cheaper than the real external dependency. This way, the external dependencies of the unit are replaced so that the output of the dependent objects can be controlled and observed from the test. A classic example is a database fake, which is an entirely in-memory, non-persistent database that is otherwise fully functional. By contrast, a database stub would return a fixed value.

Fake objects are extensively used in legacy code, in scenarios like:

- the real object cannot be instantiated (such as when the constructor reads a file or performs a JNDI lookup).

- the real object has slow methods, such as a calculate() method that invokes a load() method that reads from a database.

Fake objects are working implementations. A fake class extends the original class, but it usually performs some sort of hack that makes it unsuitable for production.
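The legacy scenario with calculate() and load() can be sketched as follows. The Report class is a hypothetical stand-in for the legacy code, and its load() raises an error here only to show that the test never reaches the database.

```python
class Report:
    """Hypothetical legacy class whose load() would read from a database."""

    def load(self):
        raise RuntimeError("would open a database connection")

    def calculate(self) -> float:
        rows = self.load()
        return sum(rows) / len(rows)

class FakeReport(Report):
    """Fake: extends the original and replaces load() with in-memory data.
    The real calculate() logic is inherited unchanged."""

    def __init__(self, rows):
        self._rows = list(rows)

    def load(self):
        return self._rows

def test_calculate_averages_loaded_rows():
    assert FakeReport([2.0, 4.0, 6.0]).calculate() == 4.0
```

Overriding load() is the "hack" that makes the fake unsuitable for production: it keeps the calculation logic real while cutting off the slow dependency.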

"Given, When, Then" Testing

Swiss Cheese Testing Model

The Swiss cheese testing model is a risk management model juxtaposed on the typical structure of software tests. The idea is that a given layer of testing, or a test mesh, may have holes, like one slice of Swiss cheese, and those holes might miss a defect, or fail to uncover a risk. But when multiple layers (meshes) are combined, the totality of tests looks more like a block of Swiss cheese, with no holes that go all the way through, so all the risks are properly managed.
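One way to picture the model is with sets: each layer detects some subset of the risks, and the holes of a layer are the risks it misses. The risk names and layer contents below are invented for the illustration and do not describe any real test suite.

```python
# Hypothetical risks and the subset each test layer is able to detect.
ALL_RISKS = {"logic error", "broken wiring", "bad config", "slow query"}

LAYERS = {
    "unit": {"logic error"},
    "integration": {"broken wiring", "slow query"},
    "journey": {"broken wiring", "bad config"},
}

def holes(detected: set) -> set:
    """Risks that slip through a layer, or through a combination of layers."""
    return ALL_RISKS - detected

def combined(layers: dict) -> set:
    """Everything detected by at least one layer."""
    return set().union(*layers.values())

# Every single slice has holes; stacked together, none goes all the way through.
for name, detected in LAYERS.items():
    assert holes(detected), f"{name} layer alone should miss something"
assert holes(combined(LAYERS)) == set()
```

In this toy data set the combined layers leave no hole; in a real system the same calculation would show which risks still need a layer of their own.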

Strategies to Improve Testing Speed and Efficiency

Divide the System into Small, Tractable Components

Design the system as a set of small, loosely coupled components that can be tested independently. Testing a component in isolation with unit tests at the lower level of the test hierarchy is faster and less expensive. A smaller component has a smaller surface area of risk. Testing component interaction is still needed, but it requires fewer of the more complex and expensive tests.

Use Test Doubles

External dependencies impact testing, especially if you need to rely on someone else to provide instances to support the tests. Even if they are available, they may be slow, expensive, unreliable and might have inconsistent state, especially if they are shared in testing. A strategy to speed up testing and make it more deterministic is to use test doubles at the unit and integration test level, as part of the progressive testing strategy. Actual dependencies might be used for testing in the later stages, but the number of high level tests that use them should be minimal.

Use Progressive Testing

Progressive testing means running the cheaper and faster unit tests first, to get quicker feedback if they fail, then running the integration tests, and only running the journey tests if all lower-level tests pass. The Swiss cheese model provides an explanation of why this approach makes sense.
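The ordering can be sketched as a small runner that executes stages from cheapest to most expensive and stops at the first failure. The stage names follow the text; the runner itself and the lambda stand-ins are assumptions made for the example, where a real pipeline would invoke the actual test suites.

```python
from typing import Callable, List, Tuple

Stage = Tuple[str, Callable[[], bool]]

def run_progressively(stages: List[Stage]) -> List[str]:
    """Run stages in order, cheapest first, and stop at the first failure.

    Returns the names of the stages that were actually executed.
    """
    executed = []
    for name, run in stages:
        executed.append(name)
        if not run():
            break   # skip the more expensive stages that follow
    return executed

# Hypothetical stage runners; each returns True when its suite passes.
stages = [
    ("unit", lambda: True),
    ("integration", lambda: False),   # simulated failure
    ("journey", lambda: True),
]

executed = run_progressively(stages)
```

With the simulated integration failure, the journey stage is never started, so the feedback arrives without paying for the slowest tests.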

Testing and Continuously Delivering Infrastructure Code

To Process

- Mocks Aren't Stubs by Martin Fowler