WildFly HornetQ-Based Messaging Subsystem Concepts

Revision as of 00:25, 14 May 2016

External

- Messaging chapter in "EAP 6.4 Administration and Configuration Guide" https://access.redhat.com/documentation/en-US/JBoss_Enterprise_Application_Platform/6.4/html/Administration_and_Configuration_Guide/chap-Messaging.html

- HornetQ User Manual http://docs.jboss.org/hornetq/2.4.0.Final/docs/user-manual/html/index.html

Internal

Overview

This page describes the concepts behind the WildFly HornetQ-based messaging subsystem.

Address

Acceptors and Connectors

Acceptor

An acceptor is a HornetQ mechanism that defines which types of connections are accepted by a HornetQ server. A specific acceptor matches a specific connector that initiates the connection. The connectors can be thought of as "the initiating end of the connection" and they are either clients or other server nodes that attempt to cluster.

Connector

A connector is a HornetQ mechanism that defines how a client or another server can connect to a server. The connector information is used by the HornetQ clients. A specific connector matches a specific acceptor. A server may broadcast its connectors over the network, as a way to make itself known and allow clients (and other servers in the same cluster) to connect to it.

Address

Persistence

Paging

Security

HornetQ does not allow creation of unauthenticated connections. The connection's user name and password are authenticated against the "other" security domain, and the user must belong to the "guest" role. The security domain name is not explicitly specified in the configuration, but the default value is "other", and the following declaration has the same effect as the default configuration:

<subsystem xmlns="urn:jboss:domain:messaging:1.2">

<hornetq-server>

...

<security-domain>other</security-domain>

...

For more details about the relationship between security domains and security realms, see Relationship between a Security Realm and a Security Domain.

Users can be added to the ApplicationRealm with add-user.sh. The user can be added to a certain role by assigning it to a "group" with the same name when adding with add-user.sh.

For details on how to secure destinations, see:

For details on how to secure cluster connections, see:

Connection Factory

Sending Messages

send()

By default, non-transactional persistent messages are sent in blocking mode: the sending thread blocks until the acknowledgement for the message is received from the server. The configuration elements that control this behavior, for durable and non-durable messages respectively, are block-on-durable-send and block-on-non-durable-send. The actual values a server is configured with can be obtained via CLI:

/subsystem=messaging/hornetq-server=active-node-pair-A/connection-factory=HighlyAvailableLoadBalancedConnectionFactory:read-attribute(name=block-on-durable-send)

The relationship between block-on-durable-send and block-on-acknowledge is not yet clarified; it is probably related to "asynchronous send acknowledgements". More documentation: http://docs.jboss.org/hornetq/2.4.0.Final/docs/user-manual/html_single/#non-transactional-sends, http://docs.jboss.org/hornetq/2.4.0.Final/docs/user-manual/html_single/#send-guarantees.nontrans.acks, http://docs.jboss.org/hornetq/2.4.0.Final/docs/user-manual/html_single/#asynchronous-send-acknowledgements

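HornetQ allows for an optimization called "Asynchronous Send Acknowledgements": the acknowledgment is sent back on a separate stream, decoupled from the send request. According to the HornetQ user manual, with this feature the send() call does not have to block; the client registers a handler that is notified when the acknowledgment arrives.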

Writing to Journal

Once a persistent message reaches the server, it must be written to the journal. Writing to the journal is controlled by the journal-sync-transactional and journal-sync-non-transactional attributes. Both are true by default.

The actual value can be read with CLI:

/subsystem=messaging/hornetq-server=active-node-pair-A:read-attribute(name=journal-sync-non-transactional)

Also see:

Replication

This section only applies if the server is in HA mode and message replication is enabled. Replication is asynchronous, but it is initiated before the message is written to persistent storage. Full explanation:

Clustering

- EAP 6.4 Administration and Configuration Guide - HornetQ Clustering https://access.redhat.com/documentation/en-US/JBoss_Enterprise_Application_Platform/6.4/html/Administration_and_Configuration_Guide/sect-HornetQ_Clustering.html

Clustering in this context means establishing a mesh of HornetQ brokers. The main purpose of creating a cluster is to spread message processing load across more than one node. Each active node in the cluster acts as an independent HornetQ server and manages its own connections and messages. HornetQ ensures that messages can be intelligently load balanced between the servers in the cluster, according to the number of consumers on each node, and whether they are ready for messages. HornetQ also has the ability to automatically redistribute messages between nodes of a cluster to prevent starvation on any particular node.

Clustering does not automatically ensure high availability. Go here for more details on HornetQ high availability.

Cluster Connection

The elements that turn a HornetQ instance into a clustered HornetQ instance are the presence of one or more cluster connections in the configuration, and the configuration setting that tells the instance that it is clustered: <clustered>true</clustered>. Since EAP 6.1, the <clustered> element is redundant: the mere presence of a cluster connection turns on clustering. For more details see <clustered> post EAP 6.1.

Cluster connections represent unidirectional communication channels between nodes.

An initiator node can open one or more connections to a target node, by explicitly declaring the cluster connection in its configuration, and can use the cluster connection(s) to send payload and traffic control messages into the target node. A core bridge is automatically created internally when a cluster connection is declared to another node.

Core Bridge

A core bridge is ... TODO

A core bridge is automatically created when a cluster connection is declared. Messages are passed between nodes over core bridges. Core bridges consume messages from a source queue and forward them to a target queue deployed on a HornetQ node which may or may not be in the same cluster.

Cluster Connections in Log Messages

The cluster connections appear in log messages as follows:

sf.<cluster-connection-name-as-declared-on-the-initiator-node>.<target-hornetq-node-UUID>

Example:

sf.load-balancing.ec55aece-195d-11e6-9d57-c3467f86a8cb
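When scanning logs, it can help to split such a name back into its two parts. The following is a minimal sketch, not a HornetQ API: the class and method names are hypothetical, and it assumes the node identifier is always a standard UUID, as in the example above.

```java
import java.util.UUID;

/** Hypothetical helper: splits a name of the form sf.<cluster-connection-name>.<node-UUID>. */
final class ClusterConnectionLogName {
    final String connectionName;
    final UUID targetNodeId;

    private ClusterConnectionLogName(String connectionName, UUID targetNodeId) {
        this.connectionName = connectionName;
        this.targetNodeId = targetNodeId;
    }

    static ClusterConnectionLogName parse(String logName) {
        if (!logName.startsWith("sf.")) {
            throw new IllegalArgumentException("not a cluster connection name: " + logName);
        }
        // A UUID never contains a dot, so the last dot separates it from the
        // connection name (which may itself contain dots).
        int lastDot = logName.lastIndexOf('.');
        if (lastDot <= 3) {
            throw new IllegalArgumentException("missing connection name or node id: " + logName);
        }
        String name = logName.substring(3, lastDot);
        // Throws IllegalArgumentException if the suffix is not a valid UUID (assumption: it always is).
        UUID nodeId = UUID.fromString(logName.substring(lastDot + 1));
        return new ClusterConnectionLogName(name, nodeId);
    }
}
```

For the example above, parse(...) yields the connection name declared on the initiator node and the UUID of the target node.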

Cluster Connection Configuration

Configuration elements description is found here:

For more details on how to configure a WildFly HornetQ-based messaging cluster see:

Broadcast and Discovery Groups

Broadcast Group

A broadcast group is a HornetQ mechanism used to advertise connector information over the network. If the HornetQ server is configured for high availability, and thus has an active and a stand-by node, the broadcast group advertises connector pairs: a live server connector and a stand-by server connector.

Broadcast groups internally use either UDP multicast or JGroups channels.

Discovery Group

A discovery group defines how connector information broadcast over a broadcast group is received from the broadcast endpoint. Discovery groups are used by JMS clients and cluster connections to obtain the initial connection information in order to download the actual topology.

A discovery group maintains lists of connectors (or connector pairs, in case the server is HA), one connector per server. Each broadcast updates the connector information.
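The bookkeeping described above can be pictured as a table keyed by server, where every broadcast overwrites the entry of the server that sent it. The sketch below is only a model of that idea, not HornetQ code: the class names, the use of a node id as key, and the connector strings are all assumptions made for illustration.

```java
import java.util.LinkedHashMap;
import java.util.Map;

/** Hypothetical model of the connector table kept by a discovery group. */
final class DiscoveryTable {

    /** Connectors advertised by one server; backup is null for a non-HA server. */
    static final class ConnectorPair {
        final String live;
        final String backup;

        ConnectorPair(String live, String backup) {
            this.live = live;
            this.backup = backup;
        }
    }

    // One entry per server, keyed by an (assumed) unique node id.
    private final Map<String, ConnectorPair> byNodeId = new LinkedHashMap<>();

    /** Each received broadcast replaces whatever was known about that server. */
    void onBroadcast(String nodeId, String liveConnector, String backupConnector) {
        byNodeId.put(nodeId, new ConnectorPair(liveConnector, backupConnector));
    }

    ConnectorPair get(String nodeId) {
        return byNodeId.get(nodeId);
    }

    int size() {
        return byNodeId.size();
    }
}
```

Keying by server keeps the table at one entry per server no matter how many broadcasts arrive, which matches the "one connector per server" description.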

A discovery group implies a broadcast group, so they require either UDP or JGroups.

Open question: does "discovery group" intrinsically mean multicast, or can we have "static" discovery groups where the connectors are statically declared?

Client-Side Load Balancing

All a client needs to do in order to load balance messages across a number of HornetQ nodes is to look up a connection factory that was configured to load balance amongst those nodes. For an example of how such a connection factory is configured see: Configure a ConnectionFactory for Load Balancing.

High Availability

- EAP 6.4 Administration and Configuration Guide - HornetQ High Availability https://access.redhat.com/documentation/en-US/JBoss_Enterprise_Application_Platform/6.4/html/Administration_and_Configuration_Guide/sect-High_Availability.html

- Configure high-availability and fail-over for HornetQ in JBoss EAP 6 https://access.redhat.com/solutions/169683

High Availability in this context means the ability of HornetQ to continue functioning after the failure of one or more nodes. High availability is implemented on the server side by using a pair of active/stand-by (backup) broker nodes, and on the client side by logic that allows client connections to automatically migrate from the active server to the stand-by server in the event of active server failure.

High Availability does not necessarily mean that the load is spread across more than one active node. Go here for more details about HornetQ clustering for load balancing.

Replication Types

For step-by-step instruction on how to configure such a topology see:

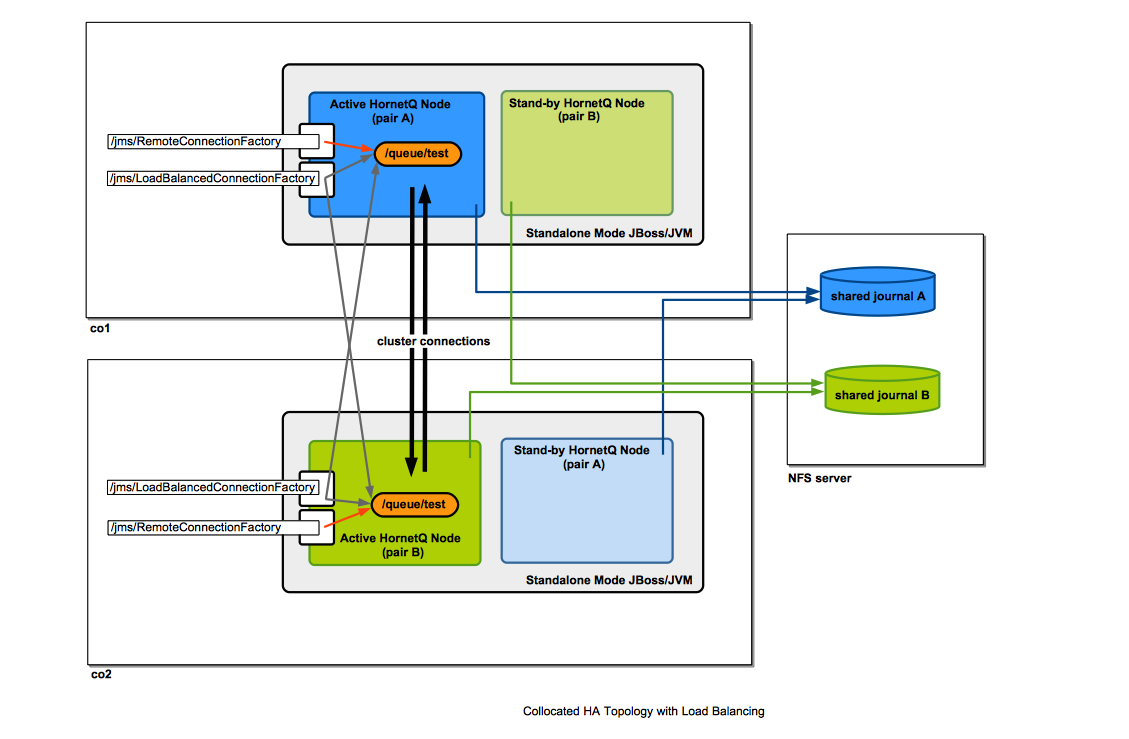

For an example of a shared filesystem-based replication diagram, see Collocated Topology below.

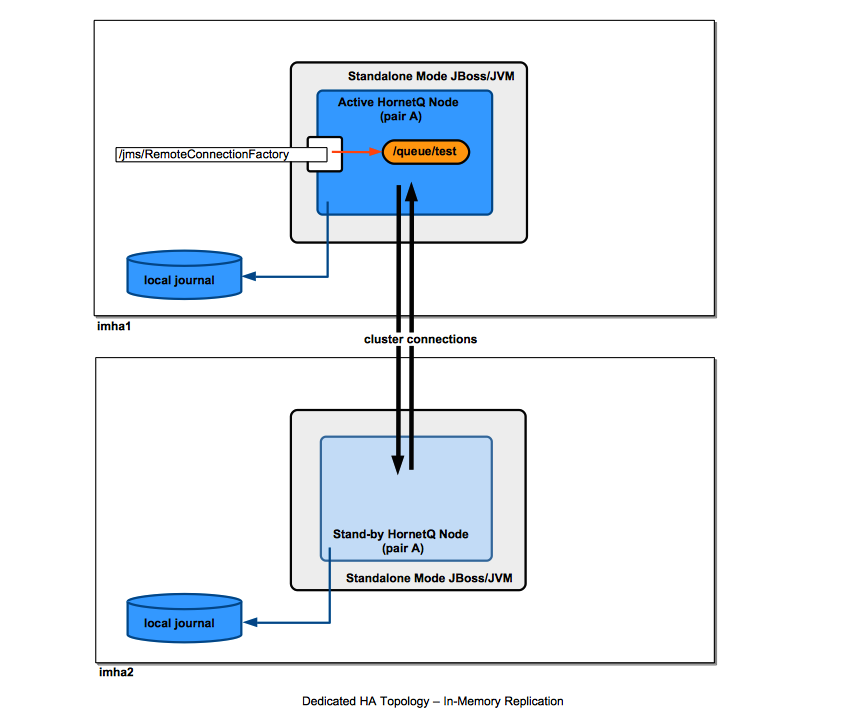

Message Replication

In a message replication configuration, the active and stand-by nodes do not share filesystem-based data stores. The message replication is done via network traffic over cluster connections. A backup server will only replicate with a live server that has the same group name (<backup-group-name>). Note that a backup group has only two nodes: the active and the stand-by.

For message replication, the journals, and not individual messages, are replicated. Since only the persistent messages make it to the journal, only the persistent messages are replicated.

When the backup server comes on-line, it attempts to connect to its live server in order to synchronize with it. While the synchronization is going on, the node is unavailable as a backup server. If the backup server comes on-line and no live server is available, the backup server will wait until the live server is available in the cluster.

If the live server fails, the fully synchronized backup server attempts to become active. The backup server will activate only if the live server has failed and the backup server is able to connect to more than half of the servers in the cluster. If more than half of the other servers in the cluster also fail to respond, this indicates a general network failure, and the backup server will wait and retry the connection to the live server.
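The activation rule in the previous paragraph is a strict-majority check. The sketch below only restates that rule as stated here; it is not HornetQ code, the names are hypothetical, and it assumes the count refers to the other servers in the cluster. How HornetQ behaves when there are no other servers at all is not covered.

```java
/** Hypothetical restatement of the backup activation rule described above. */
final class BackupActivationRule {

    /**
     * @param liveServerFailed whether the backup has lost its live server
     * @param reachableServers number of other cluster servers the backup can connect to
     * @param otherServers     total number of other servers in the cluster (assumed at least 1)
     */
    static boolean shouldActivate(boolean liveServerFailed, int reachableServers, int otherServers) {
        if (otherServers < 1 || reachableServers < 0 || reachableServers > otherServers) {
            throw new IllegalArgumentException("inconsistent server counts");
        }
        // "More than half" is a strict majority; integer arithmetic avoids rounding issues.
        boolean majorityReachable = 2 * reachableServers > otherServers;
        return liveServerFailed && majorityReachable;
    }
}
```

With four other servers, for example, reaching exactly two of them is not enough under this rule, while reaching three is.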

Fail-back: To get to the original state after failover, it is necessary to start the live server and wait until it is fully synchronized with the backup server. When this has been achieved, the original live server will become active only after the backup server is shut down. This happens automatically if the <allow-failback> attribute is set to true.

How is the Replication Done

Configuration

For step-by-step instruction on how to configure such a topology see:

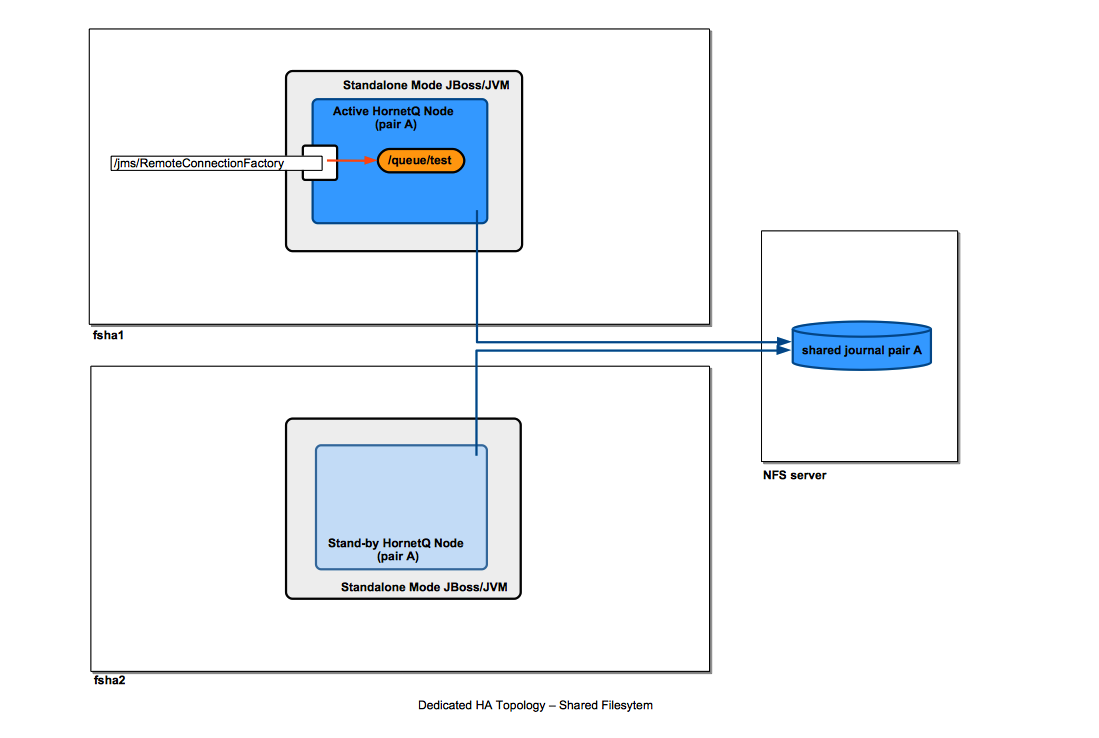

Dedicated Topology

For step-by-step instruction on how to configure such a topology see:

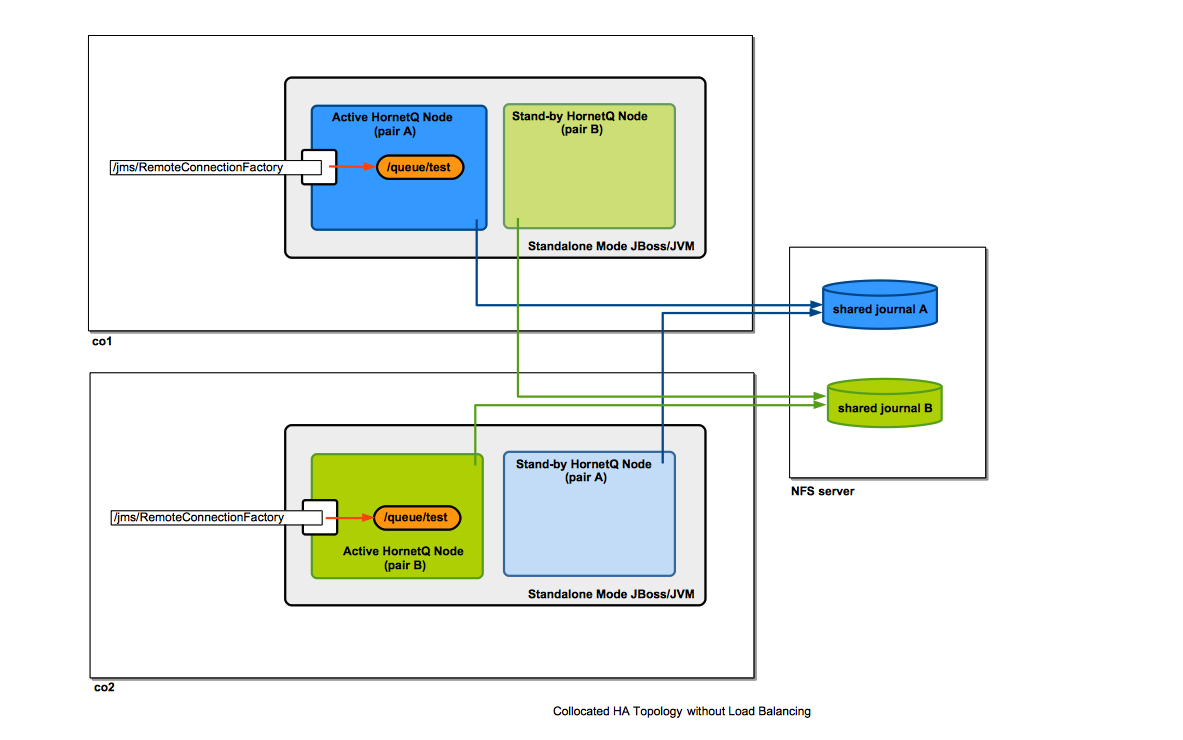

Collocated Topology

The diagram shows an example of a collocated topology where high availability is ensured by writing journals on a shared file system.

For step-by-step instruction on how to configure such a topology see:

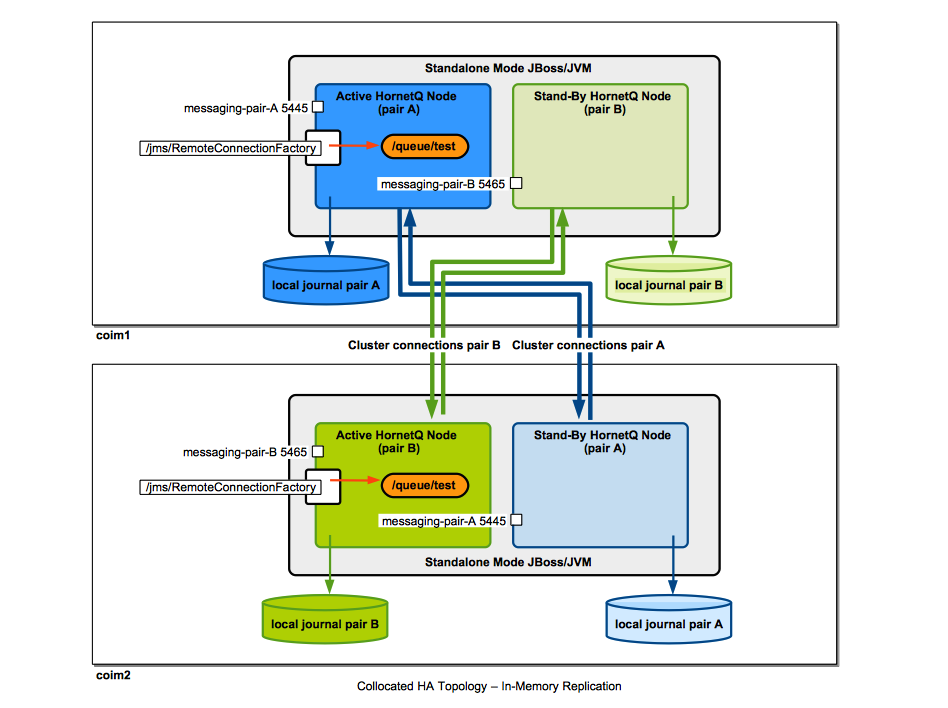

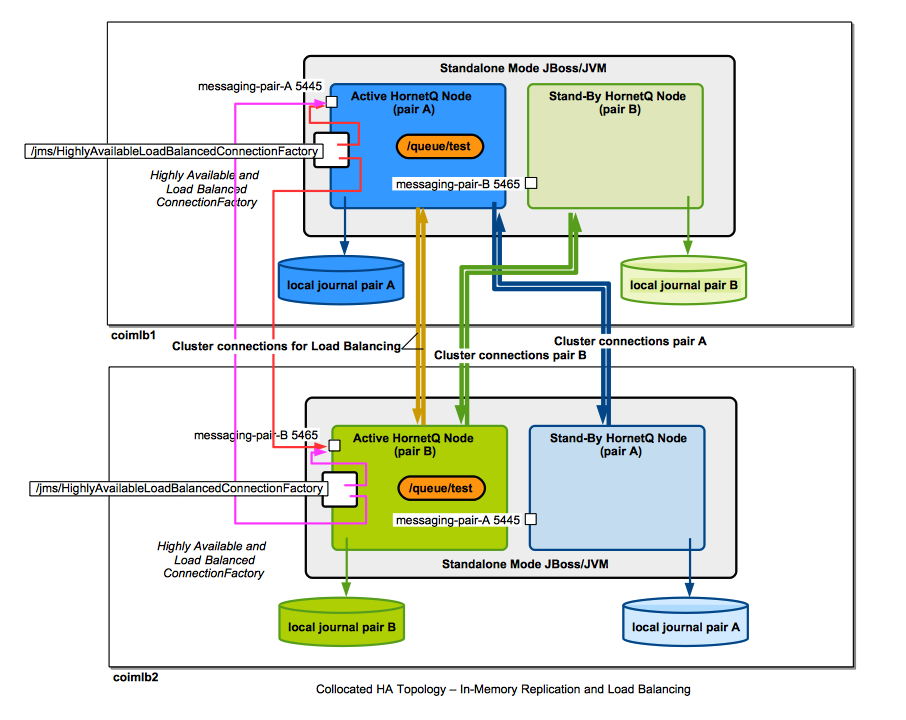

Collocated Topology with Message Replication, no Load Balancing

The diagram shows an example of a collocated topology where high availability is achieved by message replication. No shared filesystem is required.

For step-by-step instruction on how to configure such a topology see:

Collocated Topology with Load Balancing

Collocated Topology with Message Replication and Load Balancing

Server State Replication

TODO Explain this:

HornetQ does not replicate full server state between live and backup servers. When the new session is automatically recreated on the backup it will not have any knowledge of messages already sent or acknowledged in that session. Any in-flight sends or acknowledgments at the time of fail-over might also be lost.

Client-Side Failover

Playground Example

TODO

Failover Limitations

Due to the way HornetQ was designed, failover is not fully transparent and requires the application's cooperation.

There are two notable situations when the application will be notified of live server failure:

- The application performs a blocking operation (for example a message send()). In this situation, if a live server failure occurs, the client-side messaging runtime will interrupt the send operation and make it throw a JMSException.

- The live server failure occurs during a transaction. In this case, the client-side messaging runtime rolls back the transaction.

Automatic Client Fail-Over

- EAP 6.4 Administration and Configuration Guide - Automatic Client Failover https://access.redhat.com/documentation/en-US/JBoss_Enterprise_Application_Platform/6.4/html/Administration_and_Configuration_Guide/sect-High_Availability.html#Automatic_Client_Failover

- EAP 6.4 Administration and Configuration Guide - Application-Level Failover https://access.redhat.com/documentation/en-US/JBoss_Enterprise_Application_Platform/6.4/html/Administration_and_Configuration_Guide/sect-High_Availability.html#Application-Level_Failover

HornetQ clients can be configured with knowledge of live and backup servers, so that in event of live server failure, the client will detect this and reconnect to the backup server. The backup server will then automatically recreate any sessions and consumers that existed on each connection before fail-over, thus saving the user from having to hand-code manual reconnection logic. HornetQ clients detect connection failure when they have not received packets from the server within the time given by 'client-failure-check-period'.
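The detection rule in the last sentence amounts to a timeout on the most recent packet. The following is a simplified model, not the HornetQ client implementation: the class is hypothetical, and timestamps are passed in explicitly so that the logic can be exercised without a real connection or clock.

```java
/** Hypothetical model of failure detection based on a check period. */
final class ConnectionFailureDetector {
    private final long checkPeriodMillis;
    private long lastPacketMillis;

    ConnectionFailureDetector(long checkPeriodMillis, long nowMillis) {
        if (checkPeriodMillis <= 0) {
            throw new IllegalArgumentException("check period must be positive");
        }
        this.checkPeriodMillis = checkPeriodMillis;
        this.lastPacketMillis = nowMillis;
    }

    /** Called whenever any packet arrives from the server. */
    void packetReceived(long nowMillis) {
        lastPacketMillis = nowMillis;
    }

    /** The connection is considered failed once the silence exceeds the check period. */
    boolean isFailed(long nowMillis) {
        return nowMillis - lastPacketMillis > checkPeriodMillis;
    }
}
```

Any packet resets the timer, so a healthy but idle connection stays alive only as long as the server keeps sending something within each period.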

Client code example:

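import java.util.Properties;
import javax.jms.ConnectionFactory;
import javax.naming.Context;
import javax.naming.InitialContext;

// JNDI environment for a remote naming lookup
final Properties env = new Properties();
env.put(Context.INITIAL_CONTEXT_FACTORY, "org.jboss.naming.remote.client.InitialContextFactory");
env.put("jboss.naming.client.connect.timeout", "10000");
// Comma-separated list of servers: live first, then backup
env.put(Context.PROVIDER_URL, "remote://<host1>:4447,remote://<host2>:4447");
env.put(Context.SECURITY_PRINCIPAL, "username");
env.put(Context.SECURITY_CREDENTIALS, "password");
// new InitialContext() and lookup() throw javax.naming.NamingException
Context context = new InitialContext(env);
ConnectionFactory cf = (ConnectionFactory) context.lookup("jms/RemoteConnectionFactory");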

If <host1> (i.e. your "live" server) is down it will automatically try <host2> (i.e. your "backup" server).
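When the list of hosts comes from configuration rather than being hard-coded, the provider URL can be assembled programmatically. This is a small sketch with a hypothetical helper class; it assumes all hosts use the same remoting port and simply reproduces the comma-separated "remote://host:port" format used in the example above.

```java
import java.util.List;
import java.util.stream.Collectors;

/** Hypothetical helper that builds a multi-host PROVIDER_URL value. */
final class ProviderUrls {

    /** Hosts are listed in the order they should be tried: live first, then backup. */
    static String remoteProviderUrl(List<String> hosts, int port) {
        if (hosts.isEmpty()) {
            throw new IllegalArgumentException("at least one host is required");
        }
        return hosts.stream()
                .map(host -> "remote://" + host + ":" + port)
                .collect(Collectors.joining(","));
    }
}
```

The result can be passed directly as the value of Context.PROVIDER_URL.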

If you want the JMS connections to move back to the live server when it comes back, you should set <allow-failback> to "true" on both servers.

Failover in Case of Administrative Shutdown of the Live Server

HornetQ allows the client-side failover behavior to be specified for the case of an administrative shutdown of the live server. There are two options:

- Client does not fail over to the backup server on administrative shutdown of the live server. If the connection factory is configured to contain other live server connectors, the client will reconnect to those; if not, it will issue a warning log entry and close the connection.

- Client does fail over to the backup server on administrative shutdown of the live server. If there are no other live servers available, this is probably a sensible option.
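The two options above can be summarized as a small decision function. This is only a restatement of the behavior described in the list, not HornetQ code: the enum, the method, and the parameter names (including failoverOnShutdown) are hypothetical.

```java
/** Hypothetical summary of client behavior on administrative shutdown of the live server. */
final class ShutdownFailoverPolicy {

    enum Action { FAIL_OVER_TO_BACKUP, RECONNECT_TO_OTHER_LIVE, WARN_AND_CLOSE }

    /**
     * @param failoverOnShutdown  whether the client is configured to fail over on shutdown
     * @param otherLiveConnectors number of other live server connectors in the connection factory
     */
    static Action onAdministrativeShutdown(boolean failoverOnShutdown, int otherLiveConnectors) {
        if (otherLiveConnectors < 0) {
            throw new IllegalArgumentException("connector count cannot be negative");
        }
        if (failoverOnShutdown) {
            return Action.FAIL_OVER_TO_BACKUP;
        }
        // Without failover, the client can only use other live servers it already knows about.
        return otherLiveConnectors > 0 ? Action.RECONNECT_TO_OTHER_LIVE : Action.WARN_AND_CLOSE;
    }
}
```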

WildFly Clustering and HornetQ High Availability

HornetQ High Availability is configured independently of WildFly Clustering, so a configuration in which WildFly nodes are running in a non-clustered configuration but the embedded HornetQ instances are configured for High Availability is entirely possible.

Generic JMS Client with HornetQ

Pre-Conditions for a JMS Client to Work

No JNDI Authentication

WildFly requires invocations into the remoting subsystems to be authenticated. JNDI uses remoting, so in order to make JNDI calls we either need to enable remoting authentication and make remoting client calls under an authenticated identity, or disable remoting authentication. See:

Authenticated User Present

Add an "ApplicationRealm" user that belongs to the "guest" role. That identity will be used to create JMS connections.

For more details on using add-user.sh, see: