Concurrent (Parallel) Programming: Difference between revisions

| Line 9: | Line 9: | ||

Concurrent programming (or concurrency) is the management of multiple tasks that exist, and possibly execute, at the same time. Concurrent programming includes management of task execution, communication between tasks and [[#Synchronization|synchronization]] between tasks. | Concurrent programming (or concurrency) is the management of multiple tasks that exist, and possibly execute, at the same time. Concurrent programming includes management of task execution, communication between tasks and [[#Synchronization|synchronization]] between tasks. | ||

Parallel programming is important for the reasons that will be explained below. | |||

Moore Law (or observation, more precisely) stated that the transistor density on the chip doubles every two years. This observation was true for a while, but processor engineering is reaching the limits of what is physically possible when it comes to shrinking transistors. The difficulties are mainly related to power consumptions and temperature dissipation, generically known as the “power wall”. | |||

The relationship between dynamic power consumed by a transistor and percentage of time spent switching (α), capacitance (C), clock frequency (F) and voltage differential (V) is given by the following equation: | |||

P = αCFV<sup>2</sup> | |||

Smaller transistors have a lower capacitance, so C goes down as the transistor shrinks. | |||

Also, Dennard scaling law says that as transistors get smaller, both voltage and current scale downward, so the V2 term scales down in the equation. | |||

For constant α, whose maximum and desired value is 1, the dynamic power consumption equation would allow increasing the clock frequency, hence the performance of the processor, as C and V decrease. | |||

However, transistors cannot get smaller than a certain size. One of the reasons is that the voltage has to stay above a specific limit, otherwise the noise would become unmanageable. In consequence, the voltage swings cannot get infinitely small. Thinner insulator layers increase static power leakage. Increasing the clock frequency under these circumstances would mean that the dynamic power consumption goes up, the temperature of the circuit goes up, as not sufficient heat can be dissipated fast enough, and the circuit simply melts. | |||

There is another factor limits the overall system's performance even if the processor frequency could be increased further, and that his the Von Neumann bottleneck: the main memory access is orders of magnitude slower than the processor, so even if the processor is fast, it'll idle waiting for memory access. People work around this by onboarding fast caches on processors. | |||

All these factors determined hardware designer to implement a different scaling strategy: not so much shrinking the transistors in a single but packing more cores per chip. Suddenly, there’s not just one single processor that executes instructions serially, one at a time, but more of them, which can execute instruction in a truly parallel fashion. | |||

This development is what makes concurrent programming very relevant. A core is essentially sequential, so in order to use the system effectively, concurrent programming is necessary. Programs must take advantage of parallel hardware, otherwise the majority of the cores in the processor would idle, so concurrent programming support in the language is very important. Even though there is research going on into parallel compilers, attempts to automatically parallelize a program are not going very well, which makes the programmer's job to decompose the task so it renders itself to parallel execution. | |||

Implementations in programming languages: | Implementations in programming languages: | ||

Revision as of 22:46, 31 August 2023

Internal

Overview

Concurrency and parallelism refer to slightly different things, but we discuss about "concurrent (parallel)" programming just because we want "concurrent" and "parallel" search to hit the same article.

Concurrent programming (or concurrency) is the management of multiple tasks that exist, and possibly execute, at the same time. Concurrent programming includes management of task execution, communication between tasks and synchronization between tasks.

Parallel programming is important for the reasons that will be explained below.

Moore Law (or observation, more precisely) stated that the transistor density on the chip doubles every two years. This observation was true for a while, but processor engineering is reaching the limits of what is physically possible when it comes to shrinking transistors. The difficulties are mainly related to power consumptions and temperature dissipation, generically known as the “power wall”.

The relationship between dynamic power consumed by a transistor and percentage of time spent switching (α), capacitance (C), clock frequency (F) and voltage differential (V) is given by the following equation:

P = αCFV2

Smaller transistors have a lower capacitance, so C goes down as the transistor shrinks.

Also, Dennard scaling law says that as transistors get smaller, both voltage and current scale downward, so the V2 term scales down in the equation.

For constant α, whose maximum and desired value is 1, the dynamic power consumption equation would allow increasing the clock frequency, hence the performance of the processor, as C and V decrease.

However, transistors cannot get smaller than a certain size. One of the reasons is that the voltage has to stay above a specific limit, otherwise the noise would become unmanageable. In consequence, the voltage swings cannot get infinitely small. Thinner insulator layers increase static power leakage. Increasing the clock frequency under these circumstances would mean that the dynamic power consumption goes up, the temperature of the circuit goes up, as not sufficient heat can be dissipated fast enough, and the circuit simply melts.

There is another factor limits the overall system's performance even if the processor frequency could be increased further, and that his the Von Neumann bottleneck: the main memory access is orders of magnitude slower than the processor, so even if the processor is fast, it'll idle waiting for memory access. People work around this by onboarding fast caches on processors.

All these factors determined hardware designer to implement a different scaling strategy: not so much shrinking the transistors in a single but packing more cores per chip. Suddenly, there’s not just one single processor that executes instructions serially, one at a time, but more of them, which can execute instruction in a truly parallel fashion.

This development is what makes concurrent programming very relevant. A core is essentially sequential, so in order to use the system effectively, concurrent programming is necessary. Programs must take advantage of parallel hardware, otherwise the majority of the cores in the processor would idle, so concurrent programming support in the language is very important. Even though there is research going on into parallel compilers, attempts to automatically parallelize a program are not going very well, which makes the programmer's job to decompose the task so it renders itself to parallel execution.

Implementations in programming languages:

Parallel vs. Concurrent

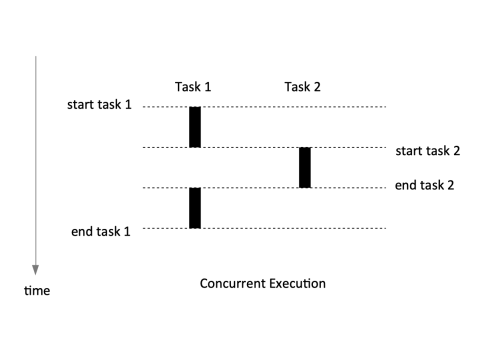

"Concurrent execution" of two tasks means that the tasks execution overlaps. Concurrency can be achieved with serial hardware, such as a processor that executes one instructions at the time. It can be of course achieved on replicated hardware too.

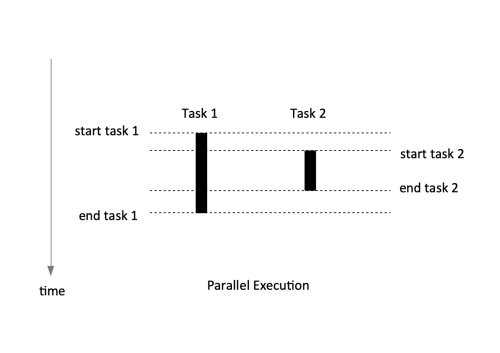

"Parallel execution" means executing two tasks at the same time. Parallelism is only achievable with replicated hardware, usually a multi-core processor. Determining which hardware performs which task is called hardware mapping. Usually this is not controlled by the programmer.

The completion time for concurrent execution is longer than parallel execution.

In concurrent programming, usually the programmer determines which tasks can be executed in parallel, communication between tasks, synchronization between tasks, etc. Usually the programmer does not decide which tasks will be perform in parallel and they they don's specify hardware mapping. That is left to the O/S and the programming language runtime scheduler.

Concurrency improves performance even without parallelism. This is because tasks usually wait for some event (over network, for example) and concurrent programming helps to preempt that task and use the processor for other tasks that are ready for compute. Concurrency can help to "hide latency".

Synchronization

A way to address concurrent updates of shared state in concurrent programming is to use locks. Locks are problematic because they rely of the capacity of the programer to identify problem areas that require concurrent access protection. As the complexity of the program increases, the reliance of this capacity may become problematic.

An alternative approach is to use an actor model.