Go Pipelines

Internal

Overview

A pipeline is a data processing pattern, aimed at processing streams of data. A pipeline consists of a series of stages. The output of a stage is connected to the input of the subsequent stage, unless they happen to be the first and last stage. The input of the first stage is the input of the pipeline. The output of the last stage is the output of the pipeline. The pipeline pattern is powerful because it offers separation of concerns: each stage can implement a different concern. Thus, stages can be modified independently of one another, the stages can be mixed and matched, arranged in fan-out and fan-out topologies, etc.

Generally, the limiting factor on the pipeline is either the generator or the stages that are computationally intensive.

Stage

A stage has the following two properties:

- A stage consumes and returns the same type.

- A stage must be reified by the language so that it may be passed around.

Reification means that the language exposes a concept to the developers so they can work with it directly. Examples: functions, classes.

Stages can be combined at a higher level without modifying the stages themselves. The same stage can be reused multiple times in the same pipeline.

Stages are capable of safely processing stream elements concurrently. A stage reads stream data from a concurrent-safe input and sends it on a concurrent-safe output. This arrangement allows stages to execute independently of one another.

The first stage of a pipeline, which reads the input that consists of discrete value from a source and converts it into stream elements is called generator.

Stream

A stream consists of elements, which are processed one at a time by stages.

Go Pipeline

Go Pipeline Stage

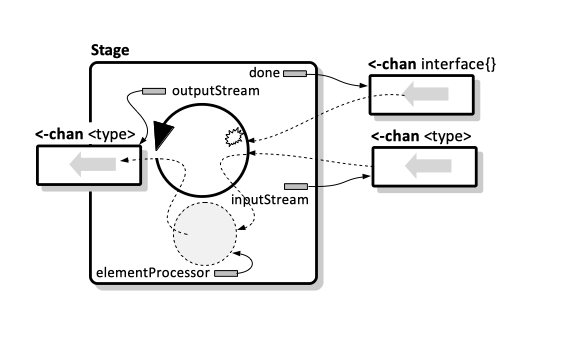

Each stage consists of an (externally) read-only output channel and an internal thread pump that invokes an element-processing function. The stream elements to be processed by the stage are read from a read-only input channel, which usually belongs to the preceding stage in the pipeline. Each element is processed by the element-processing function and the result is written on the stage's output channel. The stage also reads from the done channel, which makes stages to be interconnected in two ways: by the channels that are passed in the subsequent stages of the pipeline and by the done channel. The role of the done channel is to allow us to force the pipeline stages to terminate regardless of the state the pipeline stage is in - waiting on the incoming channel or waiting to send. Closing the done channel will force the pipeline stage to terminate.

The Stage struct looks like this:

// Stage is a structure representing a pipeline stage. The stage creates and manages its own output stream,

// which is an externally read-only channel that is used to send out the elements processed by the stage, and

// a "thread pump" that pulls elements from the input channel, processes them by invoking the element processor

// function on them, and then sends them on the output channel. The stage is preemptable via the "done" channel.

type Stage struct {

name string

done <-chan interface{}

// The read only input stream, it is external, and usually belongs to Stage preceding this one in the pipeline.

in <-chan interface{}

// The externally read only output stream, it is created by the Stage.

out <-chan interface{}

elementProcessor func(e interface{}) interface{}

}

Note that we could use either a concrete type as <some_type> or interface{}. If a generically-written stage needs to work with a specific type, type assertions can be used. Also, a stage that just performs the type assertion could be inserted in the pipeline.

The Stage constructor defines the "thread pump" behavior, in the form of an anonymous function. Among other things, the function closes the out channel when it is not needed anymore. Also, the goroutine exists when something gets written on the done, as an embodiment of the pattern described in the Preventing Goroutines Leak section.

func NewStage(name string, done <-chan interface{}, precedingStage *Stage, elementProcessor func(e interface{}) interface{}) *Stage {

out := make(chan interface{})

stage := &Stage{

name: name,

done: done,

in: precedingStage.out,

out: out,

elementProcessor: elementProcessor,

}

// create and start the thread pump that reads from the input stream, processes elements with

// the element processor function and writes them on the output stream

go func() {

// close the stage when it's done processing all input elements

defer close(out)

// iterate over input elements

for e := range stage.in {

select {

case <-done:

return // the stage may be forcibly closed by writing the "done" channel

case out <- elementProcessor(e):

}

}

}()

return stage

}

Generators

The first stage of the pipeline must read elements from somewhere (a slice, a channel, etc.) and write them to its out channel. In general, the first stage of a pipeline usually reads the input that consists of discrete value from a source and converts it into stream elements. We call this kind of stage a generator. The generator is also a Stage but with a nil input stream.

func NewGenerator(name string, done <-chan interface{}, input []int) *Stage {

outputStream := make(chan interface{})

stage := &Stage{

name: name,

done: done,

out: outputStream,

}

// create and start the thread pump that reads from the input slice and writes them on the output stream

go func() {

// close the stage when it's done processing all input elements

defer close(outputStream)

// iterate over input elements

for _, e := range input {

select {

case <-done:

return // the stage may be forcibly closed by writing the "done" channel

case outputStream <- e:

}

}

}()

return stage

}

Generators can be written to take a function to generate elements.

The Assembled Pipeline

multiplicator := func(e interface{}) interface{} {

i := e.(int)

return i * 2

}

additor := func(e interface{}) interface{} {

i := e.(int)

return i + 1

}

done := make(chan interface{})

defer close(done)

gs := NewGenerator("generator", done, []int{1, 2, 3, 4})

ms := NewStage("multiplicator A", done, gs, multiplicator)

as := NewStage("additor", done, ms, additor)

ms2 := NewStage("multiplicator B", done, as, multiplicator)

for i := range ms2.out {

fmt.Printf("%d\n", i)

}

Fan-Out Pipeline Pattern

The fan-out pipeline pattern consists in starting multiple equivalent stages, each own with its own goroutine, to handle the output of one stage. This pattern is useful when some of the pipeline stages are computationally expensive and can be replicated, allowing the pipeline to send its data stream over multiple, equivalent processing stages that use more CPU in parallel.

A stage of a pipeline is suited for utilizing this pattern if both of the following criteria apply:

- It does not rely on the values that the stage has calculated before (this is important because there is no guarantee in what order the concurrent copies of the stage will run).

- It takes a long time to run.

Fan-In Pipeline

The fan-in pipeline pattern consists in reading multiple parallel stages and multiplexing (combining) their output into a single stage.