System Design

External

- Episode 06 Unqualified Engineer - Jackson Gabbard: Intro to Architecture and Systems Design Interviews https://www.youtube.com/watch?v=ZgdS0EUmn70

Internal

Overview

The goal of system design is to build software systems that are, first and foremost, correct: the system correctly implements the functions it was built to implement. Beyond correctness, systems should aim to maximize reliability, scalability and maintainability.

A Typical System

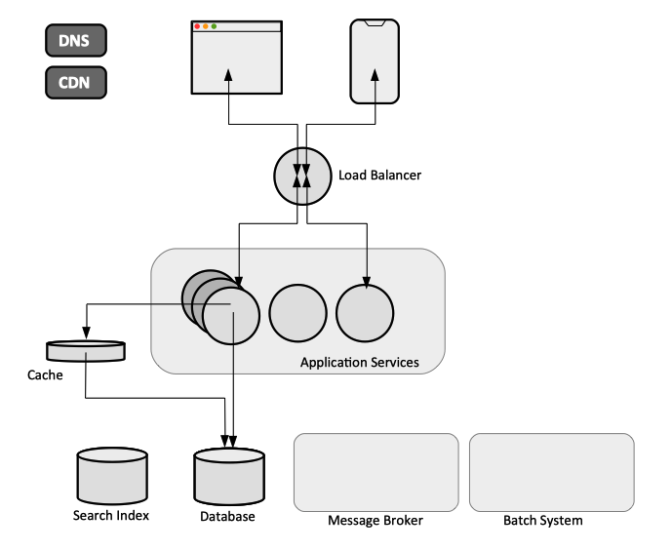

Many web and mobile applications in use today are conceptually similar to the generic system described below. The functionality differs, as do the reliability, scalability and maintainability requirements, but most systems share at least several of the elements described here.

The applications have mobile and browser clients that communicate with the backend via HTTP or WebSocket protocols. They include a business logic layer deployed as a monolith or as a set of microservices, in most cases in containers managed by a container orchestration system such as Kubernetes. They persist their data in databases, either relational or NoSQL. To speed up reads, they may use caches that remember the results of expensive operations. If users need to search data by keyword, search indexes can be integrated. They may rely on message brokers or streaming systems for asynchronous processing and better decoupling. If they need to periodically process large amounts of accumulated data, they can use batch processing tools.

Depending on the actual requirements of the system, these building blocks are implemented by different products, which are integrated into the system and stitched together with application code. Developing the system consists of combining standard building blocks into a structure that provides custom functionality. When you combine several tools to provide a custom service, the service's API hides implementation details from clients, so in effect you create a special-purpose system from general-purpose components, one that provides specific functionality and reliability guarantees. Building such a system requires system design skills, and the result should accomplish the four main system design goals.

Clients

The clients can be web applications or mobile applications. A web application combines server-side logic, written in a language such as Java or Python and packaged and deployed as containerized services, which handles business logic and storage, with client-side languages such as HTML and JavaScript for presentation. A mobile application uses the same type of backend infrastructure, but the client is implemented in a device-specific language.

Frontend/Backend Communication

In both cases, the clients send requests over HTTP. The server returns the response as part of the same HTTP request/response pair, either as an HTML page to be rendered or as JSON-serialized data.
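The request/response exchange described above can be sketched with the Python standard library alone. This is a minimal illustration, not a production setup: the `ItemHandler` class, the `/items` path and the payload are hypothetical names chosen for the example, and the server runs in a background thread on a free local port.

```python
import json
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer
from urllib.request import ProxyHandler, build_opener


class ItemHandler(BaseHTTPRequestHandler):
    """Hypothetical backend endpoint that answers GET requests with JSON."""

    def do_GET(self):
        body = json.dumps({"path": self.path, "items": ["a", "b"]}).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # keep the example quiet


def fetch_json(url):
    """Client side: send one HTTP request and decode the JSON response."""
    opener = build_opener(ProxyHandler({}))  # ignore any proxy settings for localhost
    with opener.open(url, timeout=5) as response:
        return response.status, json.loads(response.read().decode("utf-8"))


def demo():
    """Start the server, issue one request, and shut the server down."""
    server = HTTPServer(("127.0.0.1", 0), ItemHandler)  # port 0: pick a free port
    thread = threading.Thread(target=server.serve_forever, daemon=True)
    thread.start()
    try:
        port = server.server_address[1]
        return fetch_json(f"http://127.0.0.1:{port}/items")
    finally:
        server.shutdown()
        server.server_close()
```

Calling `demo()` returns the HTTP status code together with the decoded JSON body, which is what a browser or mobile client would hand to its presentation layer.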

Applications

Database

The database can be relational or NoSQL. The NoSQL article describes the specific cases in which a NoSQL database may be preferable to a relational database.

Cache
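As the overview notes, a cache remembers the results of expensive operations to speed up reads. A minimal in-process sketch of that idea follows. The class name `ReadThroughCache`, the `load` callback and the time-to-live expiry are assumptions made for illustration; real systems often use a dedicated cache server instead.

```python
import time


class ReadThroughCache:
    """Remembers results of an expensive `load(key)` call for `ttl_seconds`."""

    def __init__(self, load, ttl_seconds=60.0, clock=time.monotonic):
        self._load = load            # the expensive operation, e.g. a database query
        self._ttl = ttl_seconds
        self._clock = clock          # injectable so expiry can be tested
        self._entries = {}           # key -> (expires_at, value)
        self.hits = 0
        self.misses = 0

    def get(self, key):
        now = self._clock()
        entry = self._entries.get(key)
        if entry is not None and entry[0] > now:
            self.hits += 1
            return entry[1]
        # Miss or expired entry: run the expensive operation and remember the result.
        self.misses += 1
        value = self._load(key)
        self._entries[key] = (now + self._ttl, value)
        return value

    def invalidate(self, key):
        """Drop a cached entry, for example after the underlying data was written."""
        self._entries.pop(key, None)
```

The `hits` and `misses` counters make it possible to compute the miss rate, which the Scalability section uses as a load parameter for caches.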

Search Index

Full-text search servers: Elasticsearch and Solr.

Stream Processing

Batch Processing

System Design Goals

Correctness

The system should behave correctly, providing the expected functionality. Correctness is verified during application development through testing. Aside from uncovering functional inconsistencies, testing can discover conditions that lead to system failures (see Simple Testing Can Prevent Most Critical Failures: An Analysis of Production Failures in Distributed Data-Intensive Systems by Ding Yuan, Yu Luo, et al.). Deliberately inducing faults ensures that the fault-tolerance machinery is continuously exercised and tested, which increases confidence that faults will be handled correctly when they occur naturally.
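Deliberate fault induction can be sketched as a wrapper that makes a configurable fraction of calls fail, so that the error-handling path is exercised in tests. The names `FaultInjector` and `call_with_retries`, the use of `ConnectionError` as the injected fault, and the simple retry loop are all assumptions made for this illustration.

```python
import random


class FaultInjector:
    """Wraps a callable and makes a configurable fraction of calls fail."""

    def __init__(self, func, failure_rate, rng=None):
        self._func = func
        self._failure_rate = failure_rate  # 0.0 = never fail, 1.0 = always fail
        self._rng = rng if rng is not None else random.Random()
        self.injected = 0

    def __call__(self, *args, **kwargs):
        if self._rng.random() < self._failure_rate:
            self.injected += 1
            raise ConnectionError("injected fault")
        return self._func(*args, **kwargs)


def call_with_retries(func, attempts, *args):
    """The fault-tolerance machinery under test: retry on ConnectionError."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    last_error = None
    for _ in range(attempts):
        try:
            return func(*args)
        except ConnectionError as error:
            last_error = error
    raise last_error
```

Wrapping a dependency in `FaultInjector` during a test run shows whether callers such as `call_with_retries` really survive the faults they claim to tolerate.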

Reliability

Reliability describes the capacity of a system to work correctly at the desired level of performance even in the presence of a certain amount of hardware and software faults or human error.

Reliability implies that the persisted data survives storage faults, and also that the system remains available, so reliability implies high availability.

A fault is defined as one component of the system deviating from its specification. A failure is defined as the system as a whole ceasing to provide the service. A fault can lead to other faults, to a failure, or to neither. Faults can be caused by hardware problems, software bugs that make processes crash on bad input, resource leaks followed by resource starvation, operational errors, etc.

It is impossible to reduce the probability of a fault to zero, so it is usually best to design mechanisms that prevent faults from causing failures. A system that experiences faults may continue to provide its service, that is, not fail. Such a system is said to be fault tolerant or resilient. The observable effect of a fault at the system boundary is called a symptom. The most extreme symptom of a fault is failure, but it might also be something as benign as a high reading on a temperature gauge. For more terminology see A Conceptual Framework for System Fault Tolerance by Walter L. Heimerdinger and Charles B. Weinstock.

System design includes techniques for building reliable systems from unreliable parts. Depending on the type of faults, these techniques include:

- Add redundancy to individual hardware components so that a hardware fault does not take down the machine. Examples: RAID disks, dual power supplies and hot-swappable CPUs.

- Add software-level redundancy that makes the loss of entire machines tolerable.

- Carefully think about assumptions and interactions in the system to reduce the probability of defects in software.

- Automatically test the software.

- Design the systems in a way that minimizes opportunities for human error during operations. For example, well-designed abstractions, APIs and user interfaces should make it easy to do "the right thing" and discourage "the wrong thing". However, if the interfaces are too restrictive, people will work around them, negating the benefit.

- Set up monitoring that alerts the operator to faults.

- Put in place reliable deployment operations that allow quick rollback and gradual release.
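The distinction between a fault and a failure, and the role of redundancy from the list above, can be illustrated with a read that fails over between replicas. This is a sketch under assumptions: replicas are modeled as plain callables, and `ReplicaUnavailable` and `read_with_failover` are hypothetical names.

```python
class ReplicaUnavailable(Exception):
    """Raised when one replica (a fault) or all replicas (a failure) cannot answer."""


def read_with_failover(replicas, key):
    """Try each replica in order and return the first successful answer.

    A single unavailable replica is a fault that callers never see.
    Only when every replica is unavailable does the read fail.
    """
    errors = []
    for replica in replicas:
        try:
            return replica(key)
        except ReplicaUnavailable as error:
            errors.append(error)  # tolerate the fault and move on
    raise ReplicaUnavailable(
        f"all {len(replicas)} replicas failed for key {key!r}: {errors}"
    )
```

With three replicas, the service survives the loss of any two of them for reads, at the cost of extra latency while the broken replicas are tried first.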

Scalability

Scalability is a measure of how adding resources affects the performance of the system and describes the ability of the system to cope with increased load.

As the system grows in data volume, traffic or complexity, there should be a reasonable way of dealing with growth. The most common reason for performance degradation, and a scalability concern, is increased load.

Load is a statement of how much stress a system is under and can be described numerically with load parameters. The best choice of parameters depends on the architecture of the system. In case of a web server, an essential load parameter is the number of requests per second. For a database, it could be the ratio of reads to writes. For a cache, it is the miss rate.
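The three load parameters mentioned above can be derived from raw counters collected over a measurement window. The `Counters` fields and the `load_parameters` function are hypothetical names for this sketch, and the numbers in the usage note are made up.

```python
from dataclasses import dataclass


@dataclass
class Counters:
    """Raw counts observed during one measurement window."""
    window_seconds: float
    requests: int
    reads: int
    writes: int
    cache_hits: int
    cache_misses: int


def load_parameters(c):
    """Turn raw counters into the load parameters discussed in the text."""
    lookups = c.cache_hits + c.cache_misses
    return {
        # Web server: requests per second.
        "requests_per_second": c.requests / c.window_seconds,
        # Database: ratio of reads to writes (infinite if nothing was written).
        "read_write_ratio": c.reads / c.writes if c.writes else float("inf"),
        # Cache: fraction of lookups that missed.
        "cache_miss_rate": c.cache_misses / lookups if lookups else 0.0,
    }
```

For example, `load_parameters(Counters(60, 6000, 900, 100, 750, 250))` describes a system handling 100 requests per second with a 9:1 read-to-write ratio and a 25% cache miss rate.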

To understand how a specific amount of load affects the system, we need to describe the performance of the system by collecting and analyzing performance metrics. For an online system, a good performance metric is response time. For a batch system, throughput is more relevant.
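Both kinds of metric can be computed from simple measurements. The sketch below summarizes response times with the mean and nearest-rank percentiles, and computes throughput as records per second. Summarizing with percentiles is a choice made for this example rather than something the text prescribes, and the function names are hypothetical.

```python
import statistics


def response_time_summary(samples_ms):
    """Summarize response times (in milliseconds) of an online system."""
    if not samples_ms:
        raise ValueError("at least one sample is required")
    ordered = sorted(samples_ms)

    def percentile(p):
        # Nearest-rank method with integer arithmetic: rank = ceil(p * n / 100).
        rank = max(1, (p * len(ordered) + 99) // 100)
        return ordered[rank - 1]

    return {
        "mean": statistics.mean(ordered),
        "median": percentile(50),
        "p95": percentile(95),
        "p99": percentile(99),
    }


def throughput(records_processed, elapsed_seconds):
    """Records per second processed by a batch job."""
    return records_processed / elapsed_seconds
```

High percentiles show what the slowest requests experience, which the mean alone tends to hide.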

Once the load and the performance metrics of a system are understood and measured, you can investigate what happens when the load increases. This can be approached in two ways: ① How does the performance degrade, as reflected by the performance metrics, if the load, as reflected by the load parameters, increases while the resources of the system (CPUs, memory, network bandwidth) stay constant? ② Which resources need to be increased, and by how much, to keep the performance metrics constant under increased load?
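A first rough answer to the second question can come from simple arithmetic. The sketch below assumes that capacity grows linearly with the number of machines, which real systems rarely achieve exactly, and that each machine should stay below a chosen utilization. The function name and parameters are hypothetical.

```python
import math


def machines_needed(target_rps, per_machine_rps, max_utilization=1.0):
    """Estimate how many identical machines keep performance constant.

    Assumes linear scaling: n machines serve n times the load of one machine.
    `max_utilization` leaves headroom, e.g. 0.7 means run at most 70% busy.
    """
    if target_rps < 0 or per_machine_rps <= 0 or not 0 < max_utilization <= 1:
        raise ValueError("invalid load or capacity figures")
    usable_rps = per_machine_rps * max_utilization
    return max(1, math.ceil(target_rps / usable_rps))
```

For a target of 2500 requests per second and machines measured at 400 requests per second each, the estimate is 7 machines at full utilization and 9 machines with 30% headroom. Measurements under real load should replace this estimate as soon as they are available.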

Typical solutions to address an increase in load and the associated decrease in performance are:

- Vertical scaling (scaling up). Vertical scaling means adding more resources to the existing machine and making it more powerful. A system that can run on a single machine is often simpler. However, high-end machines can become very expensive, and ultimately vertical scaling has hard limits on how far the system can be extended.

- Horizontal scaling (scaling out). Horizontal scaling means distributing the load across multiple smaller machines and adding more machines to the pool. Distributed systems are more difficult to design and build, especially when the distributed services share state, but they extend beyond the limits of what vertical scaling can achieve.
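Horizontal scaling relies on some component that spreads requests over the pool of machines. A minimal sketch is a round-robin pool to which machines can be added at runtime. The class name `RoundRobinPool` and the string backend names are assumptions for the example, and the sketch only covers stateless backends, where any machine can serve any request.

```python
class RoundRobinPool:
    """Distributes requests evenly over a growable pool of backends."""

    def __init__(self, backends):
        self._backends = list(backends)
        if not self._backends:
            raise ValueError("the pool needs at least one backend")
        self._next = 0

    def add(self, backend):
        """Scale out: add one more machine to the pool."""
        self._backends.append(backend)

    def pick(self):
        """Return the backend that should handle the next request."""
        backend = self._backends[self._next % len(self._backends)]
        self._next += 1
        return backend
```

Services that share state need more than this, for example partitioning or replication of the data, which is where most of the additional design effort goes.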

Note that an architecture that is appropriate for one level of load is unlikely to cope with 10 times that load, so you will probably need to rethink the architecture at every order-of-magnitude increase in load.

Also, an architecture that scales well for a particular application is built around assumptions of which operations will be common and which will be rare - the load parameters.

Maintainability

It should be possible to keep working on the system productively as the system evolves over time.

Request Flow

Write path. Read path. Read-to-write ratio.

Operations

Logs

Monitoring

Deployment

Make it fast to roll back configuration changes if necessary.

Roll out new code gradually, so that any unexpected bugs affect only a small subset of users.
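One common way to implement a gradual rollout is to assign every user to a stable bucket and enable the new code path only for buckets below a configurable percentage. The sketch below uses a SHA-256 hash for bucketing. The function names, the `feature` label and the 100-bucket granularity are assumptions for illustration.

```python
import hashlib


def rollout_bucket(user_id, feature):
    """Map a user to a stable bucket in the range 0..99 for a given feature."""
    digest = hashlib.sha256(f"{feature}:{user_id}".encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % 100


def is_enabled(user_id, feature, percent):
    """True if the user falls inside the first `percent` buckets."""
    return rollout_bucket(user_id, feature) < percent
```

Because the bucket depends only on the user and the feature name, raising `percent` from 5 to 50 keeps the new code enabled for everyone who already had it, and setting `percent` back to 0 acts as a fast rollback through configuration alone.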

Capacity Estimation

Reference Systems

Organizatorium

- Process and redistribute: Distributed Systems

- Clean Architecture https://www.amazon.com/Clean-Architecture-Craftsmans-Software-Structure/dp/0134494164/

- https://medium.com/@i.gorton/six-rules-of-thumb-for-scaling-software-architectures-a831960414f9

- http://highscalability.com

- Harvard Scalability Class David Malan https://www.youtube.com/watch?v=-W9F__D3oY4

- https://www.lecloud.net/post/7295452622/scalability-for-dummies-part-1-clones

- https://www.lecloud.net/post/7994751381/scalability-for-dummies-part-2-database

- https://www.lecloud.net/post/9246290032/scalability-for-dummies-part-3-cache

- https://www.lecloud.net/post/9699762917/scalability-for-dummies-part-4-asynchronism

- https://www.hiredintech.com/classrooms/system-design/lesson/61

- Learning/System Design/*.pdf

- https://github.com/checkcheckzz/system-design-interview

- https://github.com/donnemartin/system-design-primer

- https://github.com/mmcgrana/services-engineering

- https://github.com/orrsella/soft-eng-interview-prep/blob/master/topics/system-architecture.md

- https://queue.acm.org/detail.cfm?id=3480470

- https://blog.pramp.com/how-to-succeed-in-a-system-design-interview-27b35de0df26

- https://developers.redhat.com/articles/2021/09/21/distributed-transaction-patterns-microservices-compared

- State Transition Diagram

- Schemaless: Fowler https://martinfowler.com/articles/schemaless/

Papers and Articles Referred from "Designing Data-intensive Applications"

- “One Size Fits All”: An Idea Whose Time Has Come and Gone https://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.68.9136&rep=rep1&type=pdf

- Yury Izrailevsky and Ariel Tseitlin: The Netflix Simian Army https://netflixtechblog.com/the-netflix-simian-army-16e57fbab116

- Haryadi S. Gunawi, Mingzhe Hao, Tanakorn Leesatapornwongsa, et al. "What Bugs Live in the Cloud?" https://ucare.cs.uchicago.edu/pdf/socc14-cbs.pdf

- Richard Cook: How complex systems fail https://www.researchgate.net/publication/228797158_How_complex_systems_fail

- Jay Kreps: Getting Real About Distributed System Reliability https://blog.empathybox.com/post/19574936361/getting-real-about-distributed-system-reliability

- Nathan Marz: Principles of Software Engineering, Part 1 http://nathanmarz.com/blog/principles-of-software-engineering-part-1.html

- Michael Jurewitz: The Human Impact of Bugs http://jury.me/blog/2013/3/14/the-human-impact-of-bugs

- Raffi Krikorian: Timelines at Scale https://www.infoq.com/presentations/Twitter-Timeline-Scalability/