Distributed Systems: Difference between revisions

| (102 intermediate revisions by the same user not shown) | |||

| Line 8: | Line 8: | ||

* [[System Design]] | * [[System Design]] | ||

= | =Distributed System Definition= | ||

= | According to Andrew Tannenbaum, a distributed system is a collection of independent computers that appear to their users as one computer. Specifically, there are three specific characteristics any distributed system must have: | ||

* The computers operate concurrently | |||

* The computers fail independently. They will fail, sooner or later. | |||

* The computers do not share a global clock. All activities these computers perform are asynchronous with respect to the other components. This is a very important characteristics, as it imposes some essential limitations on what the distributed system can do. | |||

As we scale and distribute the system, it seems as bad things start happening to us: distributed system are hard, and it is possible to get away without one, go for it. However, we distribute for well defined reasons, and distributing is in some cases the only way we can address the problems we have. | |||

=CAP Theorem= | |||

<font color=darkkhaki>Integrate: | |||

* https://www.infoq.com/articles/cap-twelve-years-later-how-the-rules-have-changed/ | |||

* https://towardsdatascience.com/cap-theorem-and-distributed-database-management-systems-5c2be977950e | |||

</font> | |||

=Distributed Storage= | |||

<span id='Single_Master_Database'></span>The non-distributed version of a relational database is called a "single master system". A single master node can scale up to a certain level, after which we need to use more physical nodes - turn it into a distributed database. There are several strategies to scale the database: | |||

* [[#Read_Replication|Read replication]] works well for read-heavy applications. The technique is aimed as supporting more read traffic. | |||

* [[#Sharding|Sharding]] applies - possibly in combination with [[#Read_Replication|read replication]] - to a write-heavy access pattern. | |||

* [[#Consistent_Hashing|Consistent hasing]] is a family of approaches to scalable data storage. | |||

==Read Replication== | |||

Read replication is a strategy to scale a [[#Single_Master_Database|single master database]]. | |||

The pattern involves a '''leader''' database and several '''follower''' databases (read followers). The leader database handles updates and is responsible to propagate the updates to the follower databases. The follower databases will be at times out of date with the leader, because it takes a non-zero time for the change to replicate. Also, since the updates for each follower happen independently, followers will be out of sync, for a short interval of time, with each other. This behavior breaks the strong consistency guarantee of a non-distributed database. The strong consistency guarantee is replaced with '''eventual consistency'''. This is something an architect that designs a system using read replication needs to know and plan for. | |||

The same strategy also applies to non-relational databases. [[MongoDB]] does the read replication the same way. | |||

Read replication works well only for a specific state access pattern: a read-heavy application. Usually, this is the case for web applications. However, in case the application implies a large number of concurrent writes, and the leader cannot keep up and '''falls over''', another distribution strategy called [[#Sharding|sharding]] can be used. | |||

==Sharding== | |||

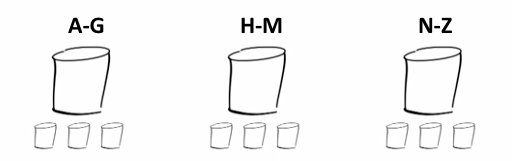

Sharding implies splitting up the workload, by picking a key and breaking up the workload based on the key. Each shard can have its own replica set, so [[#Read_Replication|read replication]] can also be used. | |||

:::[[File:Sharding3.png]] | |||

The splitting of the workload is typically done by a layer in the application. | |||

Relational databases can be sharded this way. [[MongoDB]] also does sharding the same way, internally. | |||

Downsides of sharding: | |||

* '''More complexity'''. | |||

* '''Limited data model''' A key/value pair model works well. For relational databases, the data model is amenable to sharding if each query we issue has a single common key (present in the WHERE clause). Classical example is the user ID. | |||

* '''Limited data access pattern'''. If the query includes a specific key, it works well. If we need to query across keys, that comes with the additional complication that we need to go to all the shards, scatter the query out to all of them, get results and then gather them back together. Data access pattern is important when deciding whether a sharding solution scales well. We don't want too much scatter/gather, which may negate other benefits. | |||

* '''Number of shards is static'''. The decision on the number of shards has to be taken early on, and if that number must change, the data migration is not trivial. | |||

==Consistent Hashing== | |||

Consistent hashing is a family of approaches to scalable data storage. The strategy is also referred to as "distributed hash table" or "DHT", or [[Amazon DynamoDB Concepts|Dynamo]]-style database. | |||

Concept of a node (a peer). | |||

Load balancing. | |||

Write | |||

Read | |||

Add a node - how is the load spread? | |||

Fail a node. | |||

High availability. | |||

TODO: Algorithm. | |||

Nodes are peers. | |||

How does the cluster behaves when we add nodes. Redistribution? | |||

High Availability and Redundancy. | |||

Benefits: | |||

* Scalability | |||

* Always On | |||

Downsides: | |||

* Consistency. What if a node does not get the update? | |||

* Consistency formula for strong consistency. | |||

Entropy problem - when one replica is out of date with another. In that case, it is said that the database has high entropy. | |||

Downsides of consistent hashing: | |||

* Limited data model | |||

Consistent hashing implementations: | |||

* [[Amazon_DynamoDB_Concepts|DynamoDB]] | |||

* [[Cassandra]] | |||

* [[Riak]] | |||

* [[Voldemort]] | |||

<font color=darkgray> | |||

TODO: | |||

* Next TODO in iCloud Drive ▸ Personal ▸ Professional ▸ Learning ▸ Systems Design ▸ Distributed Systems ▸ Distributed Systems TODO.docx in | |||

* The Dynamo paper | |||

* Link to existing Consistent Hashing content or reconcile content [[Consistent Hashing]] | |||

</font> | |||

===Differences between a "Classic" Hash Map and Consistent Hashing-based Table=== | |||

In case of a "classic" Hash Map, the number of buckets is specified at initialization of the data structure, and it is fixed during the life of the structure. If we want to change that number for any reason, the size of the array that keeps references to the buckets changes, so the modulus operator used to calculate the index of the bucket in the array changes, so all the indices, and in consequence, all the locations of the keys already stored become invalid. The keys finds themselves stored in the "wrong" buckets. If we want to keep using that hash table, we need to reallocate '''all''' keys, which is an extremely expensive operation, especially if a large number of keys is already stored. | |||

The consistent hasing-based data structures do not have this problem, because we use the intermediary, abstract hash wheel, to which we report both the keys and the storage nodes. | |||

==Distributed File Systems== | |||

=Distributed Computation= | |||

* [[Hadoop]] | |||

* [[Spark]] | |||

* [[Storm]] | |||

* [[Flink]] | |||

=Distributed Synchronization= | |||

==Network Time Protocol== | |||

==Vector clocks== | |||

=Consensus= | |||

The consensus refers to the problem of agreeing on what is true in a distributed environment. Every distributed system that holds state has to agree on the next valid state of the cluster. This is a well researched area, that yielded several consensus algorithms; [[#Ark|Ark]], [[#Raft|Raft]], [[#BDR|BDR]]. | |||

==Consensus Algorithms== | |||

===Ark=== | |||

{{Internal|Ark#Overview|Ark}} | |||

===Raft=== | |||

{{Internal|Raft#Overview|Raft}} | |||

===BDR=== | |||

{{Internal|BDR#Overview|BDR}} | |||

===Paxos=== | |||

{{Internal|Paxos#Overview|Paxos}} | |||

[[Zookeeper]] | |||

=Distributed Messaging= | |||

{{Internal|Kafka|Kafka}} | |||

=Partitioning= | |||

{{Internal|Partitioning|Partitioning}} | |||

=Replication= | |||

{{Internal|Replication|Replication}} | |||

=To Schedule= | |||

Latest revision as of 16:18, 5 October 2023

External

Internal

Distributed System Definition

According to Andrew Tannenbaum, a distributed system is a collection of independent computers that appear to their users as one computer. Specifically, there are three specific characteristics any distributed system must have:

- The computers operate concurrently

- The computers fail independently. They will fail, sooner or later.

- The computers do not share a global clock. All activities these computers perform are asynchronous with respect to the other components. This is a very important characteristics, as it imposes some essential limitations on what the distributed system can do.

As we scale and distribute the system, it seems as bad things start happening to us: distributed system are hard, and it is possible to get away without one, go for it. However, we distribute for well defined reasons, and distributing is in some cases the only way we can address the problems we have.

CAP Theorem

Integrate:

- https://www.infoq.com/articles/cap-twelve-years-later-how-the-rules-have-changed/

- https://towardsdatascience.com/cap-theorem-and-distributed-database-management-systems-5c2be977950e

Distributed Storage

The non-distributed version of a relational database is called a "single master system". A single master node can scale up to a certain level, after which we need to use more physical nodes - turn it into a distributed database. There are several strategies to scale the database:

- Read replication works well for read-heavy applications. The technique is aimed as supporting more read traffic.

- Sharding applies - possibly in combination with read replication - to a write-heavy access pattern.

- Consistent hasing is a family of approaches to scalable data storage.

Read Replication

Read replication is a strategy to scale a single master database.

The pattern involves a leader database and several follower databases (read followers). The leader database handles updates and is responsible to propagate the updates to the follower databases. The follower databases will be at times out of date with the leader, because it takes a non-zero time for the change to replicate. Also, since the updates for each follower happen independently, followers will be out of sync, for a short interval of time, with each other. This behavior breaks the strong consistency guarantee of a non-distributed database. The strong consistency guarantee is replaced with eventual consistency. This is something an architect that designs a system using read replication needs to know and plan for.

The same strategy also applies to non-relational databases. MongoDB does the read replication the same way.

Read replication works well only for a specific state access pattern: a read-heavy application. Usually, this is the case for web applications. However, in case the application implies a large number of concurrent writes, and the leader cannot keep up and falls over, another distribution strategy called sharding can be used.

Sharding

Sharding implies splitting up the workload, by picking a key and breaking up the workload based on the key. Each shard can have its own replica set, so read replication can also be used.

The splitting of the workload is typically done by a layer in the application.

Relational databases can be sharded this way. MongoDB also does sharding the same way, internally.

Downsides of sharding:

- More complexity.

- Limited data model A key/value pair model works well. For relational databases, the data model is amenable to sharding if each query we issue has a single common key (present in the WHERE clause). Classical example is the user ID.

- Limited data access pattern. If the query includes a specific key, it works well. If we need to query across keys, that comes with the additional complication that we need to go to all the shards, scatter the query out to all of them, get results and then gather them back together. Data access pattern is important when deciding whether a sharding solution scales well. We don't want too much scatter/gather, which may negate other benefits.

- Number of shards is static. The decision on the number of shards has to be taken early on, and if that number must change, the data migration is not trivial.

Consistent Hashing

Consistent hashing is a family of approaches to scalable data storage. The strategy is also referred to as "distributed hash table" or "DHT", or Dynamo-style database.

Concept of a node (a peer).

Load balancing.

Write

Read

Add a node - how is the load spread?

Fail a node.

High availability.

TODO: Algorithm.

Nodes are peers.

How does the cluster behaves when we add nodes. Redistribution?

High Availability and Redundancy.

Benefits:

- Scalability

- Always On

Downsides:

- Consistency. What if a node does not get the update?

- Consistency formula for strong consistency.

Entropy problem - when one replica is out of date with another. In that case, it is said that the database has high entropy.

Downsides of consistent hashing:

- Limited data model

Consistent hashing implementations:

TODO:

- Next TODO in iCloud Drive ▸ Personal ▸ Professional ▸ Learning ▸ Systems Design ▸ Distributed Systems ▸ Distributed Systems TODO.docx in

- The Dynamo paper

- Link to existing Consistent Hashing content or reconcile content Consistent Hashing

Differences between a "Classic" Hash Map and Consistent Hashing-based Table

In case of a "classic" Hash Map, the number of buckets is specified at initialization of the data structure, and it is fixed during the life of the structure. If we want to change that number for any reason, the size of the array that keeps references to the buckets changes, so the modulus operator used to calculate the index of the bucket in the array changes, so all the indices, and in consequence, all the locations of the keys already stored become invalid. The keys finds themselves stored in the "wrong" buckets. If we want to keep using that hash table, we need to reallocate all keys, which is an extremely expensive operation, especially if a large number of keys is already stored.

The consistent hasing-based data structures do not have this problem, because we use the intermediary, abstract hash wheel, to which we report both the keys and the storage nodes.

Distributed File Systems

Distributed Computation

Distributed Synchronization

Network Time Protocol

Vector clocks

Consensus

The consensus refers to the problem of agreeing on what is true in a distributed environment. Every distributed system that holds state has to agree on the next valid state of the cluster. This is a well researched area, that yielded several consensus algorithms; Ark, Raft, BDR.