Docker Networking Concepts

External

- https://docs.docker.com/engine/userguide/networking/

- https://stackoverflow.com/questions/24319662/from-inside-of-a-docker-container-how-do-i-connect-to-the-localhost-of-the-mach

Internal

Overview

Docker's networking subsystem is built around pluggable drivers. Docker ships with several drivers, and additional ones can be developed and deployed as plugins. The drivers available by default are described below.

Network Drivers

docker info lists the network drivers available to a certain Docker server:

...

Plugins:

...

Network: bridge host macvlan null overlay

Networks backed by different drivers may coexist within the same Docker server. By default, a Docker server starts with three networks based on three different drivers: the "default" bridge network, a host network and a "null" network. Details about all these networks are provided below.

Detailed information about a certain network, including the subnet address, the gateway address, and the containers attached to it, can be obtained with:

docker network inspect <network-name>
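To illustrate where the subnet, gateway, and attached containers appear in that output, the following Python sketch parses the JSON printed by docker network inspect. The sample document is abbreviated and hypothetical (the container ID, name, and addresses are made up for illustration), and real output carries more fields. The function name is an assumption as well.

```python
import json

# Abbreviated, hypothetical sample of `docker network inspect bridge` output.
# The command prints a JSON array with one object per inspected network.
SAMPLE = """
[
  {
    "Name": "bridge",
    "Driver": "bridge",
    "IPAM": {
      "Config": [
        {"Subnet": "172.17.0.0/16", "Gateway": "172.17.0.1"}
      ]
    },
    "Containers": {
      "0123abcd": {"Name": "web", "IPv4Address": "172.17.0.2/16"}
    }
  }
]
"""


def summarize_network(inspect_json):
    """Return name, driver, subnets, gateways and containers of the first network."""
    network = json.loads(inspect_json)[0]
    ipam_configs = (network.get("IPAM") or {}).get("Config") or []
    containers = network.get("Containers") or {}
    return {
        "name": network["Name"],
        "driver": network["Driver"],
        "subnets": [c["Subnet"] for c in ipam_configs if "Subnet" in c],
        "gateways": [c["Gateway"] for c in ipam_configs if "Gateway" in c],
        # Map container name to its IPv4 address on this network.
        "containers": {
            info["Name"]: info.get("IPv4Address", "")
            for info in containers.values()
        },
    }


if __name__ == "__main__":
    print(summarize_network(SAMPLE))
```

To run it against a live server, the standard output of the docker network inspect command (captured, for example, with subprocess.run) could be passed in place of SAMPLE.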

bridge

A bridge network consists of a software bridge that allows containers connected to it to communicate, while providing isolation from containers not connected to it. This is the default network driver. This configuration is appropriate when multiple containers need to communicate on the same Docker host. The Docker bridge driver automatically installs rules on the host machine so that containers on different bridge networks cannot communicate directly with each other.

The Default Bridge Network

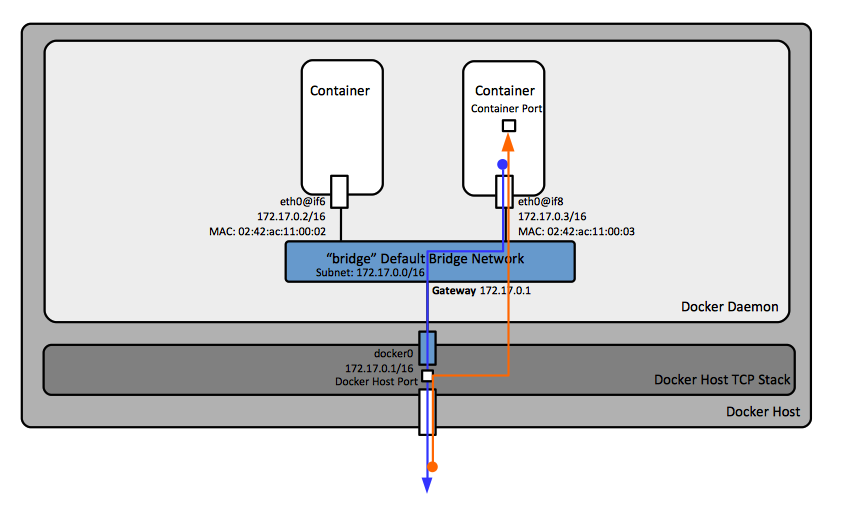

By default, and without additional configuration, a default bridge network is created, and every container started without an explicit --network option connects to it.

docker network ls

[...]

NETWORK ID     NAME      DRIVER    SCOPE
158b89572a60   bridge    bridge    local

The default bridge network is considered a legacy detail of Docker and is not recommended for production use; user-defined bridge networks are recommended instead. Details about the default bridge network can be obtained with docker network inspect bridge. Its gateway address is the IP address configured on the Docker host's "docker0" interface.

The default bridge network can be configured in daemon.json, but all containers use the same configuration (such as MTU and iptables rules).
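As an illustration, the following Python sketch builds a daemon.json fragment for the default bridge. It assumes the "bip" key (the bridge IP address in CIDR form) and the "mtu" key. The address and MTU shown are example values only, and the helper name is hypothetical.

```python
import ipaddress
import json


def default_bridge_settings(bip, mtu):
    """Validate the values and return a daemon.json fragment for the default bridge."""
    # ip_interface() raises ValueError if bip is not a valid address/prefix pair.
    interface = ipaddress.ip_interface(bip)
    if interface.ip == interface.network.network_address:
        raise ValueError("bip must be a host address, not the network address")
    if mtu <= 0:
        raise ValueError("mtu must be a positive integer")
    return {"bip": str(interface), "mtu": mtu}


if __name__ == "__main__":
    # Example values only; merge the result into the existing daemon.json by hand.
    print(json.dumps(default_bridge_settings("192.168.1.1/24", 1500), indent=2))
```

On Linux the file normally lives at /etc/docker/daemon.json, and the Docker daemon has to be restarted before changes take effect.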

IP Forwarding

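By default, traffic from containers connected to the default bridge is not forwarded to the outside world. According to the Docker documentation, forwarding is enabled by changing two host settings: turning on kernel IP forwarding (sysctl net.ipv4.conf.all.forwarding=1) and setting the iptables FORWARD policy to ACCEPT (iptables -P FORWARD ACCEPT).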

User-Defined Bridge Networks

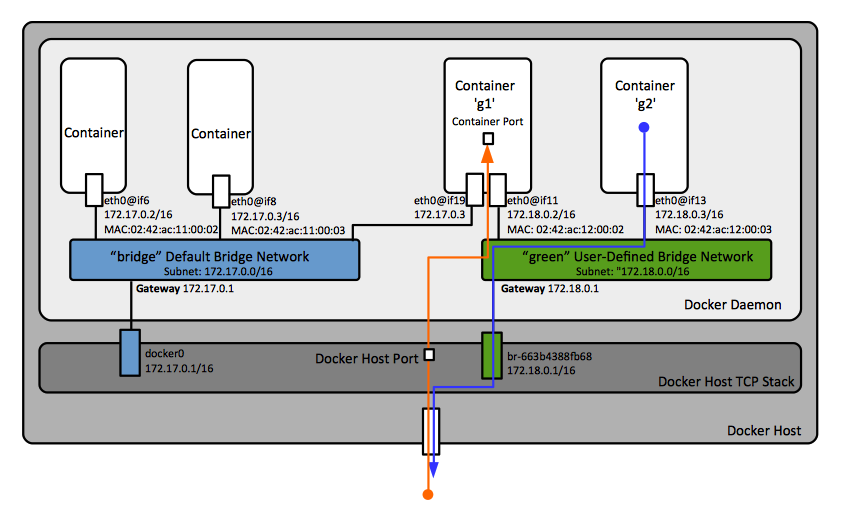

In addition to the default bridge, user-defined bridge networks can be created.

The Docker documentation describes this as the best configuration for standalone containers.

Each user-defined bridge network creates a separate bridge, which can be configured independently. When a user-defined bridge is created or removed, or containers are connected to or disconnected from the bridge, Docker uses OS-specific tools to manage the underlying network infrastructure, such as adding or removing bridge devices and configuring iptables rules.

User-defined bridge networks have some advantages over the default bridge network:

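- All containers connected to a user-defined bridge network open all ports to each other, and only containers attached to that network can reach them. On the default bridge network, containers can also reach each other by IP address, but every container started without --network shares that bridge, so unrelated containers are not isolated from one another. In both cases, -p is needed only to publish a port to the outside world.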

- User-defined bridges provide automatic DNS resolution between containers, resolving container names to their IP addresses. Containers on the default bridge network can reach each other only by IP address unless --link is used; --link is a legacy feature that may eventually be removed. Linked containers also share environment variables. For more details see https://docs.docker.com/network/links/.

- With user-defined bridges, containers can be connected to and disconnected from the network dynamically. With the default bridge, a container can be removed from the network only by stopping it and recreating it with different network options.

Details about a specific user-defined bridge network can be obtained with docker network inspect <network-name>. Its gateway address is the IP address configured on the Docker host's "br-...." interface.

Configuration

User-defined bridge networks can be configured with the following docker network create options:

subnet

Subnet in CIDR format that represents a network segment.

ip-range

Sub-range of the subnet from which container IP addresses are allocated.

gateway

IPv4 or IPv6 gateway for the master subnet.
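How the three options relate to each other can be checked with Python's standard ipaddress module. The sketch below is illustrative only: the function name is hypothetical, the addresses are example values, and it verifies containment rather than every rule Docker itself enforces.

```python
import ipaddress


def check_bridge_config(subnet, ip_range, gateway):
    """Return a list of problems; an empty list means the values are consistent."""
    net = ipaddress.ip_network(subnet)
    rng = ipaddress.ip_network(ip_range)
    gw = ipaddress.ip_address(gateway)
    problems = []
    # The ip-range must be a sub-range of the subnet (same IP version, contained in it).
    if rng.version != net.version or not rng.subnet_of(net):
        problems.append(f"ip-range {rng} is not inside subnet {net}")
    # The gateway must be an address that belongs to the subnet.
    if gw not in net:
        problems.append(f"gateway {gw} is not inside subnet {net}")
    return problems


if __name__ == "__main__":
    # Example values only.
    print(check_bridge_config("172.28.0.0/16", "172.28.5.0/24", "172.28.5.254"))
```

Values that pass this check correspond to a command of the form docker network create --driver bridge --subnet 172.28.0.0/16 --ip-range 172.28.5.0/24 --gateway 172.28.5.254 <network-name>.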

Connecting Containers to a User-Defined Bridge Network

host

This network driver removes network isolation between the container and the Docker host, so the container uses the host's networking directly. It is appropriate when the container's network stack should not be isolated from the Docker host, but other aspects of the container should remain isolated. A host network named "host" is available by default on the Docker server:

docker network ls

NETWORK ID     NAME      DRIVER    SCOPE
...
036bdd552d37   host      host      local

If a container is executed with "--network host", then the container will bind directly to the host network interface. No new Docker host-level interface will be created.

overlay

Overlay networks connect multiple Docker daemons together.

macvlan

The macvlan driver allows assigning a MAC address to a container, making it appear as a physical device on the network. The Docker daemon routes traffic to containers by their MAC addresses.

none

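Container networking can be disabled altogether by attaching the container to the "none" network (--network none), which is backed by the "null" driver.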

iptables

Container Networking

A Docker container behaves like a host on a private network. Each container has its own virtual network stack, Ethernet interface and its own IP address. All containers managed by the same server are connected via bridge interfaces to a default virtual network and can talk to each other directly. Logically, they behave like physical machines connected through a common Ethernet switch.

Outbound Traffic

To reach the host and the outside world, traffic from the containers goes over an interface called docker0: the Docker server acts as a virtual bridge for outbound traffic. Applications running inside a container have outbound IP connectivity by default: traffic is routed over the bridge interface and out.

Inbound Traffic

The Docker server also allows containers to "bind" to ports on the host, so outside traffic can reach them: the traffic passes over a proxy that is part of the Docker server before getting to containers.

The default mode can be changed. For example, running a container with --net=host (--network host in current versions) lets the container use the host's own network device and address.


Connecting a Container to More Than One Bridge

A container can be connected to more than one bridge. This can be achieved by running the container with a --network specification for the first network, and then executing the following command for each additional network:

docker network connect <other-network> <container-name>
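The sequence above can be sketched in Python as a helper that assembles the docker command lines without running them. The image, container, and network names are hypothetical, and the helper name is an assumption. Only the docker run and docker network connect invocations already described are used, plus the standard -d and --name options.

```python
import shlex


def multi_network_commands(image, container, first_network, other_networks):
    """Build the docker CLI invocations that attach one container to several networks."""
    # The container starts on the first network...
    commands = [
        ["docker", "run", "-d", "--name", container, "--network", first_network, image]
    ]
    # ...and is then connected to each additional network.
    for network in other_networks:
        commands.append(["docker", "network", "connect", network, container])
    return commands


if __name__ == "__main__":
    # Hypothetical names, printed for inspection rather than executed.
    for argv in multi_network_commands("nginx", "web", "frontend", ["backend"]):
        print(shlex.join(argv))
```

Each returned list can be handed to subprocess.run as is, which avoids shell quoting issues. The networks named here must already exist.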

Details about the Network the Container is Connected To

docker network inspect <network-name>

returns detailed information about a specific network; the output includes the containers attached to it.