Linux NFS Concepts

(Created page with "=Internal= * Linux NFS =Locking Considerations= NFSv3 does not time out the lock held by a process that crashes. NFSv4 locks time out in the same sit...") |

|||

| (14 intermediate revisions by the same user not shown) | |||

| Line 1: | Line 1: | ||

=External= | |||

* Why NFS Sucks https://www.kernel.org/doc/ols/2006/ols2006v2-pages-59-72.pdf | |||

=Internal= | =Internal= | ||

* [[Linux NFS#Concepts|Linux NFS]] | * [[Linux NFS#Concepts|Linux NFS]] | ||

=NFS Version= | |||

The NFS version to use can be specified either as an argument of the <tt>mount</tt> command (see [[Mount#-o|<tt>mount -o</tt>]]) or in the client-side mount configuration (see [[Linux_NFS_Configuration#Mount_Options|NFS mount options]]). | |||

=Share= | |||

=NFS Client= | |||

=NFS Driver= | |||

Also see: {{Internal|Linux_7_Storage_Concepts#Block_Driver|Block Driver}} | |||

=NFS Security= | |||

==NFS v3== | |||

NFS controls who can mount an exported file system based on the host making the mount request, not the user that actually uses the file system. Hosts must be given explicit rights to mount the exported file system. Access control is not possible for users, other than through file and directory permissions. In other words, once a file system is exported via NFS, any user on any remote host connected to the NFS server can access the shared data. | |||

==NFS v4== | |||

With NFSv4, the security mechanisms are oriented towards authenticating individual users, and not client machines as used in NFSv2 and NFSv3. | |||

=Locking Considerations= | =Locking Considerations= | ||

NFSv3 does not time out the lock held by a process that crashes. NFSv4 locks time out in the same situation. | NFSv3 does not time out the lock held by a process that crashes. NFSv4 locks time out in the same situation. | ||

=Consistency Considerations= | |||

==Close-to-Open Consistency== | |||

The only consistency guarantee made by NFS is close-to-open consistency, which means that any changes are flushed to the server on closing the file, and a cache revalidation occurs when the file is opened. | |||

=Root Squash= | =Root Squash= | ||

| Line 29: | Line 60: | ||

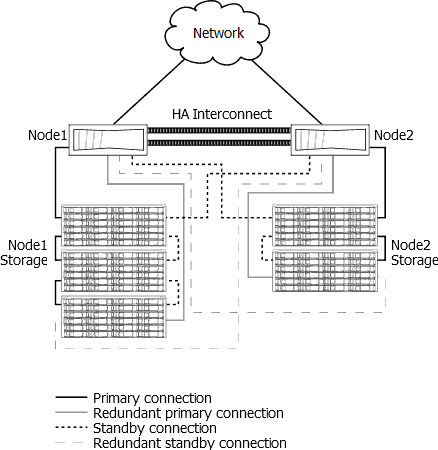

Multipath HA is a hardware configuration that provides redundancy for the path from each disk controller to every disk shelf in the configuration. More details in https://library.netapp.com/ecmdocs/ECMP1210206/html/GUID-C4630743-898E-4AB1-A321-E6E38532CEF6.html. | Multipath HA is a hardware configuration that provides redundancy for the path from each disk controller to every disk shelf in the configuration. More details in https://library.netapp.com/ecmdocs/ECMP1210206/html/GUID-C4630743-898E-4AB1-A321-E6E38532CEF6.html. | ||

[ | [[Image:Multipath Netapp.png]] | ||

2. Make sure that the physical network is resilient. | 2. Make sure that the physical network is resilient. | ||

Latest revision as of 01:53, 20 August 2020

External

Internal

NFS Version

The NFS version to use can be specified either as an argument of the mount command (see mount -o) or in the client-side mount configuration (see NFS mount options).
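As a minimal sketch of the two places where the version can be set, the helpers below build a mount command line and the matching /etc/fstab entry. The server name, export path and mount point are hypothetical placeholders, and the helper names are made up for illustration; only the `vers=` mount option comes from the NFS client itself. Nothing is executed, the functions only assemble strings.

```python
def build_nfs_mount_command(server, export, mountpoint, version):
    """Return the argv for mounting server:export with an explicit NFS version."""
    options = f"vers={version}"
    return ["mount", "-t", "nfs", "-o", options, f"{server}:{export}", mountpoint]


def build_fstab_entry(server, export, mountpoint, version):
    """Return the equivalent /etc/fstab line (fields separated by whitespace)."""
    return f"{server}:{export} {mountpoint} nfs vers={version} 0 0"


if __name__ == "__main__":
    # Hypothetical server and paths, used only to show the shape of the output.
    argv = build_nfs_mount_command("nfs.example.com", "/export/data", "/mnt/data", "4.1")
    print(" ".join(argv))
    print(build_fstab_entry("nfs.example.com", "/export/data", "/mnt/data", "4.1"))
```

Passing the argv list to a process launcher rather than a shell string avoids quoting problems if a path contains spaces.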

NFS Client

NFS Driver

Also see: Block Driver

NFS Security

NFS v3

NFS controls who can mount an exported file system based on the host making the mount request, not the user that actually uses the file system. Hosts must be given explicit rights to mount the exported file system. Access control is not possible for users, other than through file and directory permissions. In other words, once a file system is exported via NFS, any user on any remote host that is allowed to mount it can access the shared data, subject only to those file and directory permissions.
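The host-based check can be illustrated with a simplified model of a server export list entry. This is a sketch only: it assumes the common `/path host(options) host(options)` layout of an /etc/exports line and handles plain host names and shell-style wildcards, not netgroups or IP networks. The function names and the example hosts are hypothetical.

```python
from fnmatch import fnmatch


def parse_exports_line(line):
    """Split '/path host1(opts) host2(opts)' into (path, {host_pattern: [opts]})."""
    path, *clients = line.split()
    allowed = {}
    for client in clients:
        host, _, rest = client.partition("(")
        allowed[host] = rest.rstrip(")").split(",") if rest else []
    return path, allowed


def host_may_mount(line, client_host):
    """True if client_host matches one of the host patterns on the export line."""
    _, allowed = parse_exports_line(line)
    return any(fnmatch(client_host, pattern) for pattern in allowed)


if __name__ == "__main__":
    entry = "/export/data client1.example.com(rw,sync) *.lab.example.com(ro)"
    for host in ("client1.example.com", "build7.lab.example.com", "other.example.org"):
        print(host, host_may_mount(entry, host))
```

Note that the decision depends only on the host name: nothing in the entry identifies an individual user.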

NFS v4

With NFSv4, the security mechanisms are oriented towards authenticating individual users rather than client machines, as was the case in NFSv2 and NFSv3.

Locking Considerations

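NFSv3 does not time out a lock held by a client that crashes, so the lock can remain in place on the server. NFSv4 locks are lease-based and time out in the same situation, once the client stops renewing its lease.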
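For context, this is roughly what taking such a lock looks like from an application. The sketch uses POSIX advisory locking through `fcntl.lockf`, which works on any local file; the assumption here is that the file lives on an NFS mount where the Linux client forwards the lock request to the server. The function name is hypothetical.

```python
import fcntl
import os


def append_with_lock(path, text):
    """Append text to path while holding an exclusive POSIX advisory lock."""
    with open(path, "a") as handle:
        fcntl.lockf(handle, fcntl.LOCK_EX)      # blocks until the lock is granted
        try:
            handle.write(text)
            handle.flush()
            os.fsync(handle.fileno())           # push the data out before unlocking
        finally:
            fcntl.lockf(handle, fcntl.LOCK_UN)  # release explicitly


if __name__ == "__main__":
    append_with_lock("/tmp/nfs-lock-demo.txt", "one line\n")
```

Releasing the lock in a `finally` block covers ordinary failures inside the process; it does not help when the whole client machine goes down, which is the case the paragraph above describes.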

Consistency Considerations

Close-to-Open Consistency

The only consistency guarantee made by NFS is close-to-open consistency, which means that any changes are flushed to the server on closing the file, and a cache revalidation occurs when the file is opened.
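In practice this suggests a simple pattern for two clients sharing a file: the writer closes the file before signalling that the data is ready, and the reader opens the file after that signal instead of reusing an old descriptor. The sketch below shows the two sides with hypothetical function names; it runs on any file system and only illustrates the ordering of open and close calls.

```python
def publish(path, data):
    """Writer side: under close-to-open, close() is the point where changes are flushed."""
    with open(path, "w") as handle:
        handle.write(data)
    # Leaving the 'with' block closes the file. Only after this point should
    # other clients be told that the new contents are available.


def read_fresh(path):
    """Reader side: open a new descriptor so the client revalidates its cache."""
    with open(path) as handle:
        return handle.read()


if __name__ == "__main__":
    publish("/tmp/cto-demo.txt", "version 2\n")
    print(read_fresh("/tmp/cto-demo.txt"), end="")
```

A reader that keeps a file open while another client rewrites it gets no such guarantee.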

Root Squash

Root squash is the reduction of access rights for the remote superuser (root) when identity authentication is used (the local user is assumed to be the same as the remote user). With root squash enabled, the remote root user is no longer superuser on the NFS server. Privileged operations on the exported data must then be performed by an authorized superuser logged in directly on the NFS server, not from a client that merely mounts the exported NFS folder.
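The effect on user identities can be modelled as a small mapping function. This is a sketch of the behaviour, not server code: the option names mirror the `root_squash`, `all_squash` and `anonuid` export options, and the value 65534 for the anonymous user is an assumed default that may differ on a given system.

```python
ANONYMOUS_UID = 65534  # assumed default anonymous uid; configurable with anonuid


def squashed_uid(client_uid, root_squash=True, all_squash=False, anonuid=ANONYMOUS_UID):
    """Return the uid the server acts as for a request arriving with client_uid."""
    if all_squash:
        return anonuid            # every remote user is mapped to the anonymous user
    if root_squash and client_uid == 0:
        return anonuid            # only remote root loses its privileges
    return client_uid             # ordinary users keep their identity


if __name__ == "__main__":
    print(squashed_uid(0))                      # remote root, root squash on
    print(squashed_uid(0, root_squash=False))   # remote root, root squash off
    print(squashed_uid(1000))                   # ordinary user
```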

NFS Caching

The NFS client mount option “ac” causes the client to cache file attributes, and it is the default. Disabling attribute caching with “noac” incurs a significant performance penalty. By default, the NFS client caches directory attributes for at least 30 seconds and at most 60 seconds (acdirmin, acdirmax); for regular files the defaults are 3 seconds and 60 seconds (acregmin, acregmax).
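To see why caching helps performance and why it can return stale attributes, consider a toy cache in which an entry is trusted for a fixed number of seconds. This is a deliberately simplified model, not the kernel's algorithm: the class name, the `fetch` callback standing in for a request to the server, and the single fixed timeout are all assumptions made for illustration.

```python
import time


class AttributeCache:
    """Toy attribute cache: entries are trusted for 'timeout' seconds."""

    def __init__(self, fetch, timeout=60.0, clock=time.monotonic):
        self._fetch = fetch        # callable(path) -> attributes, stands in for a server round trip
        self._timeout = timeout
        self._clock = clock
        self._entries = {}         # path -> (attributes, time fetched)

    def getattr(self, path):
        now = self._clock()
        cached = self._entries.get(path)
        if cached is not None and now - cached[1] < self._timeout:
            return cached[0]       # served locally, possibly stale
        attributes = self._fetch(path)
        self._entries[path] = (attributes, now)
        return attributes


if __name__ == "__main__":
    requests = []
    cache = AttributeCache(lambda p: requests.append(p) or {"path": p})
    cache.getattr("/mnt/data/file")
    cache.getattr("/mnt/data/file")
    print(len(requests), "server request(s) for two lookups")
```

Setting the timeout to zero models “noac”: every lookup goes to the server, which is where the performance penalty comes from.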

Asynchronous NFS

The default export behavior for both NFS Version 2 and Version 3 protocols, used by exportfs in nfs-utils versions prior to nfs-utils-1.0.1 is "asynchronous". This default permits the server to reply to client requests as soon as it has processed the request and handed it off to the local file system, without waiting for the data to be written to stable storage. This is indicated by the async option denoted in the server's export list. It yields better performance at the cost of possible data corruption if the server reboots while still holding unwritten data and/or metadata in its caches. This possible data corruption is not detectable at the time of occurrence, since the async option instructs the server to lie to the client, telling the client that all data has indeed been written to the stable storage, regardless of the protocol used.
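The difference between the two behaviours has a close local analogy, sketched below. This is not NFS server code: `write_async` returns as soon as the data has been handed to the operating system, much as an async export replies early, while `write_sync` waits for `os.fsync`, which is the kind of commit a sync export requires before replying. The function names are hypothetical.

```python
import os


def write_async(path, data):
    """Return once the data is handed to the OS; it may still sit in the page cache."""
    with open(path, "wb") as handle:
        handle.write(data)


def write_sync(path, data):
    """Return only after the OS reports the data is on stable storage."""
    with open(path, "wb") as handle:
        handle.write(data)
        handle.flush()                 # move Python's buffer into the OS
        os.fsync(handle.fileno())      # ask the OS to commit it to the device


if __name__ == "__main__":
    write_async("/tmp/async-demo.bin", b"fast, but lost if the machine dies now")
    write_sync("/tmp/sync-demo.bin", b"slower, but survives a crash after return")
```

Both calls leave the same bytes in the file when nothing goes wrong; they differ only in what is guaranteed if the machine fails right after the call returns.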

More details: http://nfs.sourceforge.net/nfs-howto/ar01s05.html#sync_versus_async

HA

NFS v4 is a viable option for mission-critical applications. Oracle, SAP and others sell NFS v4 HA solutions. NFS HA requires resilient compute, networking and storage:

1. Create a multipath HA environment on 2 RHEL hosts with VIPs.

Multipath HA is a hardware configuration that provides redundancy for the path from each disk controller to every disk shelf in the configuration. More details in https://library.netapp.com/ecmdocs/ECMP1210206/html/GUID-C4630743-898E-4AB1-A321-E6E38532CEF6.html.

2. Make sure that the physical network is resilient.

3. Configure the Red Hat Resilient Storage Add-on on the two hosts for path redundancy.

4. The storage device needs to be able to handle multipath HA. The shared storage device needs at least two nodes or controllers that are compatible with Resilient Storage.

5. If a path outage is intolerable to the application, use in-memory caching and commit with replication to the other node, so that the node can flush the cached data to the backing datastore. That way the application can recover, and the outage shows up to the user only as a minor delay (failover can usually occur in less than a couple of seconds).