Spinnaker Running a Script with Run Job (Manifest): Difference between revisions

| (25 intermediate revisions by the same user not shown) | |||

| Line 13: | Line 13: | ||

{{Note|⚠️ Make sure you use [[Spinnaker Stage Run Job Manifest#Overview|Run Job (Manifest)]] and NOT [[Spinnaker_Stage_Deploy_(Manifest)#Overview|Deploy (Manifest)]]!}} | {{Note|⚠️ Make sure you use [[Spinnaker Stage Run Job Manifest#Overview|Run Job (Manifest)]] and NOT [[Spinnaker_Stage_Deploy_(Manifest)#Overview|Deploy (Manifest)]]!}} | ||

[[Spinnaker Stage Run Job Manifest#Overview|Run Job (Manifest)]] only accepts Job manifests, so a ConfigMap to specify the script cannot be used. The functionality must be already present in the container image and can be controlled via <code>command</code> and arguments. The stage will deploy the job manifest and wait until it completes, thus gating the pipeline’s continuation on the job’s success or failure. The stage may optionally collect the Job output and inject it back into the pipeline. The Job will be automatically deleted upon completion. | [[Spinnaker Stage Run Job Manifest#Overview|Run Job (Manifest)]] only accepts [[Kubernetes_Job#Overview|Kubernetes Job]] manifests, so a ConfigMap to specify the script cannot be used. The functionality must be already present in the container image and can be controlled via <code>command</code> and arguments. The stage will deploy the job manifest and wait until it completes, thus gating the pipeline’s continuation on the job’s success or failure. The stage may optionally collect the Job output and inject it back into the pipeline. The Job will be automatically deleted upon completion. | ||

An alternative to run configurable functionality is to use [[Spinnaker_Running_a_Script_with_Deploy_(Manifest)#Overview|Running a Script with Deploy (Manifest)]], but in that case we lose the ability to inject output back into the pipeline. | An alternative to run configurable functionality is to use [[Spinnaker_Running_a_Script_with_Deploy_(Manifest)#Overview|Running a Script with Deploy (Manifest)]], but in that case we lose the ability to inject output back into the pipeline. | ||

| Line 44: | Line 44: | ||

restartPolicy: Never | restartPolicy: Never | ||

</syntaxhighlight> | </syntaxhighlight> | ||

==Configuration== | |||

===<tt>backoffLimit</tt>=== | |||

{{Internal|Kubernetes_Job_Manifest#backoffLimit|Kubernetes Job Manifest | backoffLimit}} | |||

=Viewing Execution Logs= | =Viewing Execution Logs= | ||

| Line 63: | Line 67: | ||

Output → Capture Output From → Logs. A Container Name must be specified. | Output → Capture Output From → Logs. A Container Name must be specified. | ||

===<tt>SPINNAKER_PROPERTY_*=*</tt>=== | ⚠️ Once set, "Capture Output From: Logs" implies that the job output contains the expected syntax ([[#w3te|SPINNAKER_PROPERTY_*=*]] or [[#b34g|SPINNAKER_CONFIG_JSON=...]]). If that output is missing, the job will fail with "Exception ( Wait On Job Completion ) Expected properties file automation-logic but it was either missing, empty or contained invalid syntax". On the other hand, if additional output is present, it won't interfere with the parsing of the expected syntax. | ||

===<span id='w3te'></span><tt>SPINNAKER_PROPERTY_*=*</tt>=== | |||

If the job sends to stdout <code>SPINNAKER_PROPERTY_<key>=<value></code>, then <code><key></code>:<code><value></code>, where <code><key></code> is converted to lowercase, shows up in the [[Spinnaker_Concepts#Stage_Output|stage output]] map <code>.stages[stage_index].outputs</code>, and also in the map <code>.stages[stage_index].outputs.propertyFileContents</code>. It also shows in the [[Spinnaker_Concepts#Stage_Context|stage context]] map <code>.stages[stage_index].context</code>, and also in the map <code>.stages[stage_index].context. propertyFileContents </code>. | If the job sends to stdout <code>SPINNAKER_PROPERTY_<key>=<value></code>, then <code><key></code>:<code><value></code>, where <code><key></code> is converted to lowercase, shows up in the [[Spinnaker_Concepts#Stage_Output|stage output]] map <code>.stages[stage_index].outputs</code>, and also in the map <code>.stages[stage_index].outputs.propertyFileContents</code>. It also shows in the [[Spinnaker_Concepts#Stage_Context|stage context]] map <code>.stages[stage_index].context</code>, and also in the map <code>.stages[stage_index].context. propertyFileContents </code>. | ||

| Line 97: | Line 103: | ||

} | } | ||

</syntaxhighlight> | </syntaxhighlight> | ||

===<tt>SPINNAKER_CONFIG_JSON</tt>=== | |||

The values can be accessed with: | |||

<syntaxhighlight lang='groovy'> | |||

${ execution.stages.?[ name == 'Script Job' ][0].outputs.color } | |||

</syntaxhighlight> | |||

or: | |||

<syntaxhighlight lang='groovy'> | |||

${ execution.stages.?[ name == 'Script Job' ][0].context.color } | |||

</syntaxhighlight> | |||

More key formats: | |||

<syntaxhighlight lang='text'> | |||

SPINNAKER_PROPERTY_COLOR.NUANCE=darkblue | |||

</syntaxhighlight> | |||

surfaces in "output"/"context" as: | |||

<syntaxhighlight lang='json'> | |||

{ | |||

"stages": [ | |||

{ | |||

"context": { | |||

"color.nuance": "Blue", | |||

... | |||

} | |||

} | |||

] | |||

} | |||

</syntaxhighlight> | |||

The SPEL lookup should be performed as follows:: | |||

<syntaxhighlight lang='groovy'> | |||

${ execution.stages.?[ name == 'Script Job' ][0].context['color.nuance'] } | |||

</syntaxhighlight> | |||

===<span id='b34g'></span><tt>SPINNAKER_CONFIG_JSON</tt>=== | |||

<font color=darkkhaki>TO PROCESS: https://spinnaker.io/docs/guides/user/kubernetes-v2/run-job-manifest/#spinnaker_config_json</font> | <font color=darkkhaki>TO PROCESS: https://spinnaker.io/docs/guides/user/kubernetes-v2/run-job-manifest/#spinnaker_config_json</font> | ||

If the Job container sends this to stdout: | |||

<syntaxhighlight lang='text'> | |||

SPINNAKER_CONFIG_JSON ={"color":"Blue","size","small"} | |||

</syntaxhighlight> | |||

when the stage completes, it carries the following structure in its JSON representation. | |||

Note that the JSON content must be on just one line! | |||

<syntaxhighlight lang='json'> | |||

{ | |||

"type": "PIPELINE", | |||

"stages": [ | |||

{ | |||

"type": "runJobManifest", | |||

"context": { | |||

"color": "Blue", | |||

"object": { | |||

"shape": "square", | |||

"size": "10" | |||

}, | |||

"propertyFileContents": { | |||

"color": "Blue", | |||

"object": { | |||

"shape": "square", | |||

"size": "10" | |||

} | |||

} | |||

}, | |||

"outputs": { | |||

"color": "Blue", | |||

"object": { | |||

"shape": "square", | |||

"size": "10" | |||

}, | |||

"propertyFileContents": { | |||

"color": "Blue", | |||

"object": { | |||

"shape": "square", | |||

"size": "10" | |||

} | |||

} | |||

} | |||

} | |||

] | |||

} | |||

</syntaxhighlight> | |||

The SPINNAKER_CONFIG_JSON map keys are merged into <code>context</code>, <code>contents.propertyFileContents</code>, <code>outputs</code> and <code>outputs.propertyFileContents</code>. | |||

The values can be accessed with: | |||

<syntaxhighlight lang='groovy'> | |||

${ execution.stages.?[ name == 'Script Job' ][0].outputs.color } | |||

</syntaxhighlight> | |||

or: | |||

<syntaxhighlight lang='groovy'> | |||

${ execution.stages.?[ name == 'Script Job' ][0].outputs.object.shape } | |||

</syntaxhighlight> | |||

====Inject a base64-encoded Embedded Artifact back into the Pipeline with <tt>SPINNAKER_CONFIG_JSON</tt>==== | |||

The content generated by the Job can be packaged as a base64-encoded artifact and injected back into the pipeline, to be used by subsequent stages: | |||

1. Generate the content in the Job and package it as a base64 element. | |||

<syntaxhighlight lang='bash'> | |||

cat > /tmp/test.yaml <<EOF | |||

a: | |||

b: | |||

c: 'something' | |||

EOF | |||

local content=$(cat /tmp/test.yaml | base64 | tr -d \\n) || exit 1 | |||

</syntaxhighlight> | |||

2. Generate the following JSON as value of <code>SPINNAKER_CONFIG_JSON</code>: | |||

<syntaxhighlight lang='json'> | |||

{ | |||

"artifacts": [ | |||

{ "type":"embedded/base64", | |||

"name": "job_generated_artifact", | |||

"reference": "${content}"} | |||

] | |||

} | |||

</syntaxhighlight> | |||

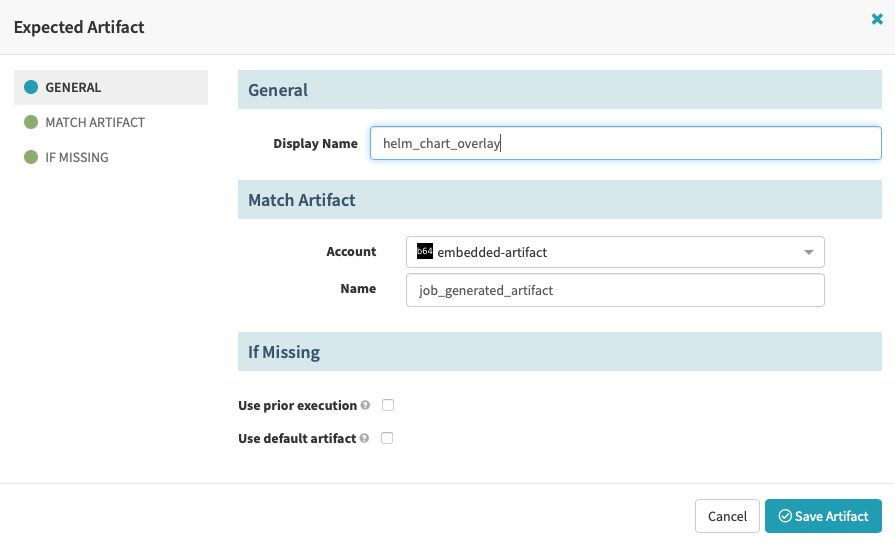

3. Configure the 'Run Job (Manifest)' 'Produces Artifacts" section with a new artifact that "matches" the base64-encoded job output, which is available to the pipeline as "job_generated_artifact": | |||

:::[[File:spinnaker_run_job_produces_artifact.png]] | |||

Upon the successful execution of the stage, the stage JSON will contain this: | |||

<syntaxhighlight lang='json'> | |||

{ | |||

"type": "PIPELINE", | |||

"stages": [ | |||

{ | |||

"name": "Automation Logic", | |||

"context": { | |||

"artifacts": [ | |||

{ | |||

"reference": "YXBwbGVDb25uZWN0...", | |||

"name": "helm_chart_overlay", | |||

"type": "embedded/base64" | |||

} | |||

] | |||

}, | |||

"outputs": { | |||

"artifacts": [ | |||

{ | |||

"name": "helm_chart_overlay", | |||

"reference": "YXBwbGVDb25uZWN0...", | |||

"type": "embedded/base64" | |||

} | |||

], | |||

"propertyFileContents": { | |||

"artifacts": [ | |||

{ | |||

"name": "helm_chart_overlay", | |||

"reference": "YXBwbGVDb25uZWN0...", | |||

"type": "embedded/base64" | |||

} | |||

] | |||

} | |||

} | |||

}, | |||

... | |||

], | |||

} | |||

</syntaxhighlight> | |||

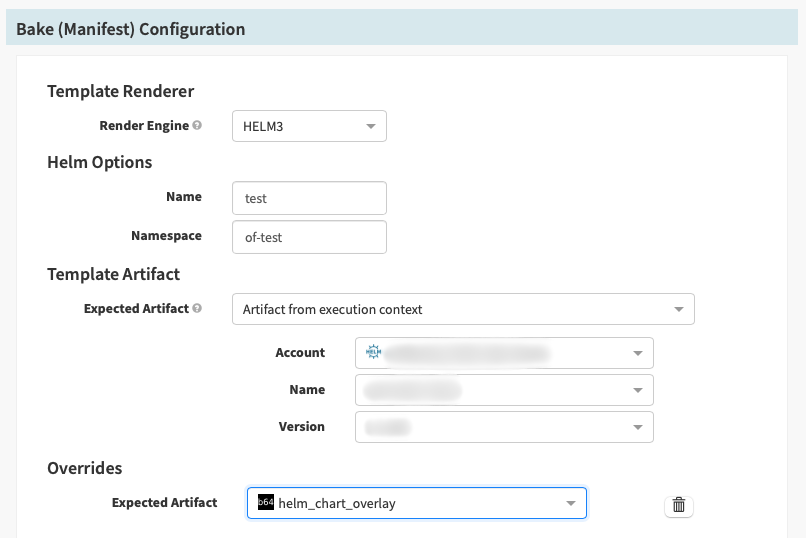

4. Configure the next stage to use the artifact produced by this stage: | |||

:::[[File:spinnaker_helm_overlay.png]] | |||

==Job-Generated Artifacts== | ==Job-Generated Artifacts== | ||

<font color=darkkhaki>TO PROCESS: https://spinnaker.io/docs/guides/user/kubernetes-v2/run-job-manifest/#artifacts</font> | <font color=darkkhaki>TO PROCESS: https://spinnaker.io/docs/guides/user/kubernetes-v2/run-job-manifest/#artifacts</font> | ||

Also see [[#Inject_a_base64-encoded_Embedded_Artifact_back_into_the_Pipeline_with_SPINNAKER_CONFIG_JSON|Inject a base64-encoded Embedded Artifact back into the Pipeline with SPINNAKER_CONFIG_JSON]] above. | |||

Latest revision as of 03:00, 10 May 2022

External

- https://kb.armory.io/s/article/Run-a-Generic-Shell-Script-with-Spinnaker

- https://spinnaker.io/docs/guides/user/kubernetes-v2/run-job-manifest/

- https://blog.spinnaker.io/extending-spinnaker-with-kubernetes-and-containers-5d16ec810d81

Internal

Overview

The execution phase is implemented as a Run Job (Manifest) stage.

⚠️ Make sure you use Run Job (Manifest) and NOT Deploy (Manifest)!

Run Job (Manifest) only accepts Kubernetes Job manifests, so a ConfigMap cannot be used to supply the script. The functionality must already be present in the container image, and it can be controlled through the container's command and arguments. The stage deploys the Job manifest and waits until the Job completes, gating the pipeline’s continuation on the Job’s success or failure. The stage can optionally collect the Job output and inject it back into the pipeline. The Job is automatically deleted upon completion.
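A minimal sketch of what "already present in the container image" can look like, assuming bash is available in the image: a hypothetical entrypoint script whose behavior is selected by the Job's command and args. The script path, the greet/version actions and all names below are illustrative assumptions, not part of this page.

```shell
#!/usr/bin/env bash
# Hypothetical entrypoint baked into the image, e.g. /usr/local/bin/script-job.sh.
# The Job manifest then selects the behavior, for example:
#   command: ["/usr/local/bin/script-job.sh"]
#   args: ["greet", "world"]
main() {
  local action="${1:-version}"   # default action when no args are given
  shift || true                  # drop the action; tolerate the "no args" case
  case "$action" in
    greet)
      echo "hello, ${1:-anonymous}"
      ;;
    version)
      echo "script-job 0.1"
      ;;
    *)
      echo "usage: script-job.sh [greet [name] | version]" >&2
      return 2
      ;;
  esac
}

main "$@"
```

A non-zero exit status (here 2 for an unknown action) eventually makes the Job fail, after the retries allowed by backoffLimit, and with it the stage.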

An alternative way to run configurable functionality is Running a Script with Deploy (Manifest), but in that case we lose the ability to inject output back into the pipeline.

Run Job (Manifest) Definition

Add a "Run Job (Manifest)" stage and name it "Script Job" or similar.

The Account is the target Kubernetes cluster.

In Manifest Configuration, set Manifest Source: Text and use the following manifest. Replace 'REPLACE-WITH-TARGET-NAMESPACE-NAME' with the target namespace for the Job.

Note that the Job name will be available in kubectl -n <namespace> get job and it will also surface in pod names, so choose something meaningful.

apiVersion: batch/v1
kind: Job
metadata:
  name: script-job
  namespace: REPLACE-WITH-TARGET-NAMESPACE-NAME
spec:
  backoffLimit: 2
  template:
    spec:
      containers:
        - command:
            - kubectl
            - version
          image: 'bitnami/kubectl:1.12'
          name: script
      restartPolicy: Never
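If the manifest is kept in a local file, the namespace placeholder can be filled in by a small script before the result is pasted into the stage. A sketch, assuming bash and sed; render_job_manifest and the file names are hypothetical:

```shell
#!/usr/bin/env bash
# Hypothetical helper: fills in the namespace placeholder of a Job manifest
# kept in a local file, so the result can be pasted into
# "Manifest Source: Text".
render_job_manifest() {
  local manifest_file="$1" namespace="$2"
  # Namespace names must be RFC 1123 DNS labels: at most 63 characters,
  # lowercase alphanumerics or '-', starting and ending with an alphanumeric.
  local re='^[a-z0-9]([-a-z0-9]*[a-z0-9])?$'
  if [[ ! $namespace =~ $re ]] || (( ${#namespace} > 63 )); then
    echo "invalid namespace: $namespace" >&2
    return 1
  fi
  # Safe to interpolate: the validated name contains no '/', '&' or '\'.
  sed "s/REPLACE-WITH-TARGET-NAMESPACE-NAME/${namespace}/g" "$manifest_file"
}
```

For example, render_job_manifest script-job.yaml my-team prints the manifest with namespace: my-team.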

Configuration

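backoffLimit

backoffLimit is the number of retries Kubernetes makes before marking the Job as failed; the manifest above sets it to 2 (the Kubernetes default is 6). See Kubernetes Job Manifest | backoffLimit.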

Viewing Execution Logs

The job execution log is captured and can be accessed in the pipeline Execution Details → Run Job Config → Logs → Console Output.

If the Spinnaker instance is configured to do so, logs can also be forwarded to dedicated logging infrastructure.

Also see:

Generating and Using Execution Results

A job produces some output, and the Spinnaker "Run Job (Manifest)" stage can be configured to "capture" it. Captured output is available in the pipeline context for use in downstream stages. There are two ways to capture output: from the stdout of one of the job's containers, or from an artifact produced by the job.

Capture Output from Container Stdout and Update Stage Outputs and Context

Output → Capture Output From → Logs. A Container Name must be specified.

⚠️ Once set, "Capture Output From: Logs" requires the job output to contain the expected syntax (SPINNAKER_PROPERTY_*=* or SPINNAKER_CONFIG_JSON=...). If that output is missing, the job fails with "Exception ( Wait On Job Completion ) Expected properties file automation-logic but it was either missing, empty or contained invalid syntax". Additional output, on the other hand, does not interfere with the parsing of the expected syntax.
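One way to avoid this failure on code paths that print nothing is to always emit at least one property. A minimal sketch, assuming bash in the image; run_with_status and the EXIT_CODE property name are hypothetical:

```shell
#!/usr/bin/env bash
# Hypothetical wrapper: runs the job logic in a subshell and always prints
# one SPINNAKER_PROPERTY_* line when that subshell exits, so the stdout
# capture never comes back empty.
run_with_status() {
  (
    # Inside an EXIT trap, $? is the status the subshell is exiting with.
    trap 'echo "SPINNAKER_PROPERTY_EXIT_CODE=$?"' EXIT
    "$@"
  )
}

# Example: the container command runs the real logic through the wrapper.
# run_with_status kubectl version
```

The wrapper keeps the original exit status, so a failing command still fails the Job; the extra property (surfacing as exit_code) mainly protects successful runs that would otherwise print no property at all.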

SPINNAKER_PROPERTY_*=*

If the job sends SPINNAKER_PROPERTY_<key>=<value> to stdout, then <key>:<value>, with <key> converted to lowercase, shows up in the stage output map .stages[stage_index].outputs and in the map .stages[stage_index].outputs.propertyFileContents. It also shows up in the stage context map .stages[stage_index].context and in the map .stages[stage_index].context.propertyFileContents.

So, if the designated Job container sends this to stdout:

SPINNAKER_PROPERTY_COLOR=Blue

when the stage completes, it carries this in its JSON representation:

{
  "type": "PIPELINE",
  "stages": [
    {
      "type": "runJobManifest",
      "context": {
        "color": "Blue",
        "propertyFileContents": {
          "color": "Blue"
        }
      },
      "outputs": {
        "color": "Blue",
        "propertyFileContents": {
          "color": "Blue"
        }
      }
    }
  ]
}

The values can be accessed with:

${ execution.stages.?[ name == 'Script Job' ][0].outputs.color }

or:

${ execution.stages.?[ name == 'Script Job' ][0].context.color }

More key formats:

SPINNAKER_PROPERTY_COLOR.NUANCE=darkblue

surfaces in "outputs"/"context" as:

{
  "stages": [
    {
      "context": {
        "color.nuance": "darkblue",
        ...
      }
    }
  ]
}

Because the key contains a dot, the SpEL lookup must use bracket notation:

${ execution.stages.?[ name == 'Script Job' ][0].context['color.nuance'] }
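To check a script's output before wiring it into a pipeline, the key handling described above can be approximated locally. A rough sketch, assuming bash; preview_properties is a hypothetical helper, not part of Spinnaker, and Spinnaker's real parser may differ in edge cases (for example whitespace handling):

```shell
#!/usr/bin/env bash
# Hypothetical local helper: approximates how SPINNAKER_PROPERTY_<key>=<value>
# lines in a job's stdout end up as lowercase <key>=<value> pairs.
preview_properties() {
  local line key value
  while IFS= read -r line; do
    case "$line" in
      SPINNAKER_PROPERTY_*=*)
        line=${line#SPINNAKER_PROPERTY_}
        key=${line%%=*}    # text before the first '='
        value=${line#*=}   # everything after the first '='
        key=$(printf '%s' "$key" | tr '[:upper:]' '[:lower:]')
        printf '%s=%s\n' "$key" "$value"
        ;;
      *)
        # Other output is ignored; it does not interfere with the parsing.
        ;;
    esac
  done
}
```

Piping printf 'SPINNAKER_PROPERTY_COLOR.NUANCE=darkblue\n' through preview_properties prints color.nuance=darkblue; any key that still contains a dot after lowercasing needs the bracket notation shown above.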

SPINNAKER_CONFIG_JSON

TO PROCESS: https://spinnaker.io/docs/guides/user/kubernetes-v2/run-job-manifest/#spinnaker_config_json

If the Job container sends this to stdout:

SPINNAKER_CONFIG_JSON={"color":"Blue","object":{"shape":"square","size":"10"}}

when the stage completes, it carries the following structure in its JSON representation.

Note that the JSON content must be on just one line!

{
  "type": "PIPELINE",
  "stages": [
    {
      "type": "runJobManifest",
      "context": {
        "color": "Blue",
        "object": {
          "shape": "square",
          "size": "10"
        },
        "propertyFileContents": {
          "color": "Blue",
          "object": {
            "shape": "square",
            "size": "10"
          }
        }
      },
      "outputs": {
        "color": "Blue",
        "object": {
          "shape": "square",
          "size": "10"
        },
        "propertyFileContents": {
          "color": "Blue",
          "object": {
            "shape": "square",
            "size": "10"
          }
        }
      }
    }
  ]
}

The SPINNAKER_CONFIG_JSON map keys are merged into context, context.propertyFileContents, outputs and outputs.propertyFileContents.

The values can be accessed with:

${ execution.stages.?[ name == 'Script Job' ][0].outputs.color }

or:

${ execution.stages.?[ name == 'Script Job' ][0].outputs.object.shape }
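Because the JSON must fit on one line, a job that builds a pretty-printed file has to flatten it before printing. A minimal sketch, assuming bash and a file that already holds valid JSON; emit_config_json and the file path are hypothetical:

```shell
#!/usr/bin/env bash
# Hypothetical helper: prints a possibly pretty-printed JSON file as a single
# SPINNAKER_CONFIG_JSON line. In valid JSON, raw line breaks can only be
# insignificant whitespace (inside strings they must be escaped as \n), so
# deleting them leaves the document's meaning unchanged.
emit_config_json() {
  local json_file="$1"
  local json
  json=$(tr -d '\r\n' < "$json_file") || return 1
  printf 'SPINNAKER_CONFIG_JSON=%s\n' "$json"
}
```

If jq is available in the image, jq -c . < file also produces a compact single line and validates the JSON along the way.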

Inject a base64-encoded Embedded Artifact back into the Pipeline with SPINNAKER_CONFIG_JSON

The content generated by the Job can be packaged as a base64-encoded artifact and injected back into the pipeline, to be used by subsequent stages:

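1. Generate the content in the Job and base64-encode it:

cat > /tmp/test.yaml <<EOF
a:
  b:
    c: 'something'
EOF
# Declare separately: "local content=$(...)" would hide a failure of base64.
local content
content=$(base64 < /tmp/test.yaml) || exit 1
# Remove the line breaks base64 inserts in long output.
content=${content//$'\n'/}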

2. Print the following JSON, on a single line, as the value of SPINNAKER_CONFIG_JSON, where ${content} is the base64 string from step 1:

{
  "artifacts": [
    {
      "type": "embedded/base64",
      "name": "job_generated_artifact",
      "reference": "${content}"
    }
  ]
}

3. In the "Produces Artifacts" section of the "Run Job (Manifest)" stage, add a new artifact that matches the base64-encoded job output, which is available to the pipeline as "job_generated_artifact".

Upon successful execution of the stage, the stage JSON contains something like the following (this sample comes from a run where the artifact was named "helm_chart_overlay"):

{
  "type": "PIPELINE",
  "stages": [
    {
      "name": "Automation Logic",
      "context": {
        "artifacts": [
          {
            "reference": "YXBwbGVDb25uZWN0...",
            "name": "helm_chart_overlay",
            "type": "embedded/base64"
          }
        ]
      },
      "outputs": {
        "artifacts": [
          {
            "name": "helm_chart_overlay",
            "reference": "YXBwbGVDb25uZWN0...",
            "type": "embedded/base64"
          }
        ],
        "propertyFileContents": {
          "artifacts": [
            {
              "name": "helm_chart_overlay",
              "reference": "YXBwbGVDb25uZWN0...",
              "type": "embedded/base64"
            }
          ]
        }
      }
    },
    ...
  ]
}

4. Configure the next stage to use the artifact produced by this stage.
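Steps 1 and 2 can be combined into a single function in the job's script. A sketch, assuming bash and coreutils base64; emit_embedded_artifact and its arguments are hypothetical, and the artifact name is inserted verbatim, so it must not contain characters that need JSON escaping:

```shell
#!/usr/bin/env bash
# Hypothetical helper combining steps 1 and 2: base64-encodes a file produced
# by the job and prints it as an embedded/base64 artifact on one
# SPINNAKER_CONFIG_JSON line.
emit_embedded_artifact() {
  local file="$1" name="$2"
  local content
  # Assign separately from "local" so a failure of base64 (or of the input
  # redirection) is not hidden behind the status of "local" itself.
  content=$(base64 < "$file") || return 1
  # Remove the line breaks base64 inserts in long output.
  content=${content//$'\n'/}
  # The base64 alphabet (A-Z, a-z, 0-9, '+', '/', '=') needs no JSON escaping.
  printf 'SPINNAKER_CONFIG_JSON={"artifacts":[{"type":"embedded/base64","name":"%s","reference":"%s"}]}\n' \
    "$name" "$content"
}
```

For example, emit_embedded_artifact /tmp/test.yaml job_generated_artifact prints the line that the "Produces Artifacts" entry from step 3 is expected to match.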

Job-Generated Artifacts

TO PROCESS: https://spinnaker.io/docs/guides/user/kubernetes-v2/run-job-manifest/#artifacts

Also see Inject a base64-encoded Embedded Artifact back into the Pipeline with SPINNAKER_CONFIG_JSON above.