Httpd mod proxy Concepts

Internal

Overview

mod_proxy implements generic forward and reverse proxying functionality: a request is received by httpd and forwarded to another server, commonly referred to as the origin server.

Proxying does not necessarily involve load balancing. However, httpd is capable of proxying to a set of equivalent origin servers, which is commonly referred to as load balancing. For that, mod_proxy_balancer must be installed and configured.

Reverse Proxy (Gateway)

A reverse proxy (or gateway) appears to the client just like an ordinary web server. No special configuration on the client is necessary. The client makes ordinary requests for content in the name-space of the reverse proxy. The reverse proxy then decides which origin server to forward the requests to, and returns the content as if it were itself the origin. The reverse proxy may also employ configurable logic to modify the response headers set by the origin server so that the response appears to originate from the proxy. For more details see ProxyPassReverse, ProxyPassReverseCookieDomain and ProxyPassReverseCookiePath.

A typical usage of a reverse proxy is to provide Internet users access to an origin server sitting behind a firewall. Reverse proxies can also be used to balance load among several back-end servers, or to provide caching for a slower back-end server. In addition, reverse proxies can be used simply to bring several servers into the same URL space.

The configuration of a reverse proxy starts with the declaration of a ProxyPass directive, which is the entry point that maps the URL of one or more origin servers into the proxy's URL space. ProxyPass defines a worker, which can be a direct worker, when there is only one origin server, or a balancer worker, when there are several load-balanced origin servers.
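As an illustration of the directives named in this section, a minimal reverse proxy sketch could look as follows. The host names (backend.example.com, www.example.com) and the /shop and /app paths are placeholders chosen for the example.

```apache
# Map the origin server's /app into the proxy's URL space as /shop.
# This declares a direct worker for backend.example.com.
ProxyPass "/shop" "http://backend.example.com/app"

# Rewrite Location, Content-Location and URI response headers so that
# redirects issued by the origin point at the proxy instead.
ProxyPassReverse "/shop" "http://backend.example.com/app"

# Rewrite the domain and path attributes of Set-Cookie headers
# (internal value first, public value second).
ProxyPassReverseCookieDomain "backend.example.com" "www.example.com"
ProxyPassReverseCookiePath "/app" "/shop"
```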

httpd implements a reverse proxy using the mod_proxy modules. Various protocols can be proxied. The most common are HTTP and AJP. For an explanation of how mod_proxy modules work together, see the "mod_proxy Modules and How they Work Together" section below.

Forward Proxy

A forward proxy is an intermediate server that sits between the client and the origin server. In order to get content from the origin server, the client sends a request to the proxy naming the origin server as the target and the proxy then requests the content from the origin server and returns it to the client. The client must be specially configured to use the forward proxy to access other sites. httpd can be used to implement a forward proxy with mod_proxy. The forward proxy is activated using the ProxyRequests directive.
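A forward proxy configuration might be sketched as below. This assumes httpd 2.4 access-control syntax (Require); internal.example.com is a placeholder for the set of clients allowed to use the proxy.

```apache
# Enable forward proxying (a reverse proxy does not need this directive).
ProxyRequests On
ProxyVia On

# Restrict who may use the forward proxy; an open proxy is a security risk.
<Proxy "*">
    Require host internal.example.com
</Proxy>
```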

The ProxyPass Directive

The ProxyPass directive is the configuration entry point that maps one or more origin server URLs into the URL space of the proxy's main server or virtual host. ProxyPass defines a worker, which can be a direct worker, when there is only one origin server, or a balancer worker, when there are several load-balanced origin servers.

A ProxyPass configuration options reference is available here:

Workers

A worker is an object maintained by the httpd server to manage the configuration and communication parameters for one origin server or, in the case of a balancer worker, for a group of origin servers. The worker object itself does not have the concept of forward or reverse proxying; it just encapsulates the concept of communication with an origin server. There are two built-in workers: the default forward proxy worker and the default reverse proxy worker. The built-in workers do not use HTTP Keep-Alive: they open a new TCP connection to the origin server for each request, and they do not do connection pooling.

Explicit workers are declared in the configuration. An explicit worker is identified by its URL, which includes the address of the origin server or the balancer group, the path that maps the origin server(s) into the proxy's URL space, and optionally connection configuration elements. For a reverse proxy, an explicit worker is created and configured using the ProxyPass and ProxyPassMatch directives. Each worker so defined uses a separate connection pool and configuration. Declaring explicit workers for forward proxies is not very common, because a forward proxy (activated with ProxyRequests) usually talks to many different origin servers through the default forward proxy worker.

Worker Status

The initial worker status can be set via configuration and is reported by mod_status.

D: worker is disabled and will not accept any requests.

S: worker is administratively stopped.

I: worker is in ignore-errors mode and will always be considered available.

H: worker is in hot-standby mode and will only be used if no other viable workers are available.

E: worker is in error state.

N: worker is in drain mode and will only accept existing sticky sessions destined for itself and ignore all other requests.
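The initial status is set with the status parameter, using the flag letters listed above prefixed with "+" (set) or "-" (clear). A small sketch, with hypothetical member names node1 to node4:

```apache
<Proxy "balancer://mycluster">
    BalancerMember "http://node1"
    BalancerMember "http://node2"
    # Hot standby: used only when no other viable member is available.
    BalancerMember "http://node3" status=+H
    # Starts disabled; it can be enabled later, e.g. through balancer-manager.
    BalancerMember "http://node4" status=+D
</Proxy>
```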

Direct Workers

A direct worker is defined with a simple ProxyPass directive, where its URL entry represents just one origin server.

ProxyPass "/example" "http://backend.example.com" connectiontimeout=5 timeout=30

Worker Sharing

The order in which ProxyPass directives are declared matters.

If two different ProxyPass directives whose origin server URLs overlap (one URL is a leading substring of the other) are declared in the same configuration file, and the shorter one is declared first, only one worker is created, for the shorter URL. Requests matched by the ProxyPass with the longer URL will be proxied using that single worker, and all configuration attributes given explicitly for the later worker will be ignored.

If the longer URL is declared first, two different workers will be created.
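The effect of declaration order can be illustrated with the following sketch; the paths and timeout values are arbitrary.

```apache
# Shorter origin URL first: the second directive reuses the first worker,
# so its timeout=10 is ignored.
ProxyPass "/apps"     "http://backend.example.com/"         timeout=60
ProxyPass "/examples" "http://backend.example.com/examples" timeout=10

# Longer origin URL first (alternative ordering): two workers are created,
# each with its own timeout and connection pool.
# ProxyPass "/examples" "http://backend.example.com/examples" timeout=10
# ProxyPass "/apps"     "http://backend.example.com/"         timeout=60
```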

Also see

Balancer Workers

Balancer workers are virtual workers that use direct workers, known as their members, to handle requests. The balancer worker does not itself communicate with a backend server. It is only responsible for managing its members and for balancing the requests among them.

A balancer worker is declared with a ProxyPass directive where the URL entry uses "balancer://" as the protocol scheme. The balancer URL uniquely identifies the balancer worker.

Each balancer can have multiple members. The member the next request is sent to is chosen based on the load balancer algorithm in effect. Members are added to a balancer by declaring them with the BalancerMember directive, within a Proxy container, as shown below. If the Proxy directive's URL starts with "balancer://", then any path information in the URL is ignored.

ProxyPass "/example" "balancer://mycluster/"

<Proxy balancer://mycluster>

BalancerMember http://node1/

BalancerMember http://node2/

</Proxy>

The concepts behind the mod_proxy load balancing are presented here:

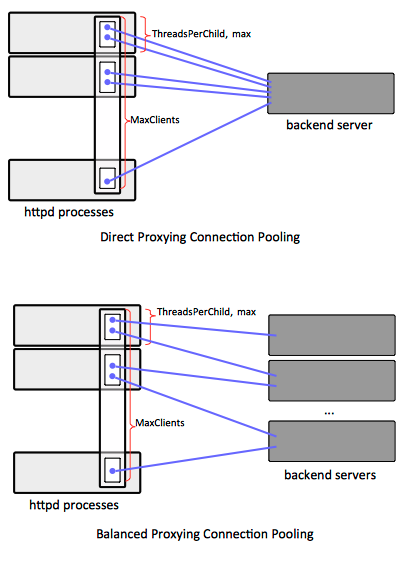

Connection Pooling

This section provides more details on connection pooling for explicit workers. Each explicit (direct or balancer) worker defined with a ProxyPass directive retains the TCP/IP connections created to forward requests to the backend nodes in a connection pool, for further reuse, even if the browser closes the original connection that required proxying. This behavior saves system resources by opening and closing fewer TCP connections, and it reduces the latency of subsequent requests, since no time is spent on the TCP connection handshake.

The connection pools so defined can be configured independently on a per-ProxyPass basis, subject to worker sharing rules. Explicit connection pool configuration can be declared in the form of "key=value" parameters, trailing the ProxyPass declaration.

For an httpd proxy running in worker MPM mode, the connection pool can be thought of as logically spanning all httpd child processes active at a time: connections can be opened and maintained from any httpd child process, and they are subject to the connection pool capacity rules described below.

Connection Creation

The connections are lazily initiated. A connection is created only when there is a request to forward, and there aren't other available connections in the pool that can be used to forward that request. If the backend servers are not available when the httpd proxy starts, this fact won't become obvious until the first request to be proxied arrives at the server. If at that time there are still no backend servers available, the proxy will respond with 503 Service Temporarily Unavailable. After the request is handled, the newly created connection is retained in the pool.

Initial connection creation is subject to a timeout. The httpd proxy process will wait for the configured number of seconds for the connection to be established, and it will time out if it is not. The connection creation timeout is controlled by the connectiontimeout parameter.

No Pooling

Connection pooling can be explicitly disabled with the disablereuse parameter. Setting this parameter to "On" forces mod_proxy to immediately close a connection to the backend after being used, effectively disabling its persistent connection pool for the backend. This is useful in various situations where a firewall between httpd and the backend server silently drops connections.
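For example, pooling could be turned off for a single worker as sketched here (the backend address is a placeholder):

```apache
# Close the backend connection after every request instead of pooling it.
ProxyPass "/example" "http://backend.example.com" disablereuse=On
```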

Connection Pool Capacity

For an httpd proxy running in worker MPM mode, the maximum number of connections in a ProxyPass' logical connection pool spanning all child processes is given by default by the value of the MaxClients directive (renamed MaxRequestWorkers in httpd 2.4). This assumes there is just one ProxyPass declaration per server configuration. The number of connections permitted for a single httpd child process is limited to the value of the ThreadsPerChild directive, per worker MPM sizing rules.

ProxyPass exposes options that can be used to override the generic sizing directives mentioned above.

The "max" configuration element sets an upper limit on the number of connections for the corresponding ProxyPass that can be maintained by one worker MPM child process. This limits the total number of connections in the connection pool for the corresponding ProxyPass directive to max * number_of_httpd_child_processes. max cannot exceed the value of the ThreadsPerChild directive: if it is set to a value higher than ThreadsPerChild, it will be silently disregarded.

Another per-ProxyPass connection pool capacity configuration element is smax. If smax is set and it is lower than max, the connections above the smax limit are shut down after ttl.
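The sizing rules above can be made concrete with a worked example. The figures are assumptions chosen for illustration: 4 active child processes with ThreadsPerChild set to 25.

```apache
# Assumed MPM sizing: 4 child processes, ThreadsPerChild 25.
#
# max=20  -> at most 20 backend connections per child process,
#            so at most 20 * 4 = 80 connections in the logical pool.
# smax=5  -> connections above 5 per child are shut down after ttl.
# ttl=120 -> inactivity period, in seconds, after which they expire.
ProxyPass "/example" "http://backend.example.com" max=20 smax=5 ttl=120
```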

Connection Time to Live

Once established, the connections to the backend will last forever, unless the child process that created them is stopped, the ttl parameter was configured and its value is reached, or a connection failure is detected. If ttl is specified, and a connection is inactive for ttl seconds, the connection will not be used again. However, that connection won't be closed immediately, but at a later time. Based on experiments with httpd 2.2, it seems that an "expired" connection is closed when a new request for that backend arrives at the proxy. At that time, the "expired" connection is closed and a new one is created.

Connection Behavior

Once a new request arrives at the child process, a free connection is retrieved from the pool and used to service the request. If acquire is set, the server will only wait for the specified number of milliseconds, and if no free connection is available, it will return SERVER_BUSY status to the client.

The proxy will wait for the configured number of seconds to receive a response from the backend server, and if the answer does not arrive in time, it returns a 502 Proxy Error to the client. The underlying connection is not closed. This event surfaces in the httpd proxy error log as "(70007) The timeout specified has expired: proxy: error reading status line from remote server ...". The timeout can be configured, in order of precedence, with the ProxyPass timeout parameter, the ProxyTimeout configuration directive, and finally the Timeout configuration directive.

A failure detected on the connection puts the connection in an error state. The error state of a connection can also be detected by pinging. The worker will not forward any request to a connection in an error state until the retry timeout, configured with the retry parameter, expires. A value of zero means always retry workers in an error state, with no timeout.
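The parameters discussed in this section could be combined as in the sketch below. All numeric values are arbitrary examples.

```apache
# Server-wide defaults, lowest precedence first.
Timeout 60
ProxyTimeout 30

# Per-worker settings:
#   acquire=3000  wait at most 3000 ms for a free pooled connection
#   timeout=10    wait at most 10 s for the backend's response
#                 (takes precedence over ProxyTimeout and Timeout)
#   retry=30      do not retry a worker in error state for 30 s
ProxyPass "/example" "http://backend.example.com" acquire=3000 timeout=10 retry=30
```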

Backend Pinging

The proxy can ping the backend before forwarding a request over a certain connection in the pool, to ensure the connection is valid. For HTTP, mod_proxy_http implements it by sending the request with an "Expect: 100-Continue" header to the backend. This will only work with HTTP/1.1 backends. For AJP, mod_proxy_ajp implements it by sending a CPING request, which works with Tomcat 3.3.2+, 4.1.28+ and 5.0.13+. The number of seconds to wait for the answer is configured with the ping parameter.
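A sketch of enabling the ping for an AJP and an HTTP backend follows. The host name, the AJP port 8009 and the 2-second wait are assumptions for illustration.

```apache
# AJP backend: send CPING and wait up to 2 seconds for CPONG.
ProxyPass "/app" "ajp://backend.example.com:8009/app" ping=2

# HTTP/1.1 backend: wait up to 2 seconds for the 100-Continue response.
ProxyPass "/web" "http://backend.example.com/web" ping=2
```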

TCP Keep-Alive

If there is no further traffic, and no ttl is explicitly configured, the connections in the pool are left to idle indefinitely. If there is a firewall between the proxy and the backend server, it may close them. To prevent that, httpd allows configuration of a keepalive flag that turns on the TCP keep-alive mechanism.
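For instance (the backend address is a placeholder; how often keep-alive probes are actually sent depends on operating system settings, not on httpd):

```apache
# Ask the OS to send TCP keep-alive probes on idle pooled connections.
ProxyPass "/example" "http://backend.example.com" keepalive=On
```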

Miscellaneous

Connection Pooling Configuration Reference

Load Balancing

mod_proxy supports several load balancing algorithms, described below. A specific algorithm is configured using the lbmethod configuration element. Load balancing consists in distributing requests to multiple, equivalent backend nodes, referred to as balancer members.
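As a sketch, the algorithm can be selected with lbmethod on the balancer, here through ProxySet. The member names and load factors are hypothetical, and on httpd 2.4 the matching mod_lbmethod_bytraffic module is assumed to be loaded.

```apache
ProxyPass "/example" "balancer://mycluster"

<Proxy "balancer://mycluster">
    # node1 is weighted to receive roughly twice the share of node2.
    BalancerMember "http://node1" loadfactor=2
    BalancerMember "http://node2" loadfactor=1
    # Balance by bytes transferred rather than by request count.
    ProxySet lbmethod=bytraffic
</Proxy>
```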

Multiple Connections per Balancer Member

As described in the Connection Pooling section, the proxy maintains several TCP/IP connections to each balancer member. The maximum number of connections for the entire server and per balancer worker are subject to the rules described in the Connection Pool Capacity section, above. Depending on the load balancing method, the method's configuration and how specific requests are handled, some members may end up with more connections than others, as the total number of connections allowed for a proxy instance is dynamically distributed among members.

Member Error State

The load balancer monitors the health status of the connections to the backend nodes and the HTTP responses returning on those connections, and avoids sending new requests to members that are found to be in error state. A member can be marked as being in error state if one of the following events happens:

- the backend node fails and the load balancer cannot establish a new connection to it.

- the backend returns any of the HTTP status codes configured with failonstatus.

- there is a timeout, configured with failontimeout, on receiving the response from the backend.

The load balancer will continue to load balance requests among the remaining members. In the worst case, all members fail, and that condition is reflected in the logs by the following message:

[error] proxy: BALANCER: (balancer://mycluster). All workers are in error state
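The status-code and timeout triggers listed above are balancer parameters. A sketch, where the status codes 500 and 503 and the 10-second timeout are arbitrary choices:

```apache
# Mark a member as failed when it answers 500 or 503, or when reading
# its response times out.
ProxyPass "/example" "balancer://mycluster" failonstatus=500,503 failontimeout=On

<Proxy "balancer://mycluster">
    BalancerMember "http://node1" timeout=10
    BalancerMember "http://node2" timeout=10
</Proxy>
```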

Member Recovery from Error

The general approach for a member that was marked as being in error state is to avoid that member for a while, and then resume sending requests to it. If the member recovers in the meantime, new connections will be created and requests will be processed successfully. If the member does not recover, it will be marked as being in error state again.

However, when all members of a balancer are in error state, the default configuration short-circuits this behavior by instructing the load balancer to skip the waiting period and send requests to the members immediately. This behavior is controlled by the forcerecovery configuration element, which is "On" by default.

If forcerecovery is explicitly switched to "Off", the load balancer will wait retry seconds before retrying the member again.

This is a recommended configuration to avoid thrashing when a member fails and does not recover:

ProxyPass / balancer://mycluster/ forcerecovery=Off ...

<Proxy balancer://mycluster>

BalancerMember http://node1 retry=30

BalancerMember http://node2 retry=30

...

</Proxy>

Session Stickiness

The load balancer supports session stickiness: if the balancer identifies a request as being associated with a known session, and it was configured to ensure session stickiness, it will forward the request to the backend node indicated by the routing information found in the session identification information, which comes as part of the request.

mod_proxy_balancer implements session stickiness in two different ways, with cookies and with URL encoding: the session identification information propagates between invocations either as the value of a specific session cookie, or as a session ID encoded within the URL. The name of the cookie can be configured with stickysession. The content of the cookie can be configured with stickysessionsep and scolonpathdelim. The cookie value can be generated either by the back-end or by the Apache web server itself. The URL encoding is usually done on the back-end.

When session stickiness is implemented with cookies, the balancer extracts the value of the cookie and looks for a member worker whose route equals the value extracted from the cookie. For more details on how to configure the route, see route.

Apache Tomcat adds the name of the Tomcat instance to the end of its session ID cookie, separated by a dot (.) from the session ID. Thus, if the Apache web server finds a dot in the value of the stickiness cookie, it only uses the part after the dot to search for the route. In order to let Tomcat know about its instance name, you need to set the jvmRoute attribute inside the Tomcat configuration file conf/server.xml to the value of the route of the worker that connects to the respective Tomcat.
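The two cookie-based approaches can be sketched as follows. These are alternative configurations, not meant to be combined. The cluster names, node names, the AJP port 8009 and the ROUTEID cookie name are assumptions for illustration; the second variant requires mod_headers.

```apache
# Variant 1: the backend (e.g. Tomcat) issues the session cookie.
# Each Tomcat's jvmRoute in conf/server.xml is assumed to match the
# route of the member that connects to it (node1, node2).
ProxyPass "/app" "balancer://appcluster" stickysession=JSESSIONID|jsessionid
<Proxy "balancer://appcluster">
    BalancerMember "ajp://node1:8009" route=node1
    BalancerMember "ajp://node2:8009" route=node2
</Proxy>

# Variant 2: httpd itself issues a routing cookie whenever the balancer
# picks a new route for the client.
Header add Set-Cookie "ROUTEID=.%{BALANCER_WORKER_ROUTE}e; path=/" env=BALANCER_ROUTE_CHANGED
ProxyPass "/site" "balancer://sitecluster" stickysession=ROUTEID
<Proxy "balancer://sitecluster">
    BalancerMember "http://node1" route=1
    BalancerMember "http://node2" route=2
</Proxy>
```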

The load balancer exports session stickiness-specific environment variables:

The load balancer manages session failover, which is enabled by default (nofailover="Off"). If the member that was supposed to handle the session becomes unavailable, the load balancer will fail over the request to an alternate node. A session replication mechanism must be in place on the backend for this to work. The load balancer can be configured to attempt failover a set number of times, after which it will return an error status to the client. The number of failover attempts is configured with maxattempts. There are situations when failover should be disabled, for example when the backend servers do not replicate the session among nodes. In this case, a session failover attempt would be pointless.
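Both failover settings are balancer parameters. A sketch showing the two alternatives, with a hypothetical cluster name and an arbitrary attempt count:

```apache
# Backends replicate sessions: allow up to 2 failover attempts.
ProxyPass "/app" "balancer://mycluster" stickysession=JSESSIONID|jsessionid maxattempts=2

# Backends do not replicate sessions: disable failover instead.
# ProxyPass "/app" "balancer://mycluster" stickysession=JSESSIONID|jsessionid nofailover=On
```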

Load Balancing Methods

Load Balancer Member Monitoring and Management

General monitoring capability is provided by mod_status:

Load balancer members can be managed with the "balancer-manager" handler:
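A minimal sketch of exposing both handlers, assuming httpd 2.4 access-control syntax and that access should be limited to the local machine:

```apache
# Worker and balancer status, reported by mod_status.
<Location "/server-status">
    SetHandler server-status
    Require local
</Location>

# Interactive management of balancer members.
<Location "/balancer-manager">
    SetHandler balancer-manager
    Require local
</Location>
```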

Load Balancing Troubleshooting

This article offers hints on how to troubleshoot load balancing and stickiness problems:

Load Balancing Configuration Reference

mod_proxy Modules and How they Work Together

The proxy functionality requires a set of modules to be loaded and work together. For more details on how to load a module, see mod_proxy Installation section.
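For a load-balanced HTTP/AJP reverse proxy on httpd 2.4, the set of modules to load might look like the sketch below. The modules/ path is the conventional default and may differ per installation; on httpd 2.2 the slotmem and lbmethod modules do not exist as separate modules.

```apache
LoadModule proxy_module               modules/mod_proxy.so
LoadModule proxy_http_module          modules/mod_proxy_http.so
LoadModule proxy_ajp_module           modules/mod_proxy_ajp.so
LoadModule proxy_balancer_module      modules/mod_proxy_balancer.so
# Shared memory provider used by the balancer (httpd 2.4).
LoadModule slotmem_shm_module         modules/mod_slotmem_shm.so
# At least one scheduler algorithm (httpd 2.4).
LoadModule lbmethod_byrequests_module modules/mod_lbmethod_byrequests.so
```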

mod_proxy

This module lets the server act as proxy for data transferred over AJP, FTP, CONNECT (for SSL, the Secure Sockets Layer), and HTTP. To serve specific protocols, mod_proxy relies on specialized implementations provided by mod_proxy_http, mod_proxy_ajp, mod_proxy_ftp, mod_proxy_wstunnel, etc. The load balancing algorithms are implemented by mod_proxy_balancer.

Also see:

More details:

mod_proxy Compilation

mod_proxy_http

mod_proxy_http proxies HTTP and HTTPS requests and it supports HTTP/1.0 and HTTP/1.1. It requires mod_proxy. It does not provide any caching capabilities.

More details:

Reverse Proxy Request Headers

When implementing a reverse proxy, mod_proxy_http adds the following request headers that carry additional information to the origin server:

- X-Forwarded-For - the IP address of the client

- X-Forwarded-Host - the original host requested by the client in the Host HTTP request header

- X-Forwarded-Server - the hostname of the proxy server.
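If the origin server is itself an httpd instance, these headers can be surfaced in its access log. A sketch for the origin server's configuration, where the format nickname "proxied" and the log path are arbitrary:

```apache
# Log the forwarded client address and originally requested host
# ahead of the usual common-log fields.
LogFormat "%{X-Forwarded-For}i %{X-Forwarded-Host}i %l %u %t \"%r\" %>s %b" proxied
CustomLog "logs/access_log" proxied
```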

mod_proxy_ajp

mod_proxy_ajp is an httpd module that provides support for AJP proxying. mod_proxy.so is required to use this module.
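With both modules loaded, an AJP worker is declared by using the ajp:// scheme in ProxyPass. The host name, the port 8009 (Tomcat's conventional AJP port) and the /app path below are assumptions for illustration.

```apache
ProxyPass        "/app" "ajp://backend.example.com:8009/app"
ProxyPassReverse "/app" "ajp://backend.example.com:8009/app"
```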

Also see:

mod_proxy_ajp Compilation


mod_proxy_balancer

Proxying does not necessarily involve load balancing. httpd is capable of providing load balancing functionality, in addition to reverse proxying, if mod_proxy_balancer is installed and configured. mod_proxy_balancer provides the load balancing logic and depends on mod_proxy. The load balancing scheduler algorithms are not provided by this module, but by additional, specialized modules that must be loaded explicitly: mod_lbmethod_byrequests, mod_lbmethod_bytraffic, mod_lbmethod_bybusyness and mod_lbmethod_heartbeat.

More details:

Also see:

<Proxy> Control Block

Proxying Requests that Contain Bodies

Also see HTTP Request Body.