Spinnaker Concepts

Latest revision as of 04:22, 31 May 2023

External

- https://spinnaker.io/docs/concepts/

- Managed Delivery: Evolving Continuous Delivery at Netflix by Michael Galloway

Internal

Overview

Spinnaker is an open-source continuous delivery (CD) solution, created by Netflix. Spinnaker provides two core services: application deployment and application management.

Application Management

Spinnaker application management relies on a domain model that includes applications, clusters, server groups, load balancers and firewalls.

Application

An application models a microservice. The application represents the service to be deployed using Spinnaker, the configuration for the service, and the infrastructure on which the service will run. The infrastructure is organized into clusters, where each cluster is a collection of server groups, plus the required firewalls and load balancers. The application also logically includes the pipelines that take the service through deployment to production, as well as canary configurations.

Application Configuration Elements

Name

A unique name to identify this application.

Owner Email

Repo Type

The platform hosting the code repository for this application. Values: github, stash, bitbucket, gitlab. When creating the application, this has informative value only: no connection to any repository is attempted.

Repo Project

According to in-line documentation: source repository project name. When creating the application, this has informative value only: no connection to any repository is attempted, and the project name is not resolved in the backend.

Repo Name

According to in-line documentation: source repository name (not the URL). When creating the application, this has informative value only: no connection to any repository is attempted, and the repository name is not resolved in the backend.

Description

Cloud Providers

- aws

- ecs

- kubernetes

Consider only cloud provider health when executing tasks

When this option is enabled, instance status as reported by the cloud provider will be considered sufficient to determine task completion. When this option is disabled, tasks will normally need health status reported by some other health provider (e.g. a load balancer or discovery service) to determine task completion. More research on this.

Show health override option for each operation

When this option is enabled, users will be able to toggle the option above on a task-by-task basis.

Simply enabling the "Consider only cloud provider health when executing tasks" option above is usually sufficient for most applications that want the same health provider behavior for all stages. Note that pipelines will require manual updating if this setting is disabled in the future.

Instance Port

This field is only used to generate links within Spinnaker to a running instance when viewing an instance's details.

The instance port can be used or overridden for specific links configured for your application (via the Config screen).

Pipeline Behavior

Enable restarting running pipelines

When this option is enabled, users will be able to restart pipeline stages while a pipeline is still running. Restarting stages this way can have unexpected results, so enabling this option is not recommended.

Enable re-run button on active pipelines

When this option is enabled, the re-run option also appears on active executions. This is usually not needed but may sometimes be useful for submitting multiple executions with identical parameters.

Permissions

To read from this application, a user must be a member of at least one group with read access. To write to this application, a user must be a member of at least one group with write access.

If no permissions are specified, the default behavior is that any user can read from or write to this application. These permissions will only be enforced if Fiat is enabled.

However, a Spinnaker instance can be configured so that application creation fails when no permissions are specified.

Application Operations

Cluster

A cluster is a logical grouping of server groups. A Spinnaker cluster does not necessarily map to a Kubernetes cluster. It is a collection of server groups, irrespective of any Kubernetes clusters that might be included in the underlying architecture.

PROCESS: https://spinnaker.io/docs/concepts/clusters/

Server Group

The server group identifies the deployable artifact (VM image, container image, source location) and basic configuration such as the number of instances, autoscaling policies, metadata, etc. A server group is optionally associated with a load balancer and a firewall. When deployed, the server group is a collection of instances of the running software (VM instances, Kubernetes pods, etc.).

Load Balancer

In Kubernetes, Load Balancers are Kubernetes Services with special Spinnaker annotations (app.kubernetes.io/managed-by: spinnaker). Load Balancers for an application can be created with the Spinnaker UI and also with the CLI. To create a Load Balancer with the UI, go to the "LOAD BALANCER" left-menu item → Create Load Balancer, then specify the "Account" (the Kubernetes cluster) and the Kubernetes Service manifest:

kind: Service
apiVersion: v1
metadata:
  name: synthetic-spinnaker
spec:
  selector:
    app: smoke
  ports:
    - protocol: TCP
      port: 8080
      targetPort: 8080

By default, the service is created in the "default" namespace. To create it in a different namespace, add namespace: <namespace-name> to the metadata section.

Firewall

Application Deployment

Spinnaker can be used to manage continuous delivery workflows, via its application deployment features. The application deployment features are exercised by creating and managing pipelines.

Pipeline

Trigger

Trigger Types

Manual Trigger

A pipeline can be manually triggered from the UI ("Start Manual Execution") or via the command line with spin pipeline execute.

Docker Registry Trigger

Permissions

- Type: Docker Registry

- Registry Name: the name used when the Docker registry was registered with the Spinnaker instance (e.g. synthetic-registry-name-specified-during-onboarding).

- Organization: my-namespace

- Image: my-namespace/my-image

- Tag: optional; the trigger worked with an empty tag. If specified, only the tags that match this Java regular expression trigger the pipeline. Leave empty to trigger on any tag pushed. The pipeline is not triggered by the latest tag or by updates to existing tags. ⚠️ If "older" semantic version tags are pushed after the pipeline has already run for newer ones, the pipeline is not triggered.

- Artifact Constraints: see Artifact Constraints below.
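The Tag rules above can be sketched in a few lines of Python. This is only an illustration, not Spinnaker's implementation: the helper name tag_triggers_pipeline and the sample pattern are hypothetical, and it is an assumption that the whole tag must match the expression. The field takes a Java regular expression; the simple pattern used here behaves the same way in Python's re module. The rules about updates to existing tags and about older semantic versions are not modelled.

```python
import re


def tag_triggers_pipeline(tag, tag_pattern=""):
    """Hypothetical helper modelling the Tag field rules described above."""
    # Per the description above, the "latest" tag never triggers the pipeline.
    if tag == "latest":
        return False
    # An empty Tag field means any newly pushed tag triggers the pipeline.
    if not tag_pattern:
        return True
    # Assumption: the whole tag must match the expression, not just a part of it.
    return re.fullmatch(tag_pattern, tag) is not None


# Hypothetical pattern: plain MAJOR.MINOR.PATCH tags only.
SEMVER_ONLY = r"\d+\.\d+\.\d+"

for candidate in ("1.0.17", "1.0.17-rc1", "latest"):
    print(candidate, tag_triggers_pipeline(candidate, SEMVER_ONLY))
```

Trying a pattern this way before saving the trigger can help catch an expression that is too strict or too loose.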

Referencing the New Image

When a new image is identified, the Docker Registry trigger will fill the "trigger" sub-map, part of the overall pipeline state:

"trigger": {
  "id": "...",
  "type": "docker",
  "user": "[...]",
  "parameters": {},
  "artifacts": [
    {
      "customKind": false,
      "reference": "docker.com/ovidiu_feodorov/smoke:1.0.17",
      "metadata": {},
      "name": "docker.com/ovidiu_feodorov/smoke",
      "type": "docker/image",
      "version": "1.0.17"
    }
  ],
  "notifications": [],
  "rebake": false,
  "dryRun": false,
  "strategy": false,
  "account": "docker",
  "repository": "ovidiu_feodorov/smoke",
  "tag": "1.0.17",
  "resolvedExpectedArtifacts": [],
  "expectedArtifacts": [],
  "registry": "docker.apple.com",
  "eventId": "e[...]3",
  "enabled": true,
  "runAsUser": "...",
  "organization": "ovidiu_feodorov",
  "preferred": false
}

This data can be used in SpEL expressions elsewhere in the pipeline. For example, the tag can be accessed with the following expression:

- image: "something/something-else:${trigger['tag']}"
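To check what such an expression resolves to, the lookup can be reproduced outside Spinnaker against a copy of the "trigger" sub-map. The sketch below is a rough stand-in, not Spinnaker's SpEL evaluator: resolve_trigger_refs is a hypothetical helper that understands only the simple ${trigger['key']} form, and the trigger map is a trimmed copy of the one shown above.

```python
import re

# Trimmed copy of the "trigger" sub-map shown above.
trigger = {
    "type": "docker",
    "account": "docker",
    "repository": "ovidiu_feodorov/smoke",
    "tag": "1.0.17",
}

# Matches only the simple form ${trigger['key']}; real SpEL supports far more.
_TRIGGER_REF = re.compile(r"\$\{trigger\['([^']+)'\]\}")


def resolve_trigger_refs(text, trigger_map):
    """Hypothetical helper: replace each ${trigger['key']} with trigger_map[key]."""
    return _TRIGGER_REF.sub(lambda match: str(trigger_map[match.group(1)]), text)


image = resolve_trigger_refs("something/something-else:${trigger['tag']}", trigger)
print(image)
```

With the sample map, the image reference ends in the tag that fired the trigger, 1.0.17.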

cron

Executes the pipeline on cron schedule.

git

Executes the pipeline on git push.

GitHub Trigger

This configuration allows GitHub to post push events. TO PROCESS: https://spinnaker.io/docs/guides/tutorials/codelabs/kubernetes-v2-source-to-prod/#allow-github-to-post-push-events

Helm Chart Trigger

Executes the pipeline on a Helm chart update.

Jenkins Trigger

Listens on a Jenkins job.

Pipeline

Listens to another pipeline execution.

Plugin Trigger

Executes the pipeline in response to a plugin event.

Other Triggers

Concourse, Nexus, pub/sub, Travis, Artifactory, Wercker.

Artifact Constraints

This section specifies the artifacts required for the trigger to execute.

⚠️ If anything is specified here, the pipeline will only trigger if these artifacts are present. It is fine to leave this section empty if the trigger should fire for arbitrary artifacts. Only one of the artifacts needs to be present for the trigger to execute.
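The rule above (no constraints means any event fires the trigger, otherwise at least one listed artifact must be present) can be modelled roughly as follows. This is a deliberately simplified sketch: constraints_satisfied is a hypothetical helper, artifacts are compared only by exact type and name, and Spinnaker's actual artifact matching is assumed to be richer than this. The Helm chart constraint is invented for illustration.

```python
def constraints_satisfied(constraints, event_artifacts):
    """Hypothetical helper: True if the trigger may fire for this event."""
    # No constraints configured: arbitrary artifacts may fire the trigger.
    if not constraints:
        return True
    present = {(artifact["type"], artifact["name"]) for artifact in event_artifacts}
    # Only one of the constrained artifacts needs to be present.
    return any((c["type"], c["name"]) in present for c in constraints)


# Artifact taken from the Docker Registry trigger example earlier in this page.
event_artifacts = [
    {"type": "docker/image", "name": "docker.com/ovidiu_feodorov/smoke"},
]

# Hypothetical constraints: the image above, or an invented Helm chart.
constraints = [
    {"type": "docker/image", "name": "docker.com/ovidiu_feodorov/smoke"},
    {"type": "helm/chart", "name": "hypothetical-chart"},
]

print(constraints_satisfied(constraints, event_artifacts))
```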

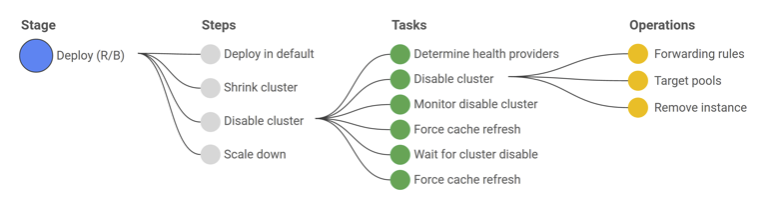

Stage

A stage is a collection of sequential tasks or other stages. The stage describes a higher-level action a pipeline performs either linearly or in parallel. Spinnaker provides a number of standard stages, which range from functions that manipulate infrastructure (deploy, resize, disable) to utility scaffolding functions (manual judgment, wait, run Jenkins job, etc.). Together, these stages provide a runbook for managing a deployment. The pipeline history gives access to details of each deployment operation, and provides an audit log of enforced policies.

Bake (Manifest)

Deploy (Manifest)

Canary Analysis

Check Preconditions

Check for preconditions before continuing.

Custom Webhook

Delete (Manifest)

Disable (Manifest)

Disable a Kubernetes manifest.

Enable (Manifest)

Enable a Kubernetes manifest.

Entity Tag

Applies entity tags to a resource.

Evaluate Variables

Find Artifacts From Execution

Find and bind artifacts from another execution.

Find Artifacts From Resource (Manifest)

Find artifacts from a Kubernetes resource.

Jenkins

This is one of the stages that allow running arbitrary functionality.

Manual Judgment

Patch (Manifest)

Patch a Kubernetes object in place.

Pipeline

Pulumi

Run Pulumi as a RunJob container.

Run Job

This is one of the stages that allow running arbitrary functionality.

Run Job (Manifest)

This is one of the stages that allow running arbitrary functionality.

Save Pipelines

Saves pipelines defined in an artifact.

Scale (Manifest)

Scale a Kubernetes object created from a manifest.

Script

This is one of the stages that allow running arbitrary functionality.

Terraform

Apply a Terraform operation.

Undo Rollout (Manifest)

Wait

Waits a specified period of time.

Webhook

Runs a Webhook job.

Other Stages

AWS CodeBuild, AWS EC2 Deploy (Artifacts), AWS Instance Register for Target Groups, AWS Lambda Delete, AWS Lambda Deployment, AWS Lambda Invoke, AWS Lambda Route, Change Request, Change Request Creation, CloudShell Colony AWS EKS Onboarding, CloudShell Colony AWS Onboarding, CloudShell Colony Space Creation, Code Deploy Safetynet IAC, Colony End Sandbox, Colony Start Sandbox, Concourse, Fortress Job Trigger, Google Cloud Build, Gremlin, Jenkins Trigger for Cookie Auth, Travis, Wercker.

Custom Stage

A custom stage allows running arbitrary functionality.

Stage Context

Context values are similar to pipeline-wide helper properties, except that they are specific to a particular stage.

TO PROCESS: https://spinnaker.io/docs/guides/user/pipeline/expressions/#context-values

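The stage context can be accessed in the JSON representation of the pipeline execution with .stages[index].context. Stages like Run Job (Manifest) have the capability to update the stage context, as shown in Running a Script with Run Job (Manifest).

Also see: Spinnaker Pipeline, Pipeline Context and Helper Properties.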

Stage Output

The stage output can be accessed in the JSON representation of the pipeline execution with .stages[index].outputs. Stages like Run Job (Manifest) have the capability to update the stage output, as shown in Running a Script with Run Job (Manifest).
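As a minimal sketch of the two paths .stages[index].context and .stages[index].outputs, the snippet below reads them from an execution JSON document in Python. The sample document is hypothetical and heavily trimmed: only the "stages", "context" and "outputs" keys come from the paths above, while the stage names and values are invented. The helpers stage_context and stage_outputs are illustrative names too.

```python
import json

# Hypothetical, heavily trimmed execution JSON; values are invented.
execution_json = """
{
  "stages": [
    {
      "name": "Run Job (Manifest)",
      "context": {"account": "my-account"},
      "outputs": {"build_id": "42"}
    },
    {
      "name": "Wait",
      "context": {"waitTime": 30},
      "outputs": {}
    }
  ]
}
"""

execution = json.loads(execution_json)


def stage_context(execution, index):
    """Equivalent of .stages[index].context."""
    return execution["stages"][index]["context"]


def stage_outputs(execution, index):
    """Equivalent of .stages[index].outputs."""
    return execution["stages"][index]["outputs"]


print(stage_context(execution, 0))
print(stage_outputs(execution, 0))
```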

Step

Task

A task is an automated function that a stage performs.

Deployment Strategies

Spinnaker treats cloud-native deployment strategies as first-class constructs, handling the underlying orchestration such as verifying health checks, disabling old server groups and enabling new server groups. Spinnaker supports the blue/green (red/black) strategy, with rolling blue/green and canary strategies in active development. How does this relate to Kubernetes' application management built-in mechanisms?

For more details on specific strategies, see:

Traffic Management Strategies

TO PROCESS: https://spinnaker.io/docs/guides/user/kubernetes-v2/traffic-management/

Managed Delivery

TODO, watch "https://youtu.be/mEgvOfmLnlY" Managed Delivery by Emily Burns, Rob Fletcher

Artifacts

The page addresses GitHub file/directory artifacts, Helm charts, container images, S3 artifacts, etc:

Project

A project has a Name and an Owner E-mail.

It contains a list of applications, clusters and pipelines.

Architecture

Deck

Information about Kubernetes resources can be displayed in the Deck's detail panel.

Gate

The API Gateway. https://github.com/spinnaker/gate.

Provider

Kubernetes Provider

Kubernetes Source to Production

TO PROCESS: https://spinnaker.io/docs/guides/tutorials/codelabs/kubernetes-v2-source-to-prod

Helm Chart Support

Storage

Pipeline SpEL Expressions

Annotations

strategy.spinnaker.io/max-version-history

When set to a non-negative integer, this annotation configures how many versions of a resource to keep around. When more than max-version-history versions of a Kubernetes artifact exist, Spinnaker deletes all older versions. Resources are sorted by the metadata.creationTimestamp Kubernetes property rather than the version number.

Example:

apiVersion: apps/v1
kind: ReplicaSet
metadata:
  name: ...
  annotations:
    strategy.spinnaker.io/max-version-history: '3'
...

If you are trying to restrict how many copies of a ReplicaSet a Deployment is managing, that is configured by spec.revisionHistoryLimit. If instead Spinnaker is deploying ReplicaSets directly without a Deployment, this annotation does the job.
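The pruning rule described for strategy.spinnaker.io/max-version-history (order by metadata.creationTimestamp, keep the newest N, delete the rest) can be sketched as below. This models the description only and is not Spinnaker's code: versions_to_delete is a hypothetical helper, and the resource names and timestamps are invented. The sample deliberately gives the highest version number the oldest timestamp, to show that ordering follows the timestamp rather than the version number.

```python
from datetime import datetime


def _created_at(resource):
    # Kubernetes timestamps look like 2023-05-31T04:22:00Z.
    return datetime.fromisoformat(resource["creationTimestamp"].replace("Z", "+00:00"))


def versions_to_delete(resources, max_version_history):
    """Hypothetical helper: names of the versions beyond the newest N."""
    newest_first = sorted(resources, key=_created_at, reverse=True)
    return [resource["name"] for resource in newest_first[max_version_history:]]


# Invented ReplicaSet versions; smoke-v004 has the oldest creation timestamp.
replica_sets = [
    {"name": "smoke-v001", "creationTimestamp": "2023-05-01T10:00:00Z"},
    {"name": "smoke-v002", "creationTimestamp": "2023-05-02T10:00:00Z"},
    {"name": "smoke-v003", "creationTimestamp": "2023-05-03T10:00:00Z"},
    {"name": "smoke-v004", "creationTimestamp": "2023-04-30T10:00:00Z"},
]

print(versions_to_delete(replica_sets, 3))
```

With a limit of 3, the one version selected for deletion is smoke-v004, despite its higher version number.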

Running Arbitrary Functionality in a Pipeline Stage

Existing stages that may help:

- Jenkins

- Script

- Run Job

- Run Job (Manifest), as applied in Running a Script with Run Job (Manifest)

- Deploy (Manifest) can also be used to run a Kubernetes Job, and optionally a ConfigMap that feeds it with a script, because the Job executes automatically upon deployment. The solution, documented in Running a Script with Deploy (Manifest), has some disadvantages, one being that it does not capture the output to inject it back into the pipeline.

A custom stage can also be used to run arbitrary functionality.