Algorithm Complexity: Difference between revisions

| (144 intermediate revisions by the same user not shown) | |||

| Line 3: | Line 3: | ||

* https://en.wikipedia.org/wiki/Big_O_notation | * https://en.wikipedia.org/wiki/Big_O_notation | ||

* http://infotechgems.blogspot.com/2011/11/java-collections-performance-time.html | * http://infotechgems.blogspot.com/2011/11/java-collections-performance-time.html | ||

* Coursera Class https://www.coursera.org/learn/algorithms-divide-conquer | |||

* https://github.com/jwasham/coding-interview-university#algorithmic-complexity--big-o--asymptotic-analysis | |||

=Internal= | =Internal= | ||

* [[ | * [[Algorithms#Algorithm_Efficiency|Algorithms]] | ||

=Overview= | =Overview= | ||

Algorithm analysis is | Algorithm complexity analysis is an essential subject if the amount of resources, such as time and memory, consumed by an algorithm, is important. For practical applications, and with the exception of the most trivial cases, resource consumption '''is''' important. Algorithms that differ slightly may come with significant differences in processing time, or memory consumption, when the size of the input is large. Algorithm complexity analysis is used to approximate, or bound, the amount of resources consumed by an algorithm while solving a [[Algorithms#Computational_Problem|computational problem]]. In most cases, we are interested in [[#Running_Time|running time]], but memory requirements, network bandwidth and [[#Algorithm_Complexity_Measures|other resources]] can also be estimated. | ||

Algorithm complexity analysis usually involves the following sequence: | |||

# We establish the relevant input and [[#Input_Size|input size]] of the problem. The input size may be multi-dimensional. | |||

# The algorithm is analyzed and its [[#Algorithm_Complexity_Measures|complexity]], or the quantity of consumed resources, such as time and memory, is expressed as a function of the input size and the constant costs of elementary operations of an idealized [[#Random_Access_Machine|random access machine]]. Different techniques exist for [[#Time_Complexity_Analysis_Method|iterative algorithms]] or [[#Recursive_Algoritms_and_Recurrences|recursive algorithms]]. At this stage, situations like [[#Worst-case_Running_Time|worst case]] and [[#Average-case_Running_Time|average case]] are usually taken into consideration. Worst case analysis is common, as it occurs fairly often and its complexity is usually comparable with the average case. | |||

# The resulting functions are approximated using their [[#Order_of_Growth|order of growth]]. If we compare two algorithms, the one with a lower order of growth is usually better for a large input size. This is not necessarily true for small input sizes. | |||

"Big O" is the language and the metric used when discussing algorithm complexity. "Big O" is an informal name for the formalism known as [[#Asymptotic_Notation|asymptotic notation]]. | |||

=Intuition behind Time Complexity= | |||

The intuition behind algorithm complexity analysis can be introduced using the following example: let's assume that we created a computer file, which is currently stored locally, and we want to share it with a friend in a remote location. There are at least two different "algorithms" to do that: send the content over the network as part of a FTP or scp transfer, or copy the file on a large enough drive and ship it with FedEx. The natural [[#Input_Size|input size]] in this case is the size of the file, in bytes. It is interesting to analyze the transfer time as a function of the file size and determine what algorithm is more efficient. | |||

The network transfer is subject to bandwidth limitation. Assuming that we can transfer data with 1 Mbs (1 Megabit per second, which is about 120,000 bytes per second, an arbitrary value to simplify the calculations), 1GB file can be transferred in roughly 2 hours and 15 minutes, and 1 TB file in a thousand times that, which is about 98 days. The transfer time is proportional with the file size. We can describe the time complexity of the network transfer "algorithm" as O(n) - the details of the [[#Asymptotic_Notation|asymptotic notation]] will be explained below. In case of transfer by courier, it will take 24 hours to overnight a drive containing the file, regardless of size of the file stored on the drive. The asymptotic notation for constant time is O(1), which says the transfer time is a constant that does not depend on the input size. | |||

We will argue that for an arbitrarily large input size, drive shipping, assuming that the drive is large enough, has a better time complexity than the file transfer. Even if the constant involved by the shipping "algorithm" is relatively large (24 hours), shipping will be faster than the network transfer for any file of approximately 10 GB size and up. Obviously, if the file has 1KB, shipping is terribly inefficient, and the O(1) algorithm performs far worse than the O(n) algorithm, because of the large built-in constants. However, no matter how big the O(1) constant is, and no matter how slow the linear increase is, the linear algorithm will at some point surpass the constant time algorithm. | |||

=Input Size= | |||

The concept of '''input size''' depends on the problem being addressed. In case of sorting algorithms, the most natural measure of input size is the number of items in the input. For the problem of multiplying two integers, the best measure of input size is the total number of bits needed to represent the input in binary notation. | |||

Input size can be multi-dimensional: In the case of graph algorithms, there are two numbers that describe the input size: the graph's number of [[Graph_Concepts#Vertex|vertices]] and [[Graph_Concepts#Edge|edges]]. If the problem involves two vectors whose size is unrelated, then the input size is also two-dimensional and consists of the lengths of both vectors. | |||

=Algorithm Complexity Measures= | |||

An algorithm's '''complexity''' is expressed as quantity of consumed resources, such as time and memory. The amount of resources is expressed with a function of the [[#Input_Size|input size]] and the cost of elementary operations of an idealized [[#Random-Access_Machine_.28RAM.29|random access machine]]. If we are interested in the behavior of the algorithm for large input sizes, it is sufficient to approximate the function by its [[#Order_of_Growth|order of growth]]. | |||

==Time Complexity== | |||

The <span id='Running_Time'></span>'''running time''', or '''computational time''' of an algorithm on a particular input is usually very well predicted by the number of [[#Random-Access_Machine_.28RAM.29|random-access machine]] primitive operations, or steps, executed. | The <span id='Running_Time'></span>'''running time''', or '''computational time''' of an algorithm on a particular input is usually very well predicted by the number of [[#Random-Access_Machine_.28RAM.29|random-access machine]] primitive operations, or steps, executed. | ||

==Space Complexity== | |||

'''Space complexity''' refers to the algorithm's requirements for memory. | |||

=<span id='Random_Access_Machine'></span>Random-Access Machine (RAM)= | =<span id='Random_Access_Machine'></span>Random-Access Machine (RAM)= | ||

| Line 30: | Line 61: | ||

We start by expressing the algorithm in [[#Random-Access_Machine_.28RAM.29|random-access machine]] instructions. As mentioned above, each type of instruction takes a constant time c<sub>i</sub> to execute, referred to as the '''cost''' of the instruction. | We start by expressing the algorithm in [[#Random-Access_Machine_.28RAM.29|random-access machine]] instructions. As mentioned above, each type of instruction takes a constant time c<sub>i</sub> to execute, referred to as the '''cost''' of the instruction. | ||

The running time of the algorithm is the sum of running times for each statement executed. A statement with a cost c<sub>i</sub> that executes n times will contribute c<sub>i</sub>⋅n to the total running time. When adding various costs, the terms of the same order can be coalesced: c<sub>1</sub>n + c<sub>2</sub>n + ... c<sub>k</sub>n can be expressed as c⋅n. | The running time of the algorithm is the sum of running times for each statement executed. A statement with a cost c<sub>i</sub> that executes n times will contribute c<sub>i</sub>⋅n to the total running time. When adding various costs, the terms of the same order can be coalesced: c<sub>1</sub>n + c<sub>2</sub>n + ... c<sub>k</sub>n can be expressed as c⋅n. More informally, if an algorithm is in the form "do this, and when you are done, do that", the corresponding running times are added. However, if an algorithm is in the form "do this for each time you do that", the corresponding running times are multiplied. | ||

Some statements may execute or not depending on which input of size n is given. From this perspective, an input may be the worst, leading to the longest running time, the best, leading to the shortest running time, or average. Additional complications in calculating the running time arise when we deal with recursive algorithms. Those aspects will be discussed below in the [[Recursive_Algorithms_Complexity#Overview|Recursive Algorithms Complexity]] section. | |||

When we analyze algorithms from a time complexity or other perspective we won't worry unduly about small constant factors or lower order terms. The justification for this guiding principle is based on three arguments: | |||

# It is easier mathematically if we don't have to pin down all constant factors. | |||

# Given the level at which the algorithms are described and analyzed (usually pseudocode, which is usually underspecified), to constant factors are not obvious, because they depend on the programming language, compiler, compiler optimization, machine code and hardware, so it is inappropriate to specify these constant factors. | |||

# We lose very little predictive power if we don't keep track of constant factors and lower order terms. | |||

====Worst-case Running Time==== | ====Worst-case Running Time==== | ||

Time complexity analysis focuses on ''worst-case running time''. The worst-case running time is the longest running time for '''any''' input of size n. The worst-case running time is important because it gives us an upper bound for the running time for any input, and knowing it provides a guarantee that the algorithm cannot do any worse. For some algorithms, the worst case occurs fairly often: for example, searching in a database when the information is not present. Also, the [[#Average-case_Running_Time|average case]] is often roughly as bad as the worst case. As we will see below, the [[#O-notation|O-notation]] provides a way to express asymptotic upper bounds to functions, so it is used when talking about running time for any situation, including worst-case running time. | Time complexity analysis focuses on '''worst-case running time''', which is a '''worst-case analysis''' applied to running time. Under worst case analysis, we made no assumption about where the input comes from, unlike in the [[#Average-case_Running_Time|average-case analysis]], we don't need specific domain knowledge, and it is appropriate for general-purpose algorithms. Doing worst-case analysis is mathematically much more tractable than doing average-case analysis. The worst-case running time is the longest running time for '''any''' input of size n. The worst-case running time is important because it gives us an upper bound for the running time for any input, and knowing it provides a guarantee that the algorithm cannot do any worse. For some algorithms, the worst case occurs fairly often: for example, searching in a database when the information is not present. Also, the [[#Average-case_Running_Time|average case]] is often roughly as bad as the worst case. As we will see below, the [[#O-notation|O-notation]] provides a way to express asymptotic upper bounds to functions, so it is used when talking about running time for any situation, including worst-case running time. | ||

====Best-case Running Time==== | ====Best-case Running Time==== | ||

The ''best-case running time'' for an algorithm is the running time when the input is arranged in the best possible way, so the algorithm has to perform the minimal number of steps to solve the problem. An example is an already sorted array for [[# | The '''best-case running time''' for an algorithm is the running time when the input is arranged in the best possible way, so the algorithm has to perform the minimal number of steps to solve the problem. An example is an already sorted array for [[Insertion_Sort#Overview|insertion sort]]. As we will see below, we use the [[#.CE.A9-notation|Ω-notation]] to describe the best-case running time of an algorithm. | ||

====Average-case Running Time==== | ====Average-case Running Time==== | ||

The ''average-case running time'' is the running time of an algorithm, in average, for a large number of different inputs. The average-case running time can be determined using | The '''average-case running time''', which is an '''average-case analysis''' applied to running time, is the running time of an algorithm, in average, for a large number of different inputs. Average case analysis is the analysis of an algoritm behavior under some assumptions about the relative frequencies of different inputs. For the average case analysis to make sense, you need to have some domain knowledge of the problem. The average-case running time can be determined using [[Randomized_Algorithms#Probabilistic_Analysis|probabilistic analysis]]. | ||

Note that average-case running time is not [[#Amortized_Analysis|amortized running time]]. | |||

====Expected Running Time==== | ====Expected Running Time==== | ||

In general we expect that all inputs of a given size are equally likely, but in practice this may not be the case. In such situations we can use a [[Randomized_Algorithms#Overview|randomized algorithm]] to allow [[Randomized_Algorithms#Probabilistic_Analysis|probabilistic analysis]] and yield an ''expected running time''. | |||

<font color=darkkhaki>More explanations on the difference between average-case running time and expected running time.</font> | |||

====Benchmark Analysis==== | |||

Analysis by use of a pre-specified benchmarks, where one agrees upfront about some set of inputs, which are thought to represent practical or typical inputs for the algorithm. | |||

=<span id=' | =<span id='Order_of_Growth_of_an_Algorithm.27s_Running_Time'></span>Order of Growth= | ||

The result of the [[Algorithm_Complexity#Time_Complexity_Analysis_Method|time complexity analysis method]] described above is a mathematical function of the input size. | The result of the [[Algorithm_Complexity#Time_Complexity_Analysis_Method|time complexity analysis method]] described above is a mathematical function of the input size. | ||

| Line 58: | Line 99: | ||

A very common case, but by all means not the only case, is that the running time can approximated by a polynomial function in n, such as, for example a⋅n<sup>2</sup> + b⋅n + c. In this situation, we can consider only the highest-order term of the formula: a⋅n<sup>2</sup>. We can also ignore the leading term's constant coefficient, since the constant factors are less significant than the other factor of the term in determining computational efficiency for large inputs. | A very common case, but by all means not the only case, is that the running time can approximated by a polynomial function in n, such as, for example a⋅n<sup>2</sup> + b⋅n + c. In this situation, we can consider only the highest-order term of the formula: a⋅n<sup>2</sup>. We can also ignore the leading term's constant coefficient, since the constant factors are less significant than the other factor of the term in determining computational efficiency for large inputs. | ||

The '''rate of growth''' or the '''order of growth''' of the algorithm's running time is the highest-order term, without coefficient. In this case of our example, the rate of growth is n<sup>2</sup>. | {{Note|The '''rate of growth''' or the '''order of growth''', or the '''rate of increase''' of the algorithm's running time is the highest-order term, without coefficient.}} | ||

In this case of our example, the rate of growth is n<sup>2</sup>. | |||

<span id='Comparing_Running_Time'></span>One algorithm is considered to be more efficient than another if its [[#Worst-case_Running_Time|worst-case running time]] has a lower [[#Order_of_Growth_of_an_Algorithm.27s_Running_Time|order of growth]], so that is why when we are analyzing the function describing the running time, we are usually interested in its order of growth. | <span id='Comparing_Running_Time'></span>One algorithm is considered to be more efficient than another if its [[#Worst-case_Running_Time|worst-case running time]] has a lower [[#Order_of_Growth_of_an_Algorithm.27s_Running_Time|order of growth]], so that is why when we are analyzing the function describing the running time, we are usually interested in its order of growth. | ||

Due to constant factors and lower-order terms, an algorithm whose running time has a higher order of growth might take less time for small inputs than an algorithm whose running time has a lower order of growth. However, for large enough inputs, the algorithm with lower order of growth will aways run faster. For example, comparing running time for two algorithms of which one is c<sub>1</sub>n<sup>2</sup> and the other is c<sub>2</sub> n lg n, even if c<sub>2</sub> > c<sub>1</sub>, no matter how much smaller c<sub>1</sub> is than c<sub>2</sub>, there will always be a crossover point beyond which the c<sub>2</sub>n lg n algorithm is faster. | Due to constant factors and lower-order terms, an algorithm whose running time has a higher order of growth might take less time for small inputs than an algorithm whose running time has a lower order of growth. However, for large enough inputs, the algorithm with lower order of growth will aways run faster. For example, comparing running time for two algorithms of which one is c<sub>1</sub>n<sup>2</sup> and the other is c<sub>2</sub> n lg n, even if c<sub>2</sub> > c<sub>1</sub>, no matter how much smaller c<sub>1</sub> is than c<sub>2</sub>, there will always be a crossover point beyond which the c<sub>2</sub> n lg n algorithm is faster. | ||

==Determining the Order of Growth of a Generic Function== | ==Determining the Order of Growth of a Generic Function== | ||

The asymptotic behavior of a function is the same as the asymptotic behavior of its order of growth, which can be obtained from the function by: | |||

* Coalescing the terms of the same order: the function c<sub>1</sub>n + c<sub>2</sub>n + ... c<sub>k</sub>n can be collapsed to c⋅n. Note that if the input size is multi-dimensional, the terms corresponding to independent input sizes cannot be collapsed, c<sub>1</sub>n + c<sub>2</sub>m cannot be approximated with c⋅n (or c⋅m) if m and n are independent. | |||

* Dropping smaller order (non-dominant) terms: the function c<sub>1</sub>n<sup>2</sup> + c<sub>2</sub>n can be collapsed to c<sub>1</sub>n<sup>2</sup>. Note that if the input size is multi-dimensional, the terms corresponding to independent input sizes cannot dropped, even if their order is lower. c<sub>1</sub>n<sup>2</sup> + c<sub>2</sub>m cannot be approximate with c<sub>1</sub>n<sup>2</sup> if m and n are independent. | |||

* Dropping the constant of the highest order term: the function c⋅n can be collapsed to n. | |||

Asymptotic | =Asymptotic Analysis= | ||

Asymptotic analysis provides the basic vocabulary for discussing the design and analysis of algorithms. This vocabulary is so ubiquitous because it identifies a "sweet spot" for discussing the performance of algorithms. It is a "sweet spot" because on one hand it is coarse enough to suppress architecture/language/compiler-dependent details. On the other hand is sharp enough to be useful to make useful prediction between high level algorithmic approaches, especially for large inputs. | |||

The main point of asymptotic analysis is to '''suppress constant factors''' and '''lower-oder terms'''. | |||

Lower order terms become increasingly irrelevant for larger inputs, which are the interesting ones. | |||

Constant factors are highly dependent on the details of the environments, and we want to ignore that for high-level algorithm analysis. Constant factors still matter for a particular algorithmic solution - you may want to work hard to improve constant factors. | |||

==Asymptotic Efficiency== | |||

When we look at input sizes large enough to make only the [[#Order_of_Growth_of_an_Algorithm.27s_Running_Time|order of growth]] of an algorithm relevant, we say that we are analyzing the '''asymptotic efficiency''' of the algorithm. Asymptotic efficiency is concerned with how the running time of the algorithm increases with the size input ''in the limit'', as the size of the input increases without bound. Usually, an algorithm that is asymptotically more efficient is the best choice, for all but very small inputs. | |||

In other words, when we analyze algorithms, we will focus on the case of large input sizes. These are the type of problems that are interesting. | |||

==Asymptotic Notation== | |||

To make easier to reason about [[#Asymptotic_Efficiency|asymptotic efficiency]] of various function expressing algorithms' running times, we can use the '''asymptotic notation'''. Asymptotic notation essentially designates classes, or sets of functions that behave asymptotically in the same way as the function used in the notation. Adding two function with the same asymptotic notation results in a function with the same asymptotic notation, and this simplifies reasoning when we estimate the algorithm's efficiency. While we mostly use the notation to describe algorithms running times, it actually applies to functions whose domain is the set of natural numbers. We can use asymptotic notation to function that describe space complexity, or to functions that have nothing to do with algorithms. | |||

The notation abstracts away some details of the actual function: the lower-order terms of an asymptotically positive function can be ignored when determining the asymptotical bounds, because they are insignificant for large n. The terms of the same order can be coalesced. The coefficient (constant) of the highest-order term can be dropped, since it only changes the constants c<sub>1</sub> and c<sub>2</sub> in the definitions presented below by just a constant factor. All these can be done while the essence of the function - its asymptotic efficiency - is preserved. | |||

Technically, a function f(n) belongs to a set such as O(g(n)) or Θ(g(n)), so it should be written as f(n) ∈ O(g(n)), but we conventionally write "f(n) = O(g(n))". For more details, see [[#Using_Asymptotic_Notation_in_Equations_and_Inequalities|Using Asymptotic Notation in Equations and Inequalities]]. | |||

<span id='Constant_Function'></span>Any constant function can be expressed as Θ(1). This notation is a minor abuse, because it does not indicate what variable is tending to infinity, but is accepted by convention. | |||

==O-notation== | ===O-notation=== | ||

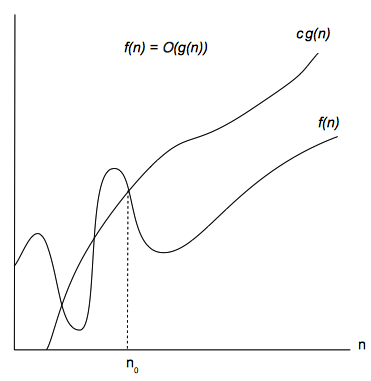

Unlike the Θ notation, the O notation is used to express an '''asymptotic upper bound'''. For a given function g(n), we denote by O(g(n)) the set of functions f(n) as follows: | Unlike the Θ notation, the O notation is used to express an '''asymptotic upper bound'''. For a given function g(n), we denote by O(g(n)) the set of functions f(n) as follows: | ||

<blockquote style="background-color: Cornsilk; border: solid thin Gray;"> | |||

<blockquote style="background-color: | |||

:<br>O(g(n)) = {all f(n) : there exist a positive constant c and a integer n<sub>0</sub> such that 0 ≤ f(n) ≤ c⋅g(n) for all n ≥ n<sub>0</sub>}<br><br> | :<br>O(g(n)) = {all f(n) : there exist a positive constant c and a integer n<sub>0</sub> such that 0 ≤ f(n) ≤ c⋅g(n) for all n ≥ n<sub>0</sub>}<br><br> | ||

</blockquote> | </blockquote> | ||

In English: a function is O(g(n)) if eventually, for all sufficiently large values of n, all given function values are smaller than a constant multiple of g(n). | |||

For all n ≥ n<sub>0</sub>, function g(n) provides an upper bound to function f(n) within a constant factor. | For all n ≥ n<sub>0</sub>, function g(n) provides an upper bound to function f(n) within a constant factor. | ||

| Line 118: | Line 157: | ||

For all values n at and to the right of n<sub>0</sub>, the value of the function f(n) is on or below c⋅g(n). | For all values n at and to the right of n<sub>0</sub>, the value of the function f(n) is on or below c⋅g(n). | ||

==Ω-notation== | Note that the industry uses the "O-notation" or "Big O" notion with a semantics similar to [[#.CE.98-notation|Θ-notation]]. For more details see [[#Big_O_in_Industry|Big O in Industry]]. | ||

====Practical Tip==== | |||

To formally prove that a certain function is O of other function, use the formal definition and find two constants - the positive constant c and the natural number n0 for which the inequality works. | |||

To formally prove that a certain function is NOT O of other function, use contradiction. | |||

===Θ-notation=== | |||

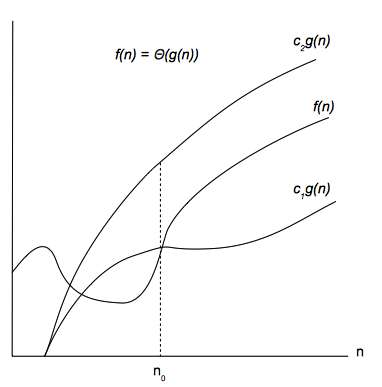

For a given function g(n), we denote by Θ(g(n)) the set of functions f(n) as follows: | |||

<blockquote style="background-color: Cornsilk; border: solid thin Gray;"> | |||

:<br>Θ(g(n)) = {all f(n) : there exist positive constants c<sub>1</sub>, c<sub>2</sub> and a integer n<sub>0</sub> such that 0 ≤ c<sub>1</sub>g(n) ≤ f(n) ≤ c<sub>2</sub>g(n) for all n ≥ n<sub>0</sub>}<br><br> | |||

</blockquote> | |||

A function f(n) belongs to the set Θ(g(n)) if there exist positive constants c<sub>1</sub> and c<sub>2</sub> so f(n) is bounded by c<sub>1</sub>g(n) and c<sub>2</sub>g(n) for sufficiently large n. In other words, for all n ≥ n<sub>0</sub>, function f(n) is equal to g(n) within a constant factor. We also say that g(n) is an '''asymptotically tight bound''' for f(n). | |||

Θ-notation is a stronger notion that [[#O-notation|O-notation]] and [[#.CE.A9-notation|Ω-notation]], described below. That means f(n) = Θ(g(n)) implies f(n) = O(g(n)) and f(n) = Ω(g(n)). | |||

::[[Image:Theta_Notation.png]] | |||

For all values of n at and to the right of n<sub>0</sub>, the value of f(n) lies at or above c<sub>1</sub>g(n) and at or below c<sub>2</sub>g(n). | |||

===Ω-notation=== | |||

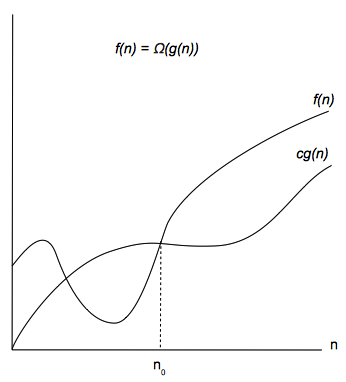

The Ω notation is used to express an '''asymptotic lower bound'''. For a given function g(n), we denote by Ω(g(n)) the set of functions f(n) as follows: | The Ω notation is used to express an '''asymptotic lower bound'''. For a given function g(n), we denote by Ω(g(n)) the set of functions f(n) as follows: | ||

<blockquote style="background-color: | <blockquote style="background-color: Cornsilk; border: solid thin Gray;"> | ||

:<br>Ω(g(n)) = {all f(n) : there exist a positive constant c and a integer n<sub>0</sub> such that 0 ≤ c⋅g(n) ≤ f(n) for all n ≥ n<sub>0</sub>}<br><br> | :<br>Ω(g(n)) = {all f(n) : there exist a positive constant c and a integer n<sub>0</sub> such that 0 ≤ c⋅g(n) ≤ f(n) for all n ≥ n<sub>0</sub>}<br><br> | ||

</blockquote> | </blockquote> | ||

| Line 131: | Line 193: | ||

For all values n at and to the right of n0, the value of the function f(n) is on or above c⋅g(n). | For all values n at and to the right of n0, the value of the function f(n) is on or above c⋅g(n). | ||

==Using Asymptotic Notation in Equations and Inequalities== | ===Using Asymptotic Notation in Equations and Inequalities=== | ||

Technically, a function f(n) '''belongs''' to a set such as O(g(n)), Θ(g(n)) or Ω(g(n)), so it should be written as f(n) ∈ O(g(n)), but we conventionally write "f(n) = O(g(n))". | Technically, a function f(n) '''belongs''' to a set such as O(g(n)), Θ(g(n)) or Ω(g(n)), so it should be written as f(n) ∈ O(g(n)), but we conventionally write "f(n) = O(g(n))". | ||

| Line 143: | Line 205: | ||

* f(n) = Θ(g(n)) is like a = b | * f(n) = Θ(g(n)) is like a = b | ||

* f(n) = Ω(g(n)) is like a ≥ b | * f(n) = Ω(g(n)) is like a ≥ b | ||

===Little o Notation=== | |||

While for big O the inequalities are not strict, for little o the same inequalities are strict. | |||

= | ===Big O in Industry=== | ||

In industry, people seem to have merged Θ and O together. Industry's meaning of big O is closer to what the asymptotic notation formalism defines as [[#.CE.98-notation|Θ-notation]]: the tightest description of the complexity. | |||

Empiric method: | |||

* Different steps get added. | |||

* Drop constants. | |||

* Different inputs introduce different variables in the O() notation. | |||

* Drop non-dominant term. | |||

== | =<span id='Recursive_Algoritms_and_Recurrences'><span id='Recurrence'></span><span id='Recurrences'><span id='Solving_Recurrences'></span><span id='Substitution_Method'></span><span id='Recursion-Tree_Method'></span><span id='Recursion_Tree'></span><span id='The_Master_Theorem'></span><span id='Master_Theorem'></span><span id='Analyzing_Divide-and-Conquer_Algorithms'>Recursive Algorithms Complexity= | ||

{{Internal|Recursive Algorithms Complexity|Recursive Algorithms Complexity}} | |||

=Amortized Analysis= | |||

<font color=darkkhaki>Integrate: https://www.youtube.com/watch?v=B3SpQZaAZP4</font> | |||

Amortized analysis is NOT [[#Average-case_Running_Time|average-case running time]] analysis. | |||

=<span id='Examples_of_Time_Complexity_Analyses'></span>Examples of Time Complexity Analysis= | =<span id='Examples_of_Time_Complexity_Analyses'></span>Examples of Time Complexity Analysis= | ||

| Line 186: | Line 231: | ||

* <span id='Analysis_of_Insertion_Sort'></span>[[Insertion Sort#Overview|Insertion sort]] | * <span id='Analysis_of_Insertion_Sort'></span>[[Insertion Sort#Overview|Insertion sort]] | ||

* <span id='Analysis_of_Merge_Sort'></span>[[Merge_Sort#Time_Complexity_Analysis|Merge sort]] | * <span id='Analysis_of_Merge_Sort'></span>[[Merge_Sort#Time_Complexity_Analysis|Merge sort]] | ||

=Fast Algorithm Definition= | |||

A fast algorithm is an algorithm whose worst-case running time grows slowly with the input size. | |||

Latest revision as of 19:46, 9 November 2021

External

- https://en.wikipedia.org/wiki/Big_O_notation

- http://infotechgems.blogspot.com/2011/11/java-collections-performance-time.html

- Coursera Class https://www.coursera.org/learn/algorithms-divide-conquer

- https://github.com/jwasham/coding-interview-university#algorithmic-complexity--big-o--asymptotic-analysis

Internal

Overview

Algorithm complexity analysis is an essential subject if the amount of resources, such as time and memory, consumed by an algorithm, is important. For practical applications, and with the exception of the most trivial cases, resource consumption is important. Algorithms that differ slightly may come with significant differences in processing time, or memory consumption, when the size of the input is large. Algorithm complexity analysis is used to approximate, or bound, the amount of resources consumed by an algorithm while solving a computational problem. In most cases, we are interested in running time, but memory requirements, network bandwidth and other resources can also be estimated.

Algorithm complexity analysis usually involves the following sequence:

- We establish the relevant input and input size of the problem. The input size may be multi-dimensional.

- The algorithm is analyzed and its complexity, or the quantity of consumed resources, such as time and memory, is expressed as a function of the input size and the constant costs of elementary operations of an idealized random access machine. Different techniques exist for iterative algorithms or recursive algorithms. At this stage, situations like worst case and average case are usually taken into consideration. Worst case analysis is common, as it occurs fairly often and its complexity is usually comparable with the average case.

- The resulting functions are approximated using their order of growth. If we compare two algorithms, the one with a lower order of growth is usually better for a large input size. This is not necessarily true for small input sizes.

"Big O" is the language and the metric used when discussing algorithm complexity. "Big O" is an informal name for the formalism known as asymptotic notation.

Intuition behind Time Complexity

The intuition behind algorithm complexity analysis can be introduced using the following example: let's assume that we created a computer file, which is currently stored locally, and we want to share it with a friend in a remote location. There are at least two different "algorithms" to do that: send the content over the network as part of a FTP or scp transfer, or copy the file on a large enough drive and ship it with FedEx. The natural input size in this case is the size of the file, in bytes. It is interesting to analyze the transfer time as a function of the file size and determine what algorithm is more efficient.

The network transfer is subject to bandwidth limitation. Assuming that we can transfer data with 1 Mbs (1 Megabit per second, which is about 120,000 bytes per second, an arbitrary value to simplify the calculations), 1GB file can be transferred in roughly 2 hours and 15 minutes, and 1 TB file in a thousand times that, which is about 98 days. The transfer time is proportional with the file size. We can describe the time complexity of the network transfer "algorithm" as O(n) - the details of the asymptotic notation will be explained below. In case of transfer by courier, it will take 24 hours to overnight a drive containing the file, regardless of size of the file stored on the drive. The asymptotic notation for constant time is O(1), which says the transfer time is a constant that does not depend on the input size.

We will argue that for an arbitrarily large input size, drive shipping, assuming that the drive is large enough, has a better time complexity than the file transfer. Even if the constant involved by the shipping "algorithm" is relatively large (24 hours), shipping will be faster than the network transfer for any file of approximately 10 GB size and up. Obviously, if the file has 1KB, shipping is terribly inefficient, and the O(1) algorithm performs far worse than the O(n) algorithm, because of the large built-in constants. However, no matter how big the O(1) constant is, and no matter how slow the linear increase is, the linear algorithm will at some point surpass the constant time algorithm.

Input Size

The concept of input size depends on the problem being addressed. In case of sorting algorithms, the most natural measure of input size is the number of items in the input. For the problem of multiplying two integers, the best measure of input size is the total number of bits needed to represent the input in binary notation.

Input size can be multi-dimensional: In the case of graph algorithms, there are two numbers that describe the input size: the graph's number of vertices and edges. If the problem involves two vectors whose size is unrelated, then the input size is also two-dimensional and consists of the lengths of both vectors.

Algorithm Complexity Measures

An algorithm's complexity is expressed as quantity of consumed resources, such as time and memory. The amount of resources is expressed with a function of the input size and the cost of elementary operations of an idealized random access machine. If we are interested in the behavior of the algorithm for large input sizes, it is sufficient to approximate the function by its order of growth.

Time Complexity

The running time, or computational time of an algorithm on a particular input is usually very well predicted by the number of random-access machine primitive operations, or steps, executed.

Space Complexity

Space complexity refers to the algorithm's requirements for memory.

Random-Access Machine (RAM)

An algorithm is usually analyzed in the context of a random-access machine (RAM), which is a theoretical model for the computing resources typically used by algorithms. A random-access machine has only one processor. In this model, instructions are executed one after another, with no concurrent operations, though extensions of the model exist for the case where we need to analyze multithreaded algorithms. Algorithms are implemented as computer programs for such a machine. The random-access machine mimics how real computers are designed, so it has instructions commonly found in real computers: arithmetic (add, subtract, multiply, divide, remainder, floor, ceiling), data movement (load, store, copy) and control (conditional branch, subroutine call and return). It is important to emphasize that each type of instruction takes a constant amount of time - this assumption is essential in algorithm analysis. This time is marked as ci when analyzing a specific algorithm.

In accordance with how real computers are implemented, operations as multiplication with 2k are also considered constant-time, because they are implemented by shifting left a number representation for k times, as long as k is no more than the number of bits in a computer word. Also, a statement calling a subroutine takes a constant amount of time, although the execution of the subroutine will usually not.

The data types in the RAM model are integer and floating point. The model assumes a limit on the size of each word of data: if the word size could grow arbitrarily, we could store large amounts of data in one word and operate on it in constant time, which is an unrealistic scenario.

A random-access machine does not attempt to model a memory hierarchy (caches or virtual memory). In some cases, memory hierarchy's effect is significant, but usually, RAM-model analyses are good predictors of performance on actual machines.

Time Complexity Analysis Method

We start by expressing the algorithm in random-access machine instructions. As mentioned above, each type of instruction takes a constant time ci to execute, referred to as the cost of the instruction.

The running time of the algorithm is the sum of running times for each statement executed. A statement with a cost ci that executes n times will contribute ci⋅n to the total running time. When adding various costs, the terms of the same order can be coalesced: c1n + c2n + ... ckn can be expressed as c⋅n. More informally, if an algorithm is in the form "do this, and when you are done, do that", the corresponding running times are added. However, if an algorithm is in the form "do this for each time you do that", the corresponding running times are multiplied.

Some statements may execute or not depending on which input of size n is given. From this perspective, an input may be the worst, leading to the longest running time, the best, leading to the shortest running time, or average. Additional complications in calculating the running time arise when we deal with recursive algorithms. Those aspects will be discussed below in the Recursive Algorithms Complexity section.

When we analyze algorithms from a time complexity or other perspective we won't worry unduly about small constant factors or lower order terms. The justification for this guiding principle is based on three arguments:

- It is easier mathematically if we don't have to pin down all constant factors.

- Given the level at which the algorithms are described and analyzed (usually pseudocode, which is usually underspecified), to constant factors are not obvious, because they depend on the programming language, compiler, compiler optimization, machine code and hardware, so it is inappropriate to specify these constant factors.

- We lose very little predictive power if we don't keep track of constant factors and lower order terms.

Worst-case Running Time

Time complexity analysis focuses on worst-case running time, which is a worst-case analysis applied to running time. Under worst case analysis, we made no assumption about where the input comes from, unlike in the average-case analysis, we don't need specific domain knowledge, and it is appropriate for general-purpose algorithms. Doing worst-case analysis is mathematically much more tractable than doing average-case analysis. The worst-case running time is the longest running time for any input of size n. The worst-case running time is important because it gives us an upper bound for the running time for any input, and knowing it provides a guarantee that the algorithm cannot do any worse. For some algorithms, the worst case occurs fairly often: for example, searching in a database when the information is not present. Also, the average case is often roughly as bad as the worst case. As we will see below, the O-notation provides a way to express asymptotic upper bounds to functions, so it is used when talking about running time for any situation, including worst-case running time.

Best-case Running Time

The best-case running time for an algorithm is the running time when the input is arranged in the best possible way, so the algorithm has to perform the minimal number of steps to solve the problem. An example is an already sorted array for insertion sort. As we will see below, we use the Ω-notation to describe the best-case running time of an algorithm.

Average-case Running Time

The average-case running time, which is an average-case analysis applied to running time, is the running time of an algorithm, in average, for a large number of different inputs. Average case analysis is the analysis of an algoritm behavior under some assumptions about the relative frequencies of different inputs. For the average case analysis to make sense, you need to have some domain knowledge of the problem. The average-case running time can be determined using probabilistic analysis.

Note that average-case running time is not amortized running time.

Expected Running Time

In general we expect that all inputs of a given size are equally likely, but in practice this may not be the case. In such situations we can use a randomized algorithm to allow probabilistic analysis and yield an expected running time.

More explanations on the difference between average-case running time and expected running time.

Benchmark Analysis

Analysis by use of a pre-specified benchmarks, where one agrees upfront about some set of inputs, which are thought to represent practical or typical inputs for the algorithm.

Order of Growth

The result of the time complexity analysis method described above is a mathematical function of the input size.

A very common case, but by all means not the only case, is that the running time can approximated by a polynomial function in n, such as, for example a⋅n2 + b⋅n + c. In this situation, we can consider only the highest-order term of the formula: a⋅n2. We can also ignore the leading term's constant coefficient, since the constant factors are less significant than the other factor of the term in determining computational efficiency for large inputs.

The rate of growth or the order of growth, or the rate of increase of the algorithm's running time is the highest-order term, without coefficient.

In this case of our example, the rate of growth is n2.

One algorithm is considered to be more efficient than another if its worst-case running time has a lower order of growth, so that is why when we are analyzing the function describing the running time, we are usually interested in its order of growth.

Due to constant factors and lower-order terms, an algorithm whose running time has a higher order of growth might take less time for small inputs than an algorithm whose running time has a lower order of growth. However, for large enough inputs, the algorithm with lower order of growth will aways run faster. For example, comparing running time for two algorithms of which one is c1n2 and the other is c2 n lg n, even if c2 > c1, no matter how much smaller c1 is than c2, there will always be a crossover point beyond which the c2 n lg n algorithm is faster.

Determining the Order of Growth of a Generic Function

The asymptotic behavior of a function is the same as the asymptotic behavior of its order of growth, which can be obtained from the function by:

- Coalescing the terms of the same order: the function c1n + c2n + ... ckn can be collapsed to c⋅n. Note that if the input size is multi-dimensional, the terms corresponding to independent input sizes cannot be collapsed, c1n + c2m cannot be approximated with c⋅n (or c⋅m) if m and n are independent.

- Dropping smaller order (non-dominant) terms: the function c1n2 + c2n can be collapsed to c1n2. Note that if the input size is multi-dimensional, the terms corresponding to independent input sizes cannot dropped, even if their order is lower. c1n2 + c2m cannot be approximate with c1n2 if m and n are independent.

- Dropping the constant of the highest order term: the function c⋅n can be collapsed to n.

Asymptotic Analysis

Asymptotic analysis provides the basic vocabulary for discussing the design and analysis of algorithms. This vocabulary is so ubiquitous because it identifies a "sweet spot" for discussing the performance of algorithms. It is a "sweet spot" because on one hand it is coarse enough to suppress architecture/language/compiler-dependent details. On the other hand is sharp enough to be useful to make useful prediction between high level algorithmic approaches, especially for large inputs.

The main point of asymptotic analysis is to suppress constant factors and lower-oder terms.

Lower order terms become increasingly irrelevant for larger inputs, which are the interesting ones.

Constant factors are highly dependent on the details of the environments, and we want to ignore that for high-level algorithm analysis. Constant factors still matter for a particular algorithmic solution - you may want to work hard to improve constant factors.

Asymptotic Efficiency

When we look at input sizes large enough to make only the order of growth of an algorithm relevant, we say that we are analyzing the asymptotic efficiency of the algorithm. Asymptotic efficiency is concerned with how the running time of the algorithm increases with the size input in the limit, as the size of the input increases without bound. Usually, an algorithm that is asymptotically more efficient is the best choice, for all but very small inputs.

In other words, when we analyze algorithms, we will focus on the case of large input sizes. These are the type of problems that are interesting.

Asymptotic Notation

To make easier to reason about asymptotic efficiency of various function expressing algorithms' running times, we can use the asymptotic notation. Asymptotic notation essentially designates classes, or sets of functions that behave asymptotically in the same way as the function used in the notation. Adding two function with the same asymptotic notation results in a function with the same asymptotic notation, and this simplifies reasoning when we estimate the algorithm's efficiency. While we mostly use the notation to describe algorithms running times, it actually applies to functions whose domain is the set of natural numbers. We can use asymptotic notation to function that describe space complexity, or to functions that have nothing to do with algorithms.

The notation abstracts away some details of the actual function: the lower-order terms of an asymptotically positive function can be ignored when determining the asymptotical bounds, because they are insignificant for large n. The terms of the same order can be coalesced. The coefficient (constant) of the highest-order term can be dropped, since it only changes the constants c1 and c2 in the definitions presented below by just a constant factor. All these can be done while the essence of the function - its asymptotic efficiency - is preserved.

Technically, a function f(n) belongs to a set such as O(g(n)) or Θ(g(n)), so it should be written as f(n) ∈ O(g(n)), but we conventionally write "f(n) = O(g(n))". For more details, see Using Asymptotic Notation in Equations and Inequalities.

Any constant function can be expressed as Θ(1). This notation is a minor abuse, because it does not indicate what variable is tending to infinity, but is accepted by convention.

O-notation

Unlike the Θ notation, the O notation is used to express an asymptotic upper bound. For a given function g(n), we denote by O(g(n)) the set of functions f(n) as follows:

O(g(n)) = {all f(n) : there exist a positive constant c and a integer n0 such that 0 ≤ f(n) ≤ c⋅g(n) for all n ≥ n0}

In English: a function is O(g(n)) if eventually, for all sufficiently large values of n, all given function values are smaller than a constant multiple of g(n).

For all n ≥ n0, function g(n) provides an upper bound to function f(n) within a constant factor.

Since O-notation provides an upper bound, if we use it to describe worst-case running time, then it characterizes the running time for any input. Worst-case running time is the running time we're most interested in, as argued above, and that is the reason the O-notation is most commonly used.

For all values n at and to the right of n0, the value of the function f(n) is on or below c⋅g(n).

Note that the industry uses the "O-notation" or "Big O" notion with a semantics similar to Θ-notation. For more details see Big O in Industry.

Practical Tip

To formally prove that a certain function is O of other function, use the formal definition and find two constants - the positive constant c and the natural number n0 for which the inequality works.

To formally prove that a certain function is NOT O of other function, use contradiction.

Θ-notation

For a given function g(n), we denote by Θ(g(n)) the set of functions f(n) as follows:

Θ(g(n)) = {all f(n) : there exist positive constants c1, c2 and a integer n0 such that 0 ≤ c1g(n) ≤ f(n) ≤ c2g(n) for all n ≥ n0}

A function f(n) belongs to the set Θ(g(n)) if there exist positive constants c1 and c2 so f(n) is bounded by c1g(n) and c2g(n) for sufficiently large n. In other words, for all n ≥ n0, function f(n) is equal to g(n) within a constant factor. We also say that g(n) is an asymptotically tight bound for f(n).

Θ-notation is a stronger notion that O-notation and Ω-notation, described below. That means f(n) = Θ(g(n)) implies f(n) = O(g(n)) and f(n) = Ω(g(n)).

For all values of n at and to the right of n0, the value of f(n) lies at or above c1g(n) and at or below c2g(n).

Ω-notation

The Ω notation is used to express an asymptotic lower bound. For a given function g(n), we denote by Ω(g(n)) the set of functions f(n) as follows:

Ω(g(n)) = {all f(n) : there exist a positive constant c and a integer n0 such that 0 ≤ c⋅g(n) ≤ f(n) for all n ≥ n0}

In other words, for all n ≥ n0, function g(n) provides a lower bound to function f(n) within a constant factor. Informally, we say that under any circumstance, the algorithm cannot do better that. The Ω is used to describe the best-case running time of an algorithm.

For all values n at and to the right of n0, the value of the function f(n) is on or above c⋅g(n).

Using Asymptotic Notation in Equations and Inequalities

Technically, a function f(n) belongs to a set such as O(g(n)), Θ(g(n)) or Ω(g(n)), so it should be written as f(n) ∈ O(g(n)), but we conventionally write "f(n) = O(g(n))".

When the asymptotic notation stands alone on the right-hand side of an equation, as in the above example, or inequality, the equal sign means set membership. Example: f(n) = O(n2) means f(n) ∈ O(n2).

When the asymptotic notation appears in a formula, it is a stand-in for some anonymous function. The main reason to use the notation this way is to eliminate inessential details in an expression.

Because transitivity, reflexivity and symmetry apply to asymptotic notation, we can draw an analogy between the asymptotic comparison of two functions f() and g()( and the comparison of two real numbers:

- f(n) = O(g(n)) is like a ≤ b

- f(n) = Θ(g(n)) is like a = b

- f(n) = Ω(g(n)) is like a ≥ b

Little o Notation

While for big O the inequalities are not strict, for little o the same inequalities are strict.

Big O in Industry

In industry, people seem to have merged Θ and O together. Industry's meaning of big O is closer to what the asymptotic notation formalism defines as Θ-notation: the tightest description of the complexity.

Empiric method:

- Different steps get added.

- Drop constants.

- Different inputs introduce different variables in the O() notation.

- Drop non-dominant term.

Recursive Algorithms Complexity

Amortized Analysis

Integrate: https://www.youtube.com/watch?v=B3SpQZaAZP4

Amortized analysis is NOT average-case running time analysis.

Examples of Time Complexity Analysis

Fast Algorithm Definition

A fast algorithm is an algorithm whose worst-case running time grows slowly with the input size.