AWS CodePipeline Concepts: Difference between revisions


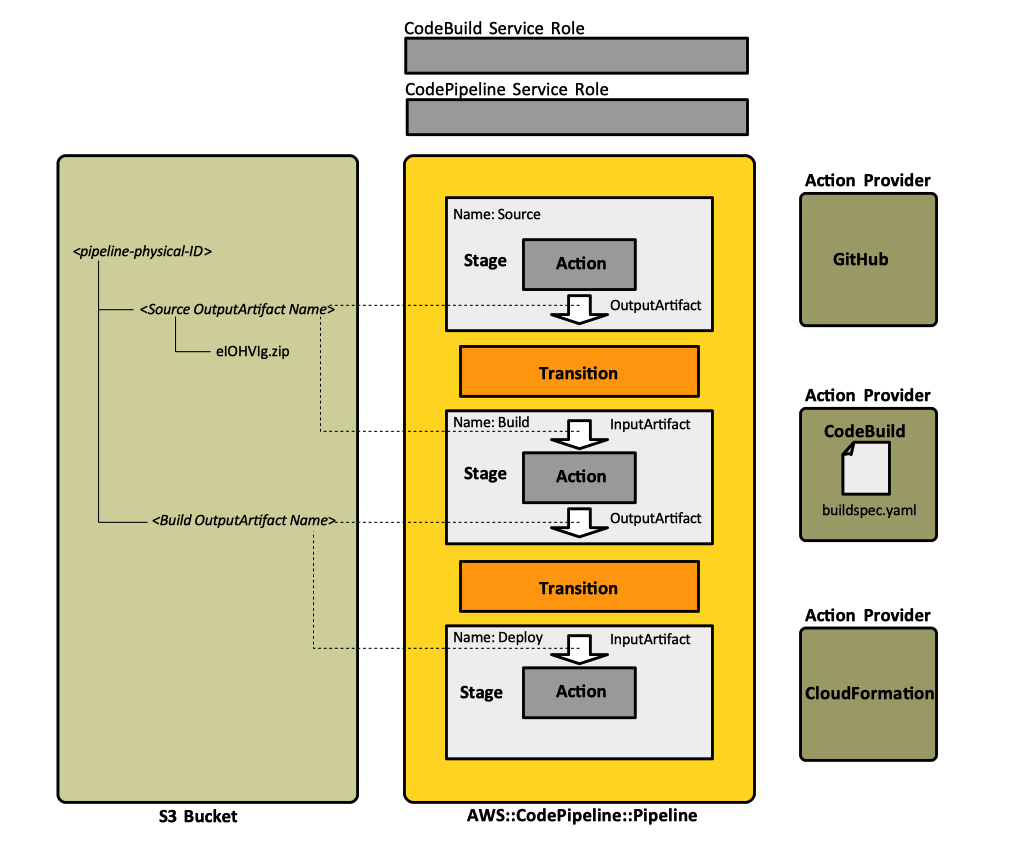

As implemented in AWS, the pipeline consists of a set of sequential [[#Stage|stages]], each stage containing one or more [[#Action|actions]]. A specific stage is always in a fixed position relative to other stages, and only one stage runs at a time. However, actions within a stage can be executed sequentially, according to their [[#Run_Order|run order]], or in parallel. Stages and actions process artifacts, which "advance" along the pipeline.

A pipeline starts automatically when a change is made in the source location, as defined in a source action in a pipeline. The pipeline can also be started manually. A rule can be configured in [[Amazon CloudWatch]] to automatically start a pipeline when a specific event occurs. After a pipeline starts, the revision runs through every stage and action in the pipeline.

A pipeline can be created with the following CloudFormation sequence:

The pipeline requires an artifact store, which provides the storage for transient and final artifacts that are processed by the various stages and actions. In most cases, the storage is provided by an Amazon S3 bucket. "Location" specifies the name of the bucket. When the pipeline is initialized, the [[#CodePipeline_as_AWS_Service|codepipeline service]] creates a directory associated with the pipeline. The directory will have the same name as the pipeline. As the pipeline operates, sub-directories corresponding to the various input and output artifacts declared by actions will also be created.

<font color=darkgray>Assuming that "BuildBucket" is the resource name of the artifact store S3 bucket, then the name of the pipeline's build folder can be dereferenced as:

 !Sub '${BuildBucket}/${AWS::StackName}'

</font>

When the Amazon Console is used to create the first pipeline, an S3 bucket is created in the same region as the pipeline, to be used to store items for all pipelines in that region associated with the account.

 Actions:
   - ...

=<span id='Stage_Action'></span>Action=

[[#Custom_Action|Custom actions]] can also be developed.

====Run Order====

====Configuration====

Configuration elements are specific to the [[#Action_Provider|action provider]] and are passed to it.

===<span id='Artifact'></span>Artifacts===

{{External|[https://stelligent.com/2018/09/06/troubleshooting-aws-codepipeline-artifacts/ Stelligent Troubleshooting AWS CodePipeline Artifacts]}}

A [[#Revision|revision]] propagates through the pipeline by having the files associated with [[#Action|actions]] performed at different stages copied by the CodePipeline service into the S3 bucket associated with the pipeline. CodePipeline maintains a hierarchical system of "folders", implemented using [[Amazon_S3_Concepts#Key|S3 object keys]]. There is a "folder" associated with the pipeline. That "folder" contains "sub-folders", each of them associated with an [[#Input_Artifact|input artifact]] or an [[#Output_Artifact|output artifact]]. This may seem confusing at first, but a pipeline's ''artifacts'' are "folders", not the individual files produced by an action. That also explains why the pipeline passes ZIP files from one action to another: it does not have a native concept of hierarchical filesystems, so individual files are packed together as S3 objects. Artifacts may be used as input to an action - [[#Input_Artifact|Input Artifacts]] - or may be produced by an action - [[#Output_Artifact|Output Artifacts]].

{{Note|It is a little inconvenient that the logical Input Artifact and Output Artifact names used in the template are also used to generate, by truncation, the names of the corresponding S3 "folders" (actually, key portions): that leads to slightly confusing S3 "folder" names. However, given that those "folders" are never seen by a human if everything goes well, this is probably OK.}}

====<span id='Input_Artifact'></span>Input Artifacts====

{{External|[https://docs.aws.amazon.com/codepipeline/latest/APIReference/API_InputArtifact.html InputArtifact]}}

An action declares zero or more ''input [[#Artifact|artifacts]]'', which are names for S3 virtual "folders", created by the pipeline inside the pipeline's S3 "folder", which is created inside the [[#ArtifactStore|artifact store]] S3 bucket. These are not actually real folders, but a convention implemented using [[Amazon_S3_Concepts#Key|S3 object keys]]. Every input artifact for an action must match an [[#Output_Artifact|output artifact]] of an action earlier in the pipeline, whether that action is immediately before the action in a stage or runs in a stage several stages earlier.

====Output Artifacts====

{{External|[https://docs.aws.amazon.com/codepipeline/latest/APIReference/API_OutputArtifact.html OutputArtifact]}}

An action declares zero or more ''output [[#Artifact|artifacts]]'', which are names for S3 virtual "folders", created by the pipeline inside the pipeline's S3 "folder", which is created inside the [[#ArtifactStore|artifact store]] S3 bucket. These are not actually real folders, but a convention implemented using [[Amazon_S3_Concepts#Key|S3 object keys]]. Every output artifact in the pipeline must have a unique name.

==Failure==

  ActionTypeId:
    Category: Source
    Provider: GitHub
    Owner: ThirdParty
    Version: '1'
  Configuration:
    Owner: !Ref GitHubOrganizationID
    Repo: !Ref GitHubRepositoryName
    Branch: !Ref Branch
    OAuthToken: !Ref GitHubPersonalAccessCode
  InputArtifacts: []
  OutputArtifacts:
    - Name: RepositorySourceTree
  RunOrder: 1
- Name: ...
</syntaxhighlight>

The [[#Action_Provider|action provider]], which can be GitHub or another source repository provider, performs a repository clone and packages the content as a ZIP file. The ZIP file is placed in the [[#ArtifactStore|artifact store]], under the directory corresponding to the pipeline and the sub-directory named based on the "OutputArtifacts.Name" configuration element. Assuming that the pipeline is named "thalarion", the output ZIP file is placed in s3://thalarion-buildbucket-enqyf1xp13z2/thalarion/Repository. The name of the S3 "folder" is generated by truncating the name of the output artifact.

An example of a simple, working GitHub-based pipeline is available here: {{Internal|Simple GitHub Simulated Shell Build Simulated Deployment AWS CodePipeline Pipeline|Simple GitHub - Simulated Shell Build - Simulated Deployment Pipeline}}

  ActionTypeId:
    Category: Build
    Provider: CodeBuild
    Owner: AWS
    Version: '1'
  InputArtifacts:
    - Name: RepositorySourceTree
  OutputArtifacts:
    - Name: BuildspecProducedFiles
  Configuration:
    ProjectName: !Ref CodeBuildProject
  RunOrder: 1
- Name: ...
</syntaxhighlight>

The [[#Action_Provider|action provider]], which in this case is the [[AWS CodeBuild|CodeBuild service]], executes the build. Existing [[AWS_CodeBuild_Concepts#Build_Project|build projects]] can be used, or new ones can be created in the CodePipeline console. The build artifacts, as declared in the [[AWS_CodeBuild_Buildspec#artifacts|buildspec artifacts section]], are placed in the [[#ArtifactStore|artifact store]], under this pipeline's directory and a sub-directory named based on the "OutputArtifacts.Name" configuration element. Assuming that the pipeline is named "thalarion", the build artifacts are placed in s3://thalarion-buildbucket-enqyf1xp13z2/thalarion/BuildspecP. <span id='CodePipeline-Driven_CodeBuild_Builds'></span>The following article explains in detail how CodePipeline and CodeBuild interact: {{Internal|AWS CodePipeline-Driven CodeBuild Builds|CodePipeline-Driven CodeBuild Builds}}

An example of a simple, working GitHub-based pipeline is available here: {{Internal|Simple GitHub Simulated Shell Build Simulated Deployment AWS CodePipeline Pipeline|Simple GitHub - Simulated Shell Build - Simulated Deployment Pipeline}}

  ActionTypeId:
    Category: Deploy
    Provider: CloudFormation
    Owner: AWS
    Version: '1'
  InputArtifacts:
    - Name: RepositorySourceTree
    - Name: BuildspecProducedFiles
  OutputArtifacts: []
  Configuration:
    StackName: !Ref ProjectID
    TemplatePath: !Sub RepositorySourceTree::${DeploymentStackTemplate}
    # The union of parameters specified in 'TemplateConfiguration' and in 'ParameterOverrides' must
    # match exactly the set of deployment template parameters that do not have defaults
    TemplateConfiguration: BuildspecProducedFiles::cloudformation-deployment-configuration.json
    # parameter values specified in "ParameterOverrides" take precedence over the values specified in
    # 'TemplateConfiguration'
    ParameterOverrides: !Sub '{ "MyConfigurationParameterA": "yellow", "MyConfigurationParameterB": "black" }'
    ActionMode: CREATE_UPDATE
    Capabilities: CAPABILITY_NAMED_IAM
    RoleArn:
      Fn::ImportValue: !Sub '${ProjectID}-cloudformation-service-role-ARN'
</syntaxhighlight>

<font color=darkgray>Understand how the DEPLOY stage of the pipeline performs the deployment. What happens if a new pipeline run starts before the last deployment is complete? How do I stop a running pipeline? The "ActionMode: CREATE_UPDATE" of the DEPLOY stage of the pipeline probably has something to do with that: https://docs.aws.amazon.com/AWSCloudFormation/latest/UserGuide/continuous-delivery-codepipeline-action-reference.html#w2ab2c13c13b9</font>

This step relies on the presence of a CloudFormation stack template specification file, somewhere in an artifact produced by a previous pipeline stage. The simplest and most logical option is the repository root. The name of the template file is configured as "TemplatePath", which should follow the format:

 <font color=teal>''InputArtifactName''</font>::<font color=teal>''TemplateFileName''</font>

In the example above, the template file name is relative to the RepositorySourceTree InputArtifact, which is backed by an S3 "folder" that contains the full source tree stored as ZIP; the source tree contains the template file.

====TemplateConfiguration====

A CloudFormation template can be configured externally with [[AWS_CloudFormation_Concepts#Input_Parameters|parameters]], and thus the deployment template can be configured by providing configuration values in a configuration file, specified as "TemplateConfiguration". The "TemplateConfiguration" configuration element should follow the format:

 <font color=teal>''InputArtifactName''</font>::<font color=teal>''TemplateConfigurationFileName''</font>

In the example above, the template configuration file is produced by the Build action and placed in the BuildspecProducedFiles output artifact of that action, which is an S3 "folder". If the template configuration file is not found, for example because none of the previous stages created it, the deployment stage will fail with an S3 error. The configuration file can be written in JSON <font color=darkgray>and YAML</font>. A JSON configuration file is similar to:

<syntaxhighlight lang='json'>

Note that '''all''' parameters that '''do not have defaults''' in the deployment template must be provided, otherwise the deployment will fail with: "Action execution failed: Parameters: [...] must have values (Service: AmazonCloudFormation; Status Code: 400; Error Code: ValidationError."

If the CloudFormation deployment template does not expose any parameters without default values, or if those parameters are supplied by other means ("[[#ParameterOverrides|ParameterOverrides]]"), then "TemplateConfiguration" can be omitted.

====ParameterOverrides====

{{External|[https://docs.aws.amazon.com/AWSCloudFormation/latest/UserGuide/continuous-delivery-codepipeline-action-reference.html Parameter Overrides]}}

{{External|[https://docs.aws.amazon.com/AWSCloudFormation/latest/UserGuide/continuous-delivery-codepipeline-parameter-override-functions.html Using Parameter Override Functions with CodePipeline Pipelines]}}

It is possible to override values from the template configuration file in the pipeline definition, using the "ParameterOverrides" key. If the same parameter is specified both in "ParameterOverrides" and in the template configuration file, the value specified in "ParameterOverrides" takes precedence. The union of the parameters specified as "ParameterOverrides" and those coming from the configuration file should match exactly the set of template parameters that have no defaults.

 ParameterOverrides: !Sub '{ "MyConfigurationParameterA": "yellow", "MyConfigurationParameterB": "black" }'

or

 ParameterOverrides: |
   {
     "MyConfigurationParameterA": "yellow",
     "MyConfigurationParameterB": "black"
   }

or

 ParameterOverrides: !Sub |
   {
     "BuildBucket": "${ProjectID}-release-pipeline-build-bucket-2"
   }

<font color=darkgray>I could not make the following work; I am not sure whether Fn::ImportValue can be used inside a ParameterOverrides block:</font>

 ParameterOverrides: |
   {
     "BuildBucket": "Fn::ImportValue" : "thalarion-release-pipeline-build-bucket"
   }
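One approach that may work, offered as an untested sketch: "ParameterOverrides" is a JSON string, so a CloudFormation intrinsic function written inside the string is not evaluated. The import can instead be resolved by the pipeline template itself and substituted into the string with the two-argument form of Fn::Sub. "ImportedBuildBucket" is a hypothetical variable name introduced for illustration; the export name is the one used in the example above.

```yaml
ParameterOverrides:
  Fn::Sub:
    # First element: the JSON string handed to the CloudFormation action
    - '{ "BuildBucket": "${ImportedBuildBucket}" }'
    # Second element: variables local to this Fn::Sub call
    - ImportedBuildBucket:
        Fn::ImportValue: thalarion-release-pipeline-build-bucket
```

With this form the import is resolved when the pipeline stack is created or updated, so the action only ever sees a plain JSON string.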

Parameters that are numbers cannot be passed without quotation marks. Assuming that the ANumber parameter was declared with a "Type: Number" in the template, the following declaration is incorrect, even if the deployment template also declares the parameter as a Number:

 ParameterOverrides: !Sub '{ "ANumber": ${ANumber}}'

The correct declaration is (note the value enclosed in quotes):

 ParameterOverrides: !Sub '{ "ANumber": "${ANumber}"}'

An example of a simple, working GitHub-based pipeline is available here: {{Internal|Simple GitHub Simulated Shell Build Simulated Deployment AWS CodePipeline Pipeline|Simple GitHub - Simulated Shell Build - Simulated Deployment Pipeline}}

====Capabilities====

{{Internal|AWS_CloudFormation_Concepts#Capabilities|CloudFormation Concepts - Capabilities}}

===Continuous Delivery with CloudFormation and CodePipeline===

{{External|[https://docs.aws.amazon.com/AWSCloudFormation/latest/UserGuide/continuous-delivery-codepipeline.html Continuous Delivery with CloudFormation and CodePipeline]}}

One of the deploy action providers is [[AWS_CloudFormation_Concepts#Continuous_Delivery_with_CloudFormation_and_CodePipeline|CloudFormation]].

==<span id='Approval_Action'></span>Approval==

==<span id='Invoke_Action'></span>Invoke==

Invokes an [[AWS Lambda]] function.

See: {{Internal|Invoking Lambda from CodePipeline Pipeline|Invoking Lambda from CodePipeline Pipeline}}
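As a minimal sketch of what an Invoke stage could look like, assuming a Lambda function declared in the same template under the hypothetical logical name "NotifierFunction" (the stage name, action name and "UserParameters" payload are also made up for illustration):

```yaml
- Name: Notify
  Actions:
    - Name: invoke-lambda
      ActionTypeId:
        Category: Invoke
        Owner: AWS
        Provider: Lambda
        Version: '1'
      Configuration:
        # !Ref on an AWS::Lambda::Function resource returns the function name
        FunctionName: !Ref NotifierFunction
        # Free-form string passed to the function as part of the job event
        UserParameters: '{"channel": "releases"}'
      InputArtifacts: []
      OutputArtifacts: []
      RunOrder: 1
```

The function is expected to report the outcome back to CodePipeline (PutJobSuccessResult or PutJobFailureResult); until it does, the action stays in progress.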

=Custom Action=

{{External|https://docs.aws.amazon.com/codepipeline/latest/userguide/actions-create-custom-action.html}}

Custom actions can be developed.

A transition is the act of a [[#Revision|revision]] in a pipeline continuing from one stage to the next in a [[#Workflow|workflow]]. After a [[#Stage|stage]] is completed, the pipeline transitions the [[#Revision|revision]] and its artifacts created by the [[#Action|actions]] in that stage to the next stage in the pipeline. A transition can be manually enabled or disabled. If a transition is disabled, the pipeline will run all actions in the stages before that transition, but will not run any stages or actions after that stage. This is a simple way to prevent changes from running through the entire pipeline.

If a transition is enabled, the most recent revision that ran successfully through the previous stages will be run through the stages after that transition. If all transitions are enabled, the pipeline runs continuously, implementing the concept of [[Continuous Delivery#Overview|continuous delivery]].
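Besides toggling a transition manually, a disabled transition can also be declared in the pipeline's CloudFormation template. The fragment below is a sketch under the assumption that the pipeline has a stage named "Deploy"; the stage name and the reason text are illustrative.

```yaml
Resources:
  MyPipeline:
    Type: AWS::CodePipeline::Pipeline
    Properties:
      # RoleArn, ArtifactStore and Stages omitted for brevity
      DisableInboundStageTransitions:
        # Revisions stop before entering the named stage
        - StageName: Deploy
          Reason: Deployments are paused during the release freeze
```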

=Approval Action=

An approval action prevents a [[#Pipeline|pipeline]] from transitioning to the next [[#Stage|stage]] until permission is granted - for example, manual approval by a user who performed a code review.
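A manual approval gate can be sketched as a stage with a single action, as shown below. The stage name, action name and "CustomData" text are assumptions made for illustration.

```yaml
- Name: Approve
  Actions:
    - Name: manual-approval
      ActionTypeId:
        Category: Approval
        Owner: AWS
        Provider: Manual
        Version: '1'
      Configuration:
        # Message shown to the reviewer in the console
        CustomData: Approve once the code review is complete
      RunOrder: 1
```

Placing this stage immediately before the deployment stage holds each revision until someone approves or rejects it.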

Latest revision as of 01:52, 1 January 2022

External

- https://docs.aws.amazon.com/codepipeline/latest/userguide/concepts.html

- CreatePipeline API Request Reference

Internal

CodePipeline as AWS Service

CodePipeline is an AWS service, named "codepipeline.amazonaws.com".

Pipeline

A pipeline is a top-level AWS resource that provides CI/CD release pipeline functionality.

From a conceptual perspective, a pipeline is a workflow construct that describes how software changes go through a release process.

As implemented in AWS, the pipeline consists of a set of sequential stages, each stage containing one or more actions. A specific stage is always in a fixed position relative to other stages, and only one stage runs at a time. However, actions within a stage can be executed sequentially, according to their run order, or in parallel. Stages and actions process artifacts, which "advance" along the pipeline.

A pipeline starts automatically when a change is made in the source location, as defined in a source action in a pipeline. The pipeline can also be started manually. A rule can be configured in Amazon CloudWatch to automatically start a pipeline when a specific event occurs. After a pipeline starts, the revision runs through every stage and action in the pipeline.
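As a hedged sketch of the event-driven start mentioned above, a CloudWatch Events rule can name the pipeline as its target. The rule name, the daily schedule and the "PipelineTriggerRoleArn" parameter are assumptions made for illustration; "Pipeline" is assumed to be the logical name of the AWS::CodePipeline::Pipeline resource in the same template, and the role is assumed to trust events.amazonaws.com and to allow codepipeline:StartPipelineExecution.

```yaml
Parameters:
  # Hypothetical parameter: ARN of an IAM role that events.amazonaws.com can assume
  PipelineTriggerRoleArn:
    Type: String
Resources:
  PipelineTriggerRule:
    Type: AWS::Events::Rule
    Properties:
      Description: Starts the pipeline once a day (illustrative schedule)
      ScheduleExpression: 'rate(1 day)'
      State: ENABLED
      Targets:
        - Id: start-pipeline
          # ${Pipeline} resolves to the pipeline name, as !Ref would
          Arn: !Sub 'arn:aws:codepipeline:${AWS::Region}:${AWS::AccountId}:${Pipeline}'
          RoleArn: !Ref PipelineTriggerRoleArn
```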

A pipeline can be created with the following CloudFormation sequence:

Resources:
  Pipeline:
    Type: AWS::CodePipeline::Pipeline
    Properties:
      Name: !Ref AWS::StackName
      RoleArn: 'arn:aws:iam::777777777777:role/CodePipelineServiceRole-1'
      ArtifactStore:
        Type: 'S3'
        Location: 'experimental-s3-bucket-for-codepipeline'
      ...
      Stages:
        ...

An example of a simple, working GitHub-based pipeline is available here:

Required Configuration

The pipeline requires a number of configuration properties:

RoleArn

The pipeline needs to be associated with a service role, which allows the codepipeline service to execute various actions required by pipeline operations.

ArtifactStore

The pipeline requires an artifact store, which provides the storage for transient and final artifacts that are processed by the various stages and actions. In most cases, the storage is provided by an Amazon S3 bucket. "Location" specifies the name of the bucket. When the pipeline is initialized, the codepipeline service creates a directory associated with the pipeline. The directory will have the same name as the pipeline. As the pipeline operates, sub-directories corresponding to the various input and output artifacts declared by actions will also be created.

Assuming that "BuildBucket" is the resource name of the artifact store S3 bucket, then the name of the pipeline's build folder can be dereferenced as:

!Sub '${BuildBucket}/${AWS::StackName}'

When the Amazon Console is used to create the first pipeline, an S3 bucket is created in the same region as the pipeline, to be used to store items for all pipelines in that region associated with the account.

Optional Configuration

Optionally, the pipeline can also be configured with a name:

Name

Optional parameter that provides the physical ID of the pipeline. If not specified, a name is generated following the pattern stack-name-Pipeline-24RCYXM52UE6A. A recommended name is:

Name: !Ref AWS::StackName

Revision

A revision is a change made to a source that feeds a pipeline. The revision can be triggered by a git push command, or an S3 file update in a versioned S3 bucket. Each revision runs separately through the pipeline. Multiple revisions can be processed in the same pipeline, but each stage can process only one revision at a time. Revisions are run through the pipeline as soon as a change is made in the location specified in the source stage of the pipeline.

Because only one revision can run through a stage at a time, CodePipeline batches any revisions that have completed the previous stage until the next stage is available. If a more recent revision completes running through the stage, the batched revision is replaced by the most current revision.

Stage

A stage is a component of the workflow implemented by the pipeline. Each stage has a unique name within the pipeline. Intrinsically related to a stage is the concept of serialization: stages cannot be executed in parallel; only one stage of the pipeline executes at a time.

A pipeline must have at least two stages; a one-stage pipeline is considered invalid. A stage contains one or more actions, which can be executed sequentially or in parallel. All actions configured in a stage must complete successfully before the stage is considered complete.

Resources:
  MyPipeline:
    Type: AWS::CodePipeline::Pipeline
    ...
      Stages:
        - Name: ...
          Actions:
            - ...
        - Name: ...
          Actions:
            - ...

Action

An action is a task performed on an artifact, and it is triggered at a specific stage of a pipeline. Actions may run in a specified order or in parallel, depending on their configuration. All actions share a common structure:

Action Structure

Action Name

An action name must match the regular expression pattern: [A-Za-z0-9.@\-_]+ The action name must not contain spaces.

Action Type Declaration (ActionTypeId)

The action type declaration specifies an action provider. Currently, six types of actions are supported:

Custom actions can also be developed.

Run Order
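The following fragment sketches how run order could be used within a single stage: actions that share a RunOrder value may run in parallel, while a higher value runs only after the lower ones complete. The stage, action and CodeBuild project names are hypothetical; the input artifact name is the one used by the examples in this article.

```yaml
- Name: Verify
  Actions:
    # UnitTests and Lint share RunOrder 1, so they may run in parallel
    - Name: UnitTests
      ActionTypeId: { Category: Test, Owner: AWS, Provider: CodeBuild, Version: '1' }
      InputArtifacts:
        - Name: RepositorySourceTree
      Configuration:
        ProjectName: !Ref UnitTestProject
      RunOrder: 1
    - Name: Lint
      ActionTypeId: { Category: Test, Owner: AWS, Provider: CodeBuild, Version: '1' }
      InputArtifacts:
        - Name: RepositorySourceTree
      Configuration:
        ProjectName: !Ref LintProject
      RunOrder: 1
    # IntegrationTests starts only after both RunOrder 1 actions succeed
    - Name: IntegrationTests
      ActionTypeId: { Category: Test, Owner: AWS, Provider: CodeBuild, Version: '1' }
      InputArtifacts:
        - Name: RepositorySourceTree
      Configuration:
        ProjectName: !Ref IntegrationTestProject
      RunOrder: 2
```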

Configuration

Configuration elements are specific to the action provider and are passed to it.

Artifacts

A revision propagates through the pipeline by having the files associated with actions performed at different stages copied by the CodePipeline service into the S3 bucket associated with the pipeline. CodePipeline maintains a hierarchical system of "folders", implemented using S3 object keys. There is a "folder" associated with the pipeline. That "folder" contains "sub-folders", each of them associated with an input artifact or an output artifact. This may seem confusing at first, but a pipeline's artifacts are "folders", not the individual files produced by an action. That also explains why the pipeline passes ZIP files from one action to another: it does not have a native concept of hierarchical filesystems, so individual files are packed together as S3 objects. Artifacts may be used as input to an action - Input Artifacts - or may be produced by an action - Output Artifacts.

It is a little inconvenient that the logical Input Artifact and Output Artifact names used in the template are also used to generate, by truncation, the names of the corresponding S3 "folders" (actually, key portions): that leads to slightly confusing S3 "folder" names. However, given that those "folders" are never seen by a human if everything goes well, this is probably OK.

Input Artifacts

An action declares zero or more input artifacts, which are names for S3 virtual "folders", created by the pipeline inside the pipeline's S3 "folder", which is created inside the artifact store S3 bucket. These are not actually real folders, but a convention implemented using S3 object keys. Every input artifact for an action must match an output artifact of an action earlier in the pipeline, whether that action is immediately before the action in a stage or runs in a stage several stages earlier.

Output Artifacts

An action declares zero or more output artifacts, which are names for S3 virtual "folders", created by the pipeline inside the pipeline's S3 "folder", which is created inside the artifact store S3 bucket. These are not actually real folders, but a convention implemented using S3 object keys. Every output artifact in the pipeline must have a unique name.

Failure

A failure is an action that has not completed successfully. If one action fails in a stage, the revision does not transition to the next action in the stage or the next stage in the pipeline. If a failure occurs, no more transitions occur in the pipeline for that revision. CodePipeline pauses the pipeline until one of the following occurs:

- The stage is manually retried.

- The pipeline is restarted for that revision.

- Another revision is made in a source stage action.

Available Actions

Source

Resources:
  MyPipeline:
    Type: AWS::CodePipeline::Pipeline
    Properties:
      ...
      Stages:
        - Name: Source
          Actions:
            - Name: !Sub 'github-pull-${Branch}'
              ActionTypeId:
                Category: Source
                Provider: GitHub
                Owner: ThirdParty
                Version: '1'
              Configuration:
                Owner: !Ref GitHubOrganizationID
                Repo: !Ref GitHubRepositoryName
                Branch: !Ref Branch
                OAuthToken: !Ref GitHubPersonalAccessCode
              InputArtifacts: []
              OutputArtifacts:
                - Name: RepositorySourceTree
              RunOrder: 1
        - Name: ...

The action provider, which can be GitHub or another source repository provider, performs a repository clone and packages the content as a ZIP file. The ZIP file is placed in the artifact store, under the directory corresponding to the pipeline and the sub-directory named based on the "OutputArtifacts.Name" configuration element. Assuming that the pipeline is named "thalarion", the output ZIP file is placed in s3://thalarion-buildbucket-enqyf1xp13z2/thalarion/Repository. The name of the S3 "folder" is generated by truncating the name of the output artifact, which is why "RepositorySourceTree" appears as "Repository".

An example of a simple, working GitHub-based pipeline is available here:

GitHub Authentication

Build

    Resources:
      MyPipeline:
        Type: AWS::CodePipeline::Pipeline
        Properties:
          ...
          Stages:
            ...
            - Name: Build
              Actions:
                - Name: !Sub '${Buildspec}-driven-CodeBuild'
                  ActionTypeId:
                    Category: Build
                    Provider: CodeBuild
                    Owner: AWS
                    Version: '1'
                  InputArtifacts:
                    - Name: RepositorySourceTree
                  OutputArtifacts:
                    - Name: BuildspecProducedFiles
                  Configuration:
                    ProjectName: !Ref CodeBuildProject
                  RunOrder: 1
            - Name: ...

The action provider, which in this case is the CodeBuild service, executes the build. Existing build projects can be used, or new ones can be created in the CodePipeline console. The build artifacts, as declared in the buildspec artifacts section, are placed in the artifact store, under this pipeline's directory and a sub-directory named based on the "OutputArtifacts.Name" configuration element. Assuming that the pipeline is named "thalarion", the build artifacts are placed in s3://thalarion-buildbucket-enqyf1xp13z2/thalarion/BuildspecP. The following article explains in detail how CodePipeline and CodeBuild interact:

An example of a simple, working GitHub-based pipeline is available here:

Test

Deploy

    Resources:
      MyPipeline:
        Type: AWS::CodePipeline::Pipeline
        Properties:
          ...
          Stages:
            ...
            - Name: Deploy
              Actions:
                - Name: !Sub '${DeploymentStackTemplate}-driven-deployment'
                  ActionTypeId:
                    Category: Deploy
                    Provider: CloudFormation
                    Owner: AWS
                    Version: '1'
                  InputArtifacts:
                    - Name: RepositorySourceTree
                    - Name: BuildspecProducedFiles
                  OutputArtifacts: []
                  Configuration:
                    StackName: !Ref ProjectID
                    TemplatePath: !Sub RepositorySourceTree::${DeploymentStackTemplate}
                    # The union of parameters specified in 'TemplateConfiguration' and in 'ParameterOverrides' must
                    # match exactly the set of deployment template parameters that do not have defaults
                    TemplateConfiguration: BuildspecProducedFiles::cloudformation-deployment-configuration.json
                    # parameter values specified in "ParameterOverrides" take precedence over the values specified in
                    # 'TemplateConfiguration'
                    ParameterOverrides: !Sub '{ "MyConfigurationParameterA": "yellow", "MyConfigurationParameterB": "black" }'
                    ActionMode: CREATE_UPDATE
                    Capabilities: CAPABILITY_NAMED_IAM
                    RoleArn:
                      Fn::ImportValue: !Sub '${ProjectID}-cloudformation-service-role-ARN'
                  RunOrder: 1

To do: understand how the DEPLOY stage of the pipeline performs the deployment. What happens if a new pipeline run starts before the last deployment is complete? How do I stop a running pipeline? The "ActionMode: CREATE_UPDATE" setting of the DEPLOY stage probably has something to do with that: https://docs.aws.amazon.com/AWSCloudFormation/latest/UserGuide/continuous-delivery-codepipeline-action-reference.html#w2ab2c13c13b9

This step relies on the presence of a CloudFormation stack template specification file, somewhere in an artifact produced by a previous pipeline stage. The simplest and the most logical option is the repository root. The name of the template file is configured as "TemplatePath", which should follow the format:

InputArtifactName::TemplateFileName

In the example above, the template file name is relative to the RepositorySourceTree input artifact, which is backed by an S3 "folder" that contains the full source tree stored as a ZIP file; the source tree contains the template file.

TemplateConfiguration

A CloudFormation template can be configured externally with parameters, and thus the deployment template can be configured by providing configuration values in a configuration file, specified as "TemplateConfiguration". The "TemplateConfiguration" configuration element should follow the format:

InputArtifactName::TemplateConfigurationFileName

In the example above, the template configuration file is produced by the Build action and placed in the BuildspecProducedFiles output artifact of that action, which is an S3 "folder". If the template configuration file is not found, for example because none of the previous stages created it, the deployment stage will fail with an S3 error. The configuration file allows JSON and YAML. A JSON configuration file is similar to:

    {
      "Parameters": {
        "MyConfigurationParameter": "my value"
      }
    }

The buildspec produces it as follows:

...

- echo "{\"Parameters\":{\"MyConfigurationParameter\":\"spurious\"}}" > ./cloudformation-deployment-configuration.json

...

Note that all parameters that do not have defaults in the deployment template must be provided, otherwise the deployment will fail with: "Action execution failed: Parameters: [...] must have values (Service: AmazonCloudFormation; Status Code: 400; Error Code: ValidationError."

If the CloudFormation deployment template exposes no parameters without default values, or if those parameters are supplied by other means ("ParameterOverrides"), then "TemplateConfiguration" can be omitted.

ParameterOverrides

It is possible to override values from the template configuration file in the pipeline definition, using the "ParameterOverrides" key. If the same parameter is specified both in "ParameterOverrides" and in the template configuration file, the value specified in "ParameterOverrides" takes precedence. The union of the parameters specified as "ParameterOverrides" and those coming from the configuration file should match exactly the set of template parameters that have no defaults.

ParameterOverrides: !Sub '{ "MyConfigurationParameterA": "yellow", "MyConfigurationParameterB": "black" }'

or

    ParameterOverrides: |
      {
        "MyConfigurationParameterA": "yellow",
        "MyConfigurationParameterB": "black"
      }

or

    ParameterOverrides: !Sub |
      {
        "BuildBucket": "${ProjectID}-release-pipeline-build-bucket-2"
      }

I could not make the following work. I am not sure whether Fn::ImportValue can be used inside a ParameterOverrides block:

    ParameterOverrides: |
      {
        "BuildBucket": "Fn::ImportValue" : "thalarion-release-pipeline-build-bucket"
      }
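A likely explanation is that a literal block is passed to the action as plain text, so an intrinsic function written inside it is never evaluated (and the text above is not valid JSON either). One workaround worth trying is the two-argument form of Fn::Sub, where the imported value is bound to a substitution variable outside the string. This is an untested sketch; the variable name ImportedBuildBucket is made up for illustration, and the export name is taken from the attempt above:

```yaml
ParameterOverrides:
  Fn::Sub:
    # First element: the JSON text, with a placeholder for the imported value
    - '{ "BuildBucket": "${ImportedBuildBucket}" }'
    # Second element: variable map; ImportedBuildBucket is a hypothetical name
    - ImportedBuildBucket:
        Fn::ImportValue: thalarion-release-pipeline-build-bucket
```

With this form, CloudFormation resolves the import first and substitutes the result into the string, so the action receives ordinary JSON.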

Parameters that are numbers cannot be passed without quotation marks. Assuming that the ANumber parameter was declared with a "Type: Number" in the template, the following declaration is incorrect, even if the deployment template also declares the parameter as a Number:

ParameterOverrides: !Sub '{ "ANumber": ${ANumber}}'

The correct declaration is (note the value enclosed in quotes):

ParameterOverrides: !Sub '{ "ANumber": "${ANumber}"}'

An example of a simple, working GitHub-based pipeline is available here:

Capabilities

Continuous Delivery with CloudFormation and CodePipeline

One of the deploy action providers is CloudFormation.

Approval

Invoke

Invokes an AWS Lambda function.
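A minimal sketch of a stage containing an invoke action, written in the same style as the stages above. The stage name, the action name, the PipelineHookFunction reference and the content of UserParameters are assumptions made for illustration:

```yaml
- Name: Invoke
  Actions:
    - Name: invoke-lambda
      ActionTypeId:
        Category: Invoke
        Provider: Lambda
        Owner: AWS
        Version: '1'
      Configuration:
        # Hypothetical Lambda function resource or parameter holding the function name
        FunctionName: !Ref PipelineHookFunction
        # Free-form string handed to the function as part of the job data
        UserParameters: '{ "environment": "test" }'
      InputArtifacts: []
      OutputArtifacts: []
      RunOrder: 1
```

The function is expected to report the outcome back to CodePipeline by calling PutJobSuccessResult or PutJobFailureResult, otherwise the action stays in progress until it times out.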

See:

Custom Action

Custom actions can be developed.
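As a rough sketch, a custom action type can be declared in CloudFormation with the AWS::CodePipeline::CustomActionType resource. The logical name, the provider name and the configuration property below are invented for illustration:

```yaml
Resources:
  MyCustomAction:                      # hypothetical logical name
    Type: AWS::CodePipeline::CustomActionType
    Properties:
      Category: Test
      Provider: MyTestRunner           # hypothetical provider name
      Version: '1'
      InputArtifactDetails:
        MinimumCount: 0
        MaximumCount: 1
      OutputArtifactDetails:
        MinimumCount: 0
        MaximumCount: 1
      ConfigurationProperties:
        - Name: SuiteName              # hypothetical configuration key
          Key: true
          Required: true
          Secret: false
          Type: String
```

A pipeline action would then refer to it with "Owner: Custom" and the same Category, Provider and Version. The work itself is done by a job worker that polls CodePipeline for jobs of this action type and reports their results.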

Transition

A transition is the act of a revision in a pipeline continuing from one stage to the next in a workflow. After a stage is completed, the pipeline transitions the revision and the artifacts created by the actions in that stage to the next stage in the pipeline. A transition can be manually enabled or disabled. If a transition is disabled, the pipeline will run all actions in the stages before that transition, but will not run any stages or actions after it. This is a simple way to prevent changes from running through the entire pipeline.

If a transition is enabled, the most recent revision that ran successfully through the previous stages will be run through the stages after that transition. If all transitions are enabled, the pipeline runs continuously, implementing the concept of continuous delivery.
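Besides being toggled manually, a transition can be declared as disabled in the pipeline template itself, using the DisableInboundStageTransitions property. A minimal sketch, assuming the pipeline has a stage named Deploy; the reason text is made up:

```yaml
Resources:
  MyPipeline:
    Type: AWS::CodePipeline::Pipeline
    Properties:
      # ... other pipeline properties and the Stages list are omitted here
      DisableInboundStageTransitions:
        # Revisions stop before entering the named stage
        - StageName: Deploy
          Reason: 'Deployments are paused pending review'
```

With this in place, revisions run through the stages before Deploy and wait there until the transition is enabled again.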

Approval Action

An approval action prevents a pipeline from transitioning to the next stage until permission is granted, for example through manual approval by a user who performed a code review.
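A minimal sketch of a stage that holds the pipeline until someone approves, in the same style as the stages above. The stage name, the action name, the ApprovalNotificationTopic reference and the message text are assumptions made for illustration:

```yaml
- Name: Approve
  Actions:
    - Name: manual-approval
      ActionTypeId:
        Category: Approval
        Provider: Manual
        Owner: AWS
        Version: '1'
      Configuration:
        # Optional; hypothetical SNS topic notified when approval is requested
        NotificationArn: !Ref ApprovalNotificationTopic
        # Optional message shown to the approver
        CustomData: 'Please confirm that the code review is complete.'
      InputArtifacts: []
      OutputArtifacts: []
      RunOrder: 1
```

Placing this stage between Build and Deploy would keep a revision from being deployed until the approval is granted; a rejection is treated as a failed action.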