Amazon Elastic File System Concepts: Difference between revisions


=Internal=

* [[Amazon Elastic File System#Subjects|Amazon Elastic File System]]

* [[Linux_NFS|Linux NFS]]

=Overview=

Amazon Elastic File System (EFS) provides a scalable [[Storage_Concepts#Filesystem_Storage|filesystem]] for Linux-based workloads, for use with AWS cloud services and on-premises resources. More details about the filesystem itself are available in the [[#EFS_File_System|EFS File System]] section below.

EFS is built to scale on demand to petabytes without disrupting applications, growing and shrinking automatically as files are added and removed. It is designed to provide massively parallel shared access to thousands of Amazon EC2 instances, enabling applications to achieve high levels of aggregate throughput and IOPS with consistent low latencies. EFS is a fully managed service that exposes a standard file system interface, so existing applications and tools work without changes. It is a regional service that stores data within and across multiple Availability Zones. File systems can be accessed across Availability Zones and regions, and from on-premises resources via AWS Direct Connect or AWS VPN. EFS suits a broad spectrum of use cases, from highly parallelized, scale-out workloads that require the highest possible throughput to single-threaded, latency-sensitive workloads.

=EFS and EC2=

{{Internal|Amazon_EC2_Concepts#Amazon_Elastic_File_System_.28EFS.29|EFS and EC2}}

=EFS File System=

The EFS file system is exposed as a POSIX-compliant file system mounted in an EC2 instance, a Docker container or an EKS pod. The most common use case is to mount EFS file systems on instances running in the AWS Cloud, but it is also possible to mount them on servers running in an on-premises data center, over AWS Direct Connect or AWS VPN.

The persistent storage backing the EFS file system is managed by the AWS infrastructure and is not directly accessible. Instead, an EFS file system is accessed from a VPC via a [[#Mount_Target|mount target]]. EFS file systems are mounted over NFS version 4.0 or 4.1, so the appropriate [[Linux_NFS_Concepts#NFS_Client|NFS client]] must be available in the environment attempting to mount the file system.

An EFS file system is a primary resource. It has an [[#File_System_ID|ID]], a creation token, a creation time and a lifecycle state.

Each file system has a DNS name of the following form:

 <file-system-id>.efs.<aws-region>.amazonaws.com

==File System ID==
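
The file system ID is the only per-file-system part of the DNS name form shown above. As a minimal sketch, the name can be assembled from the ID and the region, and then used as the source of an NFS 4.1 mount. The function names are hypothetical, and the ID pattern (<code>fs-</code> followed by hexadecimal digits) is an assumption that should be checked against the IDs in your account.

```python
import re
from typing import List

# Assumption: file system IDs look like "fs-" followed by hex digits.
_FS_ID_PATTERN = re.compile(r"^fs-[0-9a-f]+$")


def efs_dns_name(file_system_id: str, region: str) -> str:
    """Build <file-system-id>.efs.<aws-region>.amazonaws.com."""
    if not _FS_ID_PATTERN.match(file_system_id):
        raise ValueError(f"unexpected file system ID: {file_system_id!r}")
    return f"{file_system_id}.efs.{region}.amazonaws.com"


def nfs_mount_argv(file_system_id: str, region: str, mount_point: str) -> List[str]:
    """Return the argument vector for mounting the file system root over NFS 4.1."""
    source = f"{efs_dns_name(file_system_id, region)}:/"
    return ["mount", "-t", "nfs4", "-o", "nfsvers=4.1", source, mount_point]


if __name__ == "__main__":
    # Hypothetical ID, region and mount point, for illustration only.
    print(" ".join(nfs_mount_argv("fs-12345678", "us-east-1", "/mnt/efs")))
```

The argument vector is returned rather than executed, so the caller decides whether to run it (for example with <code>subprocess.run</code>, as root) or only log it.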

=Mount Target=

{{External|https://docs.aws.amazon.com/efs/latest/ug/API_CreateMountTarget.html}}

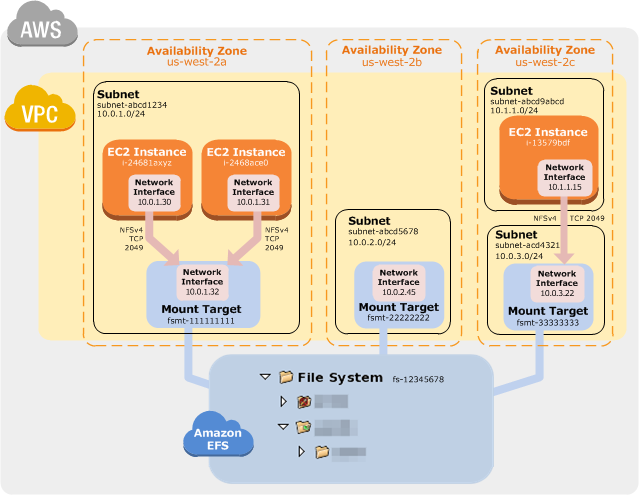

A mount target can be thought of as an NFS server with a fixed IP address that exists in a particular subnet of a VPC. The mount target provides the IP address for the NFSv4 endpoint at which the EFS file system can be mounted. The mount target's IP address is associated with a DNS name. Mount targets are designed to be highly available.

A file system can have one mount target per Availability Zone. If an Availability Zone contains multiple subnets, the mount target is created in one of them, and all EC2 instances in that Availability Zone share it. An EFS file system can only have mount targets in one VPC at a time.

Each mount target has an ID, the ID of the subnet in which it was created, the ID of the file system for which it was created, the IP address at which the file system can be mounted, a set of VPC security groups and a state. The mount target is a sub-resource of a file system: it can only be created within the context of an existing file system.

For example, if an Availability Zone has both a public and a private subnet, only one of them hosts the mount target. Instances in the other subnet use the same mount target, and some routing adjustments might be required.
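
The one-mount-target-per-Availability-Zone constraint can be illustrated with a small sketch that, given the subnets of a VPC, keeps a single subnet per zone as the place to create a mount target. The function name, the input shape and the "first subnet wins" policy are all assumptions made for illustration; a real deployment would probably choose the subnet deliberately (for example, the private one).

```python
from typing import Dict, Iterable, Tuple


def pick_mount_target_subnets(subnets: Iterable[Tuple[str, str]]) -> Dict[str, str]:
    """Map each Availability Zone to one subnet ID.

    `subnets` is an iterable of (subnet_id, availability_zone) pairs.
    The first subnet seen for a zone is kept; later ones are ignored.
    """
    chosen: Dict[str, str] = {}
    for subnet_id, zone in subnets:
        chosen.setdefault(zone, subnet_id)
    return chosen


if __name__ == "__main__":
    # Hypothetical subnet IDs and zones.
    vpc_subnets = [
        ("subnet-private-a", "us-east-1a"),
        ("subnet-public-a", "us-east-1a"),
        ("subnet-private-b", "us-east-1b"),
    ]
    for zone, subnet_id in pick_mount_target_subnets(vpc_subnets).items():
        print(f"{zone}: create mount target in {subnet_id}")
```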

[[File:efs.png]]

=<span id='EFS_Access_Point'></span>Access Point=

{{External|https://docs.aws.amazon.com/efs/latest/ug/efs-access-points.html}}

An access point is an abstraction that applies an operating system user, group and file system path to every file system request made through it. The access point's operating system user and group override any identity information provided by the NFS client. An access point can also enforce a different root directory for the file system: the configured file system path is exposed to the client as the root directory, so clients can only access data in that directory and its subdirectories. This ensures that each application always uses the correct operating system identity and the correct directory when accessing shared file-based datasets.

==Access Point Elements==

====File System====

Each access point is associated with one and only one file system.

====Name====

An optional name, shown in the console. The access point always gets a generated ID, whether or not it is named.

====Root Directory Path====

{{External|https://docs.aws.amazon.com/efs/latest/ug/efs-access-points.html#enforce-root-directory-access-point}}

The path specified here is exposed to clients as the EFS file system's virtual root directory. If nothing is specified, the default is the file system root "/".

====POSIX User ID====

{{External|https://docs.aws.amazon.com/efs/latest/ug/efs-access-points.html#enforce-identity-access-points}}

The POSIX user ID used for all file system operations performed through this access point (values from 0 to 4294967295).

====POSIX Group ID====

The POSIX group ID used for all file system operations performed through this access point (values from 0 to 4294967295).

====POSIX Secondary group IDs====

Secondary POSIX group IDs used for all file system operations performed through this access point.

====Root Directory Creation Owner User ID====

{{External|https://docs.aws.amazon.com/efs/latest/ug/efs-access-points.html#enforce-root-directory-access-point}}

If the specified root directory does not already exist, EFS creates it automatically with the owner user ID, owner group ID and permissions given in this and the following two elements.

====Root Directory Creation Owner Group ID====

====POSIX Permissions to Apply to the Root Directory Path====

Required. Usually 0755.
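
The elements above can be gathered into a single request structure. The sketch below builds a plain dictionary and validates the ID ranges listed above. The key names (<code>PosixUser</code>, <code>RootDirectory</code>, <code>CreationInfo</code> and so on) follow the parameters of boto3's <code>create_access_point</code> call as best understood here, which is an assumption to verify against the API reference. The function name and defaults are hypothetical.

```python
from typing import Any, Dict, Optional, Sequence

MAX_POSIX_ID = 4294967295  # upper bound for user and group IDs, as listed above


def _check_id(name: str, value: int) -> int:
    """Reject IDs outside the 0..4294967295 range."""
    if not 0 <= value <= MAX_POSIX_ID:
        raise ValueError(f"{name} must be between 0 and {MAX_POSIX_ID}, got {value}")
    return value


def access_point_request(
    file_system_id: str,
    uid: int,
    gid: int,
    root_path: str = "/",
    owner_uid: Optional[int] = None,
    owner_gid: Optional[int] = None,
    permissions: str = "0755",
    secondary_gids: Sequence[int] = (),
) -> Dict[str, Any]:
    """Assemble the access point elements into one request dictionary."""
    request: Dict[str, Any] = {
        "FileSystemId": file_system_id,
        "PosixUser": {"Uid": _check_id("uid", uid), "Gid": _check_id("gid", gid)},
        "RootDirectory": {"Path": root_path},
    }
    if secondary_gids:
        request["PosixUser"]["SecondaryGids"] = [
            _check_id("secondary gid", g) for g in secondary_gids
        ]
    # Creation info only applies when the root directory may need to be created.
    if owner_uid is not None and owner_gid is not None:
        request["RootDirectory"]["CreationInfo"] = {
            "OwnerUid": _check_id("owner_uid", owner_uid),
            "OwnerGid": _check_id("owner_gid", owner_gid),
            "Permissions": permissions,
        }
    return request


if __name__ == "__main__":
    # Hypothetical file system ID and application directory.
    print(access_point_request("fs-12345678", 1000, 1000, "/app", 1000, 1000))
```

If the key names match the API, the resulting dictionary could be passed as keyword arguments to the EFS client's <code>create_access_point</code> method. Building it separately keeps the validation testable without AWS credentials.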

==Access Point Operations==

* [[Amazon_Elastic_File_System_Operations#Create_an_Access_Point|Create an Access Point]]

* [[Amazon_Elastic_File_System_Operations#Remove_an_Access_Point|Remove an Access Point]]

=Data Sharing and Consistency=

An EFS file system can be accessed concurrently from multiple NFS clients, deployed in multiple Availability Zones within the same AWS Region.

Amazon EFS provides the [[Linux_NFS_Concepts#Close-to-Open_Consistency|close-to-open consistency]] semantics that applications expect from NFS.

In Amazon EFS, write operations are durably stored across Availability Zones in these situations:

# An application performs a synchronous write operation (for example, by calling the <code>open</code> system call with the <code>O_DIRECT</code> flag, or by calling <code>fsync</code>).

# An application closes a file.

Depending on the access pattern, Amazon EFS can provide stronger consistency guarantees than close-to-open semantics. Applications that perform synchronous data access and non-appending writes have read-after-write consistency for data access.
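
The two durability points listed above (an explicit <code>fsync</code> and closing the file) can be sketched as follows. This is a generic POSIX pattern, not EFS-specific code: the function name is hypothetical, and on an EFS mount the path would simply live under the mount point.

```python
import os


def durable_write(path: str, data: bytes) -> None:
    """Write `data` to `path`, forcing it to stable storage before returning."""
    fd = os.open(path, os.O_WRONLY | os.O_CREAT | os.O_TRUNC, 0o644)
    try:
        view = memoryview(data)
        # os.write may write fewer bytes than requested, so loop until done.
        while len(view) > 0:
            written = os.write(fd, view)
            view = view[written:]
        # Synchronous flush: the first durability point described above.
        os.fsync(fd)
    finally:
        # Closing the file: the second durability point described above.
        os.close(fd)


if __name__ == "__main__":
    # Hypothetical path; on an EFS mount this would be under the mount point.
    durable_write("example.txt", b"hello\n")
```

Calling <code>fsync</code> before closing is redundant under the rules above, but it makes the durability point explicit when the file descriptor stays open for further writes.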

=Storage Classes=

==Standard==

The Standard storage class is used to store frequently accessed files.

==Infrequent Access==

The Infrequent Access (IA) storage class is a lower-cost storage class designed for long-lived, infrequently accessed files.

=Permissions=

By default, new Amazon EFS file systems are owned by root:root, and only the root user (UID 0) has read-write-execute permissions.

=Security Group=

One or more security groups per subnet must be specified when creating the file system. <font color=darkgray>TODO</font>.

=File System Size=

See: {{Internal|Amazon_EFS_CSI#Persistent_Volume_Size|Amazon EFS CSI | Persistent Volume Size}}

=EFS Operations=

* [[Amazon_Elastic_File_System_Operations#Create_a_File_System|Create an EFS file system]]

Latest revision as of 23:25, 30 March 2021
