
* [[Infrastructure as Code#Concepts|Infrastructure as Code]]

* [[Infrastructure Concepts]]

* [[Infrastructure Code Testing Concepts#Overview|Infrastructure Code Testing]]

* [[Designing Modular Systems]]

* [[Continuous_Integration#Overview|Continuous Integration]]

* [[Continuous_Delivery#Overview|Continuous Delivery]]

=Overview=

Infrastructure is not something you build and forget: it requires constant change, such as fixing, updating and improving. Infrastructure as Code is a set of technology and engineering practices aimed at delivering [[#Change|change]] more frequently (some would say continuously), quickly and reliably, while improving the overall quality of the system at the same time. Trading speed for quality is a false dichotomy. Used correctly, Infrastructure as Code embeds speed, quality, reliability and compliance into the process of making changes, and changing infrastructure becomes safer. Infrastructure as Code is based on practices from software development, especially [[Software_Testing_Concepts#Test-Driven_Development_.28TDD.29|test driven development]], [[Continuous Integration#Overview|continuous integration]] and [[Continuous Delivery#Overview|continuous delivery]].

The capability to make changes frequently and reliably is correlated with organizational success. Organizations can't choose between being good at change and being good at stability: they tend to be either good at both or bad at both (<font color=darkkhaki>[https://www.amazon.com/Accelerate-Software-Performing-Technology-Organizations-ebook/dp/B07B9F83WM Accelerate] by Dr. Nicole Forsgren, Jez Humble and Gene Kim</font>). Changes include adding new services, such as a new database, upgrades, increasing resources to keep up with load, changing and tuning the underlying application runtimes for diagnosis and performance reasons, and applying security patches. Stability comes from the capability of making changes quickly and easily: unpatched systems are not stable, they are vulnerable.

If you can't fix issues as soon as you discover them, the system is not stable. If you can't recover from failure quickly, the system is not stable. If the changes you make involve considerable downtime, the system is not stable. If changes frequently fail, the system is not stable. Infrastructure as Code practices help teams perform well against the operational metrics described [[Operations#Operational_Performance_Metrics|here]].


* '''Create disposable instances''' that are easy to discard and replace.

* <span id='Minimize_Variation'></span>'''Minimize variation'''. The more similar things are, the easier they are to manage. Aim for your system to have as few '''types of pieces''' as possible, then automatically instantiate those pieces in as many instances as you need, following the [[#Make_Everything_Reproducible|make everything reproducible]] principle.

* <span id='Ensure_that_You_Can_Repeat_Any_Process'></span>'''Ensure that you can repeat any process'''. If you can script a task, script it. If it's worth documenting, it's worth automating. Repeatedly applying the same code safely requires the code to be [[#Idempotency|idempotent]].

* <span id='Keep_Configuration_Simple'></span>'''Keep configuration simple'''. Even if we strive to [[#Minimize_Variation|minimize variation]], there will always be a need to configure the infrastructure types. Configurability should be kept in check: configurable code creates opportunities for inconsistency, and the more configurable a piece of infrastructure code is, the more difficult it is to understand its behavior and to ensure it is tested correctly. As such, minimize the number of configuration parameters. Avoid parameters you "might" need; they can be added later. Prefer simple parameters like numbers, strings, and at most lists and key-value maps. Do not get more complex than that. Avoid boolean parameters that toggle complex behavior on or off.
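
Idempotency is what makes repeating a process safe: running the same step a second time finds the desired state already in place and changes nothing. A minimal sketch in bash, assuming the desired state is a single line in a text file; <code>ensure_line</code> is a hypothetical helper, not part of any tool:

<syntaxhighlight lang='bash'>
# Hypothetical helper: make sure FILE contains LINE.
# First run: appends the line and reports "changed".
# Any later run: finds the line already present and reports "unchanged".
ensure_line() {
  local line="$1" file="$2"
  touch -- "$file"                          # create the file if it is missing
  if grep -qxF -- "$line" "$file"; then     # -x: whole line, -F: literal text
    echo "unchanged"
  else
    printf '%s\n' "$line" >> "$file"
    echo "changed"
  fi
}

config_file="$(mktemp)"
ensure_line "max_connections=100" "$config_file"   # prints: changed
ensure_line "max_connections=100" "$config_file"   # prints: unchanged
</syntaxhighlight>

The same check-before-change structure is what infrastructure tools apply at the scale of whole resources.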

=Change=

High quality systems enable faster change. Many software engineering techniques are about applying change to systems faster, in such a way that those systems do not break. Design your systems, and the operations around them, to enable pushing small changes quickly all the way to where they belong: production.

If you have a choice, choose several smaller changes, each with a reduced [[#Blast_Radius|blast radius]] and each pushed all the way to production, over one larger change. This choice goes well with the practice of [[Designing_Modular_Systems#Overview|modularizing the system]]. Aim to break up a large, significant change into a set of smaller changes that can be delivered one at a time.

The larger disruptions to systems usually occur when too much work is built up locally before pushing. It is tempting to focus on completing the full piece of work, and it is harder to make a small change that only takes you a little further toward the full thing. However, a proper structure based on continuous integration and continuous delivery pipelines encourages this approach, because it allows proving that small, atomic, separate changes work, while at the same time provably moving the system towards its desired end state.

Small changes that are applied in sequence and progressively improve the system are referred to as '''incremental changes''' or '''iterative changes'''.

More ideas: https://medium.com/@kentbeck_7670/bs-changes-e574bc396aaa

=Core Practices=

==Define Everything as Code==

Code can be versioned and compared, and it can benefit from lessons learned in software design patterns, principles and techniques such as [[Software_Testing_Concepts#Test-Driven_Development_.28TDD.29|test driven development]], [[Continuous Integration#Overview|continuous integration]], [[Continuous Delivery#Overview|continuous delivery]] or [[#Refactoring|refactoring]].

Once a piece of infrastructure has been defined as code, many identical or slightly different instances of it can be created, tested and deployed automatically by tools. Instances built by tools are built the same way every time, which makes the behavior of the system predictable. Moreover, everyone can see how the infrastructure has been defined by reviewing the code. Structure and configuration can be automatically audited for compliance.

If multiple people work on the same piece of infrastructure, their changes can be [[Continuous Integration#Overview|continuously integrated]] and then continuously tested and delivered, as described [[#Continuously_Test_and_Deliver|in the next section]].

==Continuously Test and Deliver==

Continuously test and deliver all infrastructure work in progress.

===Infrastructure Code Testing===

Continuously testing [[#Small_Pieces|small pieces]] encourages a modular, loosely coupled design. It also helps you find problems sooner, then quickly iterate, fix and rebuild the problematic code, which yields better infrastructure. Keeping the tests with the code base and continuously exercising them as part of CD runs is referred to as "building quality in" rather than "testing quality in".

{{Internal|Infrastructure Code Testing Concepts#Overview|Infrastructure Code Testing}}

===<span id='Infrastructure_Code_Continuous_Delivery'></span>Continuous Delivery for Infrastructure Code===

{{Internal|Infrastructure_Code_Continuous_Delivery_Concepts#Overview|Continuous Delivery for Infrastructure Code}}

==<span id='Small_Pieces'></span>Build Small, Simple, Loosely Coupled Pieces that Can Be Changed Independently==

{{Internal|Designing Modular Systems#Overview|Designing Modular Systems}}

=Infrastructure Code=

Infrastructure code consists of text files that contain the definitions of the infrastructure elements to be created, updated or deleted, and their [[#Configuration_as_Code|configuration]]. As such, infrastructure code is sometimes referred to as "infrastructure definition". Infrastructure code elements map directly to [[Infrastructure_Concepts#Infrastructure_Resources|infrastructure resources]] and options exposed by the platform API. The code is fed to a tool, which either creates new instances or modifies the existing infrastructure to match the code. Different tools have different names for their source code: Terraform code (<code>.tf</code> files), CloudFormation [[AWS_CloudFormation_Concepts#Template|templates]], Ansible [[Ansible_Concepts#Playbooks_and_Plays|playbooks]], etc. One of the essential characteristics of infrastructure code is that the files it is declared in can be viewed, edited and stored independently of the tool that uses them to create the infrastructure: the tool must not be required to review or modify the files. A tool that uses external code for its specifications does not constrain its users to a specific workflow: they can instead use industry-standard source control systems, editors, CI servers and automated testing frameworks.

==Declarative Infrastructure Languages==

Declarative code defines the desired state of the infrastructure and relies on the tool to handle the logic required to reach that state, whether the infrastructure element does not exist at all and needs to be created, or it exists and its state just needs to be adjusted. In other words, declarative code specifies what you want, without specifying how to make it happen. As a result, the code is cleaner and clearer. Declarative code is closer to configuration than to programming. In addition to being declarative, many infrastructure tools use their own [[Domain Specific Languages|DSL]] instead of a general purpose declarative language like YAML.

[[Terraform_Concepts#Overview|Terraform]], [[AWS CloudFormation Concepts#Overview|CloudFormation]], [[Ansible_Concepts#Overview|Ansible]], Chef and Puppet are based on this concept and use tool-specific declarative languages based on DSLs. That makes it possible to write code that refers to infrastructure platform-specific domain elements like virtual machines, subnets or disk volumes.
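
The division of labor described above can be illustrated with a toy reconciliation step: the desired state is declared, and the logic that compares it with the actual state and derives the actions lives in the "tool". This is a simplified sketch, not how Terraform or any other tool is actually implemented; resources are modeled as hypothetical name/size pairs in bash associative arrays (bash 4.3 or later is required for <code>local -n</code>):

<syntaxhighlight lang='bash'>
# Toy "tool": compare the desired state with the actual state and print
# the actions needed to converge. Resource names and sizes are hypothetical.
plan() {
  local -n _desired=$1 _actual=$2   # namerefs to the caller's associative arrays
  local name
  for name in $(printf '%s\n' "${!_desired[@]}" | sort); do
    if [[ -z "${_actual[$name]+set}" ]]; then
      echo "create $name ${_desired[$name]}"
    elif [[ "${_actual[$name]}" != "${_desired[$name]}" ]]; then
      echo "update $name ${_actual[$name]} -> ${_desired[$name]}"
    fi
  done
  for name in $(printf '%s\n' "${!_actual[@]}" | sort); do
    if [[ -z "${_desired[$name]+set}" ]]; then
      echo "delete $name"
    fi
  done
}

# The "code": only what we want, not how to get there.
declare -A desired=([web]=t3.small [db]=t3.large)
declare -A actual=([web]=t3.micro [cache]=t3.small)

plan desired actual
# create db t3.large
# update web t3.micro -> t3.small
# delete cache
</syntaxhighlight>

Running <code>plan</code> against an actual state that already matches the desired state prints nothing, which is the idempotent behavior expected from a declarative tool.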

Most tools support modularization of declarative code through [[#Module|modules]].

Also see: {{Internal|Kubernetes_Concepts#Declarative_versus_Imperative_Approach|Kubernetes Concepts | Declarative versus Imperative Approach}}

==Imperative Languages for Infrastructure==

Declarative code becomes awkward to use when you want different results depending on circumstances. Needing a conditional is one such situation; needing to repeat the same action a number of times is another. Because of this, most declarative infrastructure tools have extended their languages with imperative programming capabilities. Another category of tools, such as [[Pulumi_Concepts#Overview|Pulumi]] or [[AWS CDK Concepts#Overview|AWS CDK]], use general-purpose programming languages to define infrastructure.

One of the biggest advantages of using a general-purpose programming language is its ecosystem of tools, especially support for testing and refactoring.

==Mixing Declarative and Imperative Code==

It is not yet clear whether mixing declarative and imperative code when building different parts of an infrastructure system is good or bad, nor whether one style or the other should be mandated. What is clearer is that declarative and imperative code must not be mixed when implementing the same component. A better approach is to define concerns clearly and address each concern with the best tool, without mixing them. One sign that concerns are being mixed is extending a declarative syntax like YAML to add conditionals and loops. Another sign is mixing configuration data into procedural code: this indicates that we're mixing what we want with how to implement it. When this happens, the code should be split into separate concerns.

==Infrastructure Code Management==

Infrastructure code should be treated like any other code. It should be designed and managed so that it is easy to understand and maintain. Code quality practices, such as code reviews, automated testing and [[Software_Architecture#Coupling_and_Cohesion|improving cohesion and reducing coupling]], should be followed. Infrastructure code can double as documentation in some cases, as it is an accurate and up-to-date record of your system. However, infrastructure code is rarely the only documentation required. High-level documentation is necessary to understand concepts, context and strategy.

=Primitives=

==Stack==

<font color=darkkhaki>Reconcile IaC stack and Pulumi stack.</font>

A stack is a collection of [[Infrastructure_Concepts#Infrastructure_Resources|infrastructure resources]] that are defined, changed and managed together as a unit, using a tool like [[Terraform_Concepts#Overview|Terraform]], [[Pulumi_Concepts#Overview|Pulumi]], [[AWS CloudFormation Concepts#Overview|CloudFormation]] or [[Ansible_Concepts#Overview|Ansible]]. All elements declared in the stack are provisioned and destroyed with a single command.

A stack is defined using [[#Infrastructure_Code|infrastructure source code]]. The stack source code, usually living in a [[#Stack_Project|stack project]], is read by the stack management tool, which uses the cloud platform APIs to instantiate or modify the elements defined by the code. The resources in a stack are provisioned together to create a [[#Stack_Instance|stack instance]]. The time to provision, change and test a stack depends on the entire stack, and this should be a consideration when deciding how to combine infrastructure elements into stacks. This subject is addressed in the [[#Stack_Patterns|Stack Patterns]] section below. CloudFormation and Pulumi come with very similar concepts, also named [[AWS_CloudFormation_Concepts#Stack|stack]].

The infrastructure stack is an example of an "architectural quantum", defined by [[EvoA|Ford, Parsons and Kua]] as "an independently deployable component with high functional [[Designing_Modular_Systems#Cohesion|cohesion]], which includes all the structural elements required for the system to function correctly". In other words, a stack is a component that can be pushed to production on its own.

A stack can be composed of [[#Stack_Components|components]], and it may itself be a component used by other stacks.

Code that builds servers should be decoupled from code that builds stacks. A stack should specify which servers to create and pass the information about the environment to a server configuration tool.

===Stack Project===

A project is a collection of code used to build a discrete component of the system. In this case, the component is a stack <font color=darkkhaki>or a set of stacks</font>. A stack project is the source code that declares the infrastructure resources that make up a stack. A stack project should define the shape of the stack, which is consistent across instances. The stack may be declared in one or more source files.

The project may also include other types of code besides infrastructure declarations: tests, configuration values and utility scripts. Files specific to a project live with the project. It is a good idea to establish and popularize a strategy for organizing these files consistently across similar projects. Generally speaking, any supporting code for a specific project should live with that project's code:

<font size=-1>

├─ [[#Source_Code|src]]

├─ [[#Tests|test]]

├─ [[#Configuration|environments]]

├─ [[#Delivery_Configuration|pipeline]]

└─ build.sh

</font>

====Source Code====

The infrastructure code is central to a stack project. When a stack consists of multiple resources, aim to group together, in the same files, the code for resources that combine to assemble a domain concept, instead of organizing the code along the lines of functional elements. For example, you may want an "application cluster" file that includes the container runtime declaration, networking elements, firewall rules and security policies, instead of a set of "servers", "network", "firewall-rules" and "security-policies" files. It is often easier to write, understand, change and maintain code for related functional elements if they are all declared in the same file. This follows the design principle of [[Designing_Modular_Systems#Design_Components_around_Domain_Concepts.2C_not_Technical_Ones|designing around domain concepts rather than technical ones]].

====Tests====

The project should include the tests that ensure the stack works correctly. Tests run at different phases, for example as [[Infrastructure_Code_Testing_Concepts#Offline_Stack_Tests|offline]] and [[Infrastructure_Code_Testing_Concepts#Online_Stack_Tests|online]] tests. There are cases, however, when integration tests do not naturally belong with the stack and live outside the stack project. For more details, see: {{Internal|Infrastructure_Code_Testing_Concepts#Test_Code_Location|Infrastructure Code Testing Concepts | Test Code Location}}

====Configuration====

This section of the project contains [[#Stack_Configuration_File|stack configuration files]] and application configuration (Helm files, etc.). There are two approaches to storing stack configuration files:

* A component project contains the configuration for the multiple [[#Environment|environments]] in which that component is deployed. This approach is not very clean, because it mixes generalized, reusable code with details of specific instances. On the other hand, it's arguably easier to trace and understand configuration values when they're close to the project they relate to, rather than mingled in a monolithic configuration project.

* A separate project contains the configuration for all the stacks, organized by environment.

====Delivery Configuration====

Configuration used to create delivery stages in a delivery pipeline tool.

===Stack Instance===

A stack definition can be used to provision more than one stack instance. A stack instance is a particular embodiment of a [[#Stack|stack]], containing actual live infrastructure resources. Changes should not be applied directly to the stack instance's resources, at the risk of creating [[Infrastructure_Concepts#Configuration_Drift|configuration drift]], but to the stack code, and then applied via the infrastructure tool. The stack tool reads the stack definition and, using the platform APIs, ensures that the stack elements exist and that their state matches the desired state. If the tool is run without any changes to the code, it should [[#Idempotency|leave the stack instance unmodified]]. This process is referred to as "applying" the code to an instance.

====Reusable Stack====

It is convenient to create multiple [[#Stack_Instance|stack instances]] based on a single stack definition. This pattern, named "reusable stack", encourages code reuse and helps keep the resulting stack instances in sync. A fix or an improvement required by a particular stack instance can be applied to the reusable stack definition the instance was created from, and then applied to all stack instances created from the same definition.

The reusable stack pattern is only possible if the infrastructure tool supports modules.
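
A minimal sketch of the instance side of a reusable stack: the same stack code is applied once per instance, and only the configuration differs. It assumes Terraform, stack code in the <code>src</code> directory of the stack project, and a hypothetical pair of files per instance in <code>environments</code>: <code>INSTANCE.tfvars</code> with parameter values and <code>INSTANCE.backend.hcl</code>, a partial backend configuration that keeps each instance's state separate. The instance names are hypothetical and the commands are printed rather than executed:

<syntaxhighlight lang='bash'>
# Print the commands that apply the shared stack code in ./src to one instance.
# Absolute paths avoid any ambiguity about how -chdir resolves relative paths.
instance_commands() {
  local instance="$1"
  local cfg="$PWD/environments/$instance"
  echo "terraform -chdir=src init -input=false -reconfigure -backend-config=$cfg.backend.hcl"
  echo "terraform -chdir=src apply -input=false -auto-approve -var-file=$cfg.tfvars"
}

# Same code, different configuration: one pass per stack instance.
for instance in test staging production; do
  instance_commands "$instance"
done
</syntaxhighlight>

Fixing the code in <code>src</code> once and re-running the loop propagates the fix to every instance, which is the benefit the pattern is after.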

===<span id='Dependency'></span>Stack Dependencies===

Stacks consume resources provided by other stacks. If stack A creates resources that stack B depends on, then stack A is a <span id='Provider'></span>'''provider''' for stack B, and stack B is a <span id='Consumer'></span>'''consumer''' of stack A. Stack B has dependencies created by stack A. A given stack may be both a provider and a consumer, consuming resources from another stack and providing resources to other stacks. In testing, dependencies are often [[Infrastructure_Code_Testing_Concepts#Online_Stack_Tests|simulated with test doubles]]. Dependencies are [[#Dependency_Discovery|discovered]] via different mechanisms.
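
Provider and consumer relationships form a dependency graph, and a provider must be provisioned before its consumers. A minimal sketch that derives a provisioning order with the POSIX <code>tsort</code> utility; the stack names and dependencies are hypothetical, and real tools discover dependencies through their own mechanisms:

<syntaxhighlight lang='bash'>
# Dependency pairs, one per line: "provider consumer"
# (the consumer uses resources the provider creates). Stack names are hypothetical.
dependencies='network database
network app-cluster
database app-cluster
app-cluster monitoring'

# tsort prints the stacks in an order where every provider precedes its consumers.
# Cycles are reported on standard error (GNU tsort also exits non-zero).
provisioning_order() {
  printf '%s\n' "$1" | tsort
}

provisioning_order "$dependencies"
# network
# database
# app-cluster
# monitoring
</syntaxhighlight>

This graph admits only one valid order, so the output does not depend on the <code>tsort</code> implementation; reversing the list gives a safe teardown order.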

===Stack Integration Point===

Stacks enable modularization by defining their integration points with the rest of the system. The stack is wired into the rest of the system via these integration points.

===Stack Management Tools===

* [[Terraform_Concepts#Overview|Terraform]]

* [[Terragrunt_Concepts#Overview|Terragrunt]]

* [[AWS_CloudFormation_Concepts#Overview|CloudFormation]]

* [[Pulumi_Concepts#Overview|Pulumi]]

* [[Azure Resource Manger Concepts#Overview|Azure Resource Manager]]

* [[Google Cloud Deployment Manager Concepts#Overview|Google Cloud Deployment Manager]]

* [[OpenStack_Heat#Overview|OpenStack Heat]]

===Stack Patterns===

====Monolithic Stack====

A monolithic stack contains too many infrastructure elements, making it difficult to manage the stack well. It is very rare for all the infrastructure elements of an entire [[#Environment|environment]] <font color=darkkhaki>or system</font> to need to be managed as a unit. As such, a monolithic stack is an antipattern. A sign that a stack has become monolithic is multiple teams making changes to it at the same time.

====Application Group Stack====

An application group stack includes the infrastructure for multiple related applications or services, which is provisioned and managed as a unit. This pattern makes sense when a single team owns the infrastructure and the deployment of all the pieces of the application group. An application group stack can align the boundaries of the stack to the team's responsibilities. The team needs to manage the risk to the entire stack for every change, even if only one element is changing. This pattern is inefficient if some parts of the stack change more frequently than others.

====Service Stack====

A service stack declares the infrastructure for an individual application component (service). This pattern applies to [[Microservices#Overview|microservices]]. It aligns the infrastructure boundaries to the software that runs on it. This alignment limits the [[#Blast_Radius|blast radius]] of a change to one service, which simplifies the process of scheduling changes. The service team can own the infrastructure their service requires, and that encourages team autonomy. Each service has a separate [[#Stack_Project|infrastructure code project]].

If more than one service uses similar infrastructure, packaging the common infrastructure elements in shared modules is a better option than duplicating infrastructure code in each service stack.

====Micro Stack====

The micro stack pattern divides the infrastructure for a single service across multiple stacks (e.g. separate stacks for networking, servers and database). The separation lines follow the lifecycle of the underlying [[#State_Continuity|managed state]] of each infrastructure element. Having multiple small stacks requires an increased focus on [[#Stack_Integration_Point|integration points]].

===Stack Configuration===

The idea behind automated provisioning and management of multiple similar [[#Stack_Instance|stack instances]] is that the infrastructure resources are specified in a [[#Reusable_Stack|reusable stack]], which is then applied to create similar, yet slightly different, stack instances, depending on their intended use. The difference comes from the need for customization: instances might be configured with different names, different resource settings, or different security constraints.

The typical way of dealing with this situation is to 1) use parameters in the stack code and 2) pass values for those parameters as configuration to the tool that applies the stack. One important principle to follow when exposing stack configuration is to [[#Keep_Configuration_Simple|keep configuration simple]].

Configuration can be passed as [[#Command_Line_Arguments|command line arguments]], [[#Environment_Variables|environment variables]], the content of a [[#Stack_Configuration_File|configuration file]], a special [[#Wrapper_Stack|wrapper stack]] or [[#Pipeline_Stack_Parameters|pipeline parameters]], or the infrastructure tool can read it from [[#Parameter_Registry|a key/value store or another type of central registry]].

<font color=darkkhaki>If you need to deal with external configuration from pipeline scripts, see [[IaC]] Chapter 19. Delivering Infrastructure Code → Using Scripts to Wrap Infrastructure Tools → Assembling Configuration Values.</font>

====Command Line Arguments====

The configuration parameters can be passed to the infrastructure tool as command line arguments when the tool is executed. Unless used for experimentation, this is an antipattern, as it requires human intervention on each run, which is what we are trying to move away from. Besides, manually entered parameters are not suitable for automated CD pipelines.

====Scripted Command Line Arguments====

A variation on this theme is to write a wrapper script around the tool that contains and groups the command line parameters, and to run only the wrapper script, without any manually entered parameters. This pattern is not suitable for security-sensitive configuration, as those values must not be hardcoded in scripts. Also, maintaining scripts tends to become messy over time.

More ideas on how to use scripts in automation are available in [[IaC|Infrastructure as Code: Dynamic Systems for the Cloud Age]] by Kief Morris, Chapter 7. Configuring Stack Instances → Patterns for Configuring Stacks → Pattern: Scripted Parameters.
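
A minimal sketch of the scripted-parameters pattern, assuming Terraform as the infrastructure tool; the instance names and the <code>instance_name</code> and <code>server_count</code> variables are hypothetical. Only non-sensitive values are kept in the script, per the caveat above, and the command is printed rather than executed:

<syntaxhighlight lang='bash'>
# Parameters for each stack instance live in the script, so nobody types them by hand.
apply_instance() {
  local instance="$1"
  local -a args
  case "$instance" in
    test)       args=(-var "instance_name=test"       -var "server_count=1") ;;
    production) args=(-var "instance_name=production" -var "server_count=4") ;;
    *)          echo "unknown stack instance: $instance" >&2; return 1 ;;
  esac
  # Drop the leading 'echo' to run the command instead of printing it.
  echo terraform apply -input=false "${args[@]}"
}

apply_instance test
# terraform apply -input=false -var instance_name=test -var server_count=1
</syntaxhighlight>

Keeping the arguments in a bash array, rather than in a single string, preserves each value as one argument even if it contains spaces.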

====Environment Variables====

If the infrastructure tool allows it, the parameter values can be set as environment variables. This implies that the tool automatically resolves the environment variables referenced by the infrastructure code to their values. If that is not the case, a wrapper script can read the values from the environment and convert them to command line arguments for the infrastructure tool.
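
Terraform is an example of a tool with native support: it reads the value of an input variable <code>NAME</code> from an environment variable called <code>TF_VAR_NAME</code>. For tools without such support, the wrapper can do the translation. A minimal sketch, assuming a hypothetical <code>STACK_</code> prefix convention and a tool that accepts <code>-var name=value</code> arguments:

<syntaxhighlight lang='bash'>
# Collect every shell variable named STACK_<name> and emit the arguments
# "-var" and "<name>=<value>", one per line, in a stable (sorted) order.
env_to_args() {
  local var
  for var in $(compgen -v STACK_ | sort); do
    printf '%s\n' "-var" "${var#STACK_}=${!var}"
  done
}

export STACK_instance_name=test STACK_server_count=2
mapfile -t args < <(env_to_args)   # one array element per emitted line
echo terraform apply "${args[@]}"
# terraform apply -var instance_name=test -var server_count=2
# (assuming no other STACK_ variables are set)
</syntaxhighlight>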

The values of all environment variables can be collected into a file that can be loaded into the environment as follows:

<syntaxhighlight lang='bash'>
# set -a exports every variable assigned while sourcing, so child processes such as the infrastructure tool see them
set -a; source ./values.env; set +a
</syntaxhighlight>

This is a variation of the [[#Stack_Configuration_File|Stack Configuration File]] pattern, introduced below. However, using environment variables directly in the stack code arguably couples the code too tightly to the runtime environment. This approach is also not appropriate when the configuration is security-sensitive, as setting secrets in environment variables may expose them to other processes that run on the same system.

====Stack Configuration File====

Declare the parameter values for each stack instance in a corresponding configuration file (the "stack configuration file") in the [[#Configuration|configuration section]] of the [[#Stack_Project|stack project]]. The stack configuration file is committed to the source repository along with the rest of the stack project. Also see [[#Configuration_as_Code|Configuration as Code]] below.

This pattern comes with a few advantages:

* It enforces the separation of configuration from the stack code.

* It helps enforce consistent logic in how different instances are created, because configuration files can't include logic.

* It makes obvious which values are used for a given environment, by scanning just a small amount of content (the files can even be diff-ed).

* It provides a history of changes.

This approach alone is not appropriate when the configuration is security-sensitive, as keeping secrets in source code must be avoided. The pattern must be combined with a method to manage security-sensitive configuration.

Notable examples of stack configuration files are Pulumi's [[Pulumi_Concepts#Stack_Settings_File|stack settings files]].

One variation of this pattern involves declaring default values in the stack code and then "overlaying" the values from the configuration files, where the values coming from the configuration files take precedence. This establishes a '''configuration hierarchy''' and an inheritance mechanism. <font color=darkkhaki>More on configuration layout and hierarchies in [[IaC|Infrastructure as Code: Dynamic Systems for the Cloud Age]] by Kief Morris, Chapter 7. Configuring Stack Instances → Patterns for Configuring Stacks → Pattern: Stack Configuration Files → Implementation.</font>
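
A minimal sketch of such a configuration hierarchy in bash (4 or later, for associative arrays). The parameter names, the default values and the <code>key=value</code> file format are assumptions for illustration: the defaults stand in for values declared in the stack code, and the values read from one instance's stack configuration file override them:

<syntaxhighlight lang='bash'>
# Level 1: defaults declared with the stack code (hypothetical parameters).
declare -A config=(
  [server_count]=1
  [instance_type]=t3.small
  [region]=eu-west-1
)

# Level 2: overlay a stack configuration file made of "key=value" lines.
# Values from the file take precedence; blank lines and '#' comments are skipped.
overlay_config() {
  local file="$1" key value
  while IFS='=' read -r key value || [[ -n "$key" ]]; do   # also reads a last line without newline
    [[ -z "$key" || "$key" == \#* ]] && continue
    config["$key"]="$value"                                 # everything after the first '=' is the value
  done < "$file"
}

production_cfg="$(mktemp)"
printf '%s\n' '# production instance' 'server_count=4' 'region=eu-central-1' > "$production_cfg"
overlay_config "$production_cfg"

for key in $(printf '%s\n' "${!config[@]}" | sort); do
  echo "$key=${config[$key]}"
done
# instance_type=t3.small
# region=eu-central-1
# server_count=4
</syntaxhighlight>

More levels, for example a shared file for all production-like instances, can be added by calling <code>overlay_config</code> once per level, from the most general to the most specific.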

====Wrapper Stack====

The code that describes the infrastructure, which usually changes more slowly, is written as a stack, or a [[#Reusable_Stack|reusable stack]]. The configuration, which changes more often, both over time and across stack instances, is written in a "wrapper stack". There is one wrapper stack per stack instance. Each wrapper stack defines the configuration values for its own stack instance, in the language of the infrastructure tool, and all wrapper stacks import the shared reusable stack. Each stack instance has a separate [[#Stack_Project|infrastructure project]], which contains the corresponding wrapper stack.

This pattern is only possible if the infrastructure tool supports modules or libraries. It also adds an extra layer of complexity between the stack instance and the code that defines it: on one level there is the stack project that contains the wrapper stack, and on the other level there is the component that contains the code for the stack. Since the wrapper stack is written in the same language as the reusable stack, it is technically possible to add logic to the wrapper stack, and people will probably be tempted to do so. This should be forbidden, because custom stack instance code makes the codebase inconsistent and hard to maintain.

This pattern cannot be used to manage security-sensitive configuration, because the wrapper stack is stored in the code repository.
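The following is a minimal Python sketch of the pattern. Plain functions stand in for what would, in practice, be a Terraform module or a Pulumi component. `Resource`, `reusable_app_stack` and the wrapper functions are hypothetical names. In a real codebase, each wrapper would live in its own project and import the shared stack; they share one file here only to keep the example self-contained. Note that the wrappers only pass values and contain no logic.

<syntaxhighlight lang='python'>
from dataclasses import dataclass, field


@dataclass
class Resource:
    kind: str
    name: str
    properties: dict = field(default_factory=dict)


# --- Shared reusable stack (a module or library in a real codebase) ---
def reusable_app_stack(*, environment: str, instance_count: int,
                       instance_size: str) -> list:
    """Declare the same resource structure for every instance; only values vary."""
    network = Resource("network", f"{environment}-net")
    servers = [
        Resource("server", f"{environment}-app-{i}",
                 {"size": instance_size, "network": network.name})
        for i in range(instance_count)
    ]
    return [network, *servers]


# --- Wrapper stacks, one per stack instance (each in its own project) ---
# They only supply configuration values and deliberately contain no logic.
def staging_wrapper() -> list:
    return reusable_app_stack(environment="staging", instance_count=1,
                              instance_size="small")


def production_wrapper() -> list:
    return reusable_app_stack(environment="production", instance_count=3,
                              instance_size="large")
</syntaxhighlight>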

====Pipeline Stack Parameters====

Define parameter values for each stack instance in the configuration of the [[Continuous_Delivery#Infrastructure_Delivery_Pipeline|delivery pipeline]] for the stack instance.

This is arguably an antipattern. By defining stack instance variables in the pipeline configuration, the configuration is coupled with the delivery process. If the coupling is too tight, and no other way to parameterize stack instance creation exists, it may become hard to develop and test stack code outside the pipeline. It is better to keep the logic and configuration in layers called by the pipeline, rather than in the pipeline configuration.

====<span id='Parameter_Registry'></span>Stack Parameter Registry====

A stack parameter registry, which is a specific use case for a [[Infrastructure_Concepts#Configuration_Registry|configuration registry]], stores parameter values in a central location, rather than in the stack code. The infrastructure tool retrieves the relevant values when it applies the stack code to a stack instance.

To avoid coupling the infrastructure tool directly with the configuration registry, a script or another piece of logic can fetch the configuration values from the registry and pass them to the infrastructure tool as normal (command line) parameters. This increases the testability of the stack code.
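The script-based approach might look like the following Python sketch. A dictionary stands in for the registry, whose real client API is not shown. The `/stacks/<stack>/<instance>/` key layout is an assumed convention. `stack-tool` and its `--param` flag are a hypothetical command; substitute the actual tool's syntax. Because the stack code only ever sees ordinary parameters, it can also be tested with values supplied by hand.

<syntaxhighlight lang='python'>
import shlex

# Stand-in for a configuration registry: hierarchical keys mapped to values.
# A real registry would be queried through its own client or API.
REGISTRY = {
    "/stacks/app/staging/instance_count": "1",
    "/stacks/app/staging/instance_size": "small",
    "/stacks/app/production/instance_count": "3",
    "/stacks/app/production/instance_size": "large",
}


def fetch_parameters(registry: dict, stack: str, instance: str) -> dict:
    """Collect every value published under /stacks/<stack>/<instance>/."""
    prefix = f"/stacks/{stack}/{instance}/"
    return {key[len(prefix):]: value
            for key, value in registry.items() if key.startswith(prefix)}


def build_apply_command(params: dict) -> list:
    """Pass parameters to the infrastructure tool as plain command-line arguments.

    'stack-tool' and '--param' are hypothetical; use your tool's actual syntax.
    """
    command = ["stack-tool", "apply"]
    for name in sorted(params):
        command += ["--param", f"{name}={params[name]}"]
    return command


if __name__ == "__main__":
    params = fetch_parameters(REGISTRY, "app", "production")
    # A real wrapper would execute the command, e.g. with subprocess.run(..., check=True).
    print(shlex.join(build_apply_command(params)))
</syntaxhighlight>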

===Stack Modularization===

See [[#Stack_Components|stack components]] and [[#Stacks_as_Components|stacks as components]] below.

==Environment==

An environment is a collection of operationally-related applications and infrastructure, organized around a particular purpose, such as supporting a specific client (segregation) or a specific testing phase, providing service in a geographical region, or providing high availability or scalability. Most often, multiple environments exist, each running an instance of the same system, or group of applications. The classical use case for multiple environments is to support a progressive software release process ("path to production"), where a given build of an application is deployed in turn to the development, test, staging and production environments.

The environments should be defined as [[#Infrastructure_Code|code]] too, which increases consistency across environments. An environment's infrastructure should be defined in a [[#Stack|stack]] or a set of stacks; see [[#Reusable_Stack_to_Implement_Environments|Reusable Stack to Implement Environments]] below. Defining multiple environments in a single stack is an antipattern and should be avoided, for the same reasons presented in the [[#Monolithic_Stack|Monolithic Stack]] section.

Copying and pasting the environment stack definition into multiple projects, each corresponding to an individual environment, is also an antipattern, because the infrastructure code for what are supposed to be similar environments will soon get out of sync, and the environments will start to suffer from [[Infrastructure_Concepts#Configuration_Drift|configuration drift]]. In the rare cases where you want to maintain and change different instances and aren't worried about code duplication or losing consistency, copy-and-paste might be appropriate.

===<span id='Reusable_Stack_to_Implement_Environments'></span>Environments as Reusable Stack Instances===

A single [[#Stack_Project|project]] is used to define the generic structure of an environment, as a [[#Reusable_Stack|reusable stack]], which is then used to manage a separate [[#Stack_Instance|stack instance]] for each environment.

When a new environment is needed, for example when a new customer signs on, the infrastructure team uses the environment's reusable stack to create a new instance of the environment. When a fix or improvement is applied to the codebase, the modified stack is applied to a test environment first, and then it is rolled out to all existing customers. This pattern is appropriate for delivering changes to multiple production environments. However, if each environment is heavily customized, the pattern is not applicable.

<font color=darkkhaki>If the environment is large, describing the entire environment in a single stack might start to resemble a [[Infrastructure_as_Code_Concepts#Monolithic_Stack|monolithic stack]], which is an antipattern. In this situation, the environment definition should be broken down into a set of [[#Application_Group_Stack|application group]], [[#Service_Stack|service]] or [[#Micro_Stack|micro]] stacks. TO BE CONTINUED. </font>

===Managing Differences between Environments===

Naturally, there are differences between similar environments, if only in naming. However, differences usually go beyond names and extend to resource configuration, security configuration, etc. In all these cases, the differences should surface as parameters provided to the infrastructure tool when creating an environment. This approach is described in the [[#Wrapper_Stack|Wrapper Stack]] section.

=Modular Infrastructure=

Most of the concepts and techniques used to [[Designing_Modular_Systems#Modular_Infrastructure|design modular software systems]] apply to infrastructure code. <font color=darkkhaki>Explain [[#Module|modules]] vs. [[#Library|libraries]].</font>

==<span id='Module'></span>Modules==

Modules are a mechanism to reuse [[#Declarative_Infrastructure_Languages|declarative code]]. Modules are components that can be [[Designing_Modular_Systems#Reuse|reused]], [[Designing_Modular_Systems#Composition|composed]], independently [[Designing_Modular_Systems#Testability|tested]] and [[Designing_Modular_Systems#Sharing|shared]]. CloudFormation has [[AWS_CloudFormation_Concepts#Nested_Stack|nested stacks]] and Terraform has [[Terraform_Concepts#Module|modules]]. With a declarative language, complex logic is discouraged, so declarative code modules work best for defining infrastructure components that don't vary very much. A pattern that fits well with declarative languages is the [[#Facade|facade component]].

==<span id='Library'></span>Libraries==

Libraries are a mechanism to reuse [[#Imperative_Languages_for_Infrastructure|imperative code]]. Libraries, like modules, are components that can be [[Designing_Modular_Systems#Reuse|reused]], [[Designing_Modular_Systems#Composition|composed]], independently [[Designing_Modular_Systems#Testability|tested]] and [[Designing_Modular_Systems#Sharing|shared]]. Unlike modules, libraries can include more complex logic that dynamically provisions infrastructure, thanks to the capabilities of the imperative language they're written in. [[Pulumi_Concepts#Modularization|Pulumi]] supports modular code by using the modularization features of the underlying programming language.

==<span id='Stack_Component'></span><span id='Component'></span>Stack Components==

Stack components are the smaller, [[Designing_Modular_Systems#Cohesion|high-cohesion pieces]] that [[#Stack|stacks]] are broken into, to achieve [[Designing_Modular_Systems#Modularization_Benefits|the benefits of modularization]]. While modularizing a stack, also be aware of the [[Designing_Modular_Systems#Modularization_Downsides|dangers of modularization]]. Depending on the infrastructure language, the pieces can be [[#Module|modules]] or [[#Library|libraries]].

===<span id='Facade'></span>Facade Component===

A facade component creates a simplified interface to a single resource from the infrastructure tool language or the infrastructure platform (when more than one resource is involved, see [[#Bundle_Component|bundle component]] below). The component exposes fewer parameters to the calling code. It passes those parameters to the underlying resource, and hardcodes values for the other parameters the resource needs. The facade component simplifies and standardizes a common use case for an infrastructure resource, so the code that uses it should be simpler and easier to read. The facade limits how you can use the underlying resource, which can be beneficial, but it also limits flexibility. As with any extra layer of code, it adds overhead to maintaining, debugging and improving the code.

This pattern is appropriate for declarative infrastructure languages, and less so for imperative infrastructure languages.

Care should be taken so that this pattern does not become an "obfuscation component" pattern, which only adds a layer of code without simplifying anything or adding any particular value.
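As a rough illustration, the Python sketch below models a facade over a storage bucket. `storage_bucket` is a hypothetical stand-in for a low-level resource that exposes every option. The hardcoded values (encryption, versioning, a 90-day lifecycle and the default region) are assumed organizational standards, not recommendations. Callers of `private_bucket` only choose a name and, optionally, a region.

<syntaxhighlight lang='python'>
from typing import Optional


def storage_bucket(*, name: str, region: str, versioning: bool,
                   encryption: str, public_access: bool,
                   lifecycle_days: Optional[int]) -> dict:
    """Hypothetical low-level resource that exposes every option."""
    return {"type": "storage_bucket", "name": name, "region": region,
            "versioning": versioning, "encryption": encryption,
            "public_access": public_access, "lifecycle_days": lifecycle_days}


def private_bucket(name: str, region: str = "eu-west-1") -> dict:
    """Facade: exposes two parameters and hardcodes the (assumed)
    organizational standards for everything else."""
    return storage_bucket(name=name, region=region, versioning=True,
                          encryption="aes256", public_access=False,
                          lifecycle_days=90)
</syntaxhighlight>

If callers routinely need to override the hardcoded values, the facade is probably hiding too much and drifting toward the "obfuscation component" described above.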

===Bundle Component===

A bundle component declares a highly cohesive collection of interrelated infrastructure resources behind a simplified interface. These resources are usually centered around a core resource. The bundle component is useful for capturing knowledge about the elements needed and how to wire them together for a common purpose.

This pattern is appropriate for declarative infrastructure languages, and when the set of resources involved is fairly static, that is, it does not vary much across use cases. If you find that you need to create different secondary resources inside the component depending on usage, it's probably better to create several components, one for each use case. With imperative languages, this pattern is known as an [[#Infrastructure_Domain_Entity|infrastructure domain entity]].
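A bundle can be sketched in Python as a function that always returns the same fixed set of resources, wired around a core database. The resource types, the PostgreSQL port 5432 and the 14-day backup retention are illustrative assumptions. The function contains no conditionals. Because the set of resources never changes, the bundle remains a good fit for declarative languages.

<syntaxhighlight lang='python'>
def app_database_bundle(name: str, subnet_ids: list, app_security_group: str) -> dict:
    """Bundle: a core database plus the secondary resources always wired to it."""
    subnet_group = {"type": "db_subnet_group", "name": f"{name}-subnets",
                    "subnets": list(subnet_ids)}
    firewall = {"type": "security_group", "name": f"{name}-db-sg",
                # Only the application's security group may reach the database.
                "ingress": [{"port": 5432, "from": app_security_group}]}
    database = {"type": "database", "name": name, "engine": "postgres",
                "subnet_group": subnet_group["name"],
                "security_groups": [firewall["name"]]}
    backups = {"type": "backup_plan", "name": f"{name}-backups",
               "target": database["name"], "retention_days": 14}
    return {"database": database, "subnet_group": subnet_group,
            "firewall": firewall, "backups": backups}
</syntaxhighlight>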

====Spaghetti Component====

An attempt to implement an [[#Infrastructure_Domain_Entity|infrastructure domain entity]] with a declarative language is called a "spaghetti module". According to [[IaC|Kief Morris]], "a spaghetti module is a bundle module that wishes it was a domain entity but descends into madness thanks to the limitations of its declarative language". Many conditionals in declarative code, or difficulty testing the component in isolation, are signs that you have a spaghetti component. Most spaghetti components are the result of pushing declarative code to implement dynamic logic.

===Infrastructure Domain Entity===

The infrastructure domain entity is a component that implements a high-level stack component by combining multiple lower-level infrastructure resources. The component is similar to a [[#Bundle_Component|bundle component]], but it creates the infrastructure resources dynamically, instead of relying on declarative composition. Infrastructure domain entities are implemented in imperative languages.

An example of a high-level concept that could be implemented as an infrastructure domain entity is the infrastructure needed to run an application.

The domain entity is often part of an [[#Abstraction_Layer|abstraction layer]] that emerges when attempting to build infrastructure based on higher-level requirements. Usually, an infrastructure platform team builds components that other teams use to assemble stacks.
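The application example could be sketched in Python as follows. The `AppRequirements` fields, the assumed capacity of 500 requests per second per server, and the minimum of two servers are all hypothetical. Unlike the bundle, the entity uses ordinary logic to decide how many servers to create and which optional resources to add. This is the kind of dynamic behavior that turns declarative code into a spaghetti component.

<syntaxhighlight lang='python'>
from dataclasses import dataclass


@dataclass
class AppRequirements:
    """High-level description of what an application needs (hypothetical)."""
    name: str
    expected_rps: int
    needs_database: bool = False
    public: bool = False


class ApplicationInfrastructure:
    """Domain entity: derives low-level resources from high-level requirements."""

    RPS_PER_SERVER = 500  # assumed capacity of a single server
    MIN_SERVERS = 2       # assumed minimum for availability

    def __init__(self, requirements: AppRequirements):
        self.requirements = requirements

    def build(self) -> list:
        req = self.requirements
        # Ceiling division: enough servers for the expected load.
        needed = -(-req.expected_rps // self.RPS_PER_SERVER)
        count = max(self.MIN_SERVERS, needed)
        servers = [f"{req.name}-{i}" for i in range(count)]
        resources = [{"type": "server", "name": s} for s in servers]
        if req.needs_database:
            resources.append({"type": "database", "name": f"{req.name}-db"})
        if req.public:
            resources.append({"type": "load_balancer",
                              "name": f"{req.name}-lb", "targets": servers})
        return resources
</syntaxhighlight>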

==Abstraction Layer==

An abstraction layer provides a simplified interface to lower-level resources. From this perspective, a set of composable [[#Stack_Component|stack components]] can act as an abstraction layer for underlying resources. In this case, the components become [[Infrastructure_as_Code_Concepts#Infrastructure_Domain_Entity|infrastructure domain entities]] that assemble low-level resources into components useful when focusing on higher-level tasks.

An abstraction layer helps separate concerns, exposing some and hiding others, so that people can focus on a problem at a particular level of detail. An abstraction layer might emerge organically as various components are developed, but it's usually useful to have a high-level design and standards so that the components of the layer work well together and fit into a cohesive view of the system.

[[Open Application Model]] is an example of an attempt to define a standard architecture that decouples application, runtime and infrastructure.

==Stacks as Components==

Infrastructure composed of small stacks is more nimble than large stacks built from [[#Component|components]] ([[#Module|modules]] and [[#Library|libraries]]). A small stack can be changed more quickly, easily and safely than a large stack. Building a system from multiple stacks requires keeping each stack cohesive, loosely coupled, and not very large.

===Dependency Discovery===

Integration between two stacks involves one stack managing a resource that another stack uses as a [[#Dependency|dependency]], so dependency discovery mechanisms are essential to integration. Depending on how stacks find their dependencies and connect to them, they might be more tightly or more loosely coupled.

====Hardcoding====

Hardcoding the identifiers of provider resources in the consumer stack creates very tight coupling and makes testing with test doubles impossible. It also makes it hard to maintain multiple infrastructure instances, such as those for different environments.

====Resource Matching====

[[#Consumer|Consumer stacks]] dynamically select their dependencies by name or tag, using either patterns or exact values. This reduces coupling with other stacks, or with specific tools: the provider and consumer stacks can be implemented using different tools. The matching pattern becomes a contract between the provider and the consumer. If the provider suddenly starts to create resources using a different naming or tagging pattern, the dependency breaks, so the convention should be published and tested, like any other contract.

Using tags should be preferred to naming patterns.

Most stack languages support matching on attributes other than the resource name (Terraform [[Terraform_Concepts#Data_Source|data sources]], AWS CDK [[AWS_CDK_Concepts#Resource_Importing|resource importing]]).

<font color=darkkhaki>The language should support resource matching at the language level.

<syntaxhighlight lang='text'>
external_resource:
  id: appserver_vlan
  match:
    tag: name == "network_tier" && value == "application_servers"
    tag: name == "environment" && value == ${ENVIRONMENT_NAME}
</syntaxhighlight>

Follow up.

</font>
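The matching logic implied by such a declaration can be approximated in Python. The in-memory `INVENTORY` list stands in for querying the infrastructure platform's API, which a real tool (for instance, a Terraform data source) would do for you. The function names are hypothetical. Requiring exactly one match makes a broken or ambiguous naming/tagging contract fail loudly rather than silently selecting the wrong resource.

<syntaxhighlight lang='python'>
# Stand-in for the platform's inventory; a real tool would query its API.
INVENTORY = [
    {"id": "vlan-1", "tags": {"network_tier": "application_servers", "environment": "staging"}},
    {"id": "vlan-2", "tags": {"network_tier": "application_servers", "environment": "production"}},
    {"id": "vlan-3", "tags": {"network_tier": "database", "environment": "production"}},
]


def match_resources(resources: list, required_tags: dict) -> list:
    """Return the resources whose tags include every required name/value pair."""
    return [r for r in resources
            if all(r.get("tags", {}).get(k) == v for k, v in required_tags.items())]


def find_dependency(resources: list, required_tags: dict) -> dict:
    """Resolve exactly one dependency; zero or several matches break the contract."""
    matches = match_resources(resources, required_tags)
    if len(matches) != 1:
        raise LookupError(
            f"expected exactly one match for {required_tags}, found {len(matches)}")
    return matches[0]


def appserver_vlan(environment: str) -> dict:
    """Equivalent of the external_resource declaration above."""
    return find_dependency(INVENTORY, {"network_tier": "application_servers",
                                       "environment": environment})
</syntaxhighlight>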

====Stack Data Lookup====

Stack data lookup finds provider resources using data structures maintained by the tool that manages the provider stack, usually referred to as "state files" or "backends". The backend state maintained by the tool includes values exported by the provider stack, which are looked up and used by the consumer stack. See [[Pulumi_Concepts#Backend|Pulumi backend]] and [[Pulumi_Concepts#Stack_References|stack references]], and [[Terraform_Concepts#Backend|Terraform backend]] and [[Hashicorp_Configuration_Language#Output_Variable|output variables]].

This pattern only works when the stacks to be integrated are managed by the same tool.

Also, this approach requires embedding references to the provider stack in the consumer stack's code. An alternative is [[#Dependency_Injection|dependency injection]]: the orchestration logic looks up the output value from the provider stack and injects it into the consumer stack.
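Here is a minimal Python sketch of the lookup. A nested dictionary stands in for the backend state; with Pulumi or Terraform, the tool reads the real state through stack references or remote state. The stack name `network-production`, the output names and the helper functions are hypothetical. The consumer hardcodes the provider stack's name, which illustrates the coupling described above.

<syntaxhighlight lang='python'>
# Stand-in for the provider tool's backend: each stack's state holds the
# values the stack exports.
BACKEND_STATE = {
    "network-production": {
        "outputs": {"appserver_vlan_id": "vlan-2", "cidr": "10.2.0.0/16"},
    },
}


def stack_output(backend: dict, stack_name: str, output: str) -> str:
    """Read one exported value of a provider stack from the backend state."""
    try:
        return backend[stack_name]["outputs"][output]
    except KeyError:
        raise KeyError(f"stack {stack_name!r} does not export {output!r}") from None


def consumer_stack(backend: dict) -> dict:
    # The provider stack's name is embedded in the consumer's code:
    # this is the coupling that dependency injection removes.
    vlan_id = stack_output(backend, "network-production", "appserver_vlan_id")
    return {"type": "server", "name": "app-0", "vlan": vlan_id}
</syntaxhighlight>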

====Integration Registry Lookup====

Both stacks refer to an integration registry (or [[Infrastructure_Concepts#Configuration_Registry|configuration registry]]) in a known location, to store and read values. Using a configuration registry makes the integration points explicit. A consumer stack can only use values explicitly published by a provider stack, so the provider team can freely change how they implement their resources. If used, the registry becomes critical for integration, and it must be available at all times. It also requires a clear naming convention; one option is a hierarchical namespace.
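The sketch below models the registry in Python as an in-memory class with a hierarchical `/<environment>/<provider>/<key>` namespace. Both the class and the key layout are assumptions for illustration. A real registry would be an external, highly available service accessed through its own client. The provider publishes only the values it chooses to expose, and the consumer reads nothing else.

<syntaxhighlight lang='python'>
class IntegrationRegistry:
    """Minimal in-memory stand-in for a configuration registry with a
    hierarchical namespace: /<environment>/<provider>/<key>."""

    def __init__(self):
        self._values = {}

    def publish(self, environment: str, provider: str, key: str, value: str) -> None:
        """Called by the provider stack for each value it exposes."""
        self._values[f"/{environment}/{provider}/{key}"] = value

    def lookup(self, environment: str, provider: str, key: str) -> str:
        """Called by the consumer stack; only published values are visible."""
        path = f"/{environment}/{provider}/{key}"
        if path not in self._values:
            raise KeyError(f"{path} has not been published")
        return self._values[path]
</syntaxhighlight>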

====Dependency Injection====

All dependency discovery patterns presented so far ([[#Hardcoding|hardcoding]], [[#Resource_Matching|resource matching]], [[#Stack_Data_Lookup|stack data lookup]] and [[#Integration_Registry_Lookup|integration registry lookup]]) couple the consumer stack either to the provider stack directly or to the dependency discovery mechanism. Ideally, this coupling should be minimized, because hardcoding specific assumptions about dependency discovery into stack code makes it less [[Designing_Modular_Systems#Reuse|reusable]]. Dependency injection is a technique that decouples dependencies from their discovery: components are given (injected with) their dependencies, rather than discovering them themselves. A consumer stack declares the resources it depends on as parameters, similarly to declaring the instance configuration parameters. The tool that orchestrates the infrastructure tool is responsible for discovering the dependencies, using any of the patterns described above, and passing them to the consumer stack. The benefit of this approach is that the stack definition code is simpler and can be used in different contexts and with different dependency resolution mechanisms. It also makes the code easier to test, allowing for [[Software_Testing_Concepts#Progressive_Testing|progressive testing]], first with mocks, then with real infrastructure resources.

Also see: {{Internal|Inversion of Control Pattern#Overview|Inversion of Control Pattern}}
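In Python, the idea could look like the following sketch. The consumer stack function takes the VLAN identifier as an ordinary parameter, and a separate orchestration function decides how to discover it. All names and the registry key format are hypothetical. Swapping a stub for a registry-backed resolver demonstrates progressive testing without touching the stack code.

<syntaxhighlight lang='python'>
from typing import Callable

Resolver = Callable[[str], str]  # environment name -> VLAN id


# Consumer stack: declares its dependency as an ordinary parameter and knows
# nothing about how the value is discovered.
def app_server_stack(*, environment: str, vlan_id: str) -> dict:
    return {"type": "server", "name": f"{environment}-app", "vlan": vlan_id}


# Orchestration layer: discovers the dependency and injects it.
def apply_app_server(environment: str, discover_vlan: Resolver) -> dict:
    return app_server_stack(environment=environment,
                            vlan_id=discover_vlan(environment))


def registry_resolver(registry: dict) -> Resolver:
    """One possible resolver, backed by a registry lookup."""
    return lambda env: registry[f"/{env}/network/appserver_vlan_id"]


# Progressive testing: a stub first, then a real discovery mechanism.
stub_resolver: Resolver = lambda env: "vlan-test"
</syntaxhighlight>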

=Organizing Infrastructure Code=

Most of the infrastructure code belongs within the [[Infrastructure_as_Code_Concepts#Stack_Project|stack projects]] that define the component stacks. That includes [[#Source_Code|code]], [[#Tests|tests]], [[#Configuration|configuration]] and [[#Delivery_Configuration|delivery configuration]]. A special case is integration test code, which arguably [[Infrastructure_Code_Testing_Concepts#Test_Code_Location|may belong in separate specialized testing projects]].

==Application and Infrastructure Code==

There are pros and cons to keeping the application code in the same repository as the infrastructure code. Separating the code creates a cognitive barrier: if the infrastructure code is in a different repository, the application team won't have the same level of comfort digging into it. Infrastructure code located in the team's own area is less intimidating.

Regardless of where the code lives, the application and infrastructure code are delivered together. Application and infrastructure should be tested as part of the same delivery pipeline, all the way to production. Many organizations have a legacy view of production infrastructure as a separate silo: the team that owns the production infrastructure (staging and production) has no responsibility for development and testing environments. This separation creates friction for application delivery and also for infrastructure changes, and teams don't discover gaps between the two parts of the system until late in the delivery process. The delivery strategy should deliver changes to infrastructure code across all environments. This approach takes advantage of application integration tests, which are executed together with the infrastructure changes. However, to avoid breaking shared environments, it's a good idea to test infrastructure changes in advance, in stages and environments that test the infrastructure code on its own.

==Using Infrastructure Code to Deploy Applications==

⚠️ Building infrastructure for applications and deploying applications onto infrastructure are two different concerns that should not be mixed up. The interface between application and infrastructure should be simple and clear. Operating system packaging systems like RPM are a well-defined interface for packaging and deploying applications. <font color=darkkhaki>Infrastructure code can specify the package to deploy and then let the deployment tool take over.</font> Trouble comes when deploying an application involves multiple activities, especially when it involves multiple moving parts, such as multi-step procedural scripts. Avoid mixing procedural scripts with declarative infrastructure code. Use a packaging system similar to RPM and write tests for the package. For cloud-native applications, deployment orchestration should be done with a dedicated tool, such as [[Helm]] or [[Octopus Deploy]]. These tools enforce separation of concerns by focusing on deployment and leaving the provisioning of the underlying cluster to other parts of the infrastructure codebase. The easier and safer it is to deploy a change independently of other changes, the more reliable the entire system is.

Also see: {{Internal|Infrastructure_Concepts#Application_Deployment_is_Not_Infrastructure_Management|Infrastructure Concepts | Applications | Application Deployment is Not Infrastructure Management}}

=Delivering Infrastructure Code=

{{Internal|Infrastructure_Code_Continuous_Delivery_Concepts#Overview|Continuous Delivery for Infrastructure Code}}

==Integrating Infrastructure Projects==

This section deals with combining different versions of infrastructure projects that depend on each other. When someone makes a change to one of these infrastructure code projects, they create a new version of the project code. That version must be integrated with a version of each of the other projects. The projects can be integrated at [[#Build-Time_Integration|build time]], [[#Delivery-Time_Integration|delivery time]] or [[#Apply-Time_Integration|apply time]].

===Build-Time Integration===

<font color=darkkhaki>TO PROCESS: [[IaC]] Chapter 19. Delivering Infrastructure Code → Integrating Projects → Pattern: Build-Time Project Integration.</font>

===Delivery-Time Integration===

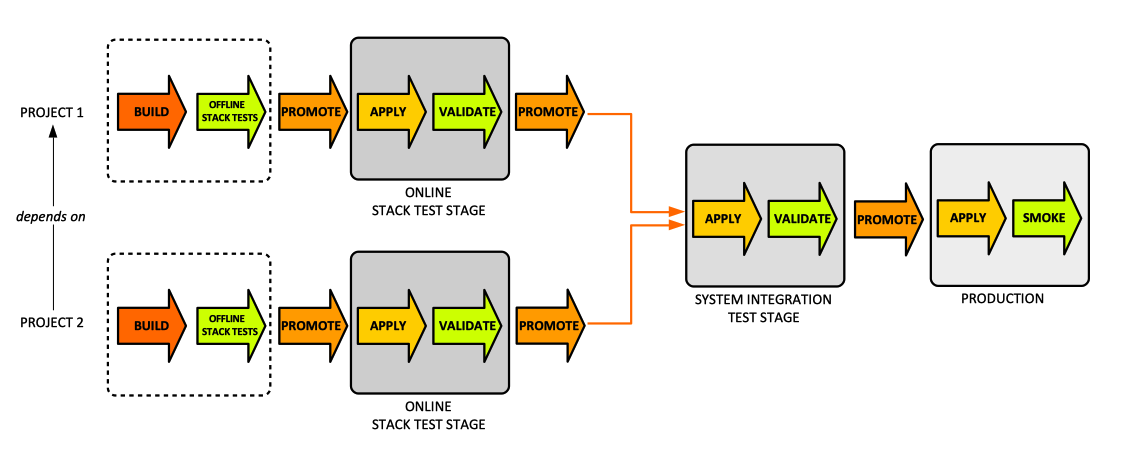

Given multiple projects with dependencies between them, delivery-time project integration builds and tests each project individually, and then combines them and tests the integration. Once the projects have been combined and tested, their code progresses together through the rest of the delivery cycle: the same set of combined project versions must be applied in later stages of the delivery process. This can be implemented by introducing a descriptor file that contains the version numbers of the projects that work together. The delivery process treats the descriptor file as an artifact, and each stage that applies the infrastructure code pulls the individual project artifacts from the delivery repository. Building and testing projects individually helps enforce clear boundaries and loose coupling between them; failures at the integration stage reveal coupling between projects. This pattern is difficult to scale to a large number of projects. It pushes the complexity of resolving and coordinating different versions of different projects into the delivery process, which requires a sophisticated delivery implementation, such as a pipeline. Integrating projects this way as part of the delivery pipeline is known as "[[Continuous_Delivery#Fan-in_Pipeline_Design|fan-in pipeline design]]".

[[File:Delivery-Time_Integration.png|1134px]]
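The following Python sketch shows one way a stage might consume the descriptor. The JSON layout, the project names and versions, and the artifact URL scheme are all hypothetical. The key point is that every stage derives its artifact list from the same pinned descriptor, so it applies exactly the combination of versions that passed integration testing.

<syntaxhighlight lang='python'>
import json

# Hypothetical descriptor produced by the integration stage: the exact set of
# project versions that were tested together.
DESCRIPTOR_JSON = """
{
  "name": "app-environment",
  "projects": {"network": "1.4.2", "database": "2.0.1", "app-servers": "3.7.0"}
}
"""


def load_descriptor(text: str) -> dict:
    return json.loads(text)


def artifacts_for_stage(descriptor: dict, repository_url: str) -> list:
    """List the artifact of every project version pinned in the descriptor.

    Every later stage calls this with the same descriptor, so each stage
    applies the same combination of versions that passed integration tests.
    """
    return [f"{repository_url}/{project}/{version}/{project}-{version}.tar.gz"
            for project, version in sorted(descriptor["projects"].items())]
</syntaxhighlight>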

===Apply-Time Integration===

This pattern is known as "decoupled delivery" or "decoupled pipelines". It involves pushing multiple projects through delivery stages separately. When someone changes a project's code, the pipeline applies the updated code to each environment in the delivery path for the project. <font color=darkkhaki>This version of the project code may integrate with different versions of upstream or downstream projects in each of these environments. Integration between projects takes place by applying the code of the project that changed to an environment. </font> Integrating projects at apply time minimizes coupling between projects. This pattern suits organizations with an autonomous team structure: different teams can push changes to their systems to production without needing to coordinate, and without being blocked by problems with a change to another team's project. However, this pattern moves the risk of breaking dependencies across projects to apply time, and it does not ensure consistency through the pipeline. Interfaces between projects need to be carefully managed to maximize compatibility across different versions on each side of any given dependency. There is no guarantee of which version of another project's code was used in any given environment, so teams need to identify dependencies between projects clearly and treat them as contracts. [[Consumer-Driven Contract]]s may help in this situation.

==Applying Infrastructure Code==

While it is OK to apply infrastructure code from a developer's workstation for experimentation or testing in an isolated environment, doing so creates problems in shared environments. In these cases, code should be applied from a centralized service. The service pulls code from a [[Continuous_Delivery#Delivery_Repository|delivery repository]] or a source repository and applies it to the infrastructure via a clear, controlled process for managing which version of the code is applied. Using a central service aligns well with the pipeline model for delivering infrastructure code across environments. Examples of such services: Terraform Cloud, Atlantis, Pulumi for Teams, Weave Cloud.

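==Changing Live Infrastructure==

Live infrastructure can be changed safely with the "Expand and Contract" pattern (also called the "Parallel Change" pattern, see https://martinfowler.com/bliki/ParallelChange.html): first expand, by adding the new version of a resource or interface alongside the old one, then migrate the consumers to it, and finally contract, by removing the old version.

Changes can be made with zero downtime by using [[Blue-Green_Deployments|Blue-Green deployments]].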

=Configuration as Code=

<font color=darkkhaki>Integrate with [[#Stack_Configuration|Stack Configuration]], [[Infrastructure_Concepts#Configuration|Infrastructure Concepts | Configuration]].</font>

==Security-Sensitive Configuration==

<font color=darkkhaki>Integrate with [[Infrastructure_Concepts#Security-Sensitive_Configuration|Infrastructure Concepts | Security-Sensitive Configuration]].</font>

=State Continuity=

{{Internal|State Continuity in Operations|State Continuity in Operations}}

=Idempotency=

One of the infrastructure code principles is to [[#Ensure_that_You_Can_Repeat_Any_Process|ensure that any process can be repeated]]. However, repeating the same process and re-applying infrastructure code only works reliably if the code is idempotent. The code must be written so that it does not modify the actual state when that state already matches the desired state, no matter how many times the code is applied.
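Here is a minimal Python sketch of an idempotent apply, written in the convergent style that most declarative tools use: compare the desired and actual states and change only what differs. Resources are modeled as plain dictionaries for illustration. A second run against the already converged state reports no changes. By contrast, a script that simply created a server on every run would not be idempotent.

<syntaxhighlight lang='python'>
def apply(desired: dict, actual: dict) -> list:
    """Converge `actual` toward `desired` in place and return the changes made.

    Resources whose actual state already matches the desired state are left
    untouched, so applying the same desired state again changes nothing.
    """
    changes = []
    for name, spec in desired.items():
        if name not in actual:
            actual[name] = dict(spec)
            changes.append(f"create {name}")
        elif actual[name] != spec:
            actual[name] = dict(spec)
            changes.append(f"update {name}")
    for name in list(actual):
        if name not in desired:
            del actual[name]
            changes.append(f"delete {name}")
    return changes
</syntaxhighlight>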

=Blast Radius=

The (direct) "blast radius" is the scope of code included in the command that applies a change. The indirect blast radius includes other elements of the system that depend on the resources in the direct blast radius.
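Given a map of which resources depend on which, the indirect blast radius consists of everything reachable from the changed resources. The Python sketch below computes it with a breadth-first traversal. The resource names and the `dependents` mapping are hypothetical.

<syntaxhighlight lang='python'>
from collections import deque


def blast_radius(changed: set, dependents: dict) -> tuple:
    """Return (direct, indirect) blast radius.

    `changed` holds the resources included in the apply command; `dependents`
    maps each resource to the resources that depend on it.
    """
    direct = set(changed)
    indirect = set()
    queue = deque(direct)
    while queue:
        resource = queue.popleft()
        for dependent in dependents.get(resource, ()):
            if dependent not in direct and dependent not in indirect:
                indirect.add(dependent)
                queue.append(dependent)
    return direct, indirect
</syntaxhighlight>

For example, with `{"vpc": ["subnet"], "subnet": ["db", "app"], "db": ["app"]}`, changing only `vpc` has a direct blast radius of `{"vpc"}` and an indirect one of `{"subnet", "db", "app"}`.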

=Container Clusters as Code=

<font color=darkkhaki>[[IaC]] Chapter 14. Building Clusters as Code</font>

=Walking Skeleton=

{{External|https://wiki.c2.com/?WalkingSkeleton}}

A walking skeleton is a basic implementation of the main parts of a new system that helps validate the general design and structure. People often create a walking skeleton for an infrastructure project along with a similar initial implementation of the applications that will run on it.

=Organizatorium=

<font color=darkkhaki>

* Explore ADRs https://github.com/joelparkerhenderson/architecture-decision-record

* Explore [https://www.red-gate.com/simple-talk/databases/sql-server/database-administration-sql-server/using-migration-scripts-in-database-deployments/ Using Migration Scripts in Database Deployments]

* https://martinfowler.com/bliki/ParallelChange.html

</font>

Latest revision as of 22:36, 1 July 2023

External

- https://infrastructure-as-code.com

- Infrastructure as Code: Dynamic Systems for the Cloud Age by Kief Morris


Overview


Automating an existing system is hard. Automation, including automated testing and delivery, should be part of the system's design and implementation; it should evolve organically with the system. You should build the system incrementally, automating as you go.

A few principles to follow when writing infrastructure code:

- Assume systems are unreliable. Cloud platforms run on cheap commodity hardware. Even if they're not, at the scale they run failure happens even when using reliable hardware. This is why the infrastructure platform, and application need to build reliability into software. You must design for uninterrupted service when the underlying resources change.

- Make everything reproducible automatically, without the need for on-the-spot decisions about how to build things. Everything should be defined as code: topology, dependencies, configuration. Rebuilding should be a simple "yes/no" decision followed by running a pipeline instance. Once systems are reproducible, it is easier to run automation frequently and consistently. Ensuring state continuity takes special care.

- Avoid snowflake systems. A snowflake is a system, or a part of a system, that is difficult to rebuild. This happens when people make changes to one instance of a system that they don't make to others, causing configuration drift, and the knowledge of how the instance got into its current state is lost. As a consequence, people avoid making changes to it, leaving it out of date, unpatched or even partially broken.

- Create disposable instances that are easy to discard and replace.

- Minimize variation. The more similar things are, the easier they are to manage. Aim for your system to have as few types of pieces as possible, then automatically instantiate those pieces in as many instances as you need, which the "make everything reproducible" principle makes possible.

- Ensure that you can repeat any process. If you can script a task, script it. If it's worth documenting, it's worth automating. Repeatedly applying the same code safely requires the code to be idempotent: applying it several times must leave the system in the same state as applying it once.

- Keep configuration simple. Even if we strive to minimize variation, there will always be a need to configure the infrastructure types. Keep configurability in check: configurable code creates opportunities for inconsistency, and the more configurable a piece of infrastructure code is, the more difficult it is to understand its behavior and to ensure it is tested correctly. As such, minimize the number of configuration parameters. Avoid parameters you "might" need; they can be added later. Prefer simple parameters like numbers, strings and, at most, lists and key-value maps. Do not get more complex than that. Avoid boolean parameters that toggle complex behavior on or off.
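The idempotence requirement in the principles above can be illustrated with a minimal Python sketch. It assumes a hypothetical in-memory inventory dictionary standing in for a real platform API and a hypothetical ensure_volume helper; real tools perform the same comparison against the platform's actual state.

```python
from typing import Dict


def ensure_volume(inventory: Dict[str, int], name: str, size_gb: int) -> str:
    """Idempotently make volume `name` exist with `size_gb` gigabytes.

    `inventory` is a hypothetical in-memory stand-in for the platform's
    record of existing volumes. The function compares the desired state
    with the current one and acts only on the difference, so running it
    again with the same arguments changes nothing.
    """
    current = inventory.get(name)
    if current is None:
        inventory[name] = size_gb
        return "created"
    if current != size_gb:
        inventory[name] = size_gb
        return "resized"
    return "unchanged"


inventory: Dict[str, int] = {}
first = ensure_volume(inventory, "data", 100)   # "created"
second = ensure_volume(inventory, "data", 100)  # "unchanged": the rerun is a no-op
```

A non-idempotent version that blindly issued a "create volume" call on every run would fail, or create a duplicate, on the second run.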

Change

High quality systems enable faster change. Many software engineering techniques are about applying change to systems faster, in such a way that those systems do not break. Design your systems, and the operations around them, to enable pushing small changes quickly all the way to where they belong: production.

If you have a choice, choose several small changes with a reduced blast radius, each pushed all the way to production, over one large change. This choice goes well with the practice of modularizing the system. Aim to break up a large, significant change into a set of smaller changes that can be delivered one at a time.

The largest disruptions to systems usually occur when too much work is built up locally before being pushed. It is tempting to focus on completing the full piece of work, and it is harder to make a small change that only takes you a little further toward the full thing. However, a structure based on continuous integration and continuous delivery pipelines encourages this approach, because it allows you to prove that small, atomic, separate changes work, while provably moving the system toward its desired end state.

Small changes that are applied in sequence and progressively improve the system are referred to as incremental or iterative changes.

More ideas: https://medium.com/@kentbeck_7670/bs-changes-e574bc396aaa

Core Practices

Define Everything as Code

Code can be versioned and compared, and it can benefit from lessons learned in software design: patterns, principles and techniques such as test-driven development, continuous integration, continuous delivery and refactoring.

Once a piece of infrastructure has been defined as code, tools can automatically create many identical or slightly different instances of it, and test and deploy them automatically. Instances built by tools are built the same way every time, which makes the behavior of the system predictable. Moreover, everyone can see how the infrastructure has been defined by reviewing the code. Structure and configuration can be automatically audited for compliance.
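Because the definitions are code, a compliance check can run against them before anything is provisioned. The Python sketch below assumes a hypothetical, already-parsed representation of resources and two made-up policies (storage must be encrypted; SSH must not be open to the internet); real policy checks would work on each tool's own formats.

```python
from typing import Any, Dict, List

# Hypothetical resource definitions, in the form a tool might produce after
# parsing infrastructure code. Field names are illustrative only.
RESOURCES: List[Dict[str, Any]] = [
    {"type": "bucket", "name": "logs", "encrypted": True},
    {"type": "bucket", "name": "exports", "encrypted": False},
    {"type": "firewall_rule", "name": "ssh-in", "port": 22, "source": "0.0.0.0/0"},
    {"type": "firewall_rule", "name": "https-in", "port": 443, "source": "0.0.0.0/0"},
]


def audit(resources: List[Dict[str, Any]]) -> List[str]:
    """Return the compliance violations found in the definitions."""
    violations: List[str] = []
    for resource in resources:
        # Policy 1: every storage bucket must be encrypted.
        if resource["type"] == "bucket" and not resource.get("encrypted", False):
            violations.append(f"{resource['name']}: storage must be encrypted")
        # Policy 2: SSH must not be reachable from any address.
        if (
            resource["type"] == "firewall_rule"
            and resource.get("port") == 22
            and resource.get("source") == "0.0.0.0/0"
        ):
            violations.append(f"{resource['name']}: SSH must not be open to the internet")
    return violations
```

Run as a pipeline step, a non-empty result would fail the build before the change reaches any environment.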

If several people work on the same piece of infrastructure, their changes can be continuously integrated, then continuously tested and delivered, as described in the next section.

Continuously Test and Deliver

Continuously test and deliver all infrastructure work in progress.

Infrastructure Code Testing

Continuously testing small pieces encourages a modular, loosely coupled design. It also helps you find problems sooner, then quickly iterate, fix and rebuild the problematic code, which yields better infrastructure. Keeping the tests with the code base and continuously exercising them as part of CD runs is referred to as "building quality in" rather than "testing quality in".

Continuous Delivery for Infrastructure Code

Build Small, Simple, Loosely Coupled Pieces that Can Be Changed Independently

Infrastructure Code

Infrastructure code consists of text files that contain the definitions of the infrastructure elements to be created, updated or deleted, and their configuration. As such, infrastructure code is sometimes referred to as "infrastructure definition". Infrastructure code elements map directly to the infrastructure resources and options exposed by the platform API. The code is fed to a tool, which either creates new instances or modifies the existing infrastructure to match the code. Different tools have different names for their source code: Terraform code (.tf files), CloudFormation templates, Ansible playbooks, etc. One of the essential characteristics of infrastructure code is that the files it is declared in can be viewed, edited and stored independently of the tool that uses them to create the infrastructure: the tool must not be required to review or modify the files. A tool that uses external code for its specifications does not constrain its users to a specific workflow; instead, they can use industry-standard source control systems, editors, CI servers and automated testing frameworks.

Declarative Infrastructure Languages

Declarative code defines the desired state of the infrastructure and relies on the tool to handle the logic required to reach that state, whether the infrastructure element does not exist at all and needs to be created, or its state just needs to be adjusted. In other words, declarative code specifies what you want, without specifying how to make it happen. As a result, the code is cleaner and clearer. Declarative code is closer to configuration than to programming. In addition to being declarative, many infrastructure tools use their own DSL instead of a general-purpose declarative language like YAML.
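The "how" that a declarative tool works out on the user's behalf can be sketched in Python as a comparison between desired and actual state. The State shape and the plan and apply functions are hypothetical simplifications, not any tool's implementation.

```python
from typing import Dict, List, Tuple

# Hypothetical model of state: resource name -> attributes.
State = Dict[str, Dict[str, str]]


def plan(desired: State, actual: State) -> List[Tuple[str, str]]:
    """Work out the steps needed to move `actual` to `desired`."""
    steps: List[Tuple[str, str]] = []
    for name in sorted(desired):
        if name not in actual:
            steps.append(("create", name))   # declared but missing
        elif actual[name] != desired[name]:
            steps.append(("update", name))   # exists but has drifted
    for name in sorted(actual):
        if name not in desired:
            steps.append(("delete", name))   # exists but no longer declared
    return steps


def apply(desired: State, actual: State) -> None:
    """Execute the plan against the in-memory `actual` state."""
    for action, name in plan(desired, actual):
        if action == "delete":
            del actual[name]
        else:
            actual[name] = dict(desired[name])


desired: State = {"web": {"size": "small"}, "db": {"size": "large"}}
actual: State = {"web": {"size": "tiny"}, "old-cache": {"size": "small"}}
steps = plan(desired, actual)
# [("create", "db"), ("update", "web"), ("delete", "old-cache")]
```

The user only edits the desired state; whether a resource is created, updated or deleted falls out of the comparison. Real tools also order steps by the dependencies between resources, which this sketch ignores.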

Terraform, CloudFormation, Ansible, Chef and Puppet are based on this concept. Terraform, Puppet and Chef use their own DSLs (Chef's is Ruby-based), while CloudFormation and Ansible express their declarations in YAML or JSON. Their vocabularies make it possible to write code that refers to infrastructure platform-specific domain elements like virtual machines, subnets or disk volumes.

Most tools support modularization of declarative code by the use of modules.


Imperative Languages for Infrastructure

Declarative code becomes awkward to use when you want different results depending on circumstances. Needing a conditional is one such situation; needing to repeat the same action a number of times is another. Because of this, most declarative infrastructure tools have extended their languages with imperative programming capabilities. Another category of tools, such as Pulumi or AWS CDK, uses general-purpose programming languages to define infrastructure.
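The kind of logic that is awkward in purely declarative code is natural in a general-purpose language. The Python sketch below is not Pulumi or AWS CDK code; it only generates plain, hypothetical subnet definitions, to show a conditional and a loop side by side.

```python
from typing import Dict, List


def subnet_definitions(environment: str, zones: List[str]) -> List[Dict[str, str]]:
    """Generate subnet definitions for a hypothetical environment.

    Conditional: only production is spread across every zone; other
    environments use the first zone only.
    Repetition: one subnet definition is generated per selected zone.
    """
    selected = zones if environment == "production" else zones[:1]
    return [
        {"name": f"{environment}-app-{zone}", "zone": zone, "cidr": f"10.0.{index}.0/24"}
        for index, zone in enumerate(selected)
    ]


production = subnet_definitions("production", ["zone-a", "zone-b", "zone-c"])
staging = subnet_definitions("staging", ["zone-a", "zone-b", "zone-c"])
```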

One of the biggest advantages of using a general-purpose programming language is its ecosystem of tools, especially support for testing and refactoring.

Mixing Declarative and Imperative Code

It's not yet very clear whether mixing declarative and imperative code when building different parts of an infrastructure system is good or bad. It is also not clear whether one style or the other should be mandated. What is clearer is that declarative and imperative code must not be mixed when implementing the same component. A better approach is to define concerns clearly and address each concern with the best tool, without mixing them. One sign that concerns are being mixed is extending a declarative syntax like YAML to add conditionals and loops. Another sign is mixing configuration data into procedural code; this indicates that we're mixing what we want with how to implement it. When this happens, the code should be split into separate concerns.
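One way to keep "what we want" apart from "how to implement it" is to keep configuration values in a plain data document and have procedural code consume them. In this Python sketch, the JSON document and the hypothetical cluster_definition function are illustrative only; changing the cluster means editing data, not logic.

```python
import json
from typing import Any, Dict

# "What we want": configuration kept as data. In a real project this would
# typically be a separate file; the values here are hypothetical.
CLUSTER_CONFIG = """
{
  "name": "checkout",
  "node_count": 3,
  "node_size": "medium"
}
"""


def cluster_definition(config: Dict[str, Any]) -> Dict[str, Any]:
    """'How to implement it': logic that hard-codes no configuration values."""
    if config["node_count"] < 1:
        raise ValueError("node_count must be at least 1")
    return {
        "cluster": config["name"],
        "nodes": [
            {"name": f"{config['name']}-node-{i}", "size": config["node_size"]}
            for i in range(config["node_count"])
        ],
    }


definition = cluster_definition(json.loads(CLUSTER_CONFIG))
```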

Infrastructure Code Management

Infrastructure code should be treated like any other code. It should be designed and managed so that it is easy to understand and maintain. Code quality practices, such as code reviews, automated testing, improving cohesion and reducing coupling, should be followed. Infrastructure code can double as documentation in some cases, as it is always an accurate, up-to-date record of your system. However, infrastructure code is rarely the only documentation required: high-level documentation is necessary to understand concepts, context and strategy.

Primitives

Stack


A stack is a collection of infrastructure resources that are defined, changed and managed together as a unit, using a tool like Terraform, Pulumi, CloudFormation or Ansible. All elements declared in the stack are provisioned and destroyed with a single command.
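The "managed as a unit" property can be sketched in Python. Platform and Stack are hypothetical classes, not any tool's API; with real tools the single command is something like terraform apply and terraform destroy, or pulumi up and pulumi destroy.

```python
from typing import Dict, List


class Platform:
    """Hypothetical in-memory stand-in for a cloud platform API."""

    def __init__(self) -> None:
        self.resources: Dict[str, str] = {}  # resource id -> owning stack name


class Stack:
    """A collection of resources provisioned and destroyed as one unit."""

    def __init__(self, name: str, resource_names: List[str]) -> None:
        self.name = name
        self.resource_names = resource_names

    def _resource_ids(self) -> List[str]:
        return [f"{self.name}/{resource}" for resource in self.resource_names]

    def up(self, platform: Platform) -> None:
        """A single call provisions every resource declared in the stack."""
        for resource_id in self._resource_ids():
            platform.resources[resource_id] = self.name

    def destroy(self, platform: Platform) -> None:
        """A single call removes every resource in this stack, and nothing else."""
        for resource_id in self._resource_ids():
            platform.resources.pop(resource_id, None)


platform = Platform()
network = Stack("network", ["vpc", "subnet-a", "subnet-b"])
app = Stack("app", ["load-balancer", "server-1"])
network.up(platform)
app.up(platform)
app.destroy(platform)  # only the "network" resources remain
```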

A stack is defined using infrastructure source code. The stack source code, which usually lives in a stack project, is read by the stack management tool, which uses the cloud platform APIs to instantiate or modify the elements defined by the code. The resources in a stack are provisioned together to create a stack instance. The time it takes to provision, change and test a stack depends on the entire stack, and this should be a consideration when deciding how to combine infrastructure elements into stacks. This subject is addressed in the Stack Patterns section, below. CloudFormation and Pulumi have very similar concepts, also named stack.

The infrastructure stack is an example of an "architectural quantum", defined by Ford, Parsons and Kua as "an independently deployable component with high functional cohesion, which includes all the structural elements required for the system to function correctly". In other words, a stack is a component that can be pushed to production on its own.

A stack can be composed of components, and it may be itself a component used by other stacks.

Code that builds servers should be decoupled from code that builds stacks. A stack should specify which servers to create and pass information about the environment to a server configuration tool.
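A minimal Python sketch of that boundary, assuming hypothetical build_stack and configure_web_server functions: the stack code receives the server configuration step as a parameter, so either side can change without the other. In a real system the configuration step would be a separate tool rather than a function call.

```python
from typing import Callable, Dict, List

Environment = Dict[str, str]
ServerConfigurer = Callable[[str, Environment], Dict[str, str]]


def build_stack(
    environment: Environment, server_names: List[str], configure: ServerConfigurer
) -> List[Dict[str, str]]:
    """Stack code: decides which servers exist and passes environment details
    on; it knows nothing about how a server is configured internally."""
    return [configure(name, environment) for name in server_names]


def configure_web_server(name: str, environment: Environment) -> Dict[str, str]:
    """Server configuration code: knows how to configure a web server and
    learns about the environment only through the values it receives."""
    return {
        "hostname": name,
        "database_host": environment["database_host"],
        "log_level": environment.get("log_level", "info"),
    }


servers = build_stack({"database_host": "db.test.internal"}, ["web-1", "web-2"], configure_web_server)
```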

Stack Project

A project is a collection of code used to build a discrete component of the system; in this case, the component is a stack or a set of stacks. A stack project is the source code that declares the infrastructure resources that make up a stack. A stack project should define the shape of the stack, which is consistent across its instances. The stack may be declared in one or more source files.

The project may also include types of code other than infrastructure declarations: tests, configuration values and utility scripts. Files specific to a project live with the project. It is a good idea to establish and popularize a strategy for organizing these things consistently across similar projects. Generally speaking, any supporting code for a specific project should live with that project's code:

├─ src
├─ test
├─ environments
├─ pipeline
└─ build.sh

Source Code

The infrastructure code is central to a stack project. When a stack consists of multiple resources, aim to group together, in the same files, the code for resources that combine to assemble domain concepts, instead of organizing the code along the lines of functional elements. For example, you may want an "application cluster" file that includes the container runtime declaration, networking elements, firewall rules and security policies, instead of a set of "servers", "network", "firewall-rules" and "security-policies" files. It is often easier to write, understand, change and maintain code for related functional elements if they are all declared in the same file. This follows the design principle of designing around domain concepts rather than technical ones.

Tests

The project should include the tests that ensure the stack works correctly. Tests run at different phases, for example as offline and online tests. There are cases, however, when integration tests do not naturally belong with the stack and live outside the stack project.
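An offline test that lives with the stack project can be sketched with Python's standard unittest module. The stack_definition function is hypothetical: it stands for whatever representation of the stack the test can inspect without provisioning anything. Online tests, which create a real stack instance and check it, are not shown.

```python
import ipaddress
import unittest
from typing import Any, Dict


def stack_definition(environment: str) -> Dict[str, Any]:
    """Hypothetical stack definition expressed as plain data, so offline
    tests can inspect it without provisioning anything."""
    return {
        "network": {"cidr": "10.0.0.0/16"},
        "servers": [{"name": f"{environment}-web-{i}", "size": "small"} for i in range(2)],
    }


class OfflineStackTest(unittest.TestCase):
    """Offline tests: fast checks on the definition itself; nothing is created."""

    def test_server_names_include_environment(self) -> None:
        for server in stack_definition("test")["servers"]:
            self.assertTrue(server["name"].startswith("test-"))

    def test_network_uses_private_address_range(self) -> None:
        cidr = stack_definition("test")["network"]["cidr"]
        self.assertTrue(ipaddress.ip_network(cidr).is_private)
```

Kept in the project's test directory, tests like these can run with python -m unittest in the earliest pipeline stage.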

Configuration

This section of the project contains stack configuration files and application configuration (Helm files, etc.). There are two approaches to storing stack configuration files:

- A component project contains the configuration for multiple environments in which that component is deployed. This approach is not very clean, because it mixes generalized, reusable code with details of specific instances. On the other hand, it's arguably easier to trace and understand configuration values when they're close to the project they relate to, rather than mingled in a monolithic configuration project.

- A separate project with configuration for all the stacks, organized by environment.
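The second approach can be sketched in Python as a lookup keyed by environment and then by stack. The names and values are hypothetical, and keeping defaults with the stack project is an assumption of this sketch rather than a requirement; in practice each environment would usually be a directory of files rather than a dictionary.

```python
from typing import Any, Dict

# Defaults that would live with the stack project itself (hypothetical values).
STACK_DEFAULTS: Dict[str, Any] = {"instance_count": 1, "instance_size": "small", "log_level": "info"}

# A separate configuration project, organized by environment, then by stack.
CONFIGURATION: Dict[str, Dict[str, Dict[str, Any]]] = {
    "test": {"checkout-stack": {"instance_size": "small"}},
    "production": {"checkout-stack": {"instance_count": 4, "instance_size": "large"}},
}


def stack_parameters(environment: str, stack: str) -> Dict[str, Any]:
    """Merge stack defaults with environment-specific values; environment values win."""
    return {**STACK_DEFAULTS, **CONFIGURATION[environment].get(stack, {})}
```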

Delivery Configuration

Configuration to create delivery stages in a delivery pipeline tool.

Stack Instance