Amazon API Gateway Concepts


Revision as of 18:12, 27 March 2019


Amazon API Gateway

Amazon API Gateway is the preferred way to expose internal AWS endpoints to external clients, in the form of a consistent and scalable programmatic REST interface (REST API). Amazon API Gateway can expose the following integration endpoints: internal HTTP(S) endpoints representing custom services, AWS Lambda functions, and other AWS services, such as Amazon Kinesis or Amazon S3. The backend endpoints are exposed by creating an API Gateway REST API, which is an instance of the RestApi resource, and by integrating the API methods with their corresponding backend endpoints, using specific integration types.

Primitives

API

An API is a collection of HTTP resources and methods that are integrated with backend HTTP endpoints, Lambda functions, or other AWS services. This collection can be deployed in one or more stages. Typically, API resources are organized in a resource tree according to the application logic. Each API resource can expose one or more API methods, each with a distinct HTTP verb supported by API Gateway. Each API is represented by a RestApi instance.

API Name

The API name is the human-readable name the API is listed under in the AWS console. If the API was built from OpenAPI metadata, the name is given by the value of the info.title element. More than one API may have the same name.

API ID

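The REST API ID is generated by API Gateway when the API is created. It appears in the default invoke URL hostname and is part of the ARNs of various API elements.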
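As an illustration, the sketch below assembles two ARN shapes that carry the REST API ID: the arn:aws:apigateway ARN of the RestApi itself, used in policies for management actions, and the arn:aws:execute-api ARN of an API method, used with the execute-api:Invoke action. The function names, account ID and API ID are hypothetical.

```python
def restapi_arn(region: str, api_id: str) -> str:
    """ARN of a RestApi, as used in IAM policies for management (apigateway:*) actions."""
    # API Gateway management ARNs leave the account field empty.
    return f"arn:aws:apigateway:{region}::/restapis/{api_id}"


def execute_api_arn(region: str, account_id: str, api_id: str,
                    stage: str = "*", method: str = "*", resource_path: str = "*") -> str:
    """ARN of an API method, as used with the execute-api:Invoke action; '*' is a wildcard."""
    # The resource path is appended without its leading slash.
    return (f"arn:aws:execute-api:{region}:{account_id}:{api_id}/"
            f"{stage}/{method}/{resource_path.lstrip('/')}")


print(restapi_arn("us-east-1", "a1b2c3d4e5"))
print(execute_api_arn("us-east-1", "123456789012", "a1b2c3d4e5", "prod", "GET", "/pets/1"))
```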

API Type

Regional API

A regional API is deployed in the region in which it is created, and clients call it directly, without going through Amazon CloudFront.

Edge-Optimized API

An edge-optimized API is deployed to the Amazon CloudFront network.

Private API

A private API is only accessible from within a VPC, through an interface VPC endpoint. This is different from a private integration, where the backend endpoints are deployed in a VPC and are not publicly accessible themselves, but are exposed publicly through the API.

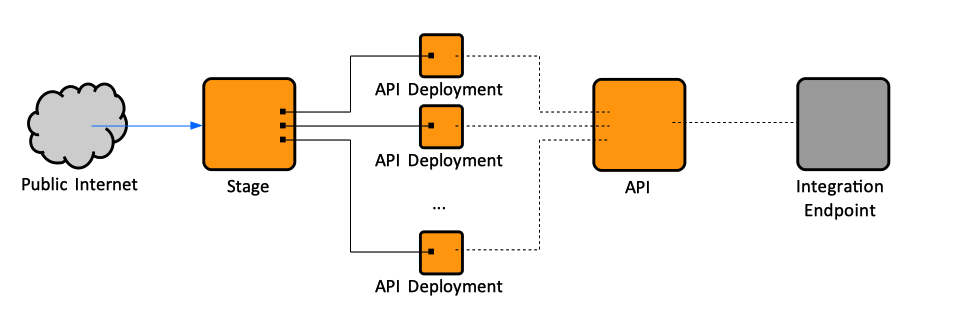

API Deployment

An API Deployment is a point-in-time snapshot of an API, encapsulating the resources, methods and other API elements as they exist in the API at the moment of the deployment. Clients cannot use an API deployment directly: it must be associated with, and exposed through, a stage. Conceptually, a deployment is like an executable of an API: once a RestApi is created, it must be deployed and associated with a stage to become callable by its users. API deployments cannot be viewed directly in the API Gateway console; they are accessible through a stage. They can, however, be listed with the AWS CLI: aws apigateway get-deployments --rest-api-id <restapi-id>.

Every time an API is modified in such a way that one of its methods, integrations, routes, authorizers, or anything else other than stage settings changes, the API must be redeployed, into an existing stage or a new one. Resource updates require redeploying the API, whereas configuration updates do not.

Stage

A stage is a named reference to a deployment. It is named "stage" because it is intended to be a logical reference to a lifecycle state of the REST API, such as 'dev', 'prod', 'beta' or 'v2', as the API evolves. As such, stages allow version control of the API. API stages are identified by API ID and stage name. The stage makes the API available for client applications to call: an API must be deployed in a stage to be accessible to clients. The deployment snapshot the stage refers to includes methods, integrations, models, mapping templates, Lambda authorizers, etc.

Various aspects of the deployment can be configured at the stage level:

- API caching

- Method throttling

- Integration with Web Application Firewall (WAF)

- Client certificates that API Gateway uses to authenticate itself to the integration endpoints it calls.

- Logging

- X-Ray integration

- SDK generation

- Canary deployments

The stage also allows access to deployment history and documentation history.

Internally, an API stage is represented by a Stage resource.

Stage Name

The stage name is part of the API URL.

Current Deployment

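The deployment a stage currently points to is the current deployment for that stage. Deploying the API to a stage creates a new deployment and makes it the current deployment; the stage can also be pointed back to an earlier deployment, which rolls the stage back without redeploying.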
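The relationship between a stage and its deployments can be sketched as a named pointer plus a history. This is a hypothetical in-memory model, not an API Gateway API: the Stage class, its methods and the deployment IDs are invented for illustration, and restricting rollback to the stage's own history is a simplification of this sketch.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Stage:
    """Hypothetical model: a stage is a named pointer to one of the API's deployments."""
    name: str
    history: List[str] = field(default_factory=list)  # deployment IDs, oldest first
    current: Optional[str] = None

    def deploy(self, deployment_id: str) -> None:
        # Deploying the API to this stage records the new snapshot and makes it current.
        self.history.append(deployment_id)
        self.current = deployment_id

    def rollback(self, deployment_id: str) -> None:
        # Rolling back only moves the pointer; no new deployment is created.
        if deployment_id not in self.history:
            raise ValueError(f"deployment {deployment_id!r} was never deployed to {self.name!r}")
        self.current = deployment_id


prod = Stage("prod")
prod.deploy("d1")
prod.deploy("d2")
prod.rollback("d1")
print(prod.current)  # d1
```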

Stage Variable

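Stage variables are name-value pairs that can be set in the Stage Editor and used in the API configuration to parameterize the integration of a request, for example the host name of an HTTP integration endpoint or the name of a Lambda function. Stage variables are also available in mapping templates, through the $stageVariables variable.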
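In an integration URI, a stage variable is referenced as ${stageVariables.<name>}, so the same API can call a different backend in each stage. The sketch below performs that substitution locally to show the idea; the function name, the example variable backendHost and the choice to raise on an undefined variable are assumptions of the sketch, not documented API Gateway behavior.

```python
import re

# ${stageVariables.<name>}; names limited to letters, digits and underscores in this sketch.
_STAGE_VARIABLE = re.compile(r"\$\{stageVariables\.([A-Za-z0-9_]+)\}")


def resolve_stage_variables(template: str, stage_variables: dict) -> str:
    """Substitute stage variable references in an integration URI (sketch)."""
    def substitute(match):
        name = match.group(1)
        if name not in stage_variables:
            # Assumption: the sketch fails loudly instead of guessing API Gateway's behavior.
            raise KeyError(f"stage variable {name!r} is not defined")
        return stage_variables[name]

    return _STAGE_VARIABLE.sub(substitute, template)


uri = "https://${stageVariables.backendHost}/pets"
print(resolve_stage_variables(uri, {"backendHost": "dev.example.com"}))
```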

Stage Tags

Stage Operations

Amazon API Gateway URL

The URL consists of a protocol (HTTPS, or WSS for WebSocket APIs), a hostname, a stage name and, for REST APIs, the resource path. This URL is also known as the "Invoke URL".

https://<restapi-id>.execute-api.<region>.amazonaws.com/<stage-name>/<resource-path>

The URL for a specific deployment, exposed in a certain stage can be obtained by navigating to the stage in the console: API Gateway Console -> APIs -> <API Name> -> Stages -> <stage name>. The "Invoke URL" is displayed at the top of the "Stage Editor".

Amazon API Gateway Base URL

The hostname and the stage name determine the API's base URL:

https://<restapi-id>.execute-api.<region>.amazonaws.com/<stage-name>/

Amazon API Gateway Hostname

<restapi-id>.execute-api.<region>.amazonaws.com
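The three URL forms above nest inside each other: hostname, then base URL (hostname plus stage name), then invoke URL (base URL plus resource path). A small sketch that builds them from their parts; the function names and the example API ID are hypothetical.

```python
def execute_api_hostname(api_id: str, region: str) -> str:
    # Default hostname; it carries the API ID and the region, but no scheme.
    return f"{api_id}.execute-api.{region}.amazonaws.com"


def base_url(api_id: str, region: str, stage: str) -> str:
    # The hostname plus the stage name give the API's base URL.
    return f"https://{execute_api_hostname(api_id, region)}/{stage}/"


def invoke_url(api_id: str, region: str, stage: str, resource_path: str = "") -> str:
    # The resource path is appended to the base URL.
    return base_url(api_id, region, stage) + resource_path.lstrip("/")


print(invoke_url("a1b2c3d4e5", "us-east-1", "prod", "/pets/1"))
```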

Base Path

See Custom Domain Names below.

Integration

The integration represents the interface between API Gateway and a backend endpoint. A client uses an API to access backend features by sending method requests. API Gateway translates the client request, if necessary, into a format acceptable to the backend, creating an integration request, and forwards the integration request to the backend endpoint. The backend returns an integration response to API Gateway, which translates it into a method response and sends it to the client, mapping, if necessary, the backend response data to a form acceptable to the client. The API developer controls the API's frontend interactions by configuring method requests and method responses, and the API's backend interactions by setting up integration requests and integration responses. These may involve data mapping between a method and its corresponding integration.

Integration Endpoints and Types

Amazon API Gateway can integrate three types of backend endpoints, and can also simulate a mock integration endpoint:

- HTTP(S) endpoints, representing custom REST services or websites, can be integrated with HTTP proxy integration or HTTP custom integration.

- Lambda functions can be integrated with Lambda proxy integration and Lambda custom integration. The Lambda custom integration is a special case of AWS integration, where the integration endpoint corresponds to the function-invoking action of the Lambda service.

- AWS service endpoints, such as Amazon Kinesis or Amazon S3, can only be integrated with non-proxy (custom) integration.

- Mock backend endpoints, where API Gateway serves as the integration endpoint itself. See Mock Integration below.

The integration type is defined by how the API Gateway passes data to and from the integration endpoint:

Proxy Integration

In general, proxy integration implies a simple integration setup with a single HTTP endpoint or Lambda function, where the client request is passed to the backend as input with minimal or no processing, and the backend's result is passed directly back to the client. The request data that is passed through includes request headers, query string parameters, URL path variables and payload. API Gateway does not transform the request or the response, so the backend can evolve without requiring updates or reconfiguration of the integration in API Gateway. There is no need to set up the integration request or the integration response. For a Lambda proxy integration, the function receives the whole request as an event object and must return a response object containing the status code, headers and body, which API Gateway turns into the HTTP response. This is the preferred integration type for calling Lambda functions through API Gateway.

Proxy integration should be preferred if there are no specific needs to transform the client request for the backend or transform the backend response data for the client.
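With Lambda proxy integration, the function itself shapes the HTTP response. A minimal handler sketch, assuming a REST API and the event format API Gateway uses for REST proxy integrations (httpMethod, path and queryStringParameters in the event; statusCode, headers and body in the returned object). The greeting logic and the name parameter are hypothetical.

```python
import json


def lambda_handler(event, context):
    """Minimal Lambda proxy integration handler for a REST API (sketch)."""
    # API Gateway passes the whole request in the event; queryStringParameters may be None.
    params = event.get("queryStringParameters") or {}
    name = params.get("name", "world")
    payload = {
        "message": f"hello, {name}",
        "method": event.get("httpMethod"),
        "path": event.get("path"),
    }
    # With proxy integration, API Gateway maps this object directly to the HTTP response.
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps(payload),
    }
```

Calling it locally with a hand-made event, e.g. lambda_handler({"httpMethod": "GET", "path": "/hello", "queryStringParameters": {"name": "Ada"}}, None), returns a 200 response whose body is a JSON string.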

Proxy Resource

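A proxy resource is a resource whose last path part is the greedy path variable {proxy+}, so that a single resource, together with its ANY method, stands for an entire subtree of backend paths.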

{proxy+}

Proxy resources handle requests to all sub-resources using a greedy path parameter: {proxy+}. Creating a proxy resource also creates a special HTTP method called ANY.
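The greedy matching of {proxy+} can be illustrated with a small function that, for a proxy resource placed under a given parent path, returns what the proxy path parameter would capture. This is a simplified sketch of the matching idea, not API Gateway's routing code; the function name is hypothetical, and treating a request for the parent path itself as a non-match reflects the fact that {proxy+} needs at least one path segment.

```python
from typing import Optional


def match_proxy(parent_path: str, request_path: str) -> Optional[str]:
    """Value captured by {proxy+} in a resource <parent_path>/{proxy+}, or None if it does not match."""
    prefix = parent_path.rstrip("/") + "/"
    if not request_path.startswith(prefix):
        return None
    captured = request_path[len(prefix):]
    # Greedy: every remaining path segment is captured, slashes included;
    # a request for the parent resource itself captures nothing and does not match.
    return captured or None


print(match_proxy("/", "/pets/1/toys"))        # pets/1/toys
print(match_proxy("/store", "/store/orders/7"))  # orders/7
```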

ANY verb

The ANY method supports all valid HTTP verbs and forwards requests to a single HTTP endpoint or Lambda integration.

API of a Single Method

An API of a single method uses the {proxy+} proxy resource and the ANY verb. That single method exposes the entire set of publicly accessible HTTP resources and operations of a website. When the backend HTTP endpoint exposes more resources, clients can use them with the same API setup.

HTTP Proxy Integration Example
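One way to express an HTTP proxy integration is an OpenAPI (Swagger 2.0) definition with API Gateway extensions: a /{proxy+} path, an ANY method (x-amazon-apigateway-any-method), and an http_proxy integration whose backend URI forwards the captured proxy path. The sketch below builds such a definition as a Python dict; the backend URL and API title are hypothetical, and the definition is a minimal starting point rather than a complete import-ready API.

```python
import json


def http_proxy_openapi(title: str, backend_base_url: str) -> dict:
    """Swagger 2.0 definition of a single-method API proxying everything to one backend (sketch)."""
    backend = backend_base_url.rstrip("/")
    return {
        "swagger": "2.0",
        "info": {"title": title, "version": "1.0"},  # info.title becomes the API name
        "paths": {
            "/{proxy+}": {
                "x-amazon-apigateway-any-method": {
                    "parameters": [
                        {"name": "proxy", "in": "path", "required": True, "type": "string"}
                    ],
                    "x-amazon-apigateway-integration": {
                        "type": "http_proxy",
                        "httpMethod": "ANY",
                        # {proxy} in the backend URI receives the greedy path parameter.
                        "uri": f"{backend}/{{proxy}}",
                        "passthroughBehavior": "when_no_match",
                        "requestParameters": {
                            "integration.request.path.proxy": "method.request.path.proxy"
                        },
                    },
                }
            }
        },
    }


print(json.dumps(http_proxy_openapi("PetStoreProxy", "https://backend.example.com"), indent=2))
```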

Custom Integration

Custom integration implies a more elaborate setup procedure. For a custom integration, both the integration request and the integration response must be configured, and the necessary data mappings, from the method request to the integration request and from the integration response to the method response, must be put in place. Among other things, custom integration allows configured mapping templates to be reused for multiple integration endpoints that have similar input and output data format requirements. Since the setup is more involved, custom integration is recommended for more advanced application scenarios.
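In a custom integration, the mapping template applied to a request is selected by the request's Content-Type, and the integration's passthrough behavior (WHEN_NO_MATCH, WHEN_NO_TEMPLATES or NEVER) decides what happens when no template matches: pass the body through unchanged, or reject the request with 415 Unsupported Media Type. The sketch below models that decision as we understand the documented behavior; the function name and return tuples are hypothetical.

```python
def select_mapping(content_type: str, templates: dict, passthrough_behavior: str = "WHEN_NO_MATCH"):
    """Return ("template", t), ("passthrough", None) or ("reject", 415) for a request (sketch)."""
    if content_type in templates:
        # A template is defined for this content type: the request body is transformed.
        return ("template", templates[content_type])
    if passthrough_behavior == "WHEN_NO_MATCH":
        return ("passthrough", None)
    if passthrough_behavior == "WHEN_NO_TEMPLATES" and not templates:
        return ("passthrough", None)
    # NEVER, or WHEN_NO_TEMPLATES with templates defined but none matching.
    return ("reject", 415)
```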

Mock Integration

This integration type lets API Gateway return a response without sending the request further to the backend. It is useful for testing and enables collaborative development of an API, where a team can isolate their development effort by setting up simulations of the API components owned by other teams.

Configuring Integration Type

For details on how to configure a specific integration type, see below:

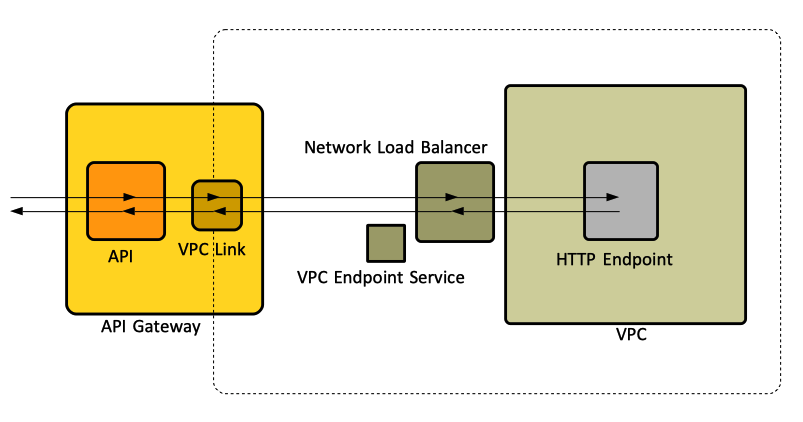

Private Integration

A private integration is an arrangement in which the backend endpoints are deployed in a VPC and are not publicly accessible. API Gateway exposes the endpoints' resources, or a subset of them, publicly through the API.

Integration Request

API Gateway translates, if necessary, a client method request into a format acceptable to the backend and creates an integration request. The integration request is forwarded to the backend endpoint. The integration request is part of the REST API's interface with the backend.

Integration Response

The backend returns an integration response to API Gateway, which in turn translates it into a method response and sends it to the client, mapping, if necessary, the backend response data to a form acceptable to the client. The integration response is part of the REST API's interface with the backend. Also see IntegrationResponse below.

API Gateway Resources

Amazon API Gateway Resources that Require Redeployment

Where are these resources living?

RestApi

A RestApi represents an Amazon API Gateway API.

Resource

A Resource contains methods.

Method

Method Throttling

Method throttling limits the rate of requests sent to the API. Throttling is configured at stage level and can be overridden for individual methods. The default account-level limits, per region, are 10,000 requests per second with a burst of 5,000 requests.
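API Gateway throttles requests with a token bucket: the steady-state rate refills the bucket and the burst is the bucket's capacity. The sketch below shows the algorithm on a single bucket with a rate of `rate` requests per second and a capacity of `burst`; the class is illustrative, not API Gateway's implementation, and the injectable clock exists only to make the behavior easy to test.

```python
import time


class TokenBucket:
    """Token-bucket throttling sketch: `rate` tokens/s refill, at most `burst` tokens stored."""

    def __init__(self, rate: float, burst: int, clock=time.monotonic):
        self.rate = rate
        self.burst = burst
        self.clock = clock
        self.tokens = float(burst)  # the bucket starts full
        self.last = clock()

    def allow(self) -> bool:
        now = self.clock()
        # Refill in proportion to the elapsed time, capped at the burst size.
        self.tokens = min(self.burst, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False  # a throttled request; API Gateway answers 429 Too Many Requests


# Default account-level values from the text above.
bucket = TokenBucket(rate=10_000, burst=5_000)
```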

RequestValidator

MethodResponse

Integration

The integration type can be specified programmatically by setting the type property of the Integration resource, as follows:

- AWS: exposes an AWS service action, including Lambda, as an integration endpoint, via custom integration.

- AWS_PROXY: exposes a Lambda function (but no other AWS service) as an integration endpoint, via proxy integration.

- HTTP: exposes an HTTP(S) endpoint as an integration endpoint, via custom integration.

- HTTP_PROXY: exposes an HTTP(S) endpoint as an integration endpoint, via proxy integration.

- MOCK: sets up a mock integration endpoint.

Also see above:

IntegrationResponse

Also see Integration Response above.

GatewayResponse

DocumentationPart

DocumentationVersion

Model

ApiKey

See API Key below.

Authorizer

VpcLink

Amazon API Gateway Resources that Require Configuration Changes without Redeployment

Account

Deployment (API Deployment)

See API Deployment above.

DomainName

BasePathMapping

Stage

See Stage above.

Usage

UsagePlan

API Gateway Link Relations

API Documentation

See DocumentationPart and DocumentationVersion above.

Logging

Logging can be configured at stage level. CloudWatch execution logs, detailed CloudWatch metrics and access logging can be enabled in the Stage Editor.

X-Ray Integration

API request latency issues can be troubleshot by enabling AWS X-Ray, which traces API requests through API Gateway and the downstream services. X-Ray tracing can be configured at stage level, in the Stage Editor.

CORS

The API Gateway console implements CORS support with an OPTIONS method backed by a mock integration. If CORS is enabled for a resource, API Gateway answers the preflight request itself, without calling the backend. The OPTIONS method is set up with a basic CORS configuration allowing all origins, all methods and several common headers.
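The preflight answer is simply a response carrying the Access-Control-Allow-* headers. The sketch below builds such a response as a dict in the Lambda proxy response shape, which is also how the same headers could be returned from a Lambda-backed OPTIONS method. The function name is hypothetical, and the default header list is assumed to mirror the console's basic configuration.

```python
# Header list assumed to mirror the console's basic CORS configuration.
DEFAULT_ALLOW_HEADERS = "Content-Type,X-Amz-Date,Authorization,X-Api-Key,X-Amz-Security-Token"


def preflight_response(allowed_methods, allow_origin="*", allow_headers=DEFAULT_ALLOW_HEADERS):
    """CORS preflight (OPTIONS) response, in the Lambda proxy response shape (sketch)."""
    # OPTIONS itself is always listed so that preflight requests are allowed.
    methods = sorted(set(allowed_methods) | {"OPTIONS"})
    return {
        "statusCode": 200,
        "headers": {
            "Access-Control-Allow-Origin": allow_origin,
            "Access-Control-Allow-Methods": ",".join(methods),
            "Access-Control-Allow-Headers": allow_headers,
        },
        "body": "",
    }
```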

Also see:

Security

Creation, Configuration and Deployment

To create, configure and deploy an API in API Gateway, the IAM user doing it must have an IAM policy attached that grants access permissions for manipulating the API Gateway resources and link relations. The "AmazonAPIGatewayAdministrator" AWS-managed policy grants full access to create, configure and deploy an API in API Gateway:

arn:aws:iam::aws:policy/AmazonAPIGatewayAdministrator

Attaching the preceding policy to an IAM user allows ("Effect":"Allow") the user to perform any API Gateway action ("Action":["apigateway:*"]) on any API Gateway resource (arn:aws:apigateway:*::/*) associated with the user's AWS account. To refine the permissions, see Amazon API Gateway Developer Guide Page 241 "Control Access to an API with IAM Permissions".
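Written out as a policy document, the Effect, Action and Resource described above look like the first policy below; the second is a hypothetical narrower variant that limits the same actions to one REST API and its sub-resources in one region. The function name, region and API ID are assumptions for illustration, and the managed policy's full text is not reproduced here.

```python
import json


def apigateway_admin_policy(resources="arn:aws:apigateway:*::/*") -> dict:
    """IAM policy allowing every API Gateway management action on the given resources."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {"Effect": "Allow", "Action": ["apigateway:*"], "Resource": resources}
        ],
    }


# Effect, Action and Resource as described for the managed policy.
full_access = apigateway_admin_policy()

# Hypothetical narrower variant: one REST API (and its sub-resources) in one region.
one_api = apigateway_admin_policy([
    "arn:aws:apigateway:us-east-1::/restapis/a1b2c3d4e5",
    "arn:aws:apigateway:us-east-1::/restapis/a1b2c3d4e5/*",
])
print(json.dumps(one_api, indent=2))
```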

Access

The following AWS-managed policy grants full access to invoke APIs deployed in API Gateway:

arn:aws:iam::aws:policy/AmazonAPIGatewayInvokeFullAccess

To refine the permissions, see Amazon API Gateway Developer Guide Page 241 "Control Access to an API with IAM Permissions".

When an API Gateway API is set up with IAM roles and policies to control client access, the client must sign API requests with Signature Version 4. Alternatively, AWS CLI or one of the AWS SDKs can be used to transparently handle request signing. For more details, see Amazon API Gateway Developer Guide Page 462 "Invoking a REST API in Amazon API Gateway".
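Part of Signature Version 4 is deriving a signing key from the secret access key through a chain of HMAC-SHA256 operations over the date, region, service name and the literal "aws4_request"; the service name for invoking API Gateway APIs is execute-api. The sketch below shows only that derivation and the final signature step. Building the canonical request and the string to sign is omitted, which is why the AWS CLI or an SDK is normally used instead; the function names are hypothetical.

```python
import hashlib
import hmac


def _hmac(key: bytes, msg: str) -> bytes:
    return hmac.new(key, msg.encode("utf-8"), hashlib.sha256).digest()


def sigv4_signing_key(secret_key: str, date_stamp: str, region: str,
                      service: str = "execute-api") -> bytes:
    """Derive the SigV4 signing key; date_stamp is the request date as YYYYMMDD."""
    k_date = _hmac(("AWS4" + secret_key).encode("utf-8"), date_stamp)
    k_region = _hmac(k_date, region)
    k_service = _hmac(k_region, service)
    return _hmac(k_service, "aws4_request")


def sigv4_signature(signing_key: bytes, string_to_sign: str) -> str:
    """Hex-encoded HMAC-SHA256 of the string to sign (built elsewhere)."""
    return hmac.new(signing_key, string_to_sign.encode("utf-8"), hashlib.sha256).hexdigest()
```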

AWS Endpoint Authentication

When API Gateway is integrated with AWS Lambda or another AWS service, such as Amazon S3 or Amazon Kinesis, the API Gateway must be enabled as a trusted entity to invoke an AWS service in the backend. This is achieved by creating an IAM role and attaching a service-specific access policy to the role. Without specifying this trust relationship, API Gateway is denied the right to call the backend on behalf of the user, even when the user has been granted permissions to access the backend directly. More details in Amazon API Gateway Developer Guide page 525.
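The trust relationship and the service-specific access policy are two separate policy documents. The sketch below builds both: a trust policy that lets the apigateway.amazonaws.com service principal assume the role, and an access policy allowing the role to invoke one Lambda function. The function names and the Lambda function ARN are hypothetical; for S3 or Kinesis the access policy would list the corresponding service actions instead.

```python
import json


def apigateway_trust_policy() -> dict:
    """Trust policy letting API Gateway assume the role (sts:AssumeRole)."""
    return {
        "Version": "2012-10-17",
        "Statement": [{
            "Effect": "Allow",
            "Principal": {"Service": "apigateway.amazonaws.com"},
            "Action": "sts:AssumeRole",
        }],
    }


def invoke_lambda_policy(function_arn: str) -> dict:
    """Service-specific access policy: allow invoking one Lambda function."""
    return {
        "Version": "2012-10-17",
        "Statement": [{
            "Effect": "Allow",
            "Action": "lambda:InvokeFunction",
            "Resource": function_arn,
        }],
    }


fn = "arn:aws:lambda:us-east-1:123456789012:function:my-function"  # hypothetical
print(json.dumps(apigateway_trust_policy(), indent=2))
print(json.dumps(invoke_lambda_policy(fn), indent=2))
```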

Signature Version 4

Per-Method Authorization

API Key

See ApiKey above.

Integration with Web Application Firewall (WAF)

An API deployment can be integrated with Web Application Firewall (WAF) by configuring its stage. Web ACLs can be created from the Stage Editor.

Client Certificates

API Gateway uses client certificates to authenticate itself to the integration endpoints it calls, so that the backend can verify that requests originate from API Gateway. The client certificates are managed at stage level.

API Caching

API caching can be enabled for a specific deployment by configuring its stage.

SDK Generation

SDK generation can be initiated at stage level, in the Stage Editor.

API Export

API Export can be initiated at stage level, in the Stage Editor. The API can be exported as:

- Swagger (OpenAPI 2.0) (including with API Gateway Extensions and Postman Extensions), as JSON or YAML.

- OpenAPI 3.0 (including with API Gateway Extensions and Postman Extensions), as JSON or YAML.

Canary Deployment

A canary deployment is used to test new API deployments and/or changes to stage variables. A canary receives a configurable percentage of the requests going to the stage, while the remaining requests go to the stage's current deployment. While a canary is enabled, new API deployments are made to the canary first, and can then be promoted to the entire stage.
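The traffic split can be pictured as an independent random choice per request, weighted by the canary percentage (configured in the stage's canary settings as percentTraffic). The sketch below models that split; the function name is hypothetical, and the per-request random draw is an assumption of the sketch, since the exact routing mechanism is internal to API Gateway.

```python
import random


def route(percent_traffic: float, rng=random.random) -> str:
    """Send roughly percent_traffic % of requests to the canary, the rest to the stage."""
    if not 0.0 <= percent_traffic <= 100.0:
        raise ValueError("percent_traffic must be between 0 and 100")
    # rng() is uniform in [0, 1); scaled to [0, 100), it is compared with the percentage.
    return "canary" if rng() * 100.0 < percent_traffic else "stage"


random.seed(1)
share = sum(route(10.0) == "canary" for _ in range(10_000)) / 10_000
print(f"canary share: {share:.3f}")  # close to 0.1
```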

VPC Link

A VPC link enables access to a private resource available in a VPC, without requiring that resource to be publicly accessible. It requires a VpcLink API Gateway resource to be created. The VpcLink targets a network load balancer in the VPC, which in turn should be configured to forward the requests to the final endpoint running in the VPC. The network load balancer must be created in advance, as shown here:

The API method is then integrated with a private integration that uses the VpcLink. The private integration has an integration type of HTTP or HTTP_PROXY and has a connection type of VPC_LINK. The integration uses the connectionId property to identify the VpcLink used.
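The private integration settings described above map onto the parameters of the PutIntegration operation. The sketch below assembles them as keyword arguments for the boto3 API Gateway client's put_integration call, without calling AWS; the helper name, the IDs and the load balancer DNS name are hypothetical, and HTTP_PROXY is used here although HTTP is equally valid.

```python
def private_integration_params(rest_api_id: str, resource_id: str, http_method: str,
                               vpc_link_id: str, nlb_dns_name: str, path: str = "/") -> dict:
    """Keyword arguments for boto3's apigateway put_integration (private integration sketch)."""
    return {
        "restApiId": rest_api_id,
        "resourceId": resource_id,
        "httpMethod": http_method,
        "type": "HTTP_PROXY",                 # private integrations use HTTP or HTTP_PROXY
        "integrationHttpMethod": http_method,
        "uri": f"http://{nlb_dns_name}{path}",
        "connectionType": "VPC_LINK",
        "connectionId": vpc_link_id,          # ID of the VpcLink resource
    }


params = private_integration_params(
    "a1b2c3d4e5", "abc123", "GET", "vl1234", "my-nlb-0123456789.elb.us-east-1.amazonaws.com")
# With boto3 installed and credentials configured, the call itself would be:
#   import boto3
#   boto3.client("apigateway").put_integration(**params)
```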

VPC Link Operations

Custom Domain Names

An API's base URL contains a randomly generated API ID (not necessarily user-friendly), "execute-api", the region and the stage name. To make the base URL more user-friendly, a custom domain name can be created and mapped to the API. Moreover, to allow for API evolution and to support multiple API versions within the context of a custom domain name, an API stage can be mapped onto a base path under the custom domain name, so the base URL of the REST API becomes:

https://custom-domain-name/base-path/
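With base path mappings, the first path segment of a request to the custom domain name selects an API and stage, and the stage name no longer appears in the URL. The sketch below resolves a request path against a table of mappings; the function name, API IDs and the use of "" for an empty base path are assumptions, and only single-segment base paths are modeled.

```python
from typing import Dict, Optional, Tuple


def resolve_base_path(path: str,
                      mappings: Dict[str, Tuple[str, str]]) -> Optional[Tuple[str, str, str]]:
    """Map a custom-domain request path to (api_id, stage, resource_path).

    mappings: base path -> (api_id, stage); the key "" stands for an empty base path.
    """
    first, _, rest = path.lstrip("/").partition("/")
    if first in mappings:
        api_id, stage = mappings[first]
        return api_id, stage, "/" + rest
    if "" in mappings:
        # Empty base path: the whole request path is the resource path.
        api_id, stage = mappings[""]
        return api_id, stage, "/" + path.lstrip("/")
    return None


versions = {"v1": ("a1b2c3d4e5", "prod"), "v2": ("f6g7h8i9j0", "prod")}
print(resolve_base_path("/v1/pets/1", versions))
```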