KVM Virtual Networking Concepts


=External=

* [https://access.redhat.com/documentation/en-US/Red_Hat_Enterprise_Linux/7/html/Virtualization_Deployment_and_Administration_Guide/chap-Virtual_Networking.html RHEL7 Virtualization Administration - Virtual Networking]
* https://access.redhat.com/documentation/en-US/Red_Hat_Enterprise_Linux/7/html/Virtualization_Deployment_and_Administration_Guide/chap-Guest_virtual_machine_device_configuration.html
* https://access.redhat.com/documentation/en-US/Red_Hat_Enterprise_Linux/7/html/Virtualization_Deployment_and_Administration_Guide/sect-Managing_guest_virtual_machines_with_virsh-Interface_Commands.html
* https://wiki.libvirt.org/page/Libvirtd_and_dnsmasq
* https://wiki.libvirt.org/page/Networking
* https://wiki.libvirt.org/page/VirtualNetworking
* Configuration reference: https://libvirt.org/formatdomain.html#elementsNICS
* Configuration reference: https://libvirt.org/formatnetwork.html

=Internal=

* [[Linux_Virtualization_Concepts#Networking_and_KVM_Virtualization|Linux Virtualization Concepts]]
* [[Linux_Virtualization_Operations#Virtualization_Host_Network_Operations|libvirt Network Operations]]

=Overview= | =Overview= | ||

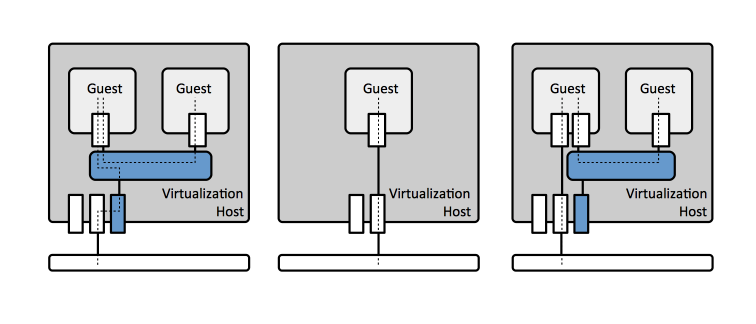

This article discusses concepts related to virtual networking with [[Linux Virtualization Concepts#libvirt|libvirt]]. | This article discusses concepts related to virtual networking with [[Linux Virtualization Concepts#libvirt|libvirt]]. [[Linux_Virtualization_Concepts#libvirtd|libvirtd]] daemon is the main server-side libvirt virtualization management system component. Configuring and manipulating networking is one of its responsibilities. Guests can be connected to the network via a [[#Virtual_Network|virtual network]], or [[#Guests_Attaches_Directly_to_a_Virtualization_Host_Network_Device|directly to a virtualization host network device]]. The virtual network setup is appropriate for the case where there is significant network communication among cooperating guests. If a guest is primarily intended to serve external traffic, a setup where [[#Guests_Attaches_Directly_to_a_Virtualization_Host_Network_Device|the guest is connected directly to a publicly exposed virtualization host network interface]] is more appropriate. A combination of these options is also possible, where a guest has a network interface attached to a virtual network and a different network interface attached to a virtualization host network device. | ||

::[[Image:libvirtNetworking.png]] | |||

=<span id='Virtual_Network_Switch'></span><span id='The_Default_Networking_Configuration'></span>Virtual Network= | |||

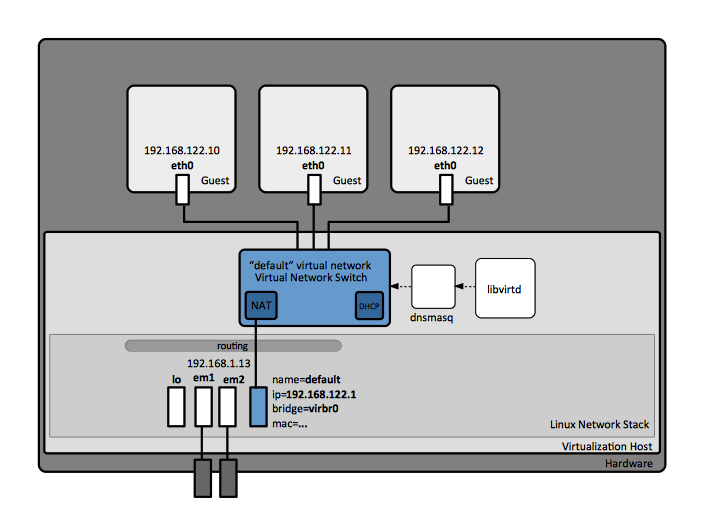

[[Linux Virtualization Concepts#libvirt|libvirt]] implements virtual networking using a ''virtual network switch'', which is logically equivalent with a ''virtual network''. A virtual network switch is a software component that runs on the virtualization host, which guests virtual machines "plug in" to, and direct their traffic through. The traffic between guests attached to a specific virtual switch stays within the confines of the associated virtual network. From a guest's operating system point of view, a virtual network connection is the same as a normal physical network connection. | |||

< | ::[[Image:libvirtVirtualNetworkNAT.png]] | ||

On the virtualization host server, the virtual network switch shows up as a network interface, conventionally named '''virbr0'''. | |||

<syntaxhighlight lang='bash'>
# ip addr
...
7: virbr0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP qlen 1000
    link/ether 52:54:00:15:ef:87 brd ff:ff:ff:ff:ff:ff
    inet 192.168.122.1/24 brd 192.168.122.255 scope global virbr0
       valid_lft forever preferred_lft forever
...
</syntaxhighlight>

In the default configuration, which implies [[#NAT_Mode|NAT mode]], this network interface explicitly does '''NOT''' have any physical interfaces attached to it, because it uses NAT and forwarding to connect to the outside world. This can be verified by running [[brctl#show|brctl show]] on the virtualization host. By default, the interface address, which is also the guests' gateway, is 192.168.122.1. Routing to a physical network interface is handled by the virtualization host's networking layer. [[Linux_Virtualization_Concepts#libvirt|libvirt]] adds iptables rules to allow traffic to and from the '''virbr0''' interface. It also enables [[IP Forwarding#Overview|IP forwarding]], which is required for routing.

The virtual network configuration can be displayed with [[Libvirt_Virtual_Network_Info_Operation#List_Configuration_of_a_Virtual_Network|virsh net-dumpxml]]:

<syntaxhighlight lang='bash'>
virsh net-dumpxml default
</syntaxhighlight>

<syntaxhighlight lang='xml'>
<network connections='6'>
  <name>default</name>
  <uuid>49a07631-ea20-4741-89ea-b0faa7b42d19</uuid>
  <forward mode='nat'>
    <nat>
      <port start='1024' end='65535'/>
    </nat>
  </forward>
  <bridge name='virbr0' stp='on' delay='0'/>
  <mac address='52:54:00:15:ef:87'/>
  <ip address='192.168.122.1' netmask='255.255.255.0'>
    <dhcp>
      <range start='192.168.122.100' end='192.168.122.254'/>
    </dhcp>
  </ip>
</network>
</syntaxhighlight>

<span id='The_DNS_and_DHCP_Server'></span>The virtual switch is started and managed by the [[Linux_Virtualization_Concepts#libvirtd|libvirtd]] daemon, which also starts a [[dnsmasq]] process for it. A separate dnsmasq instance is started for each virtual switch and its associated virtual network. Only guests in that specific network can access it. The [[dnsmasq]] instance acts as the DNS and DHCP server for the virtual network. The configuration file for such a dnsmasq instance is /var/lib/libvirt/dnsmasq/''<virtual-network-name>''.conf. By default, there is just one virtual network, named "default", and its dnsmasq configuration file is /var/lib/libvirt/dnsmasq/default.conf:

<syntaxhighlight lang='bash'>
strict-order
pid-file=/var/run/libvirt/network/default.pid
except-interface=lo
bind-dynamic
interface=virbr0
dhcp-range=192.168.122.100,192.168.122.254
dhcp-no-override
dhcp-authoritative
dhcp-lease-max=155
dhcp-hostsfile=/var/lib/libvirt/dnsmasq/default.hostsfile
addn-hosts=/var/lib/libvirt/dnsmasq/default.addnhosts
</syntaxhighlight>

These configuration files are generated by libvirt and should not be edited manually. Instead, change the virtual network definition with:

[[virsh net-edit|virsh net-edit]] <''virtual-network-name''>
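For example, a static DHCP reservation could be added to the default network by running <code>virsh net-edit default</code> and adding a <code>host</code> entry inside the existing <code>dhcp</code> element. This is a minimal sketch. The MAC address, host name and IP address are hypothetical placeholders. The IP address is chosen inside the 192.168.122.0/24 subnet but outside the dynamic DHCP range. libvirt writes such entries to the dnsmasq host file. The edited definition is generally applied the next time the network is restarted.

<syntaxhighlight lang='xml'>
<ip address='192.168.122.1' netmask='255.255.255.0'>
  <dhcp>
    <range start='192.168.122.100' end='192.168.122.254'/>
    <!-- hypothetical reservation: MAC, name and IP are placeholders -->
    <host mac='52:54:00:aa:bb:cc' name='guest1' ip='192.168.122.10'/>
  </dhcp>
</ip>
</syntaxhighlight>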

<font color=darkgray>The virtual network can be restricted to a specific physical network interface. This may be useful on virtualization hosts that have several physical interfaces, and it only matters for [[#NAT_Mode|NAT]] and [[#Routed_Mode|routed]] modes, described below. This behavior can be defined [[Libvirt_Virtual_Network_Creation_Operation#Overview|when the virtual network is created]], by specifying "dev=<''interface''>".</font>
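Below is a minimal sketch of such a restriction in a network definition. It assumes a host interface named eth1. The network name, bridge name and addresses are hypothetical. The restriction is expressed with the <code>dev</code> attribute of the <code>forward</code> element.

<syntaxhighlight lang='xml'>
<network>
  <name>nat-eth1</name>
  <!-- NAT forwarding limited to the hypothetical host interface eth1 -->
  <forward mode='nat' dev='eth1'/>
  <bridge name='virbr1' stp='on' delay='0'/>
  <ip address='192.168.150.1' netmask='255.255.255.0'>
    <dhcp>
      <range start='192.168.150.100' end='192.168.150.254'/>
    </dhcp>
  </ip>
</network>
</syntaxhighlight>

If saved to a file, a definition like this could be loaded with <code>virsh net-define</code> followed by the file name, and then started with <code>virsh net-start nat-eth1</code>.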

==Virtual Network Modes==

The virtual network switch can operate in one of several ''modes'', described below. The default mode is [[#NAT_Mode|NAT mode]].

* [[#NAT_Mode|NAT mode]]
* [[#Routed_Mode|Routed mode]]
* [[#Bridged_Mode|Bridged mode]]
* [[#Isolated_Mode|Isolated mode]]

===NAT Mode===

NAT mode is the mode in which the [[#Virtual_Network_Switch|libvirt virtual network switch]] operates by default, without additional configuration.

::[[Image:libvirtVirtualNetworkNAT.png]]

All guests have direct connectivity to each other and to the virtualization host. [[#libvirt_Network_Filtering|libvirt network filtering]] and guest operating system iptables rules apply. The guests can access external networks through [[Network Address Translation (NAT)|network address translation]], subject to the host system's firewall rules.

The network address translation function is implemented as [[Network_Address_Translation_(NAT)#IP_Masquerading|IP masquerading]], using [[Iptables_Concepts#nat|iptables]] rules. <font color=darkgray>Connected guests use the virtualization host's IP address for communication with external networks. The virtualization host IP address may itself be unroutable, if the virtualization host sits in its own NAT domain.</font> By default, without additional configuration, external hosts cannot initiate connections to the guest virtual machines. If guests need to handle incoming connections, a libvirt "hook" script for qemu can be set up to install the iptables rules that forward those connections to the guests. More details: https://wiki.libvirt.org/page/Networking#Forwarding_Incoming_Connections

===Routed Mode===

The [[#Virtual_Network_Switch|libvirt virtual network switch]] can be configured to run in ''routed mode''. In routed mode, the switch connects to the physical LAN the virtualization host is attached to, without the intermediation of a NAT module. All the guest virtual machines are in the same subnet, routed through the virtual switch, and each guest has its own public IP address. External traffic can reach the guests only if additional routing entries are added on the physical network, so that traffic destined for the guest subnet is sent to the virtualization host. Routed mode operates at Layer 3 of the OSI networking model.
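A routed network could be defined along these lines. This is a sketch that assumes a host uplink interface named eth1 and a hypothetical guest subnet, 192.168.200.0/24. The main difference from the NAT definition is <code>mode='route'</code> on the <code>forward</code> element. For external hosts to reach the guests, the LAN router would also need a route to 192.168.200.0/24 via the virtualization host's address. That route is configured outside libvirt.

<syntaxhighlight lang='xml'>
<network>
  <name>routed</name>
  <!-- route guest traffic, without NAT, through the hypothetical host interface eth1 -->
  <forward mode='route' dev='eth1'/>
  <bridge name='virbr2' stp='on' delay='0'/>
  <!-- hypothetical guest subnet; the LAN router needs a route to it via the host -->
  <ip address='192.168.200.1' netmask='255.255.255.0'>
    <dhcp>
      <range start='192.168.200.100' end='192.168.200.254'/>
    </dhcp>
  </ip>
</network>
</syntaxhighlight>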

<font color=darkgray>

Use Cases:

* Implementation of a DMZ: https://access.redhat.com/documentation/en-US/Red_Hat_Enterprise_Linux/7/html/Virtualization_Deployment_and_Administration_Guide/sect-Virtual_Networking-Examples_of_common_scenarios.html#sect-Examples_of_common_scenarios-Routed_mode
* Virtual Server Hosting: https://access.redhat.com/documentation/en-US/Red_Hat_Enterprise_Linux/7/html/Virtualization_Deployment_and_Administration_Guide/sect-Virtual_Networking-Examples_of_common_scenarios.html#sect-Examples_of_common_scenarios-Routed_mode

</font>

===Bridged Mode===

In ''bridged mode'', the guests are connected to a bridge device that is also connected directly to a physical Ethernet device attached to the local network. This makes the guests directly visible on the physical network, as part of the same subnet as the virtualization host. It therefore enables incoming connections without requiring any extra routing table entries. All other physical machines on the same physical network are aware of the virtual machines at the MAC address level and can access them. Bridging operates at Layer 2 of the OSI networking model. <font color=darkgray>Bridged mode does not seem to imply a virtual network of any kind, and seems to be similar to [[#Guests_Attaches_Directly_to_a_Virtualization_Host_Network_Device|Guests Attach Directly to a Virtualization Host Network Device]], described below.</font>

It is possible to use multiple physical network interfaces on the virtualization host by joining them together in a ''bond''. The bond is then added to the bridge. For more details, see [[Linux Network Bonding|network bonding]].

Use cases for bridged mode:

* When the guest virtual machines need to be deployed in an existing network alongside virtualization hosts and other physical machines, and should appear no different to the end user.
* When the guests need to be deployed without making any changes to the existing physical network configuration settings.
* When the guests must be easily accessible from an existing physical network and must use already deployed services.
* When the guests must be connected to an existing network where VLANs are used.
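In the guest definition, bridged mode is typically expressed as an interface of type <code>bridge</code> that names an existing host bridge. This sketch assumes the bridge is called br0 and has already been created on the virtualization host, with the physical interface (or bond) added to it. In this configuration libvirt attaches the guest to the bridge but does not create the bridge itself. The <code>virtio</code> model is a common choice, not a requirement.

<syntaxhighlight lang='xml'>
<devices>
  ...
  <!-- attach the guest to an existing host bridge (hypothetical name br0) -->
  <interface type='bridge'>
    <source bridge='br0'/>
    <model type='virtio'/>
  </interface>
  ...
</devices>
</syntaxhighlight>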

===Isolated Mode===

If the [[#Virtual_Network_Switch|libvirt virtual network switch]] is configured to run in ''isolated mode'', the guest virtual machines can communicate with each other and with the virtualization host. However, their traffic does not pass outside the virtualization host, and they cannot receive external traffic. The guests still receive their addresses via DHCP from [[#The_DNS_and_DHCP_Server|dnsmasq]] and send their DNS queries to the same [[#The_DNS_and_DHCP_Server|dnsmasq]] instance. That instance may resolve external names, but the resolved IP addresses will not be reachable.
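An isolated network is defined by omitting the <code>forward</code> element from the network definition. Below is a minimal sketch in which the network name, bridge name and addresses are hypothetical. dnsmasq still provides DHCP and DNS on the bridge, as described above.

<syntaxhighlight lang='xml'>
<network>
  <name>isolated</name>
  <!-- no forward element: guest traffic stays on the virtualization host -->
  <bridge name='virbr3' stp='on' delay='0'/>
  <ip address='192.168.100.1' netmask='255.255.255.0'>
    <dhcp>
      <range start='192.168.100.100' end='192.168.100.254'/>
    </dhcp>
  </ip>
</network>
</syntaxhighlight>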

==Guest Configuration for Virtual Network Mode==

<syntaxhighlight lang='xml'>
<devices>
  ...
  <interface type='network'>
    <source network='default'/>
  </interface>
  ...
</devices>
</syntaxhighlight>

=Guests Attaches Directly to a Virtualization Host Network Device= | |||

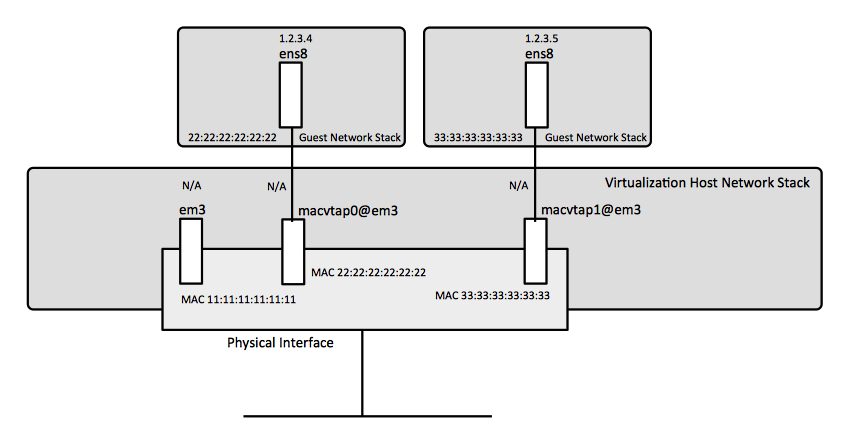

The guest's network interface can be attached directly to a physical network interface on the virtualization host. This can be achieved either though a [[#macvtap_Driver|macvtap driver]], or with [[#PCI_Device_Assignment|device assignment (passthrough)]]. <font color=darkgray>Isn't this [[#Bridged_Mode|Bridged Mode]] described above?</font> | |||

==<span id='Directly_Attaching_a_Guest_Virtual_Machine_to_a_Physical_Network_Interface'></span>macvtap Driver==

{{External|https://access.redhat.com/documentation/en-us/red_hat_enterprise_linux/7/html/virtualization_deployment_and_administration_guide/sect-virtual_networking-directly_attaching_to_physical_interface}}

A macvtap driver is a network interface device driver that makes the underlying physical network device appear as one or more character devices that the hypervisor can use directly. Each macvtap endpoint has its own MAC address on the same Ethernet segment as the underlying network device. Thus, the guest's NIC can be attached directly to a specified physical interface of the host machine. This is typically used to make both the guest and the virtualization host show up directly on the switch the host is connected to.

More than one guest can attach to the ''same'' physical interface on the virtualization host, and the guest-level network interfaces created as a result of the binding ''may be configured independently with different IP addresses''.

macvtap drivers should not be confused with [[#PCI_Device_Assignment|PCI device assignment]].

:[[Image:macvtap.png]]
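In the guest definition, a macvtap attachment is expressed as an interface of type <code>direct</code>. This sketch assumes a host interface named eth0. The <code>mode</code> attribute selects one of the macvtap driver modes. libvirt's domain XML documentation describes <code>vepa</code> as the default when the attribute is omitted.

<syntaxhighlight lang='xml'>
<devices>
  ...
  <!-- macvtap endpoint on the hypothetical host interface eth0;
       mode may be 'vepa', 'bridge', 'private' or 'passthrough' -->
  <interface type='direct'>
    <source dev='eth0' mode='bridge'/>
    <model type='virtio'/>
  </interface>
  ...
</devices>
</syntaxhighlight>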

===macvtap Driver Modes=== | |||

Macvtap connection has the following modes, each with different benefits and usecases: | |||

====VEPA==== | |||

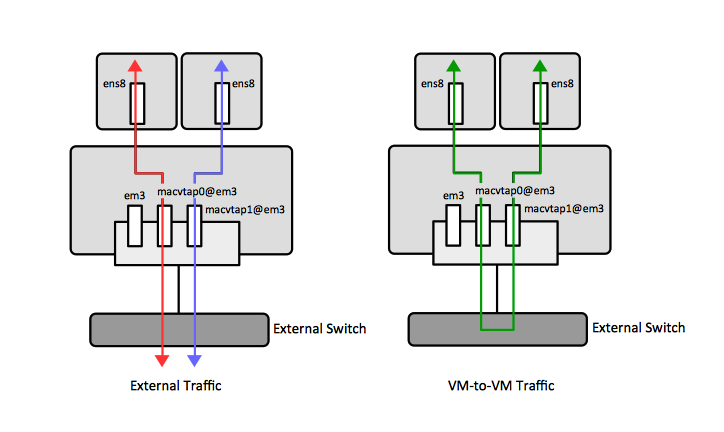

Virtual Ethernet Port Aggregator (VEPA) is mode in which packets from the guest are sent to the external switch. The guest traffic is thus forced through the switch. For VEPA mode to work correctly, the external switch must support ''hairpin mode'', which ensures that packets whose destination is a guest on the same host machine as the source guest are sent back to the host by the external switch. | |||

:::[[Image:macvtapVEPA.png]] | |||

====bridge====

Packets whose destination is on the same host machine as their source guest are delivered directly to the target macvtap device. Both the source device and the destination device need to be in bridge mode for direct delivery to succeed. If either one of the devices is in VEPA mode, a hairpin-capable external switch is required.

:::[[Image:macvtapBridge.png]]

====private====

All packets are sent to the external switch. They are delivered to a target guest on the same host machine only if they pass through an external router or gateway that sends them back to the host. Private mode can be used to prevent the individual guests on a single host from communicating with each other. This behavior applies if either the source or the destination device is in private mode.

:::[[Image:macvtapPrivate.png]]

====passthrough====

This mode attaches a physical interface device or an SR-IOV Virtual Function (VF) directly to a guest without losing the migration capability. All packets are sent directly to the designated network device. A single network device can only be passed through to a single guest, because a network device cannot be shared between guests in passthrough mode.
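For passthrough mode, the same <code>direct</code> interface type is used with <code>mode='passthrough'</code>. The device name below is a hypothetical SR-IOV VF name. Whichever device is named becomes dedicated to this guest.

<syntaxhighlight lang='xml'>
<interface type='direct'>
  <!-- hypothetical VF (or physical NIC) name; it is not shared with other guests -->
  <source dev='enp3s0f0v0' mode='passthrough'/>
</interface>
</syntaxhighlight>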

===macvtap Driver Operations===

{{Internal|Attaching_a_Guest_Directly_to_a_Virtualization_Host_Network_Interface_with_a_macvtap_Driver#Overview|Attaching a Guest Directly to a Virtualization Host Network Interface with a macvtap Driver}}

==PCI Device Assignment==

<font color=darkgray>

PCI device assignment is also known as "passthrough".

* https://wiki.libvirt.org/page/Networking#PCI_Passthrough_of_host_network_devices
* https://access.redhat.com/documentation/en-us/red_hat_enterprise_linux/7/html/virtualization_deployment_and_administration_guide/chap-Guest_virtual_machine_device_configuration

</font>
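With PCI device assignment, a host network device (typically an SR-IOV VF) can be given to a guest through an interface of type <code>hostdev</code>. This is a sketch: the PCI address is hypothetical and must be replaced with the address of a real device on the host, for example as reported by <code>lspci</code>. With <code>managed='yes'</code>, libvirt detaches the device from its host driver before starting the guest and reattaches it afterwards.

<syntaxhighlight lang='xml'>
<devices>
  ...
  <!-- hypothetical PCI address of a host NIC or SR-IOV VF (lspci notation 03:10.1) -->
  <interface type='hostdev' managed='yes'>
    <source>
      <address type='pci' domain='0x0000' bus='0x03' slot='0x10' function='0x1'/>
    </source>
  </interface>
  ...
</devices>
</syntaxhighlight>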

=libvirt Network Filtering=

* https://access.redhat.com/documentation/en-US/Red_Hat_Enterprise_Linux/7/html/Virtualization_Deployment_and_Administration_Guide/sect-Virtual_Networking-Applying_network_filtering.html
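Network filters are referenced from the guest's interface definition with a <code>filterref</code> element. The minimal sketch below uses <code>clean-traffic</code>, one of the filters shipped with libvirt, which is intended to prevent MAC, IP and ARP spoofing. The filters available on a given host can be listed with <code>virsh nwfilter-list</code>.

<syntaxhighlight lang='xml'>
<interface type='network'>
  <source network='default'/>
  <!-- apply the libvirt-provided anti-spoofing filter to this interface -->
  <filterref filter='clean-traffic'/>
</interface>
</syntaxhighlight>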

Latest revision as of 01:29, 7 December 2020

External

- RHEL7 Virtualization Administration - Virtual Networking

- https://access.redhat.com/documentation/en-US/Red_Hat_Enterprise_Linux/7/html/Virtualization_Deployment_and_Administration_Guide/chap-Guest_virtual_machine_device_configuration.html

- https://access.redhat.com/documentation/en-US/Red_Hat_Enterprise_Linux/7/html/Virtualization_Deployment_and_Administration_Guide/sect-Managing_guest_virtual_machines_with_virsh-Interface_Commands.html

- https://wiki.libvirt.org/page/Libvirtd_and_dnsmasq

- https://wiki.libvirt.org/page/Networking

- https://wiki.libvirt.org/page/VirtualNetworking

- Configuration reference: https://libvirt.org/formatdomain.html#elementsNICS

- Configuration reference: https://libvirt.org/formatnetwork.html

Internal

Overview

This article discusses concepts related to virtual networking with libvirt. libvirtd daemon is the main server-side libvirt virtualization management system component. Configuring and manipulating networking is one of its responsibilities. Guests can be connected to the network via a virtual network, or directly to a virtualization host network device. The virtual network setup is appropriate for the case where there is significant network communication among cooperating guests. If a guest is primarily intended to serve external traffic, a setup where the guest is connected directly to a publicly exposed virtualization host network interface is more appropriate. A combination of these options is also possible, where a guest has a network interface attached to a virtual network and a different network interface attached to a virtualization host network device.

Virtual Network

libvirt implements virtual networking using a virtual network switch, which is logically equivalent with a virtual network. A virtual network switch is a software component that runs on the virtualization host, which guests virtual machines "plug in" to, and direct their traffic through. The traffic between guests attached to a specific virtual switch stays within the confines of the associated virtual network. From a guest's operating system point of view, a virtual network connection is the same as a normal physical network connection.

On the virtualization host server, the virtual network switch shows up as a network interface, conventionally named virbr0.

# ip addr

...

7: virbr0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP qlen 1000

link/ether 52:54:00:15:ef:87 brd ff:ff:ff:ff:ff:ff

inet 192.168.122.1/24 brd 192.168.122.255 scope global virbr0

valid_lft forever preferred_lft forever

...

In the default configuration, which implies NAT mode, this network interface explicitly does NOT have any physical interfaces added, since it uses NAT + forwarding to connect to outside world. This can be proven by running brctl show on the virtualization host. The routed address is by default 192.168.122.1 and the routing to a physical network interface is done in the virtual host networking layer. libvirt will add iptables rules to allow traffic to/from the virbr0 interface. It will also enable IP forwarding, required for routing.

The virtual network configuration can be displayed with virsh net-dumpxml:

virsh net-dumpxml default

<network connections='6'>

<name>default</name>

<uuid>49a07631-ea20-4741-89ea-b0faa7b42d19</uuid>

<forward mode='nat'>

<nat>

<port start='1024' end='65535'/>

</nat>

</forward>

<bridge name='virbr0' stp='on' delay='0'/>

<mac address='52:54:00:15:ef:87'/>

<ip address='192.168.122.1' netmask='255.255.255.0'>

<dhcp>

<range start='192.168.122.100' end='192.168.122.254'/>

</dhcp>

</ip>

</network>

The virtual switch is started and managed by the libvirtd daemon, via a dnsmasq process. A new instance of dnsmasq is started for each virtual switch and the virtual network associated with it, only accessible to guests in that specific network. The dnsmasq acts as DNS and DHCP servers for the virtual network. The configuration file for such a dnsmasq instance is /var/lib/libvirt/dnsmasq/<virtual-network-name>.conf. By default, there is just one "default" virtual network, and its dnsmasq configuration file is /var/lib/libvirt/dnsmasq/default.conf:

strict-order

pid-file=/var/run/libvirt/network/default.pid

except-interface=lo

bind-dynamic

interface=virbr0

dhcp-range=192.168.122.100,192.168.122.254

dhcp-no-override

dhcp-authoritative

dhcp-lease-max=155

dhcp-hostsfile=/var/lib/libvirt/dnsmasq/default.hostsfile

addn-hosts=/var/lib/libvirt/dnsmasq/default.addnhosts

These configuration files should not be edited manually, but with:

virsh net-edit <virtual-network-name>

The virtual network can be restricted to a specific physical network interface. This may be useful on virtualization hosts that have several physical interfaces, and it only matters for NAT and routed modes, as described below. This behavior can be defined when the virtual network is created, by specifying "dev=<interface>".

Virtual Network Modes

The virtual network switch can operate in several modes, described below, and by default it operates in NAT mode:

NAT Mode

The NAT mode is the default mode in which libvirt virtual network switch operates, without additional configuration.

All guests have direct connectivity to each other and to the virtualization host. libvirt network filtering and guest operating system iptables rules apply. The guests can access external networks by network address translation, subject to the host system's firewall rules. The network address translation function is implemented as IP masquerading, using iptables rules. Connected guests will use the virtualization host physical machine IP address for communication with external networks (it is possible that the virtualization host IP address is unroutable as well, if the virtualization host sits in its own NAT domain). By default, without additional configuration, external hosts cannot initiate connections to the guest virtual machines. If incoming connections need to be handled by guests, this is possible by setting up libvirt's "hook" script for qemu to install the necessary iptables rules to forward incoming connections. More details: https://wiki.libvirt.org/page/Networking#Forwarding_Incoming_Connections

Routed Mode

The libvirt virtual network switch can be configured to run in routed mode. In routed mode, the switch connects to the physical LAN the virtualization host is attached to, without the intermediation of a NAT module. All the guest virtual machines are in the same subnet, routed through the virtual switch. Each guest has its own public IP address. External traffic may reach the guest only if additional routing entires are added. The routed mode operates at Layer 3 of the OSI networking model.

Use Cases:

- Implementation of a DMZ: https://access.redhat.com/documentation/en-US/Red_Hat_Enterprise_Linux/7/html/Virtualization_Deployment_and_Administration_Guide/sect-Virtual_Networking-Examples_of_common_scenarios.html#sect-Examples_of_common_scenarios-Routed_mode

- Virtual Server Hosting: https://access.redhat.com/documentation/en-US/Red_Hat_Enterprise_Linux/7/html/Virtualization_Deployment_and_Administration_Guide/sect-Virtual_Networking-Examples_of_common_scenarios.html#sect-Examples_of_common_scenarios-Routed_mode

Bridged Mode

In bridged mode, the guests are connected to a bridge device that is also connected directly to a physical ethernet device connected to the local ethernet. This makes the guests directly visible on the physical network, as being part of the same subnet as the virtualization host, and thus enables incoming connections, but does not require any extra routing table entries. All other physical machines on the same physical network are aware of the virtual machines at MAC address level, and they can access them. Bridging operates on Layer 2 of the OSI networking model. Bridged mode does not seem to imply a virtual network of any kind, and seems to be similar to Guests Attaches Directly to a Virtualization Host Network Device, described below.

It is possible to use multiple physical network interfaces on the virtualization host by joining them together with a bond. The bond is then added to the bridge. For more details, see network bonding.

Use cases for bridged mode:

- When the guest virtual machines need to be deployed in an existing network alongside virtualization hosts and other physical machines, and they should appear no different to the end user.

- When the guests need to be deployed without making any changes to the existing physical network configuration settings.

- When the guests must be easily accessible from an existing physical network and they must use already deployed services.

- When the guests must be connected to an existing network where VLANs are used.

Isolated Mode

If the libvirt virtual network switch is configured to run in isolated mode, the guest virtual machines can communicate with each other and with the virtualization host, but the traffic will not pass outside of the virtualization host, not can they receive external traffic. The guests still receive their addresses via DHCP from dnsmasq, and send their DNS queries to the same dnsmasq instance, which may resolve external names, but the resolved IP addresses won't be accessible.

Guest Configuration for Virtual Network Mode

<devices>

...

<interface type='network'>

<source network='default'/>

</interface>

...

</devices>

Guests Attaches Directly to a Virtualization Host Network Device

The guest's network interface can be attached directly to a physical network interface on the virtualization host. This can be achieved either though a macvtap driver, or with device assignment (passthrough). Isn't this Bridged Mode described above?

macvtap Driver

A macvtap driver is a network interface device driver that makes the underlying physical network device to appear as one or more character devices that can be used directly by hypervisor. The each macvtap endpoint has its own MAC address on the same ethernet segment as the underlying network device. Thus, the guest's NIC can be attached directly to a specified physical interface of the host machine. Typically is used to make both the guest and the virtualization host show up directly on the switch that the host is connected to.

More than one guest can attach to the same physical interface on the virtualization host, and the guest-level network interfaces created as result of the binding may be configured independently with different IP addresses.

macvtap drivers should not be confused with PCI device assignment.

macvtap Driver Modes

Macvtap connection has the following modes, each with different benefits and usecases:

VEPA

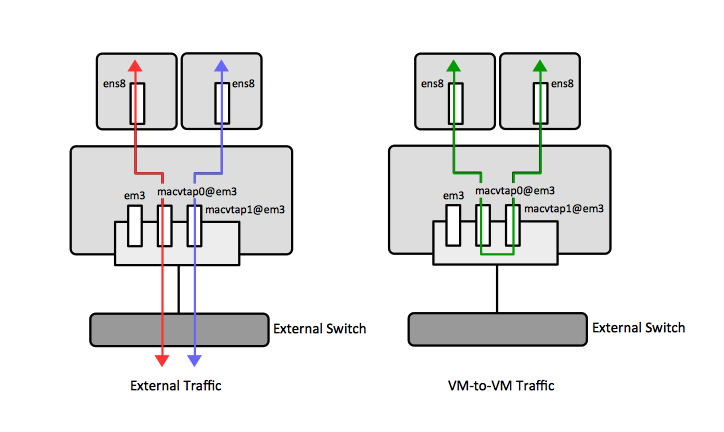

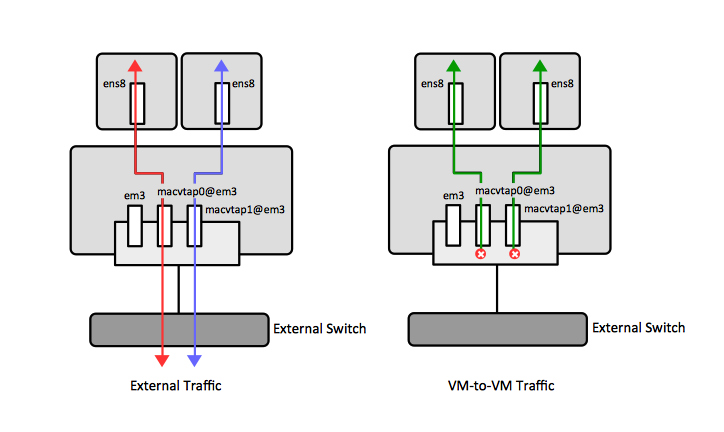

Virtual Ethernet Port Aggregator (VEPA) is mode in which packets from the guest are sent to the external switch. The guest traffic is thus forced through the switch. For VEPA mode to work correctly, the external switch must support hairpin mode, which ensures that packets whose destination is a guest on the same host machine as the source guest are sent back to the host by the external switch.

bridge

Packets whose destination is on the same host machine as their source guest are directly delivered to the target macvtap device. Both the source device and the destination device need to be in bridge mode for direct delivery to succeed. If either one of the devices is in VEPA mode, a hairpin-capable external switch is required.

private

All packets are sent to the external switch and will only be delivered to a target guest on the same host machine if they are sent through an external router or gateway and these send them back to the host. Private mode can be used to prevent the individual guests on the single host from communicating with each other. This procedure is followed if either the source or destination device is in private mode.

passthrough

This feature attaches a physical interface device or a SR-IOV Virtual Function (VF) directly to a guest without losing the migration capability. All packets are sent directly to the designated network device. Note that a single network device can only be passed through to a single guest, as a network device cannot be shared between guests in passthrough mode.

macvtap Driver Operations

PCI Device Assignment

PCI device assignment is also known as "passthrough".

- https://wiki.libvirt.org/page/Networking#PCI_Passthrough_of_host_network_devices

- https://access.redhat.com/documentation/en-us/red_hat_enterprise_linux/7/html/virtualization_deployment_and_administration_guide/chap-Guest_virtual_machine_device_configuration