OpenShift Concepts TODEPLETE: Difference between revisions


=DEPLETE TO= | |||

{{Internal|OpenShift Concepts|OpenShift Concepts}} | |||

=External= | |||

* https://docs.openshift.com/container-platform/latest/welcome/index.html | |||

=Internal=

* [[OpenShift]] | |||

* [[Kubernetes]] | |||

* [[Docker]] | |||

=OpenShift Workflow= | |||

Users or automation make calls to the [[#API|REST API]] via the command line, web console or programmatically, to change the state of the system. The master analyses the state changes and acts to bring the state of the system in sync with the desired state. The state is maintained in the [[#Store_Layer|store layer]]. Most OpenShift commands and API calls do not require the corresponding actions to be performed immediately. They usually create or modify a resource description in [[#etcd|etcd]]. etcd then notifies Kubernetes or the OpenShift [[OpenShift_Pod_Concepts#Controller|controllers]], which notify the resource about the change. Eventually, the system state reflects the change. | |||
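The declarative workflow described above can be illustrated with a minimal sketch. It is not OpenShift code: the function names, the dictionary-based state and the "start"/"stop" actions are hypothetical, chosen only to show how a controller could compare a desired state with an observed state and converge the two.

```python
# Hypothetical sketch: clients only record a desired state; a controller
# loop computes and applies the actions that converge the actual state.

def reconcile(desired: dict, actual: dict) -> list:
    """Return the actions needed to bring `actual` in line with `desired`."""
    actions = []
    for name, want in desired.items():
        have = actual.get(name, 0)
        if have < want:
            actions.append(("start", name, want - have))
        elif have > want:
            actions.append(("stop", name, have - want))
    # Anything running that is no longer described must be stopped.
    for name, have in actual.items():
        if name not in desired and have > 0:
            actions.append(("stop", name, have))
    return actions


def apply(actions: list, actual: dict) -> dict:
    """Apply the actions to a copy of the actual state and return it."""
    state = dict(actual)
    for verb, name, count in actions:
        delta = count if verb == "start" else -count
        state[name] = state.get(name, 0) + delta
    return {k: v for k, v in state.items() if v > 0}


store = {"frontend": 3, "db": 1}         # desired state, as kept in the store layer
running = {"frontend": 1, "legacy": 2}   # state observed on the nodes
running = apply(reconcile(store, running), running)
print(running == store)
```

The caller never starts or stops anything directly; it only changes the content of <tt>store</tt>, which mirrors how most API calls only modify a resource description.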

OpenShift functionality is provided by the interaction of several layers: | |||

* <span id='Store_Layer'></span>The '''store layer''' holds the state of the OpenShift environment, which includes configuration information, current state and the desired state. It is implemented by [[#etcd|etcd]]. | |||

* <span id='Authentication_Layer'></span>The [[OpenShift Security Concepts#Authentication_Layer|authentication layer]] provides a framework for collaboration and quota management. | |||

* Scheduling is the OpenShift master's main function and it is implemented in the <span id='Scheduling_Layer'></span>'''scheduling layer''', which contains the [[#Scheduler|scheduler]].

* <span id='Service_Layer'></span>The '''service layer''' handles internal requests. It provides an abstraction of [[#Service_Endpoint|service endpoints]] and provides internal load balancing. The [[#Pod|pods]] get [[#Service_IP_Address|IP addresses]] associated with [[#Service_Endpoint|the service endpoints]]. Note that the external requests are not handled by the service layer, but by the [[#Routing_Layer|routing layer]]. | |||

* The [[#Routing_Layer|routing layer]] handles external requests, to and from the applications. For a description of the relationship between a [[#Service|service]] and a [[#Router|router]], see [[#Relationship_between_Service_and_Router|relationship between a service and router]]. | |||

* Application requests are routed to the business logic serving them according to the [[#Networking_Workflow|networking workflow]], by the <span id='Networking_Layer'></span>'''networking layer'''. | |||

* <span id='Replication_Layer'></span>The '''replication layer''' contains the [[#Replication_Controller|replication controller]], whose role is to ensure that the number of instances and pods defined in the [[#Store_Layer|store layer]] actually exists.

=<span id='OpenShift_Component_Types'></span><span id='Component_Types'></span>Objects= | |||

==Core Objects== | |||

Defined by Kubernetes, and also used by OpenShift. All these objects have an API type, which is listed below. | |||

* [[OpenShift_Concepts#Project|Projects]] ([[Oc_get#project|oc get project]]), defined by <tt>[[OpenShift_Project_Definition|Project]]</tt>. | |||

* [[OpenShift_Concepts#Pod|Pods]] ([[Oc_get#pod.2C_pods.2C_po|oc get pods]]) defined by <tt>[[OpenShift_Pod_Definition|Pod]]</tt>. | |||

* [[OpenShift_Concepts#Node|Nodes]] ([[Oc_get#node.2C_nodes|oc get nodes]]) defined by <tt>[[OpenShift_Node_Definition|Node]]</tt>. | |||

* [[OpenShift_Concepts#DeploymentConfig|Deployment Configurations]] ([[Oc_get#dc|oc get dc]]) defined by <tt>[[OpenShift DeploymentConfig Definition#Overview|DeploymentConfig]]</tt>. | |||

* [[OpenShift_Concepts#Service|Services]] ([[Oc_get#services.2C_svc|oc get svc]]) defined by <tt>[[OpenShift Service Definition#Overview|Service]]</tt>. | |||

* [[OpenShift_Concepts#Route|Routes]] ([[Oc_get#routes.2C_routes|oc get route]]) defined by <tt>[[OpenShift Route Definition#Overview|Route]]</tt>. | |||

* [[OpenShift_Concepts#Replication_Controller|Replication Controllers]] ([[Oc_get#rc|oc get rc]]) defined by <tt>[[OpenShift ReplicationController Definition#Overview|ReplicationController]]</tt>. | |||

* [[OpenShift_Security_Concepts#Secret|Secrets]] ([[Oc_get#secret|oc get secret]]) defined by <tt>[[OpenShift Secret Definition|Secret]]</tt>. | |||

* [[OpenShift_Concepts#DaemonSet|Daemon Sets]]. | |||

==OpenShift Objects== | |||

Defined by OpenShift, outside Kubernetes. These objects also have an API type, which is listed below. | |||

* [[OpenShift_Concepts#Image|Images]] defined by <tt>[[OpenShift_Image_Definition#Overview|Image]]</tt>.

* [[#Image_Stream|Image Streams]] ([[Oc_get#is|oc get is]]) defined by <tt>[[OpenShift Image Stream Definition#Overview|ImageStream]]</tt>. | |||

* [[OpenShift_Concepts#Image_Stream_Tag|Image Stream Tags]] ([[Oc_get#istag|oc get istag]]). | |||

* [[OpenShift_Concepts#Build|Builds]] ([[Oc_get#build.2C_builds|oc get build]]). | |||

* [[OpenShift_Concepts#Build_Configuration|Build Configurations]] ([[Oc_get#bc|oc get bc]]) defined by <tt>[[OpenShift Build Configuration Definition#Overview|BuildConfig]]</tt>. | |||

* [[#Template|Templates]] defined by <tt>[[OpenShift Template Definition|Template]]</tt>. | |||

* [[OpenShift_Security_Concepts#OAuthClient|OAuthClient]]. | |||

==Other Objects that do not have an API Representation==

* [[OpenShift_Concepts#Container|Containers]] | |||

* [[OpenShift_Concepts#Label|Labels]] | |||

* [[OpenShift_Concepts#Volume|Volumes]] | |||

=OpenShift Hosts=

==Master==

A master is a RHEL or Red Hat Atomic host that ''[[Kubernetes Concepts#Overview|orchestrates]]'' and ''schedules'' resources.

The master ''maintains the state'' of the OpenShift environment. | |||

The master provides the ''single API'' all tooling clients must interact with. All OpenShift tools (CLI, web console, IDE plugins, etc.) speak directly with the master.

The access is protected via fine-grained role-based access control (RBAC).

The master monitors application health via user-defined [[#Pod_Probe|pod probes]], ensuring that all containers that should be running are running. It handles restarting [[#Pod|pods]] that failed probes automatically. Pods that fail too often are marked as "failing" and are temporarily not restarted. The OpenShift [[#Service_Layer|service layer]] sends traffic only to healthy pods.

{{Internal|Kubernetes Concepts#Master|Kubernetes Master}} | |||

Masters use the [[#etcd|etcd]] cluster to store state, and also [[#etcd_and_Master_Caching|cache some of the metadata]] in their own memory space. | |||

===Master HA=== | |||

Multiple masters can be present to ensure HA. A typical HA configuration involves three masters and three [[#Etcd|etcd]] nodes. Such a topology is built by the OpenShift 3.5 Ansible inventory file shown here.

An alternative HA configuration consists of a single master node and multiple (at least three) [[#Etcd|etcd]] nodes.

====The native HA Method==== | |||

===Master API=== | |||

The master API is available internally (inside the OpenShift cluster) at https://openshift.default.svc.cluster.local. This value is available internally to pods as the KUBERNETES_SERVICE_HOST environment variable.
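As an illustration, a process running inside a pod could assemble the master API URL as sketched below. The function name is hypothetical. The sketch assumes that the KUBERNETES_SERVICE_PORT variable is also injected into the pod, and that falling back to the internal host name and to port 443 is acceptable when the variables are missing.

```python
import os


def master_api_url(env=os.environ) -> str:
    """Build the master API URL from the variables injected into a pod."""
    # Fall back to the internal service name when the variable is not set
    # (for example, when the code runs outside of a pod).
    host = env.get("KUBERNETES_SERVICE_HOST", "openshift.default.svc.cluster.local")
    port = env.get("KUBERNETES_SERVICE_PORT", "443")  # assumed default port
    return f"https://{host}:{port}"


print(master_api_url())
```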

==Node==

A node is a ''Linux container host''. It is based on RHEL or Red Hat Atomic and provides a runtime environment where applications run inside [[#Container|containers]], which run inside [[#Pod|pods]] assigned by the [[#Master|master]]. Nodes are orchestrated by [[#Master|masters]] and are usually managed by administrators and not by end users. Nodes can be organized into different topologies. From a networking perspective, nodes get allocated their own subnets of the [[#The_Cluster_Network|cluster network]], which they then use to assign addresses to the pods and containers running on them. More details about OpenShift networking are available [[#The_Cluster_Network|here]].

[[OpenShift Concepts#The_node_Daemon|A node daemon]] runs on each node.

Node operations: | |||

* [[OpenShift_Node_Operations#Getting_Information_about_a_Node|Getting information about a node]] | |||

* [[OpenShift_Node_Operations#Starting.2FStopping_a_Node|Starting/Stopping a node]] | |||

<font color=red> | |||

TODO: | |||

* What is the difference between the kubelet and the node daemon? | |||

* kube proxy daemons. | |||

</font> | |||

==Infrastructure Node== | |||

Also referred to as "infra node". | |||

This is where infrastructure pods run. [[#Metrics|Metrics]], [[#Logging|logging]], [[#Router|routers]] are considered infrastructure pods. | |||

The infrastructure nodes, especially those running the metrics pods and ... should be closely monitored to detect CPU, memory and disk capacity shortages on the host system early.

=OpenShift Cluster= | |||

All nodes that share the [[#SDN|SDN]]. | |||

=Container=

{{External|https://docs.openshift.com/container-platform/latest/architecture/core_concepts/containers_and_images.html#containers}} | |||

A container is a kernel-provided mechanism to run one or more processes, in a portable manner, in a Linux environment. Containers are isolated from each other on a host and are initialized from [[#Image|images]]. All application instances - based on various languages/frameworks/runtimes - as well as databases, run inside containers on [[#Node|nodes]]. For more details, see {{Internal|Docker_Concepts#Container|Docker Containers}} | |||

A [[#Pod|pod]] can have application containers and [[OpenShift_Init_Container#Overview|init containers]]. | |||

In OpenShift, containers are never restarted. Instead, new containers are spun up to replace old containers when needed. Because of this behavior, [[OpenShift_Concepts#Persistent_Volume|persistent storage volumes]] mounted on containers are critical for maintaining state such as for configuration files and data files. | |||

=Docker Support in OpenShift= | |||

All containers that are running in pods are managed by [[Docker_Concepts#The_Docker_Server|Docker servers]]. | |||

==Docker Storage in OpenShift== | |||

Each Docker server requires Docker storage, which is allocated on each node in a space specially provisioned as [[Docker_Concepts#Storage_Backend|Docker storage backend]]. The Docker storage is ephemeral and separate from [[OpenShift_Volume_Concepts#The_Volume_Mechanism|OpenShift storage]]. The [[Docker_Concepts#Loopback_Storage|default loopback storage]] back end for Docker is a [[Linux Logical Volume Management Concepts#thin_pool|thin pool]] on loopback devices, which is not appropriate for production. | |||

As of OCP 3.7, Docker storage can be backed by one of two options (storage drivers): a [[Docker Concepts#devicemapper-storage-driver|devicemapper]] thin pool logical volume and an [[Docker Concepts#overlayfs-storage-driver|overlay2]] filesystem (recommended). More details about how Docker storage is configured on a specific system can be obtained from:

cat /etc/sysconfig/docker-storage | |||

DOCKER_STORAGE_OPTIONS="--storage-driver devicemapper --storage-opt dm.fs=xfs --storage-opt dm.thinpooldev=/dev/mapper/docker_vg-container--thinpool --storage-opt dm.use_deferred_removal=true --storage-opt dm.use_deferred_deletion=true" | |||

and also | |||

docker info | |||

=The node Daemon=

=<span id='Controller'></span><span id='Pod_Configuration'></span><span id='Pod_Lifecycle'></span><span id='Terminal_State'></span><span id='Pod_Definition'></span><span id='Pod_Name'></span><span id='Pod_Definition_File'></span><span id='Pod_Placement'></span><span id='Pod_Probe'></span><span id='Liveness_Probe'></span><span id='Readiness_Probe'></span><span id='Local_Manifest_Pod'></span><span id='Bare_Pod'></span><span id='Static_Pod'></span><span id='Pod_Type'></span><span id='Terminating'></span><span id='NonTerminating'></span><span id='Pod_Presets'></span><span id='Init_Container'></span>Pod=

Contains: controller, pod configuration, pod lifecycle, terminal state, pod definition, pod name, pod placement, pod probe, liveness probe, readiness probe, local manifest pod, bare pod, static pod, pod type, terminating, nonterminating, pod presets, init container.

=Label= | |||

Labels are simple key/value pairs that can be assigned to any resource in the system and are used to group and select arbitrarily related objects. Most Kubernetes objects can include labels in their metadata. Labels provide the default way to manage objects as ''groups'', instead of having to handle each object individually. Labels are a [[Kubernetes Concepts#Label|Kubernetes concept]].

[[OpenShift Get Labels Applied to a Node|Labels associated with a node]] can be obtained with [[oc_describe#node|oc describe node]]. | |||

=Selector= | |||

<span id='Label_Selector'></span> | |||

A ''selector'' is a set of labels. It is also referred to as ''label selector''. Selectors are a [[Kubernetes Concepts#Selector|Kubernetes concept]]. | |||

==Node Selector== | |||

A ''node selector'' is an expression applied to a [[#Node|node]], which, depending on whether the node has or does not have the labels expected by the node selector, allows or prevents [[#Pod_Placement|pod placement]] on the node in question during the pod scheduling operation. It is the [[#Scheduler|scheduler]] that evaluates the node selector expression and decides on which node to place the pod. The [[#DaemonSet|DaemonSets]] also use node selectors when placing the associated pods on nodes. | |||

Node selectors can be associated with [[#Cluster-Wide_Default_Node_Selector|an entire cluster]], with a project, or with a specific pod. The node selectors can be modified as part of a [[OpenShift Node Selector Operations|node selector operation]]. | |||
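The matching rule can be sketched as follows. This is an illustration only, assuming that a node selector is a set of key/value pairs and that a node matches when it carries every one of them. The function, the node names and the labels are hypothetical.

```python
def matches(node_selector: dict, node_labels: dict) -> bool:
    """A node matches when it carries every label the selector asks for."""
    return all(node_labels.get(key) == value for key, value in node_selector.items())


# Hypothetical nodes and labels, for illustration only.
nodes = {
    "node1": {"region": "primary", "zone": "east"},
    "node2": {"region": "infra", "zone": "east"},
}
selector = {"region": "primary"}

eligible = sorted(name for name, labels in nodes.items() if matches(selector, labels))
print(eligible)
```

Under this assumption an empty selector matches every node, because there is no label left to check.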

===Cluster-Wide Default Node Selector=== | |||

The cluster-wide default node selector is configured during OpenShift cluster installation to restrict pod placement on specific nodes. It is specified in the [[Master-config.yml#defaultNodeSelector|projectConfig.defaultNodeSelector]] section of the master configuration file [[master-config.yml]]. It can also be modified after installation with the following procedure: | |||

{{Internal|OpenShift_Node_Selector_Operations#Configuring_a_Cluster-Wide_Default_Node_Selector|Configure a Cluster-Wide Default Node Selector}} | |||

===Per-Project Node Selector=== | |||

The per-project node selector is used by the scheduler to schedule pods associated with the project. The per-project node selector takes precedence over the [[OpenShift_Concepts#Cluster-Wide_Default_Node_Selector|cluster-wide default node selector]] when both exist. It is available as "openshift.io/node-selector" project metadata (see below). If "openshift.io/node-selector" is set to an empty string, the project will not have an administrator-set node selector, even if the [[#Cluster-Wide_Default_Node_Selector|cluster-wide default]] has been set. This means that a cluster administrator can set a default to restrict developer projects to a subset of nodes and still enable infrastructure or other projects to schedule across the entire cluster.
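The precedence between the two selectors can be expressed as a small function. This is a sketch of the rule as stated in this section, not the actual implementation. The function name is hypothetical, and representing a missing annotation as <tt>None</tt> is an assumption.

```python
from typing import Optional


def effective_node_selector(cluster_default: Optional[str],
                            project_annotation: Optional[str]) -> str:
    """Resolve the selector that applies to the pods of a project.

    `project_annotation` is the value of "openshift.io/node-selector";
    None means the annotation is absent, "" means it is explicitly empty.
    """
    if project_annotation is not None:
        # The per-project value wins, even when it is the empty string.
        return project_annotation
    return cluster_default or ""


print(effective_node_selector("region=primary", None))
print(repr(effective_node_selector("region=primary", "")))
print(effective_node_selector("region=primary", "region=infra"))
```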

The per-project node selector value can be queried with: | |||

[[oc_get#project|oc get project -o yaml]] | |||

It is listed as: | |||

... | |||

kind: Project | |||

<b>metadata</b>: | |||

<b>annotations</b>: | |||

... | |||

<b>openshift.io/node-selector</b>: "" | |||

... | |||

The per-project node selector is usually set up when the project is created, as described in this procedure: | |||

{{Internal|OpenShift_Node_Selector_Operations#When_the_Project_is_Created|Configuring a Per-Project Node Selector during Project Creation}} | |||

It can also be changed after project creation with <tt>oc edit</tt> as described in this procedure: | |||

{{Internal|OpenShift_Node_Selector_Operations#After_the_Project_was_Created|Configuring a Per-Project Node Selector after Project Creation}} | |||

===Per-Pod Node Selector=== | |||

The declaration of a ''per-pod node selector'' can be obtained by running:

oc get pod <''pod-name''> -o yaml | |||

and it is rendered in the "spec:" section of the pod definition: | |||

... | |||

kind: Pod | |||

<b>spec</b>: | |||

... | |||

<b>nodeSelector</b>: | |||

key-A: value-A | |||

<font color=red>How is the node selector of a pod generated?</font> | |||

<font color=red>TODO: merge with [[OpenShift_Concepts#Pod_Placement]]</font> | |||

Once the pod has been created, the node selector value becomes immutable and an attempt to change it will fail. For more details on pod state see [[OpenShift_Concepts#Pod|Pods]]. | |||

===Precedence Rules when Multiple Node Selectors Apply=== | |||

<font color=red>TODO</font> | |||

===External Resources=== | |||

* https://docs.openshift.com/container-platform/3.5/admin_guide/managing_projects.html#using-node-selectors | |||

=Scheduler= | |||

{{External|https://docs.openshift.com/container-platform/3.5/admin_guide/scheduler.html}} | |||

Scheduling is the master's main function: when a pod is created, the master determines on what node(s) to execute the pod. This is called ''scheduling''. The layer that handles this responsibility is called the [[#Scheduling_Layer|scheduling layer]]. | |||

The ''scheduler'' is a component that runs on master and determines the best fit for running [[#Pod|pods]] across the environment. The scheduler also spreads pod replicas across nodes, for application HA. The scheduler reads data from the [[#Pod_Definition|pod definition]] and tries to find a node that is a good fit based on configured policies. The scheduler does not modify the pod, it creates a binding that ties the pod to the selected node, via the master API. | |||

The OpenShift scheduler is based on [[Kubernetes Concepts#Scheduler|Kubernetes scheduler]]. | |||

Most OpenShift pods are scheduled by the scheduler, unless they are managed by a [[#DaemonSet|DaemonSet]]. In this case, the DaemonSet selects the node to run the pod, and the scheduler ignores the pod. | |||

The scheduler is completely independent and exists as a standalone, pluggable solution. The scheduler is deployed as a container, referred to as an ''infrastructure container''. The functionality of the scheduler can be extended in two ways: | |||

# Via enhancements, by adding new predicates and priority functions.

# Via replacement with a different implementation. | |||

The pod placement process is described here: {{Internal|OpenShift_Pod_Concepts#Pod_Placement|Pod Placement}} | |||

==Default Scheduler Implementation== | |||

The default scheduler is a scheduling engine that selects the node to host the pod in three steps: | |||

# Filter all available nodes by running through a list of filter functions called [[#Predicates|predicates]], discarding the nodes that do not meet the criteria. | |||

# Prioritize the remaining nodes by passing them through a series of [[#Priority_Functions|priority functions]] that assign each node a score between 0 and 10, where 10 signifies the best possible fit to run the pod. By default all priority functions are considered equivalent, but they can be weighted differently via configuration.

# Sort the nodes by score and select the node with the highest score. If multiple nodes have the same score, one is chosen at random.
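The three steps above can be sketched as a short function. This is an illustration of the filter, prioritize and select sequence, not the scheduler's code. The node and pod dictionaries, the predicate and priority functions and their names are hypothetical.

```python
import random


def schedule(pod, nodes, predicates, priorities, rng=random):
    """Pick a node name for the pod, or None if no node is fit."""
    # Step 1: keep only the nodes accepted by every predicate.
    feasible = [n for n in nodes if all(p(pod, n) for p in predicates)]
    if not feasible:
        return None
    # Step 2: sum the weighted scores (0 to 10) of the priority functions.
    scored = [(sum(w * fn(pod, n) for fn, w in priorities), n) for n in feasible]
    # Step 3: select the highest score, breaking ties at random.
    best = max(score for score, _ in scored)
    return rng.choice([n for score, n in scored if score == best])["name"]


# Hypothetical predicates and priority function, for illustration only.
def fits_resources(pod, node):
    return pod["cpu"] <= node["cpu_free"]


def matches_node_selector(pod, node):
    return all(node["labels"].get(k) == v for k, v in pod["node_selector"].items())


def least_requested(pod, node):
    return 10 * node["cpu_free"] // node["cpu_capacity"]


nodes = [
    {"name": "node1", "cpu_free": 1, "cpu_capacity": 4, "labels": {"region": "primary"}},
    {"name": "node2", "cpu_free": 3, "cpu_capacity": 4, "labels": {"region": "primary"}},
    {"name": "node3", "cpu_free": 4, "cpu_capacity": 4, "labels": {"region": "infra"}},
]
pod = {"cpu": 1, "node_selector": {"region": "primary"}}

chosen = schedule(pod, nodes, [fits_resources, matches_node_selector],
                  [(least_requested, 1)])
print(chosen)
```

In this example node3 has the most free capacity but is discarded by the node selector predicate before any scoring takes place, which is why filtering and prioritizing are kept as separate steps.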

Note that an insight into how the predicates are evaluated and what scheduling decisions are taken can be achieved by increasing the logging verbosity of the [[OpenShift_Runtime#master_controller_Daemon|master controllers processes]]. | |||

==Predicates== | |||

===Static Predicates=== | |||

* PodFitsPorts - a node is fit if there are no port conflicts.

* PodFitsResources - a node is fit based on resource availability. Nodes declare resource capacities, pods specify what resources they require. | |||

* NoDiskConflict - evaluates if a pod fits based on volumes requested and those already mounted. | |||

* MatchNodeSelector - a node is fit based on the node selector query. | |||

* HostName - a node is fit based on the presence of host parameter and string match with host name. | |||

===Configurable Predicates=== | |||

====ServiceAffinity==== | |||

ServiceAffinity filters out nodes that do not belong to the topological level defined by the provided labels. See [[/etc/origin/master/scheduler.json#serviceAffinity|scheduler.json]] | |||

====LabelsPresence==== | |||

LabelsPresence checks whether the node has certain labels defined, regardless of value. | |||

==Priority Functions== | |||

===Existing Priority Functions=== | |||

* <span id="LeastRequestedPriority"></span>LeastRequestedPriority - favors nodes with fewer requested resources, calculates percentage of memory and CPU requested by pods scheduled on node, and prioritizes nodes with highest available capacity. | |||

* BalancedResourceAllocation - favors nodes with balanced resource usage rate, calculates difference between consumed CPU and memory as fraction of capacity and prioritizes nodes with the smallest difference. It should always be used with [[#LeastRequestedPriority|LeastRequestedPriority]]. | |||

* ServiceSpreadingPriority - spreads pods by minimizing the number of pods that belong to the same service on the same node.

* EqualPriority | |||
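The first two priority functions can be illustrated with simple formulas. The formulas below are an assumption derived from the descriptions in this list and should not be read as the exact implementation; the function names and the 0 to 10 scaling are chosen to match the score range used by the default scheduler.

```python
def least_requested_score(requested: float, capacity: float) -> float:
    """Score one resource: the larger the unrequested share, the higher the score."""
    if capacity <= 0 or requested > capacity:
        return 0.0
    return (capacity - requested) * 10 / capacity


def least_requested_priority(cpu_req, cpu_cap, mem_req, mem_cap) -> float:
    """Average the CPU and memory scores (assumed equal weighting)."""
    return (least_requested_score(cpu_req, cpu_cap)
            + least_requested_score(mem_req, mem_cap)) / 2


def balanced_resource_allocation(cpu_req, cpu_cap, mem_req, mem_cap) -> float:
    """Favor nodes whose CPU and memory usage fractions are close to each other."""
    cpu_fraction = cpu_req / cpu_cap
    mem_fraction = mem_req / mem_cap
    if cpu_fraction >= 1 or mem_fraction >= 1:
        return 0.0
    return 10 - abs(cpu_fraction - mem_fraction) * 10


# 1 of 4 cores and 2 of 8 GB requested: both resources are 25% requested.
print(least_requested_priority(1, 4, 2, 8))
print(balanced_resource_allocation(1, 4, 2, 8))
```

A node that is evenly but heavily loaded still scores high on balance alone, which illustrates why BalancedResourceAllocation is meant to be combined with LeastRequestedPriority.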

===Configurable Priority Functions=== | |||

* ServiceAntiAffinity | |||

* LabelsPreference | |||

==Scheduler Policy== | |||

The selection of the predicates and the priority functions defines the ''scheduler policy''. The default policy is configured in the master configuration file [[master-config.yml]] as [[Master-config.yml#schedulerConfigFile|kubernetesMasterConfig.schedulerConfigFile]]. By default, it points to [[/etc/origin/master/scheduler.json]]. The current scheduling policy in force can be obtained with <font color=red>?</font>. A custom policy can replace it, if necessary, by following this procedure: [[OpenShift Modify the Scheduler Policy|Modify the Scheduler Policy]].

==Scheduler Policy File== | |||

The default scheduler policy is configured in the master configuration file [[master-config.yml]] as [[Master-config.yml#schedulerConfigFile|kubernetesMasterConfig.schedulerConfigFile]], which by default points to [[/etc/origin/master/scheduler.json]]. Another example of scheduler policy file: {{Internal|Scheduler Policy File|Scheduler Policy File}} | |||

=<span id='Storage'></span><span id='Volume'></span><span id='Persistent_Volume'></span><span id='Persistent_Volume_Claim'></span><span id='EmptyDir'></span><span id='Temporary_Pod_Volume'></span>Volumes=

Persistent Volumes, Persistent Volume Claims, EmptyDirs, ConfigMaps, Downward API, Host Paths, Secrets: {{Internal|OpenShift Volume Concepts|Volumes}}

=etcd=

Implements OpenShift's [[#Store_Layer|store layer]], which holds the current state, desired state and configuration information of the environment.

OpenShift stores image, build, and deployment ''metadata'' in etcd. Old resources must be periodically pruned. If a large number of images, builds or deployments is planned, etcd must be placed on machines with large amounts of memory and fast SSD drives.

{{Internal|Etcd Concepts#Overview|etcd}} | |||

==etcd and Master Caching== | |||

[[#Master|Masters]] cache deserialized resource metadata to reduce the CPU load and keep the metadata in memory. For small clusters, the cache can use a lot of memory for negligible CPU reduction. The default cache size is 50,000 entries, which, depending on the size of resources, can grow to occupy 1 to 2 GB of memory. The cache size can be configured in [[Master-config.yml#deserialization-cache-size|master-config.yml]]. | |||

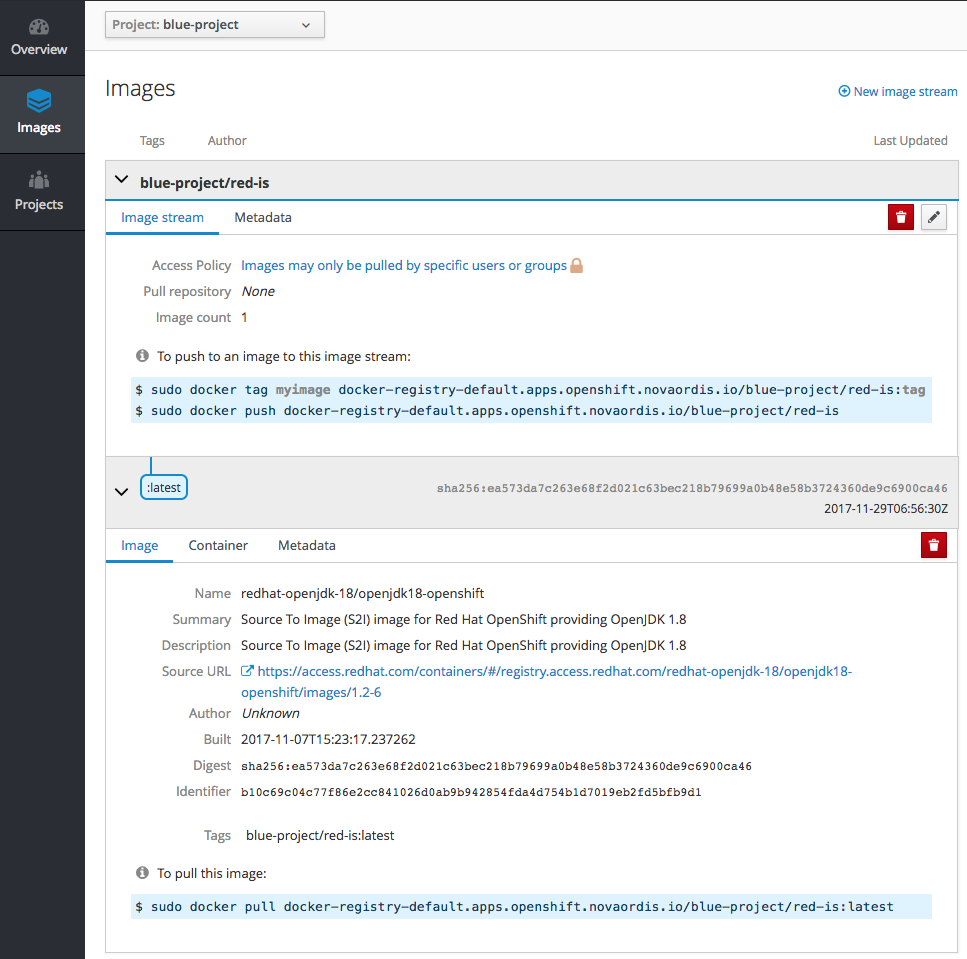

=Image Registries= | |||

{{External|https://docs.openshift.com/container-platform/latest/install_config/registry/index.html}} | |||

<span id='Docker_Registry'></span> | |||

==Integrated Docker Registry== | |||

OpenShift contains an integrated [[Docker_Concepts#Image_Registry|Docker image registry]], providing authentication and access control to images. The integrated registry should not be confused with the OpenShift Container Registry (OCR), a standalone solution that can be used outside of an OpenShift environment. The integrated registry is an application deployed within the "[[#Default_Project|default]]" project as a [[Docker_Concepts#Privileged_Container|privileged container]], as part of the cluster installation process. The integrated registry is available internally in the OpenShift cluster at the following [[#The_Service_IP_Address|Service IP]]: docker-registry.default.svc:5000/. It consists of a "docker-registry" [[#Service|service]], a "docker-registry" [[#DeploymentConfig|deployment configuration]], a "registry" [[OpenShift_Security_Concepts#Service_Account|service account]] and a role binding that associates the service account to the "system:registry" cluster role. [[OpenShift Registry Definition|More details]] on the structure of the integrated registry can be generated with [[oadm registry|oadm registry -o yaml]]. The default registry also comes with a [[#The_Registry_Console|registry console]] that can be used to browse images.

The main function of the integrated registry is to provide a default location where users can push images they build. Users push images into the registry, and whenever a new image is stored there, the registry notifies OpenShift about it and passes along image information such as the [[#Namespace|namespace]], the name and the image metadata. Various OpenShift components react by creating new builds and deployments.

Alternatively, the integrated registry may store and expose to projects external images the projects may need. The docker servers on OpenShift nodes are configured to be able to access the internal registry, but they are also configured with [[#registry.access.redhat.com|registry.access.redhat.com]] and [[#docker.io|docker.io]]. | |||

Multiple running instances of the container registry require backend storage supporting writes by multiple processes. If the chosen infrastructure provider does not contain this ability, running a single instance of a container registry is acceptable. | |||

<font color=red> | |||

'''Questions''': | |||

* How can I "instruct" an application to use a specific registry, for example a "lab" application to use registry-lab?

* How do pods that need images go to that registry and not another? The answer to this question lies in the way an application uses the registry.

</font> | |||

==External Registries== | |||

The external registries [[#registry.access.redhat.com|registry.access.redhat.com]] and [[#docker.io|docker.io]] are accessible. In general, any server implementing Docker registry API can be integrated as a [[#Stand-alone_Registry|stand-alone registry]]. | |||

====registry.access.redhat.com==== | |||

{{Internal|registry.access.redhat.com|registry.access.redhat.com}} | |||

The Red Hat registry is available at http://registry.access.redhat.com. An individual image can be inspected with a URL similar to https://access.redhat.com/containers/#/registry.access.redhat.com/jboss-eap-7/eap70-openshift/images/1.5-23

====docker.io==== | |||

[[Docker Concepts#Docker_Hub|Docker Hub]] is available at http://dockerhub.com. | |||

==Stand-alone Registry== | |||

{{External|https://docs.openshift.com/container-platform/latest/install_config/install/stand_alone_registry.html#install-config-installing-stand-alone-registry}} | |||

Any server implementing Docker registry API can be integrated as a standalone registry: a stand-alone container registry can be installed and used with the OpenShift cluster. | |||

==<span id='The_Registry_Console'></span>Integrated Registry Console==

{{External|https://docs.openshift.com/container-platform/latest/install_config/registry/deploy_registry_existing_clusters.html#registry-console}} | |||

The registry comes with a "registry console" that allows web-based access to the registry. An example URL is https://registry-console-default.apps.openshift.novaordis.io. The registry console is deployed as a [[OpenShift_Concepts#Default_Project|registry-console]] pod, part of the "[[OpenShift_Concepts#Default_Project|default]]" project.

==Registry Operations==

{{Internal|OpenShift Registry Operations|Registry Operations}}

=<span id='Service_Endpoint'></span><span id='Service_Proxy'></span><span id='Service_Dependencies'></span><span id='Relationship_between_Services_and_the_Pods_providing_the_Service'></span><span id='EndpointsController'></span>Service=

{{Internal|OpenShift Service Concepts|OpenShift Service Concepts}} | |||

=API=

{{Internal|Kubernetes Concepts#API|Kubernetes API}}

=Networking=

In OpenShift, nodes communicate with each other using the infrastructure-provided network. Pods communicate with each other via a software defined network (SDN), also known as the cluster network, which runs on top of it.

==Required Network Ports== | |||

{{External|https://docs.openshift.com/enterprise/3.1/install_config/install/prerequisites.html#prereq-network-access}} | |||

==Default Network Interface on a Host== | |||

Every time OpenShift refers to the "default interface" on a host, it means the network interface associated with the default route. The logic that determines it is described here: {{Internal|Default Network Interface on a Host|Default Network Interface on a Host}}

==SDN, Overlay Network== | |||

{{External|https://docs.openshift.com/container-platform/latest/architecture/additional_concepts/sdn.html#architecture-additional-concepts-sdn}} | |||

{{External|https://docs.openshift.com/container-platform/latest/install_config/configuring_sdn.html}} | |||

<span id="Overlay_Network"></span><span id="SDN"></span> | |||

All hosts in the OpenShift environment are [[#OpenShift_Cluster|clustered]] and are also members of the ''overlay network'' based on a Software Defined Network (SDN). Each [[#Pod|pod]] gets its [[#Pod_IP_Address|own IP address]], by default from the 10.128.0.0/14 subnet, that is routable from any member of the SDN network. Giving each pod its own IP address means that pods can be treated like physical hosts or virtual machines in terms of port allocation, networking, naming, service discovery, load balancing, application configuration and migration. | |||

However, it is not recommended that a pod talk to another pod directly by using its IP address. Instead, pods should use [[#Service|services]] as an indirection layer and interact with the service, which may be backed by different pods at different times. Each [[#Service|service]] also gets its [[#Service_IP_Address|own IP address]] from the 172.30.0.0/16 subnet.
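When reading logs or endpoint listings, it can help to tell pod addresses from service addresses. The sketch below uses the default subnets mentioned above; the function name is hypothetical, and a cluster installed with non-default ranges would need different constants.

```python
import ipaddress

# Default ranges quoted in this section; an installation may override them.
POD_NETWORK = ipaddress.ip_network("10.128.0.0/14")
SERVICE_NETWORK = ipaddress.ip_network("172.30.0.0/16")


def classify(address: str) -> str:
    """Tell whether an address belongs to the pod or the service range."""
    ip = ipaddress.ip_address(address)
    if ip in POD_NETWORK:
        return "pod"
    if ip in SERVICE_NETWORK:
        return "service"
    return "other"


print(classify("10.130.1.17"))
print(classify("172.30.0.1"))
```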

===The Cluster Network=== | |||

The ''cluster network'' is the network from which pod IPs are assigned. These network blocks should be private and must not conflict with existing network blocks in your infrastructure that pods, nodes or the master may require access to. The default subnet value is 10.128.0.0/14 (10.128.0.0 - 10.131.255.255) and it cannot be arbitrarily reconfigured after deployment. The size and address range of the cluster network, as well as the host subnet size, are configurable during installation. The cluster network is configured with 'osm_cluster_network_cidr' at [[OpenShift_3.5_Installation#Configure_Ansible_Inventory_File|installation]].

Samples of pod addresses can be obtained by querying [[OpenShift_Service_Concepts#Service_Endpoints|service endpoints]] in a project: | |||

oc get endpoints | |||

NAME ENDPOINTS AGE | |||

some-service 10.130.1.17:8080 21m | |||

The master maintains a registry of nodes in etcd, and each [[#Node|node]] gets allocated upon creation an unused subnet from the cluster network. Each node gets a /23 subnet, which means the cluster can allocate 512 subnets to nodes, and each node has 510 addresses to assign to containers running on it. Once the node is registered with the master, and gets its cluster network subnet, SDN creates on the node three devices: | |||

* <span id='br0'></span>'''br0''' - the OVS bridge device that pod containers will be attached to. The bridge is configured with a set of non-subnet specific flow rules. | |||

* '''tun0''' - an OVS internal port (port 2 on br0). This gets assigned the cluster subnet gateway address, and it is used for external access. The SDN configures net filter and routing rules to enable access from the cluster subnet to the external network via NAT. | |||

* <span id='vxlan'></span>'''vxlan_sys_4789'''. This is the OVS VXLAN device (port 1 on br0) which provides access to containers on remote nodes. It is referred to as "vxlan0". | |||
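The subnet arithmetic described above (a /14 cluster network split into /23 node subnets) can be checked with a minimal sketch that uses Python's standard <code>ipaddress</code> module. The constants are the default values quoted in the text, and the function name <code>node_subnets</code> is illustrative only, not part of OpenShift.

```python
import ipaddress

# Default values quoted in the text; both are configurable at installation.
CLUSTER_NETWORK = ipaddress.ip_network("10.128.0.0/14")
HOST_SUBNET_PREFIX = 23  # each node is allocated a /23 subnet


def node_subnets(cluster_network, host_prefix):
    """Return the per-node subnets that can be carved out of the cluster network."""
    return list(cluster_network.subnets(new_prefix=host_prefix))


subnets = node_subnets(CLUSTER_NETWORK, HOST_SUBNET_PREFIX)
subnet_count = len(subnets)
# Usable pod addresses exclude the network and broadcast addresses.
usable_per_node = subnets[0].num_addresses - 2
first_address = CLUSTER_NETWORK[0]
last_address = CLUSTER_NETWORK[-1]

# Locate the node subnet that contains the sample pod address shown earlier.
pod_address = ipaddress.ip_address("10.130.1.17")
pod_subnet = next(s for s in subnets if pod_address in s)

print(subnet_count, usable_per_node, first_address, last_address, pod_subnet)
```

With the default values, this reproduces the figures given in the text: 512 node subnets, 510 usable addresses per node, and the range 10.128.0.0 - 10.131.255.255.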

Each time a pod is started, the SDN assigns the pod a free IP address from the node's cluster subnet, attaches the host side of the pod's veth interface pair to the OVS bridge br0, and adds OpenFlow rules to the OVS database to route traffic addressed to the new pod to the correct OVS port. If the [[OpenShift_Network_Plugins#multitenant|ovs-multitenant]] plug-in is active, it also adds OpenFlow rules to tag traffic coming from the pod with the pod's VNID, and to allow traffic into the pod if the traffic's VNID matches the pod's VNID, or if it is the privileged VNID 0.

Nodes update their OpenFlow rules in response to the master's notifications when nodes are added or leave. When a new subnet is added, the node adds rules on br0 so that packets with a destination IP address in the remote subnet go to vxlan0 (port 1 on br0) and thus out onto the network. The [[OpenShift_Network_Plugins#subnet|ovs-subnet]] plug-in sends all packets across the VXLAN with VNID 0, but the [[OpenShift_Network_Plugins#multitenant|ovs-multitenant]] plug-in uses the appropriate VNID for the source container.

The SDN does not allow the master host (which is running the [[Open_vSwitch|OVS processes]]) to access the cluster network, so a master does not have access to pods via the cluster network, unless it is also running a node. | |||

When the [[OpenShift_Network_Plugins#multitenant|ovs-multitenant]] plug-in is active, the master also allocates VXLAN [[OpenShift_Network_Plugins#Virtual_Network_ID_.28VNID.29|VNIDs]] to projects. VNIDs are used to isolate the traffic.
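The isolation rule enforced by the multitenant plug-in can be sketched as a simple predicate. This is a simplified model based only on the description on this page (traffic is admitted when the VNIDs match or when VNID 0 is involved). The real enforcement is done by OpenFlow rules on br0, and the function name is hypothetical.

```python
PRIVILEGED_VNID = 0  # VNID of the "default" project, per the text


def traffic_allowed(source_vnid: int, destination_vnid: int) -> bool:
    """Simplified model of VNID-based isolation between pods.

    Assumption: a pod in a VNID 0 project may talk to, and be reached
    from, pods in any project; otherwise both VNIDs must match.
    """
    if PRIVILEGED_VNID in (source_vnid, destination_vnid):
        return True
    return source_vnid == destination_vnid


if __name__ == "__main__":
    print(traffic_allowed(7, 7))   # same project
    print(traffic_allowed(7, 12))  # different projects
    print(traffic_allowed(0, 12))  # traffic tagged with the privileged VNID
```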

====Packet Flow==== | |||

<font color=red>TODO: https://docs.openshift.com/container-platform/3.5/architecture/additional_concepts/sdn.html#sdn-packet-flow</font> | |||

====Pod IP Address==== | |||

A [[#The_Cluster_Network|cluster network]] IP address, that gets assigned to a [[#Pod|pod]]. | |||

===The Services Subnet=== | |||

OpenShift uses a "services subnet", also known as "kubernetes services network", in which [[OpenShift Service Concepts#Overview|OpenShift Services]] will be created within the [[#SDN|SDN]]. This network block should be private and must not conflict with any existing network blocks in the infrastructure to which pods, nodes, or the master may require access. It defaults to 172.30.0.0/16. It cannot be re-configured after deployment. If changed from the default, it must not be 172.17.0.0/16, which the docker0 network bridge uses by default, unless the docker0 network is also modified. It is configured with 'openshift_master_portal_net', 'openshift_portal_net' at [[OpenShift_3.5_Installation#Configure_Ansible_Inventory_File|installation]] and populates the master configuration file [[Master-config.yml#servicesSubnet| servicesSubnet]] element. Note that Docker expects its [[Docker_Server_Configuration#--insecure-registry|insecure registry]] to be available on this subnet.

The services subnet IP address of a specific service can be displayed with: | |||

[[Oc_describe#service|oc describe service]] <''service-name''> | |||

or | |||

[[#Service_IP_Address|oc get svc]] <''service-name''> | |||

====The Service IP Address==== | |||

An IP address from the [[#The_Services_Subnet|services subnet]] that is associated with a service. <span id='Service_IP_Address'></span>The service IP address is reported as "Cluster IP" by: | |||

[[Oc_get#services.2C_svc|oc get svc]] <''service-name''> | |||

This makes sense if we think about a service as a cluster of pods that provide the service. | |||

Example: | |||

oc get svc logging-es | |||

NAME CLUSTER-IP EXTERNAL-IP PORT(S) AGE | |||

logging-es 172.30.254.155 <none> 9200/TCP 31d | |||

===Docker Bridge Subnet=== | |||

Default 172.17.0.0/16. | |||

===Network Plugin=== | |||

{{Internal|OpenShift Network Plugins|OpenShift Network Plugins}} | |||

==Open vSwitch== | |||

{{Internal|Open vSwitch|Open vSwitch}} | |||

==DNS== | |||

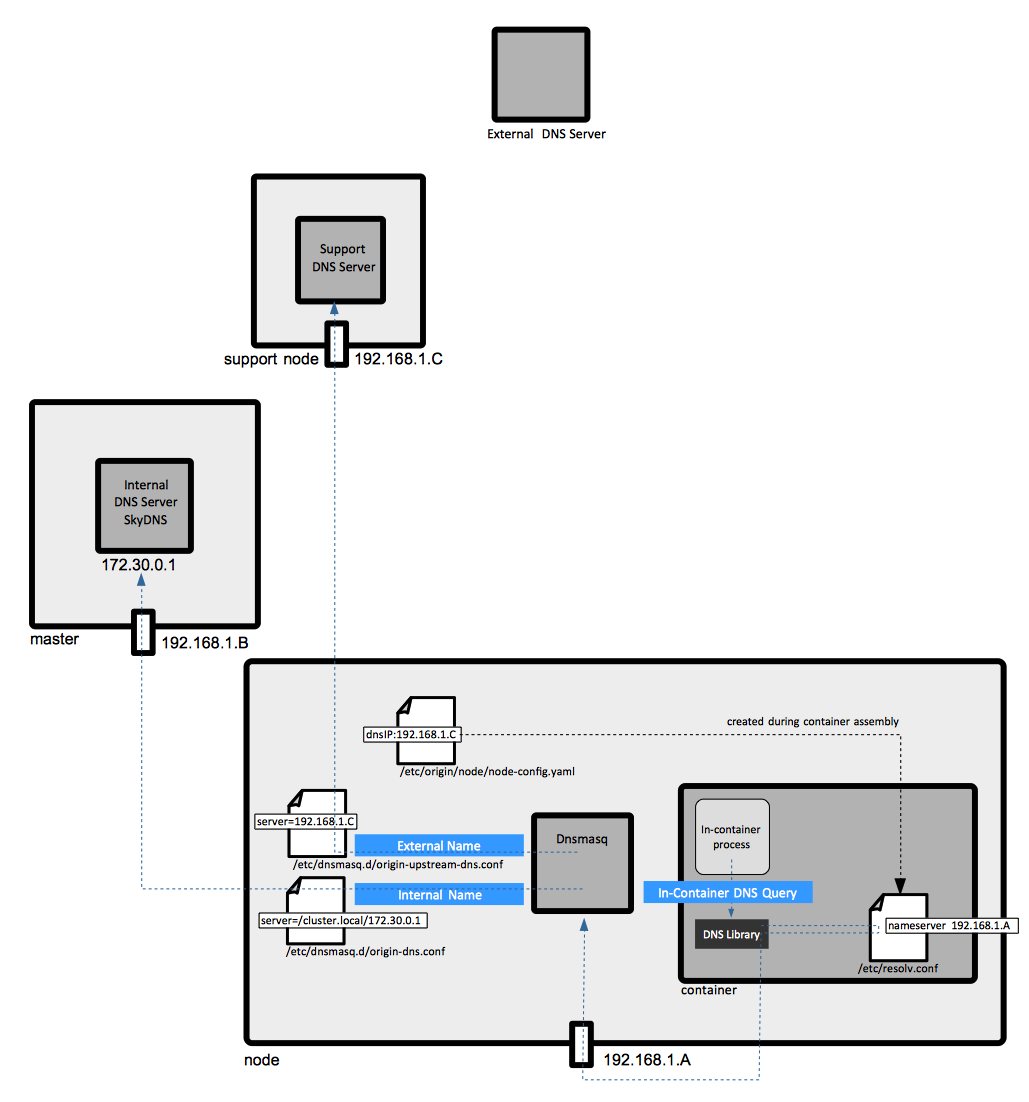

[[Image:OpenShiftDNSConcepts.png]] | |||

===External DNS Server=== | |||

An external DNS server is required to resolve the public wildcard name of the environment, and as a consequence all public names of the various application access points, to the public address of the [[#Default_Router|default router]], as per the [[#Networking_Workflow|networking workflow]]. If more than one router is deployed, the external DNS server should resolve the public wildcard name of the environment to the public IP address of the load balancer. For more details about the initial configuration, see [[OpenShift_3.5_Installation#External_DNS_Setup|External DNS Setup]].

===Optional Support DNS Server=== | |||

An optional support DNS server can be set up to translate local hostnames (such as master1.ocp36.local) to the internal IP addresses allocated to the hosts running OpenShift infrastructure. There is no good reason to make these addresses and names publicly accessible. The OpenShift advanced installation procedure factors it in automatically, as long as the base host images OpenShift is installed on top of are already configured to use it to resolve DNS names via [[/etc/resolv.conf]] or [[NetworkManager]]. No additional configuration via [[OpenShift_hosts#openshift_dns_ip|openshift_dns_ip]] is necessary.

===Internal DNS Server=== | |||

A DNS server used to resolve local resources. The most common use is to translate [[#Service|service]] names to service addresses. The internal DNS server listens on the [[#The_Service_IP_Address|service IP address]] 172.30.0.1:53. It is a SkyDNS instance built into OpenShift. It is deployed on the master and answers the queries for services. The naming queries issued from inside containers/pods are directed to the internal DNS service by Dnsmasq instances running on each node. The process is described [[#Dnsmasq|below]].

The internal DNS server will answer queries on the ".cluster.local" domain that have the following form: | |||

* <''namespace''>.cluster.local | |||

* <''service''>.<''namespace''>.svc.cluster.local - service queries | |||

* <''name''>.<''namespace''>.endpoints.cluster.local - endpoint queries | |||

Service DNS can still be used and responds with multiple A records, one for each pod of the service, allowing the client to round-robin between each pod. | |||
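The service and endpoint query forms listed above can be assembled mechanically. The helpers below are a minimal sketch; the function names are illustrative, and the sample service and namespace are taken from the "logging-es" example used elsewhere on this page.

```python
CLUSTER_DOMAIN = "cluster.local"


def service_fqdn(service: str, namespace: str) -> str:
    """Build the name used for service queries."""
    return f"{service}.{namespace}.svc.{CLUSTER_DOMAIN}"


def endpoints_fqdn(name: str, namespace: str) -> str:
    """Build the name used for endpoint queries."""
    return f"{name}.{namespace}.endpoints.{CLUSTER_DOMAIN}"


if __name__ == "__main__":
    print(service_fqdn("logging-es", "logging"))
    print(endpoints_fqdn("logging-es", "logging"))
```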

===Dnsmasq=== | |||

Dnsmasq is deployed on all nodes, including masters, as part of the installation process, and it works as a DNS proxy. It binds on the [[OpenShift_Concepts#Default_Network_Interface_on_a_Host|default interface]], which is not necessarily the interface servicing the physical OpenShift subnet, and resolves all DNS requests. All OpenShift "internal" names - service names, for example - and all unqualified names are assumed to be in the "''namespace''.svc.cluster.local", "svc.cluster.local", "cluster.local" and "local" domains and forwarded to the [[#Internal_DNS_Server|internal DNS server]]. This is done by Dnsmasq, as configured from /etc/dnsmasq.d/origin-dns.conf: | |||

server=/cluster.local/172.30.0.1 | |||

This configuration tells Dnsmasq to forward all queries for names in the "cluster.local" domain and sub-domains to the [[#Internal_DNS_Server|internal DNS server]] listening on 172.30.0.1. | |||

This is an example of such query (where 192.168.122.17 is the IP address of the local node on which Dnsmasq binds, and 172.30.254.155 is a service IP address) : | |||

nslookup logging-es.logging.svc.cluster.local | |||

Server: 192.168.122.17 | |||

Address: 192.168.122.17#53 | |||

Name: logging-es.logging.svc.cluster.local | |||

Address: 172.30.254.155 | |||

The non-OpenShift name requests are forwarded to the real DNS server stored in /etc/dnsmasq.d/origin-upstream-dns.conf: | |||

server=192.168.122.12 | |||

The configuration file /etc/dnsmasq.d/origin-dns.conf is deployed at installation, while /etc/dnsmasq.d/origin-upstream-dns.conf is created dynamically every time the external DNS changes, as is the case, for example, when the interface receives a new DHCP IP address.
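The forwarding decision that results from the two configuration files can be sketched as follows. This models only the domain-based choice of server described above. It does not model search-domain expansion of unqualified names or anything else Dnsmasq does, and the upstream address is the example value from this page, not a fixed default.

```python
INTERNAL_DNS = "172.30.0.1"      # from "server=/cluster.local/172.30.0.1"
UPSTREAM_DNS = "192.168.122.12"  # example value of "server=" in origin-upstream-dns.conf
INTERNAL_DOMAIN = "cluster.local"


def select_dns_server(name: str) -> str:
    """Return the server a query for 'name' would be forwarded to.

    Names in the cluster.local domain and its sub-domains go to the
    internal DNS server; everything else goes to the upstream server.
    """
    normalized = name.rstrip(".").lower()
    if normalized == INTERNAL_DOMAIN or normalized.endswith("." + INTERNAL_DOMAIN):
        return INTERNAL_DNS
    return UPSTREAM_DNS


if __name__ == "__main__":
    print(select_dns_server("logging-es.logging.svc.cluster.local"))
    print(select_dns_server("docs.openshift.com"))
```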

For more details, see {{Internal|Dnsmasq|Dnsmasq}} | |||

===Container /etc/resolv.conf=== | |||

The container /etc/resolv.conf is created while the container is being assembled, based on information available in [[Node-config.yml|node-config.yaml]]. Also see:

* [[Node-config.yml#dnsIP|node-config.yml dnsIP]] | |||

===External OpenShift DNS Resources=== | |||

* https://docs.openshift.com/container-platform/3.5/architecture/additional_concepts/networking.html#architecture-additional-concepts-openshift-dns | |||

==Routing Layer== | |||

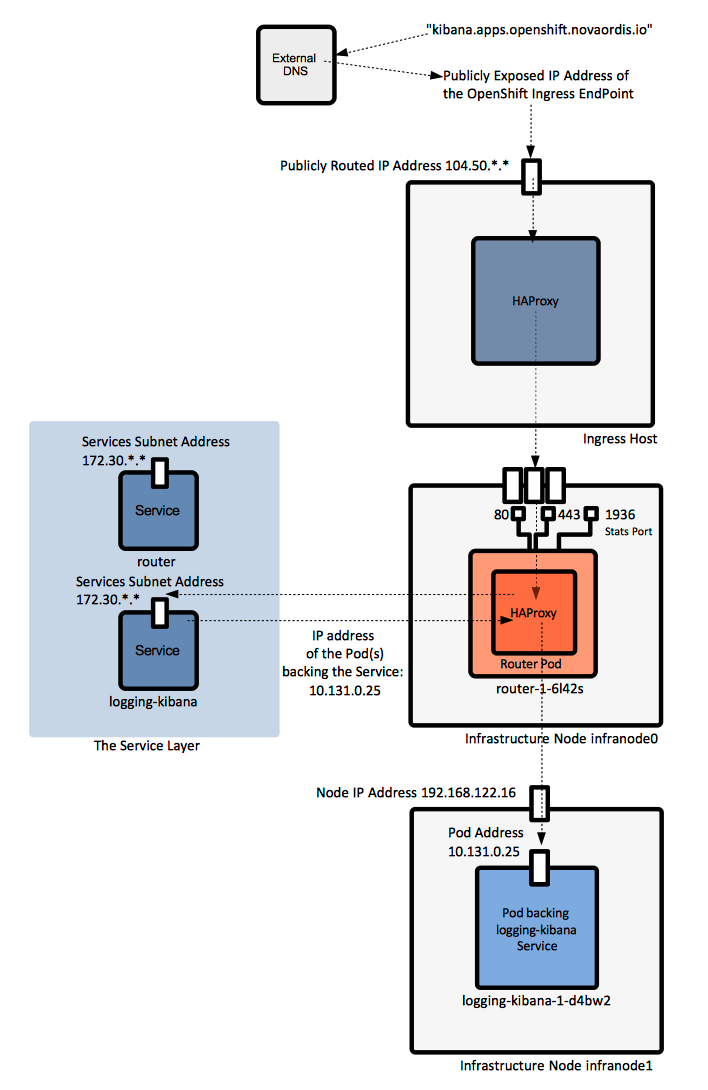

OpenShift networking provides a platform-wide ''routing layer'' that directs outside traffic to the correct pod IPs and ports. The routing layer cooperates with the [[#Service_Layer|service layer]]. Routing layer components themselves run in pods. They provide automated load balancing to pods, and routing around unhealthy pods. The routing layer is pluggable and extensible. For more details about the overall OpenShift workflow, see "[[#OpenShift_Workflow|OpenShift Workflow]]".

==Router==

{{External3|https://docs.openshift.com/container-platform/3.5/install_config/router/index.html|https://docs.openshift.com/container-platform/3.5/architecture/core_concepts/routes.html|https://docs.openshift.com/container-platform/latest/install_config/router/index.html}}

The router service routes external requests to applications inside the OpenShift environment. The router service is deployed as one or more pods. The pods associated with the router service are deployed as infrastructure containers on [[#Infrastructure_Node|infrastructure nodes]]. A router is usually created during the installation process. Additional routers can be created with [[oadm router]]. | |||

The router is the ingress point for all traffic flowing to backing pods implementing OpenShift [[#Service|Services]]. Routers run in containers, which may be deployed on any node (or nodes) in an OpenShift environment, as part of the [[#Default_Project|"default" project]]. A router works by resolving the fully qualified external DNS names that external requests are associated with ("kibana.apps.openshift.novaordis.io") into pod IP addresses, and proxying the requests directly into the pods that back the corresponding [[#Service|service]]. The router gets pod IPs from the [[#Service|service]], and proxies the requests to pods directly, not through the [[#Service|Service]].

Routers attach directly to ports 80 and 443 on all interfaces on a host, so deployment of the corresponding containers should be restricted to the infrastructure hosts where those ports are available. Statistics are usually available on port 1936.

===Relationship between Service and Router=== | |||

When the default router is used, the [[#Service_Layer|service layer]] is bypassed. The [[#Service|Service]] is used only to find which pods the service represents. The default router does the load balancing by proxying directly to the pod endpoints. | |||

[[Image:OpenShiftRouterConcepts.png]] | |||

===Sticky Sessions=== | |||

The router configuration determines the sticky session implementation. The default HAProxy template implements sticky sessions using the ''balance source'' directive.
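The idea behind source-based balancing is that the client address is hashed and the hash selects the backend, so that the same client keeps reaching the same pod while the set of endpoints is unchanged. The sketch below illustrates only that idea. It does not reproduce HAProxy's actual hash function or server weighting, and the function name and sample addresses are assumptions.

```python
import hashlib


def pick_endpoint(client_ip: str, endpoints: list) -> str:
    """Select a pod endpoint deterministically from the client address.

    Illustration of source-hash balancing, not HAProxy's algorithm.
    """
    if not endpoints:
        raise ValueError("no endpoints available")
    ordered = sorted(endpoints)  # stable order, independent of input order
    digest = hashlib.sha256(client_ip.encode("utf-8")).digest()
    index = int.from_bytes(digest[:8], "big") % len(ordered)
    return ordered[index]


if __name__ == "__main__":
    pods = ["10.130.1.17:8080", "10.129.0.5:8080", "10.128.2.9:8080"]
    first = pick_endpoint("192.168.122.50", pods)
    second = pick_endpoint("192.168.122.50", list(reversed(pods)))
    print(first == second)  # the same client maps to the same pod
```

Note that when a pod is added or removed, the modulo changes and clients may be mapped to a different pod, which is a known limitation of this kind of scheme.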

===Router Implementations=== | |||

====HAProxy Default Router==== | |||

{{External|https://docs.openshift.com/container-platform/3.5/architecture/core_concepts/routes.html#haproxy-template-router}} | |||

By default, the router process is an instance of [[HAProxy]], referred to as the <span id="Default_Router"></span>"Default Router". It uses the "openshift3/ose-haproxy-router" image, and it supports [[#unsecured_route|unsecured]], [[#edge_secured_route|edge-terminated]], [[#re-encryption_terminated_secured_route|re-encryption terminated]] and [[#passthrough_secured_route|passthrough terminated routes]] matching on HTTP host and request path.

====F5 Router==== | |||

The F5 Router integrates with existing F5 Big-IP systems. | |||

===Router Operations=== | |||

Information about the existing routers can be obtained with: | |||

[[Oadm_router#Information|oadm router]] -o yaml | |||

More details on router operations are available here: {{Internal|OpenShift Router Operations|Router Operations}} | |||

==Route==

Logically, a ''route'' is an external DNS entry, either in a top level domain or a dynamically allocated name, that is created to point to an OpenShift [[#Service|service]] so that the service can be accessed outside the cluster. The administrator may configure one or more [[#Router|routers]] to handle those routes, typically through an Apache or HAProxy load balancer/proxy. | |||

The route object describes a service to expose by giving it an externally reachable DNS host name. | |||

A route is a mapping of an FQDN and path to [[#Service_Endpoint|the endpoints of a service]]. Each route consists of a route name, a service selector and an optional security configuration:

NAME HOST/PORT PATH SERVICES PORT TERMINATION WILDCARD | |||

logging-kibana kibana.apps.openshift.novaordis.io logging-kibana <all> reencrypt/Redirect None | |||

A route can be <span id="unsecured_route"></span>''unsecured'' or ''secured''. | |||

The secure routes specify the TLS termination of the route, which relies on SNI (Server Name Indication). More specifically, they can be: | |||

* <span id="edge_secured_route"></span>edge secured (or edge terminated). TLS termination occurs at the router, prior to proxying the traffic to the destination. The front end of the router serves the TLS certificates, so they must be configured in the route. If the certificates are not configured in the route, the default certificate of the router is used. Connections from the router into the internal network are not encrypted. This is an [[OpenShift_Route_Definition#Secured_Edge-Terminated_Route|example of edge-terminated route]].

* <span id="passthrough_secured_route"></span>passthrough secured (or passthrough terminated). The encrypted traffic is sent to the destination. The router does not provide TLS termination, hence no keys or certificates are required on the router. The destination handles the certificates. This method supports client certificates. This is an [[OpenShift_Route_Definition#Passthrough-Terminated_Route|example of passthrough-terminated route]].

* <span id="re-encryption_terminated_secured_route"></span>re-encryption terminated secured. This is an [[OpenShift_Route_Definition#Re-encryption-Terminated_Route|example of re-encryption-terminated route]].

{{Internal|OpenShift Route Definition|Route Definition}} | |||

Routes can be displayed with the following commands: | |||

[[oc get#all|oc get all]] | |||

[[oc get#routes|oc get routes]] | |||

Routes can be created with API calls, a JSON or [[OpenShift Route Definition|YAML object definition file]], or the [[oc expose#service|oc expose service]] command.

===Path-Based Route===

A ''path-based route'' specifies a path component, allowing the same host to serve multiple routes.

===Route with Hostname===

Routes let you associate a service with an externally reachable hostname. If the hostname is not provided, OpenShift will generate one based on the <routename>[-<namespace>].<suffix> pattern.
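The generated hostname can be illustrated with a small helper. This is a sketch that assumes the pattern is the route name, an optional "-namespace" part, and the routing suffix. The function name and the sample values are illustrative, not taken from an actual cluster.

```python
from typing import Optional


def generated_route_host(route_name: str, suffix: str, namespace: Optional[str] = None) -> str:
    """Compose a default route hostname from its parts.

    Assumption: the namespace part is optional and, when present,
    is appended to the route name with a dash.
    """
    label = route_name if namespace is None else f"{route_name}-{namespace}"
    return f"{label}.{suffix}"


if __name__ == "__main__":
    print(generated_route_host("myapp", "apps.openshift.novaordis.io", "myproject"))
```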

===Default Routing Subdomain===

The suffix and the default routing subdomain can be configured in the [[Master-config.yml#subdomain|master configuration file]]. | |||

===Route Operations===

{{Internal|OpenShift Route Operations|Route Operations}} | |||

==Networking Workflow== | |||

The networking workflow is implemented at the [[#Networking_Layer|networking layer]]. | |||

* A user requests a page by pointing the browser to http://myapp.mydomain.com | |||

* The [[#External_DNS_Server|external DNS server]] resolves that request to the IP address of one of the hosts that run the [[#Default_Router|default router]]. That usually requires a wildcard CNAME record in the DNS server that points to the node hosting the router container.

* The default router selects a pod from the list of pods provided by the [[#Service|service]] and acts as a proxy for that pod (this is where the port can be translated from the external 443 to the internal 8443, for example).

=Resources= | |||

* cpu, requests.cpu

* resourcequotas

* services

* [[OpenShift_Security_Concepts#Secret|Secrets]]

* [[OpenShift_Volume_Concepts#ConfigMap|configmaps]]

* persistentvolumeclaims

* openshift.io/imagestreams

Most resources can be defined in JSON or YAML files, or via an API call. Resources can be exposed via the [[OpenShift_Volume_Concepts#Downward_API|downward API]] to the container.

=<span id='Resource_Quotas'></span>Resource Management= | |||

{{Internal|OpenShift Resource Management Concepts|Resource Management}} | |||

=Project= | |||

<span id='Projects'></span> | |||

Projects allow groups of [[OpenShift_Security_Concepts#User|users]] to work together, define ownership of resources and manage resources. They can be seen as the central vehicle for managing regular users' access to resources. The project restricts and tracks use of resources with quotas and limits. A project is a [[Kubernetes Concepts#Namespace|Kubernetes namespace]] with additional annotations. A project can contain any number of containers of any kind, and any grouping or structure can be enforced using labels. The project is the closest concept to an application: OpenShift does not know of applications, though it provides a [[oc new-app|new-app command]]. A project lets a community of users organize and manage their content in isolation from other communities. Users must be given access to projects by administrators, unless they are given permission to create projects, in which case they automatically have access to their own projects.

Most objects in the system are scoped by a namespace, with the exception of: | |||

* [[OpenShift_Security_Concepts#User|users]] | |||

* [[OpenShift_Security_Concepts#Group|groups]] | |||

* [[#Node|nodes]] | |||

* [[#Persistent_Volume|persistent volumes]] | |||

* [[#Images|images]] | |||

More details about OpenShift types are available here [[oc types|OpenShift types]]. | |||

Each project has its own set of <span id='Objects'>''objects''</span>: [[#Pod|pods]], [[#Service|services]], [[#Replication_Controller|replication controllers]], etc. The name of each resource is unique within a project. Developers may request projects to be created, but administrators control the resources allocated to the projects.

What actions a user can or cannot perform on a project's objects are specified by <span id='Policies'>[[OpenShift_Security_Concepts#Policy|policies]]</span>. | |||

<span id='Constraints'>''Constraints''</span> are quotas for each kind of [[#Object|object]] that can be limited. | |||

New projects are created with: | |||

[[Oc new-project#Overview|oc new-project]] | |||

==Current Project== | |||

The ''current project'' is a concept that applies to [[Oc#Namespace_Selection|oc]], and specifies the project oc commands apply to, without having to explicitly use the -n <project-name> qualifier. The current project can be set with [[Oc_project|oc project]] and read with [[oc status]]. The current project is part of the CLI tool's [[OpenShift_Command_Line_Tools#Context|current context]], maintained in the user's [[.kube config|.kube/config]].

==Global Project== | |||

In the context of the [[OpenShift_Network_Plugins#multitenant|ovs-multitenant]] SDN plugin, a project is ''global'' if it can receive [[#The_Cluster_Network|cluster network]] traffic from any pods, belonging to any project, and it can send traffic to any pods and services. <font color=red>Is the [[#Default_Project|default]] project a global project?</font> A project can be made global with:

[[OpenShift_Network_Operations#Pod_Network_Management|oadm pod-network]] make-projects-global <''project-1-name''> <''project-2-name''> | |||

==Standard Projects== | |||

===Default Project=== | |||

The "default" project is also referred to as "default namespace". It contains the following pods: | |||

* the [[OpenShift_Concepts#Integrated_Docker_Registry|integrated docker registry]] pod (memory consumption based on a test installation: 280 MB) | |||

* the [[OpenShift_Concepts#The_Registry_Console|registry console]] pod (memory consumption based on a test installation: 34 MB) | |||

* the [[#Router|router]] pod (memory consumption based on a test installation: 140 MB) | |||

In case the [[OpenShift_Network_Plugins#multitenant|ovs-multitenant]] SDN plug-in is installed, the "default" project has [[OpenShift_Network_Plugins#Virtual_Network_ID_.28VNID.29|VNID]] 0 and all its pods can send and receive traffic from any other pods. | |||

The "default" project can be used to store a new project template, if the default one needs to be modified. See [[OpenShift_Template_Operations#Modify_the_Template_for_New_Projects|Template Operations - Modify the Template for New Projects]]. | |||

==="logging" Project=== | |||

If logging support is deployed at installation or later, the participating pods ([[Kibana and OpenShift|kibana]], [[ElasticSearch and OpenShift|ElasticSearch]], [[Fluentd and OpenShift|fluentd]], [[OpenShift Curator|curator]]) are members of the [[OpenShift_Logging_Concepts#The_.22logging.22_Project|"logging" project]]. | |||

This is the memory consumption based on a test installation: | |||

* kibana pod: 95 MB | |||

* elasticsearch pod: 1.4GB | |||

* curator pod: 10 MB | |||

* fluentd pods max 130 MB | |||

==="openshift-infra" Project=== | |||

Contains the [[OpenShift Metrics Concepts#Overview|metrics components]]: | |||

* the [[OpenShift_Hawkular#Cassandra|Hawkular Cassandra]] pod | |||

* Hawkular pod | |||

* Heapster pod | |||

==="openshift" Project=== | |||

Contains standard [[#Template|templates]] and [[#.22openshift.22_Image_Streams|image streams]]. Images to use with OpenShift projects can be installed during the [[OpenShift_3.6_Installation#Load_Default_Image_Streams_and_Templates|OpenShift installation phase]], or they can be added later by running a command similar to:

oc -n openshift [[OpenShift_Image_and_ImageStream_Operations#Create_an_Individual_Image_Stream_with_import-image|import-image]] jboss-eap64-openshift:1.6 | |||

===Other Standard Projects=== | |||

* "kube-system" | |||

* "management-infra" | |||

==Projects and Applications== | |||

Each project may have multiple applications, and each application can be managed individually. | |||

A new application is created with: | |||

[[oc new-app]] | |||

===The "app" Label=== | |||

<font color=red> | |||

'''TODO''': app vs. application | |||

There is no OpenShift object that represents an application. However, the pods belonging to a specific application are grouped together in the Web console project window, under the same "Application" logical category. The grouping is done based on the "app" label value: all pods with the same "app" label value are represented as belonging to the same "application". The "app" label value can be explicitly set in the template that is used to instantiate the application, [[OpenShift_Template_Definition#Labels|in the "labels:" section]]. Some templates [[OpenShift_Build_and_Deploy_a_JEE_Application_with_S2I#Create_the_Application|expose this as a parameter]], so it can be set on the command line with a syntax similar to:

--param APPLICATION_NAME=<''application-name''> | |||

</font> | |||

===Application Operations=== | |||

{{Internal|OpenShift_Application_Operations#Overview|Application Operations}} | |||

==Project Operations== | |||

{{Internal|OpenShift Project Operations|Project Operations}} | |||

=Template=

{{External|https://docs.openshift.com/container-platform/latest/dev_guide/templates.html}}

{{External|https://docs.openshift.org/latest/dev_guide/templates.html}} | |||

A template is a resource that describes a set of objects that can be parameterized and processed, so they can be created at once. The template can be processed to create anything within a project, provided that permissions exist. The template may define a set of labels to apply to every object defined in the template. A template can use preset variables or randomized values, like passwords. | |||

Templates can be stored in, and processed from files, or they can be exposed via the OpenShift API and stored as part of the project. Users can define their own templates within their projects. | |||

The objects defined in a template collectively define a ''desired state''. OpenShift's responsibility is to make sure that the current state matches the desired state.

{{Internal|OpenShift Template Definition|Template Definition}} | |||

Specifying parameters in a template allows all objects instantiated by the template to see consistent values for these parameters when the template is processed. The parameters can be specified explicitly, or generated automatically. | |||
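The effect of template parameters can be sketched as follows: each parameter is resolved once, either from an explicitly supplied value or from a generated one, and the same value is then substituted into every object. This is a simplified model, not the logic of <code>oc process</code>. It assumes <code>${NAME}</code> style references, uses a random hexadecimal string as a stand-in for generated values, and all function, parameter and object names are hypothetical.

```python
import re
import secrets

PARAM_REF = re.compile(r"\$\{([A-Z0-9_]+)\}")


def resolve_parameters(declared, supplied):
    """Resolve each declared parameter exactly once.

    Explicitly supplied values win; missing ones are generated
    (random hex here, as a stand-in for template-defined generators).
    """
    return {name: supplied.get(name, secrets.token_hex(8)) for name in declared}


def substitute(obj, values):
    """Recursively replace ${NAME} references in strings, dicts and lists."""
    if isinstance(obj, str):
        return PARAM_REF.sub(lambda m: values.get(m.group(1), m.group(0)), obj)
    if isinstance(obj, dict):
        return {key: substitute(value, values) for key, value in obj.items()}
    if isinstance(obj, list):
        return [substitute(item, values) for item in obj]
    return obj


if __name__ == "__main__":
    objects = [
        {"kind": "Service", "metadata": {"name": "${APPLICATION_NAME}"}},
        {"kind": "Secret", "stringData": {"password": "${DB_PASSWORD}"}},
        {"kind": "DeploymentConfig", "env": [{"name": "DB_PASSWORD", "value": "${DB_PASSWORD}"}]},
    ]
    values = resolve_parameters(["APPLICATION_NAME", "DB_PASSWORD"], {"APPLICATION_NAME": "myapp"})
    processed = substitute(objects, values)
    # The generated password is identical in every object that references it.
    print(processed[1]["stringData"]["password"] == processed[2]["env"][0]["value"])
```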

The configuration can be generated from a template with the [[oc process]] command.

Most templates use pre-built S2I builder images, which include the programming language runtime and its dependencies. These builder images can also be used by themselves, without the corresponding template, for simple use cases.

==New Project Template== | |||

The master provisions projects based on the template that is identified by the [[Master-config.yml#projectRequestTemplate|"projectRequestTemplate" in master-config.yaml file]]. If nothing is specified there, new projects will be created based on a [[OpenShift Default New Project Template|built-in new project template]] that can be obtained with: | |||

oadm create-bootstrap-project-template -o yaml | |||

==New Application Template== | |||

[[oc new-app]] ''<template-name>'' | |||

==Template Libraries==

* Global template library - <font color=red>is this the "openshift" project?</font> | |||

* Project template library. A project template can be based on a JSON file and uploaded with [[Oc_create#Uploads_a_Template_to_the_Project_Template_Library|oc create]] to the project library. A template stored in the library can be instantiated with the [[oc new-app]] command.

* https://github.com/openshift/library | |||

* https://github.com/jboss-openshift/application-templates | |||

==Template Operations== | |||

{{Internal|OpenShift Template Operations|Template Operations}} | |||

=Build=

{{External|https://docs.openshift.com/container-platform/latest/architecture/core_concepts/builds_and_image_streams.html}} | |||

A ''build'' is the process of transforming input parameters into a resulting object. In most cases, that means transforming source code, other images, Dockerfiles, etc. into a ''runnable image''. A build is run inside of a [[Docker_Concepts#Privileged_Container|privileged container]] and has the same restrictions normal pods have. A build usually results in an image pushed to a Docker registry, subject to post-build tests. Builds are run with the builder [[OpenShift_Security_Concepts#Service_Account|service account]], which must have access to any secrets it needs, such as repository credentials - in addition to the builder service account having to have access to the build secrets, the [[#Build_Configuration|build configuration]] must [[OpenShift_Concepts#Build_Configuration_and_Build_Secrets|contain the required build secret]]. Builds can be [[#Build_Trigger|triggered]] manually or automatically. | |||

OpenShift supports several types of builds. The type of build is also referred to as the [[#Build_Strategy|build strategy]]: | |||

* [[#Docker_Build|Docker build]] | |||

* [[#Source-to-Image_.28S2I.29_Build|Source-to-Image (S2I or source) build]] | |||

* [[#Pipeline_Build|Pipeline build]] | |||

* [[#Custom_Build|Custom build]] | |||

Builds for a project can be reviewed by navigating with the web console to the project -> Builds -> Builds or invoking [[Oc_get#build.2C_builds|oc get builds]] from command line. Note these are actual executed builds, not build configurations. | |||

==Build Strategy==

===Docker Build===

Docker can be used to build [[Docker Concepts#Container_Image|images]] directly. The Docker build expects a repository with a [[Dockerfile]] and all artifacts required to produce a runnable image. It invokes the [[docker build]] command, and the result is a runnable image.

{{Internal|OpenShift_Build_Operations#Docker_Build|Create a Docker Build}} | |||

===Source-to-Image (S2I) Build===

{{External|https://docs.openshift.com/container-platform/latest/architecture/core_concepts/builds_and_image_streams.html#source-build}} | |||

{{External|https://docs.openshift.com/container-platform/latest/creating_images/s2i.html#creating-images-s2i}} | |||

A ''source to image build strategy'' is a process that takes a base image, called the [[#Builder_Image|builder image]], application source code and [[#S2I_Scripts|S2I scripts]], compiles the source and injects the build artifacts into the builder image, producing a ready-to-run new Docker image, which is the end product of the build. The image is then pushed into the [[#Integrated_Docker_Registry|integrated Docker registry]]. | |||

The essential characteristic of the source build is that the [[#Builder_Image|builder image]] provides both the build environment and the runtime image in which the build artifact is supposed to run. The build logic is encapsulated within the [[#S2I_Scripts|S2I scripts]]. The S2I scripts usually come with the builder image, but they can also be overridden by scripts placed in the source code or in a different location, specified in the build configuration. OpenShift supports a wide variety of languages and base images: Java, PHP, Ruby, Python, Node.js. [[#Incremental_Build|Incremental builds]] are supported. With a source build, the assemble process performs a large number of complex operations without creating a new layer at each step, resulting in a compact final image.

The source strategy is specified in the [[#Build_Configuration|build configuration]] as follows: | |||

 kind: BuildConfig
 spec:
   '''strategy''':
     '''type''': <font color=teal>'''Source'''</font>
     <font color=teal>'''sourceStrategy'''</font>:
       '''from''':
         kind: ImageStreamTag
         name: jboss-eap70-openshift:1.5
         namespace: openshift
   '''source''':
     '''type''': Git
     '''git''':
       uri: ...
   '''output''':
     '''to''':
       kind: '''ImageStreamTag'''
       name: <''app-image-repository-name''>:latest

The [[#Builder_Image|builder image]] is specified with the 'spec.strategy.sourceStrategy.from' element. The source code origin is specified with the 'spec.source' element. Assuming that the build is successful, the resulting image is pushed into the <font color=red>?</font> image stream and tagged with the 'output' image stream tag.

A working S2I Build Example is available here: {{Internal|OpenShift Build and Deploy a JEE Application with S2I|OpenShift Build and Deploy a JEE Application with S2I}} | |||

An example to create a source build configuration from scratch is available here: {{Internal|OpenShift_Build_Operations#Source_Build|Create a Source Build}} | |||

====The Build Process==== | |||

For a source build, the build process takes place in the [[#Builder_Image|builder image]] container. It consists of the following steps:
* Download the [[#S2I_Scripts|S2I scripts]].
* If it is an [[#Incremental_Build|incremental build]], save the previous build's artifacts with [[#save-artifacts|save-artifacts]].
* Create a work directory.
* Pull the source code from the repository into the work directory.
* Apply heuristics to detect how to build the code.
* Create a TAR archive that contains the [[#S2I_Scripts|S2I scripts]] and the source code.
* Untar the [[#S2I_Scripts|S2I scripts]], sources and artifacts.
* Invoke the [[#assemble|assemble]] script.
* Change to 'contextDir'; anything that resides outside 'contextDir' is ignored by the build.
* Run the build.
* Push the image to docker-registry.default.svc:5000/<''project-name''>/...

====Builder Image==== | |||

The builder image is an image that must contain both compile time dependencies and build tools, because the build process takes place inside it, and runtime dependencies and the application runtime, because the image will be used to create containers that execute the application. The builder image must also contain the tar archiving utility, available in $PATH, which is used during the build process to extract source code and S2I scripts, and the /bin/sh command line interpreter. A builder image should be able to generate some usage information by running the image with [[docker run]]. An article that shows how to create builder images is available here: https://blog.openshift.com/create-s2i-builder-image/.

====Extended Build==== | |||

An extended build uses a [[#Builder_Image|builder image]] and a ''runtime image'' as two separate images. In this case the builder image contains the build tooling but not the application runtime. The runtime image contains the application runtime. This is useful when we don't want the build tooling lying around in the runtime image.

====S2I Scripts==== | |||

The S2I scripts encapsulate the build logic and must be executable inside the [[#Builder_Image|builder image]]. They must be provided as an input of the source build and play an essential role in the [[#The_Build_Process|build process]]. They come from one of the following locations, listed below in the inverse order of their precedence (if the same script is available in more than one location, the script from the location listed last in the list is used): | |||

* Bundled with the [[#Builder_Image|builder image]], in a location specified as "io.openshift.s2i.scripts-url" label. A common value is "image:///usr/local/s2i". As an example, these are the S2I scripts that come with an EAP7 builder image: [https://github.com/NovaOrdis/playground/blob/master/openshift/sample-s2i-scripts/eap7-image/assemble assemble], [https://github.com/NovaOrdis/playground/blob/master/openshift/sample-s2i-scripts/eap7-image/run run] and [https://github.com/NovaOrdis/playground/blob/master/openshift/sample-s2i-scripts/eap7-image/save-artifacts save-artifacts]. | |||

* Bundled with the source code in an .s2i/bin directory of the application source repository. | |||

* Published at a URL that is specified in the [[OpenShift_Build_Configuration_Definition#scripts|build configuration definition]].
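The precedence rule above can be sketched in a few lines of Python. This is only an illustration of the "last listed location wins" rule: the location labels and the <code>resolve_script</code> helper are hypothetical names introduced here, not part of OpenShift or the S2I tooling.

```python
from typing import Mapping, Optional, Set

# Hypothetical labels for the three locations, ordered from lowest to
# highest precedence, mirroring the list above.
LOCATIONS = ("builder-image", "source-repository", "build-configuration")


def resolve_script(name: str, available: Mapping[str, Set[str]]) -> Optional[str]:
    """Return the location whose copy of script `name` is used, or None."""
    winner = None
    for location in LOCATIONS:
        if name in available.get(location, set()):
            # A later location overrides any earlier one.
            winner = location
    return winner
```

For example, if the builder image ships both "assemble" and "run" and the source repository ships its own "assemble" under .s2i/bin, the sketch picks the repository copy of "assemble" and the image copy of "run".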

Both the "io.openshift.s2i.scripts-url" label value specified in the image and the script specified in the [[OpenShift_Build_Configuration_Definition#scripts|build configuration]] can take one of the following forms: | |||

* image:///<''path-to-script-directory''> - the absolute path inside the image. | |||

* file:///<''path-to-script-directory''> - relative or absolute path to a directory on the host where the scripts are located. | |||

* http(s)://<''path-to-script-directory''> - URL to a directory where the scripts are located. | |||
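As a rough illustration of the three forms, the following Python sketch classifies a script location by its URL scheme. The function name and the returned descriptions are assumptions made for this example; the actual S2I tooling performs its own resolution.

```python
from urllib.parse import urlparse


def describe_scripts_url(url: str) -> str:
    """Describe where an S2I scripts URL points, based on its scheme."""
    parsed = urlparse(url)
    if parsed.scheme == "image":
        # Absolute path inside the builder image.
        return f"directory {parsed.path} inside the builder image"
    if parsed.scheme == "file":
        # Path to a directory on the host.
        return f"directory {parsed.path} on the host"
    if parsed.scheme in ("http", "https"):
        # Remote directory that holds the scripts.
        return f"remote directory at {url}"
    raise ValueError(f"unsupported scripts URL: {url}")
```

With the common label value "image:///usr/local/s2i", the sketch reports the /usr/local/s2i directory inside the builder image.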

The scripts are: | |||

=====assemble===== | |||

This is a required script. It builds the application artifacts from source and places them into appropriate directories inside the image. The script's main responsibility is to turn source code into a runnable application. It can also be used to inject configuration into the system. | |||

It should execute the following sequence: | |||

* Restore build artifacts, if the build is incremental. In this case [[#save-artifacts|save-artifacts]] must be also defined. | |||

* Place the application source in the build location. | |||

* Build. | |||

* Install the artifacts into locations that are appropriate for them to run. | |||

EAP7 builder image example: [https://github.com/NovaOrdis/playground/blob/master/openshift/sample-s2i-scripts/eap7-image/assemble assemble]. | |||

=====run===== | |||

This is a required script. It is invoked when the container is instantiated to execute the application. | |||

EAP7 builder image example: [https://github.com/NovaOrdis/playground/blob/master/openshift/sample-s2i-scripts/eap7-image/run run]. | |||

=====save-artifacts===== | |||

This is an optional script, needed when incremental builds are enabled. It gathers all dependencies that can speed up the build process, from the build image that has just completed the successful build (the Maven .m2 directory, for example). The dependencies are assembled into a tar file and streamed to standard output. | |||

EAP7 builder image example: [https://github.com/NovaOrdis/playground/blob/master/openshift/sample-s2i-scripts/eap7-image/save-artifacts save-artifacts]. | |||
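The "tar file streamed to standard output" contract can be sketched as follows. Real save-artifacts scripts are normally shell scripts, such as the EAP7 example linked above; this Python version only illustrates the idea, and the <code>stream_artifacts</code> name is hypothetical.

```python
import tarfile
from pathlib import Path
from typing import BinaryIO


def stream_artifacts(directory: Path, out: BinaryIO) -> None:
    """Write `directory` to `out` as an uncompressed tar stream."""
    # Mode "w|" produces a sequential stream, so `out` does not need to be
    # seekable; a pipe or standard output is sufficient.
    with tarfile.open(fileobj=out, mode="w|") as archive:
        # Store entries relative to the directory name, e.g. ".m2/...".
        archive.add(str(directory), arcname=directory.name)
```

Assuming the Maven cache lives in the user's home directory, a call such as <code>stream_artifacts(Path.home() / ".m2", sys.stdout.buffer)</code> would emit the archive on standard output.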

=====usage===== | |||

This is an optional script. It informs the user how to use the image properly.

=====test/run===== | |||

This is an optional script. It allows creating a simple process that checks whether the image works correctly. For more details see:

{{External|https://docs.openshift.com/container-platform/latest/creating_images/s2i_testing.html#creating-images-s2i-testing}} | |||

====Incremental Build==== | |||

The source build may be configured as an ''incremental build'', which re-uses the previously downloaded dependencies and previously built artifacts, in order to speed up the build.

 strategy:
   type: "Source"
   sourceStrategy:
     from:
       ...
     <font color=teal>'''incremental'''</font>: true

====Webhooks==== | |||

A source build can be configured to be automatically triggered when a new event, most commonly a push, occurs in the source repository. When the repository identifies a push, or any other kind of event it was configured for, it makes an HTTP invocation into an OpenShift URL. For internal repositories that run within the OpenShift cluster, such as [[OpenShift Gogs|Gogs]], the URL is https://openshift.default.svc.cluster.local/oapi/v1/namespaces/<''project-name''>/buildconfigs/<''build-configuration-name''>/webhooks/<''generic-secret-value''>/generic. The secret value can be [[OpenShift_Build_Configuration_Definition#Enable_a_Generic_Webhook_Trigger|manually configured in the build configuration]], but it is usually set to a randomly generated value when the build configuration is created. For an external repository, such as GitHub, the URL must be publicly accessible '''<font color=red>TODO</font>'''. Once the build is triggered, it proceeds as it would if it had been triggered by other means. Note that no build server is required for this mechanism to work, though a build server such as [[OpenShift_CI/CD_Concepts#Overview|Jenkins]] can be integrated and configured to drive a [[#Pipeline_Build|pipeline build]].
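The URL pattern for the generic webhook can be assembled as in the Python sketch below. The host and path come from the pattern quoted above for repositories running inside the cluster; the <code>generic_webhook_url</code> helper and its parameter names are hypothetical and only serve the illustration.

```python
from urllib.parse import quote

# Host quoted in the text above; it is reachable only from inside the cluster.
INTERNAL_API = "https://openshift.default.svc.cluster.local"


def generic_webhook_url(project: str, build_config: str, secret: str,
                        api: str = INTERNAL_API) -> str:
    """Build the generic webhook URL for a build configuration."""
    # Percent-encode each path segment so that unusual characters in the
    # secret cannot alter the structure of the URL.
    segments = [quote(part, safe="") for part in (project, build_config, secret)]
    return (f"{api}/oapi/v1/namespaces/{segments[0]}"
            f"/buildconfigs/{segments[1]}/webhooks/{segments[2]}/generic")
```

The repository's webhook configuration would then point at the returned URL; for an externally hosted repository, the <code>api</code> argument would have to be replaced with a publicly reachable address.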

The detailed procedure to configure a webhook trigger is available here: | |||

{{Internal|OpenShift_Build_Operations#Configure_a_Webhook_Trigger_for_a_Source_Build|Configure a Webhook Trigger for a Source Build}} | |||

====Tagging the Build Artifact==== | |||

Once the build process (the [[#assemble|assemble]] script) completes successfully, the build runtime sets the image's command to the [[#run|run]] script, and tags the image with the [[OpenShift Build Configuration Definition#output|output name]] specified in the build configuration. | |||

<font color=red>'''TODO'''</font>. How to tag the output image with a dynamic tag that is generated from the information in the source code itself? | |||

====MAVEN_MIRROR_URL====

<font color=red>'''TODO'''</font> 'MAVEN_MIRROR_URL' is an environment variable interpreted by the S2I builder, which uses the Maven repository whose URL is specified as a source of artifacts. For more details see: {{Internal|OpenShift_Nexus#MAVEN_MIRROR_URL|OpenShift Nexus}}

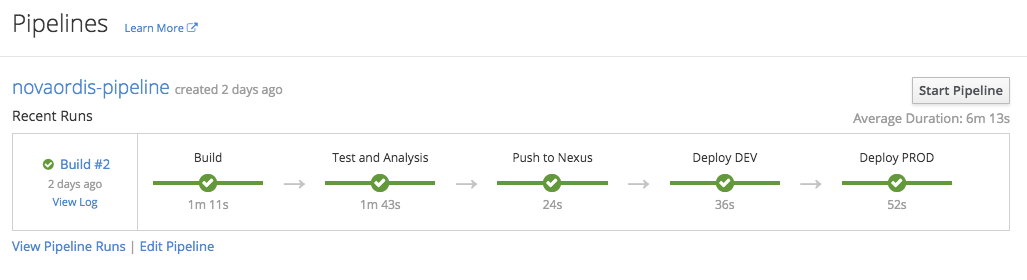

===Pipeline Build=== | |||

{{External|https://docs.openshift.com/container-platform/latest/architecture/core_concepts/builds_and_image_streams.html#pipeline-build}} | |||

{{External|https://docs.openshift.com/container-platform/latest/install_config/configuring_pipeline_execution.html#install-config-configuring-pipeline-execution}} | |||

{{External|https://docs.openshift.com/container-platform/latest/dev_guide/dev_tutorials/openshift_pipeline.html}} | |||

The ''pipeline build strategy'' allows developers to define the build logic externally, as a [[Jenkins_Concepts#Pipeline|Jenkins Pipeline]]. The logic is executed by an [[OpenShift_CI/CD_Concepts#Jenkins_Integration|OpenShift-integrated Jenkins instance]], which uses a series of specialized plug-ins to work with OpenShift. More details about how OpenShift and Jenkins interact are available in the [[OpenShift_CI/CD_Concepts#Overview|OpenShift CI/CD Concepts]] page. The specification of the build logic can be embedded directly into an OpenShift build configuration object, as shown below, or it can be specified externally in a [[OpenShift_CI/CD_Concepts#Jenkinsfile|Jenkinsfile]], which is then automatically integrated with OpenShift. The build can then be started, monitored, and managed by OpenShift in the same way as any other build type, and it can at the same time be managed from the Jenkins UI; the two representations are kept in sync. The pipeline's graphical representation is available both in the integrated Jenkins instance and in OpenShift directly, as a "Pipeline":

[[Image:OpenShiftPipeline.png]] | |||

The pipeline build strategy is specified in the [[#Build_Configuration|build configuration]] as follows: | |||

 kind: BuildConfig
 spec:
   '''strategy''':
     '''type''': <font color=teal>'''JenkinsPipeline'''</font>
     <font color=teal>'''jenkinsPipelineStrategy'''</font>:
       <font color=teal>'''jenkinsfile'''</font>: |-
         node('mvn') {
           //
           // Groovy script that defines the pipeline
           //
         }
   '''source''':
     '''type''': none
   '''output''': {}

This is an example of specifying the build logic inline in the build configuration. It is also possible to specify the build logic externally in a [[OpenShift_CI/CD_Concepts#Jenkinsfile|Jenkinsfile]]. Note that unlike in the [[#Source-to-Image_.28S2I.29_Build|source build]]'s case, no "output" is specified, because the output is fully defined in the Jenkins Groovy script. "source" may be used to specify a source repository URL that contains the [[OpenShift_CI/CD_Concepts#Jenkinsfile|Jenkinsfile]] pipeline definition.