Amazon EKS Concepts: Difference between revisions

| (129 intermediate revisions by the same user not shown) | |||

| Line 4: | Line 4: | ||

=Overview= | =Overview= | ||

EKS is the Amazon Kubernetes-based implementation of a generic infrastructure platform [[Infrastructure_Concepts#Container_Clusters|container cluster]]. | |||

=EKS Cluster= | =EKS Cluster= | ||

| Line 9: | Line 10: | ||

==Control Plane== | ==Control Plane== | ||

==EKS Worker Node== | ==EKS Worker Node== | ||

===Amazon EKS-optimized AMI=== | ===Amazon EKS-optimized AMI=== | ||

==Worker Node Group== | |||

A '''worker node group''' is a named EKS management entity that facilitates creation and management of [[Amazon_EC2_Concepts#Instance|EC2 instance]]-based Kubernetes [[Kubernetes_Control_Plane_and_Data_Plane_Concepts#Worker_Node|worker nodes]]. There are two kinds: [[#Managed_Node_Group|managed node groups]] and [[Amazon_EKS_Concepts#Self-Managed_Node_Group|self-managed node groups]]. When the EKS cluster is created, the operator has the option to [[Amazon_EKS_Create_and_Delete_Cluster#Create_Node_Group|create one or more node groups]]. The node groups created this way are [[#Managed_Node_Group|managed node groups]]. An EKS cluster can use more than one node group. | |||

===Node Group Name=== | ===Node Group Name=== | ||

===Managed Node Group=== | |||

{{External|https://docs.aws.amazon.com/eks/latest/userguide/managed-node-groups.html}} | |||

Managed node groups are worker node groups created by default during the provisioning of an EKS cluster. | |||

The main characteristic of a managed node group is that it abstracts out the creation and management of individual EC2 instances. The user does not need to concern themselves with creation of individual EC2 VMs, but only indicate the type and how many are needed. The EC2 instances created by a managed node group are based on EKS-optimized AMIs. When the nodes are updated or terminated, the pods running on them are gracefully drained, in such a way to ensure that the applications stay available. The updates respect the pod disruption budgets. | |||

Each managed node group has a one-to-one association with an [[Amazon_EC2_Auto-Scaling_Concepts#Auto-Scaling_Group|EC2 Auto-Scaling group]], and all nodes provisioned by the group are automatically made part of the EC2 Auto-Scaling group, so the nodes managed by a group can [[#Autoscaling|autoscale]]: the nodes launched as part of a group are automatically tagged for auto-discovery by the Kubernetes [[#Cluster_Autoscaler|Cluster Autoscaler]]. The associated auto-scaling group name can be retrieved from the managed group configuration. | |||

The nodes managed by a group can run across multiple availability zones. | |||

The node group can be used to apply Kubernetes labels to nodes. | |||

When a managed node group is created, the subnets to attach nodes to must be specified. Managed nodes can be launched both in public and private subnets. | |||

When the managed node group is created, a capacity type, which could be either On-Demand or Spot, must be selected. | |||

===Self-Managed Node Group=== | ===Self-Managed Node Group=== | ||

Contains self-managed worker nodes. The node group name can be used later to identify the Auto Scaling node group that is created for these worker nodes. | Contains self-managed worker nodes. The node group name can be used later to identify the Auto Scaling node group that is created for these worker nodes. | ||

===Node Group Operations=== | |||

* [[Amazon_EKS_Create_and_Delete_Cluster#Provision_Compute_Nodes|Create a node group]] | |||

* [[Amazon_EKS_Operations#Scale_Up_Node_Group|Scale up the node group]] | |||

== | ==Autoscaling== | ||

Autoscaling is implemented in EKS using [[#Worker_Node_Group|node groups]], which in turn use [[Amazon_EC2_Auto-Scaling_Concepts#Auto-Scaling_Group|EC2 auto scaling groups]]. The node groups can be created and associated with the EKS instance via the AWS console, when the [[Amazon_EKS_Create_and_Delete_Cluster#Create_Node_Group|EKS instance is created]]. | |||

==Cluster | If you are running a stateful application across multiple availability zones that is backed by EBS volumes and using the [[#Cluster_Autoscaler|cluster autoscaler]], you should configure multiple node groups, each scoped to a single availability zone, and you should enable the --balance-similar-node-groups feature. | ||

===Cluster Autoscaler=== | |||

{{Internal|Kubernetes_Autoscaling_Concepts#Cluster_Autoscaler|Kubernetes Cluster Autoscaler}} | |||

===Horizontal Pod Autoscaler=== | |||

{{Internal|Kubernetes_Autoscaling_Concepts#Horizontal_Pod_Autoscaling|Kubernetes Horizontal Pod Autoscaling}} | |||

===Vertical Pod Autoscaler=== | |||

{{Internal|Kubernetes_Autoscaling_Concepts#Vertical_Pod_Autoscaling|Vertical Pod Autoscaling}} | |||

==Cluster Endpoint== | ==Cluster Endpoint== | ||

| Line 32: | Line 59: | ||

==Subnets== | ==Subnets== | ||

==Security Groups== | ==Security Groups== | ||

See [[#EKS_Security_Groups|EKS Security Groups]] below. | |||

=EKS Platform Versions and Kubernetes Versions= | =EKS Platform Versions and Kubernetes Versions= | ||

| Line 61: | Line 89: | ||

==API Server User Management and Access Control== | ==API Server User Management and Access Control== | ||

When an EKS cluster is created, the IAM entity (user or role) that creates the cluster is automatically granted "system:masters" permissions in the cluster's RBAC configuration. <font color=darkgray>Where?</font>. Additional IAM users and roles can be added after cluster creation by editing the [[#aws-auth_ConfigMap|aws-auth ConfigMap]]. For more details on how kubectl picks up the caller identity, see [[Amazon_EKS_Operations#Connect_to_an_EKS_Cluster_with_kubectl|Connect to an EKS Cluster with kubectl]]. | |||

==aws-auth ConfigMap== | |||

The "aws-auth" ConfigMap is initially created to allow the nodes to join the cluster. It is created only after the cluster is configured with nodes. | |||

<syntaxhighlight lang='text'> | |||

kubectl -n kube-system -o yaml get cm aws-auth | |||

apiVersion: v1 | |||

kind: ConfigMap | |||

metadata: | |||

name: aws-auth | |||

namespace: kube-system | |||

data: | |||

mapRoles: | | |||

- groups: | |||

- system:bootstrappers | |||

- system:nodes | |||

rolearn: arn:aws:iam::999999999999:role/playground-eks-compute-role | |||

username: system:node:{{EC2PrivateDNSName}} | |||

</syntaxhighlight> | |||

However, the same ConfigMap can be used to [[Amazon_EKS_Operations#Allowing_Additional_Users_to_Access_the_Cluster|add RBAC access to IAM users and roles]], as described below: | |||

* [[Amazon_EKS_Operations#Allow_Individual_IAM_User_Access|Add individual user access]] | |||

* [[Amazon_EKS_Operations#Allow_Role_Access|Add role access]] | |||
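A role mapping of the kind these operations add might look like the entry printed by the sketch below. This is a minimal sketch, not the definitive procedure: the helper name <code>map_role_entry</code>, the role ARN, and the username are hypothetical, and the entry simply mirrors the shape of the node entry in the ConfigMap shown earlier.

```shell
# Hypothetical helper: print a mapRoles entry in the same shape as the
# node entry of the aws-auth ConfigMap.
# Arguments: role ARN, Kubernetes username, Kubernetes group.
map_role_entry() {
  cat <<EOF
- groups:
  - $3
  rolearn: $1
  username: $2
EOF
}

# Example values are made up for illustration.
map_role_entry arn:aws:iam::999999999999:role/playground-admin-role playground-admin system:masters
```

The printed entry would then be pasted under <code>mapRoles</code>, for instance while editing the ConfigMap with <code>kubectl -n kube-system edit cm aws-auth</code>.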

==<span id='IAM_Role'></span>IAM Roles Needed to Operate an EKS Cluster== | |||

There are several IAM roles relevant to an EKS cluster operation, which need to be specified during the installation sequence: | |||

===Cluster Service Role=== | |||

The cluster service role allows the Kubernetes control plane to manage AWS resources. Its trusted entity is AWS Service:eks and contains an "AmazonEKSClusterPolicy" policy. The cluster service role is needed when creating the EKS cluster. The cluster service role is different from the [[#Node_IAM_Role|Node IAM role]], which is associated with the compute nodes and can be created independently. | |||

====Cluster Service Role Operations==== | |||

* [[Amazon_EKS_Create_and_Delete_Cluster#Create_the_Cluster_Service_Role|Create a Cluster Service Role]]. | |||

===<span id='EKS_Worker_Node_IAM_Role'></span>Node IAM Role=== | |||

{{External|https://docs.aws.amazon.com/eks/latest/userguide/create-node-role.html}} | |||

The node IAM role, also known as the "cluster compute role", is the IAM role that compute nodes operate under. It gives the kubelet running on a worker node permission to make calls to other AWS APIs on your behalf, including access to the container registries where the application containers are stored. The trusted entity for this role is AWS Service:ec2. The policies associated with it are "AmazonEKSWorkerNodePolicy", "AmazonEC2ContainerRegistryReadOnly" and "AmazonEKS_CNI_Policy". | |||

It can be determined from the AWS console by going to EC2, then to a specific node, then looking up "IAM Role" for that node. Also if node groups are used, the IAM role is associated with the node group. | |||

====Node IAM Role Operations==== | |||

* [[Amazon_EKS_Operations#Create_a_Node_IAM_Role|Create a Node IAM Role]] | |||

==EKS IAM Permissions== | ==EKS IAM Permissions== | ||

| Line 82: | Line 140: | ||

==Pod Security Policy== | ==Pod Security Policy== | ||

{{External|https://docs.aws.amazon.com/eks/latest/userguide/pod-security-policy.html}} | {{External|https://docs.aws.amazon.com/eks/latest/userguide/pod-security-policy.html}} | ||

Also see: {{Internal|Kubernetes_Pod_Security_Policy_Concepts#Pod_Security_Policy_in_EKS|Pod Security Policy Concepts}} | |||

By default, the PodSecurityPolicy admission controller is enabled, but a fully permissive security policy with no restrictions, named "eks.privileged" is applied. The permission to "use" "eks.privileged" is imparted by the "eks:podsecuritypolicy:privileged" ClusterRole: | |||

<syntaxhighlight lang='yaml'> | |||

apiVersion: rbac.authorization.k8s.io/v1 | |||

kind: ClusterRole | |||

metadata: | |||

name: eks:podsecuritypolicy:privileged | |||

rules: | |||

- apiGroups: | |||

- policy | |||

resourceNames: | |||

- eks.privileged | |||

resources: | |||

- podsecuritypolicies | |||

verbs: | |||

- use | |||

</syntaxhighlight> | |||

The "eks:podsecuritypolicy:privileged" ClusterRole is bound by the "eks:podsecuritypolicy:authenticated" ClusterRoleBinding to all members of the "system:authenticated" [[Kubernetes_Security_Concepts#Group|Group]], so any authenticated identity can use it. | |||

==Bearer Tokens== | |||

===Webhook Token Authentication=== | |||

EKS natively supports bearer tokens via [[Kubernetes_Security_Concepts#Webhook_Token_Authentication|webhook token authentication]]. For more details see: {{Internal|EKS_Webhook_Token_Authentication|EKS Webhook Token Authentication}} | |||

==EKS Security Groups== | |||

{{External|https://docs.aws.amazon.com/eks/latest/userguide/sec-group-reqs.html}} | |||

= | <font color=darkgray> | ||

TODO: | |||

https://docs.aws.amazon.com/eks/latest/userguide/sec-group-reqs.html | |||

These are the security groups to apply to the EKS-managed Elastic Network Interfaces that are created in the worker subnets. | |||

Use the security group created by the CloudFormation stack <cluster-name>-*-ControlPlaneSecurityGroup-* | |||

If reusing infrastructure from an existing EKS cluster, get them from Networking → Cluster Security Group | |||

</font> | |||

===Cluster Security Group=== | |||

<font color=darkgray>TODO: next time read and NOKB this: https://docs.aws.amazon.com/eks/latest/userguide/sec-group-reqs.html</font> | |||

The cluster security group is <font color=darkgray>?</font>. | |||

The cluster security group is automatically created during the EKS cluster creation process, and its name is similar to "eks-cluster-sg-<cluster-name>-1234". For an existing cluster, the cluster security group can be retrieved by navigating to the cluster page in AWS console, then Configuration → Networking → Cluster Security Group, or by executing: | |||

<syntaxhighlight lang='bash'> | |||

aws eks describe-cluster --name <cluster-name> --query cluster.resourcesVpcConfig.clusterSecurityGroupId | |||

</syntaxhighlight> | |||

To start with, it should include an inbound rule for "All Traffic", all protocols and all port ranges having itself as source. | |||

If nodes inside the VPC need access to the API server, add an inbound rule for HTTPS and the VPC CIDR block. | |||

The cluster security group is automatically updated with NLB-generated access rules. | |||

The cluster security group may get into an invalid state, due to automated and manual operations, and symptoms include [[EKS_Node_Group_Nodes_Not_Able_to_Join_the_Cluster#Root_Cause|node groups nodes not being able to join the cluster]]. | |||

===Additional Security Groups=== | |||

Additional security groups are <font color=darkgray>?</font> | |||

Additional security groups can be specified during the EKS cluster [[Amazon_EKS_Create_and_Delete_Cluster#Security_groups|creation process via the AWS console]]. | |||

The additional security groups can be retrieved by navigating to the cluster page in AWS console, then Configuration → Networking → Additional Security Groups. | |||

== | ===EKS Security Groups Operations=== | ||

* [[Amazon_EKS_Create_and_Delete_Cluster#Security_groups|Assign security groups during EKS cluster creation with AWS Console]]. | |||

=Load Balancing and Ingress= | =Load Balancing and Ingress= | ||

{{External|https://docs.aws.amazon.com/eks/latest/userguide/load-balancing-and-ingress.html}} | {{External|https://docs.aws.amazon.com/eks/latest/userguide/load-balancing-and-ingress.html}} | ||

==Using an Ingress== | |||

{{Internal|Kubernetes Ingress Concepts|Kubernetes Ingress Concepts}} | {{Internal|Kubernetes Ingress Concepts|Kubernetes Ingress Concepts}} | ||

==Using a NLB== | |||

{{External|https://kubernetes.io/docs/concepts/services-networking/service/#aws-nlb-support}} | |||

{{External|https://kubernetes.io/docs/concepts/services-networking/service/#ssl-support-on-aws}} | |||

A dedicated NLB instance can be deployed per LoadBalancer service if the service is annotated with the "service.beta.kubernetes.io/aws-load-balancer-type" annotation with the value set to "nlb". | |||

<syntaxhighlight lang='yaml'> | |||

metadata: | |||

name: my-service | |||

annotations: | |||

service.beta.kubernetes.io/aws-load-balancer-type: 'nlb' | |||

</syntaxhighlight> | |||

The NLB is created upon service deployment, and destroyed upon service removal. The name of the load balancer becomes part of the automatically provisioned DNS name, so the NLB can be identified by getting the service DNS name with <code>kubectl get svc</code>, extracting the first part of the name, which comes before the first dash, and using that ID to look up the load balancer in the AWS EC2 console. | |||

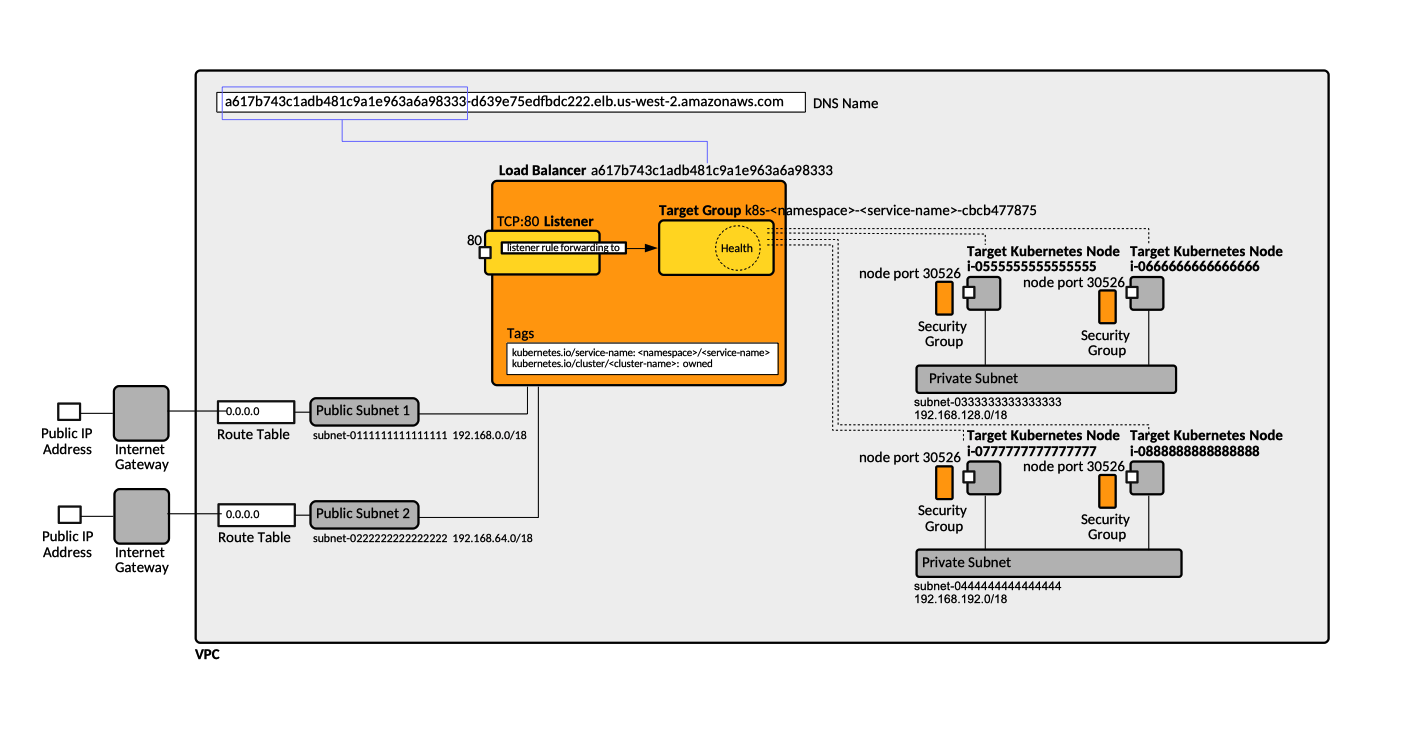

The load balancer will create an [[AWS_Elastic_Load_Balancing_Concepts#Listener|AWS ELB listener]] for each [[Kubernetes_Service_Concepts#Service_Port.28s.29|service port]] exposed by the EKS [[Kubernetes_Service_Concepts#LoadBalancer_Service|LoadBalancer service]], where the [[AWS_Elastic_Load_Balancing_Concepts#Listener_Port|listener port]] matches the service port. The load balancer listener will have a single forward rule to a dynamically created [[AWS_Elastic_Load_Balancing_Concepts#Target_Group|target group]], which includes all the Kubernetes nodes that are part of the cluster. The target group's [[AWS_Elastic_Load_Balancing_Concepts#Target_Group_Port_vs_Target_Port|target port]] matches the corresponding [[Kubernetes_Service_Concepts#NodePort_Service|NodePort service]] port, which is the same on all Kubernetes nodes. | |||

When a request arrives to the publicly exposed port of the NLB listener, the load balancer forwards it to the target group. The target group randomly picks up a Kubernetes node from the group, and forwards the invocation to the Kubernetes node's NodePort service. The NodePort service forwards the invocation to its associated [[Kubernetes_Service_Concepts#Service_.28ClusterIP_Service.29|ClusterIP service]], which in turn forwards the request to a random pod from its pool. More details in [[Kubernetes_Service_Concepts#NodePort_Service|NodePort Service]] section. | |||

If we want to expose a service, for example, only on port 443, we need to declare only port 443 in the LoadBalancer service definition. | |||

If the Service's <code>.spec.externalTrafficPolicy</code> is set to "Cluster", the client's IP address is not propagated to the end Pods. For more details see [[Kubernetes_Service_Concepts#Node.2FPod_Relationship|Node/Pod Relationship]] and [[Kubernetes_Service_Concepts#Client_IP_Preservation|Client IP Preservation]]. | |||

[[Image:EKSLoadBalancer.png]] | |||

===Kubernetes Node Security Group Dynamic Configuration=== | |||

To allow client traffic to reach instances behind an NLB, the nodes' security groups are modified with the following inbound rules: | |||

# "Health Check" rule. TCP on the NodePort ports (<code>.spec.healthCheckNodePort</code> for <code>.spec.externalTrafficPolicy</code> = Local), for the VPC CIDR. The rule is annotated with "kubernetes.io/rule/nlb/health=<loadBalancerName>" | |||

# Client traffic rule. TCP on the NodePort ports, for the <code>.spec.loadBalancerSourceRanges</code> (defaults to 0.0.0.0/0) IP range. The rule is annotated with "kubernetes.io/rule/nlb/client=<loadBalancerName>" | |||

# "MTU Discovery" rule. ICMP type 3, code 4 (fragmentation needed), for the <code>.spec.loadBalancerSourceRanges</code> (defaults to 0.0.0.0/0) IP range. The rule is annotated with "kubernetes.io/rule/nlb/mtu=<loadBalancerName>" | |||

===TLS Support=== | |||

Add the following annotation on the LoadBalancer service: | |||

====<tt>aws-load-balancer-ssl-cert</tt>==== | |||

<syntaxhighlight lang='yaml'> | |||

metadata: | |||

name: my-service | |||

annotations: | |||

service.beta.kubernetes.io/aws-load-balancer-ssl-cert: arn:aws:acm:us-east-1:123456789012:certificate/12345678-1234-1234-1234-123456789012 | |||

</syntaxhighlight> | |||

====<tt>aws-load-balancer-backend-protocol</tt>==== | |||

<syntaxhighlight lang='yaml'> | |||

metadata: | |||

name: my-service | |||

annotations: | |||

service.beta.kubernetes.io/aws-load-balancer-backend-protocol: (https|http|ssl|tcp) | |||

</syntaxhighlight> | |||

This annotation specifies the protocol supported by the pod. For "https" and "ssl", the load balancer expects the pod to authenticate itself over an encrypted connection using a certificate. | |||

"http" and "https" select layer 7 proxying: the ELB terminates the connection with the user, parses headers, and injects the X-Forwarded-For header with the user's IP address (pods only see the IP address of the ELB at the other end of its connection) when forwarding requests. | |||

"tcp" and "ssl" select layer 4 proxying: the ELB forwards traffic without modifying the headers. | |||

In a mixed-use environment where some ports are secured and others are left unencrypted, you can use the following annotations: | |||

<syntaxhighlight lang='yaml'> | <syntaxhighlight lang='yaml'> | ||

metadata: | |||

name: my-service | |||

annotations: | annotations: | ||

service.beta.kubernetes.io/aws-load-balancer- | service.beta.kubernetes.io/aws-load-balancer-backend-protocol: http | ||

service.beta.kubernetes.io/aws-load-balancer-ssl-ports: "443,8443" | |||

service.beta.kubernetes.io/aws-load-balancer-ssl- | |||

</syntaxhighlight> | </syntaxhighlight> | ||

In the above example, if the Service contained three ports, 80, 443, and 8443, then 443 and 8443 would use the SSL certificate, but 80 would just be proxied HTTP. | |||
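The two rules described in this subsection, the proxying layer chosen by the backend protocol and the set of ports that use the certificate, can be sketched as two small shell functions. This is an illustrative sketch only: the function names are hypothetical, and the port check assumes the <code>aws-load-balancer-ssl-ports</code> value is either "*" (every port) or a comma-separated list of port numbers.

```shell
# Proxying layer selected by the backend-protocol annotation value.
proxy_layer() {
  case "$1" in
    http|https) echo 7 ;;
    tcp|ssl)    echo 4 ;;
    *)          echo "unknown backend protocol: $1" >&2; return 1 ;;
  esac
}

# Succeeds when a service port is covered by the ssl-ports annotation value.
# Assumption: "*" means every port; otherwise the value is a comma-separated
# list of port numbers.
port_uses_tls() {
  ssl_ports=$1
  port=$2
  if [ "$ssl_ports" = "*" ]; then
    return 0
  fi
  case ",$ssl_ports," in
    *",$port,"*) return 0 ;;
    *)           return 1 ;;
  esac
}

# Walk through the three-port example: 80, 443 and 8443 with "443,8443".
echo "http backend: layer $(proxy_layer http)"
for p in 80 443 8443; do
  if port_uses_tls "443,8443" "$p"; then
    echo "port $p: TLS"
  else
    echo "port $p: plain"
  fi
done
```

Wrapping the list in commas before matching keeps port 443 from being mistaken for a match inside 8443.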

====<tt>aws-load-balancer-ssl-ports</tt>==== | |||

<syntaxhighlight lang='yaml'> | |||

metadata: | |||

name: my-service | |||

annotations: | |||

service.beta.kubernetes.io/aws-load-balancer-ssl-ports: "*" | |||

</syntaxhighlight> | |||

<font color=darkgray>TODO</font> | |||

====<tt>aws-load-balancer-connection-idle-timeout</tt>==== | |||

<syntaxhighlight lang='yaml'> | |||

metadata: | |||

name: my-service | |||

annotations: | |||

service.beta.kubernetes.io/aws-load-balancer-connection-idle-timeout: "3600" | |||

</syntaxhighlight> | |||

<font color=darkgray>TODO</font> | |||

====Predefined AWS SSL Policies==== | |||

<font color=darkgray>TODO: Predefined AWS SSL policies.</font> | |||

====Testing==== | |||

If correctly deployed, the SSL certificate is reported in the AWS EC2 Console → Load Balancer → search → Listeners → SSL Certificate. | |||

The service must be accessed through a DNS name covered by the certificate; otherwise the browser warns with NET::ERR_CERT_COMMON_NAME_INVALID. | |||

===Additional Configuration=== | |||

Additional configuration elements can also be specified as annotations: | |||

<syntaxhighlight lang='yaml'> | |||

metadata: | |||

name: my-service | |||

annotations: | |||

service.beta.kubernetes.io/aws-load-balancer-security-groups: 'sg-00000000000000000' | |||

</syntaxhighlight> | |||

Also see: {{Internal|Kubernetes_Service_Concepts#EKS|Kubernetes Service Concepts}} {{Internal|AWS_Elastic_Load_Balancing_Concepts#Network_Load_Balancer_and_EKS|AWS Elastic Load Balancing Concepts | Network Load Balancer}} | |||

===<span id='Idiosyncrasies'></span>NLB Idiosyncrasies=== | |||

A LoadBalancer deployment appears to update the Kubernetes nodes' security group. Rules are added, and if too many accumulate, the LoadBalancer deployment fails with: | |||

<syntaxhighlight lang='text'> | |||

Warning CreatingLoadBalancerFailed 17m service-controller Error creating load balancer (will retry): failed to ensure load balancer for service xx/xxxxx: error authorizing security group ingress: "RulesPerSecurityGroupLimitExceeded: The maximum number of rules per security group has been reached.\n\tstatus code: 400, request id: a4fc17a6-8803-4fb4-ac78-ee0db99030e0" | |||

</syntaxhighlight> | |||

The solution was to locate a node → Security → Security groups → pick the SG with "kubernetes.io/rule/nlb/" rules, and delete inbound "kubernetes.io/rule/nlb/" rules. | |||
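Finding the accumulated rules can also be scripted. The sketch below is an assumption-laden illustration, not the procedure used above: the helper name <code>filter_nlb_rules</code> and the rule IDs are hypothetical, the security group ID is a placeholder, and the AWS CLI pipeline is shown only as a comment because it needs credentials.

```shell
# Hypothetical helper: keep only the rules whose description carries the
# "kubernetes.io/rule/nlb/" prefix. Reads "rule-id<TAB>description" lines
# on standard input and prints the matching lines.
filter_nlb_rules() {
  grep 'kubernetes\.io/rule/nlb/' || true
}

# Assumed way to produce the input (needs AWS credentials; the group ID is
# a placeholder), left as a comment so the sketch runs offline:
#   aws ec2 describe-security-group-rules \
#     --filters Name=group-id,Values=sg-00000000000000000 \
#     --query 'SecurityGroupRules[].[SecurityGroupRuleId,Description]' \
#     --output text | filter_nlb_rules

# Offline example with made-up rule IDs and descriptions.
printf 'sgr-1\tkubernetes.io/rule/nlb/health=a1b2\nsgr-2\tssh from bastion\nsgr-3\tkubernetes.io/rule/nlb/mtu=a1b2\n' | filter_nlb_rules
```

The sketch only lists candidate rules; deleting them stays a manual decision, since rules belonging to load balancers that are still in use must be kept.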

=Storage= | =Storage= | ||

| Line 113: | Line 323: | ||

==Amazon EFS CSI== | ==Amazon EFS CSI== | ||

{{Internal|Amazon EFS CSI|Amazon EFS CSI}} | {{Internal|Amazon EFS CSI|Amazon EFS CSI}} | ||

=eksctl= | |||

{{Internal|eksctl|eksctl}} | |||

Latest revision as of 02:57, 10 January 2022

Internal

Overview

EKS is the Amazon Kubernetes-based implementation of a generic infrastructure platform container cluster.

EKS Cluster

Control Plane

EKS Worker Node

Amazon EKS-optimized AMI

Worker Node Group

A worker node group is a named EKS management entity that facilitates creation and management of EC2 instance-based Kubernetes worker nodes. There are two kinds: managed node groups and self-managed node groups. When the EKS cluster is created, the operator has the option to create one or more node groups. The node groups created this way are managed node groups. An EKS cluster can use more than one node group.

Node Group Name

Managed Node Group

Managed node groups are worker node groups created by default during the provisioning of an EKS cluster.

The main characteristic of a managed node group is that it abstracts out the creation and management of individual EC2 instances. The user does not need to concern themselves with creation of individual EC2 VMs, but only indicate the type and how many are needed. The EC2 instances created by a managed node group are based on EKS-optimized AMIs. When the nodes are updated or terminated, the pods running on them are gracefully drained, in such a way to ensure that the applications stay available. The updates respect the pod disruption budgets.

Each managed node group has a one-to-one association with an EC2 Auto-Scaling group, and all nodes provisioned by the group are automatically made part of the EC2 Auto-Scaling group, so the nodes managed by a group can autoscale: the nodes launched as part of a group are automatically tagged for auto-discovery by the Kubernetes Cluster Autoscaler. The associated auto-scaling group name can be retrieved from the managed group configuration.

The nodes managed by a group can run across multiple availability zones.

The node group can be used to apply Kubernetes labels to nodes.

When a managed node group is created, the subnets to attach nodes to must be specified. Managed nodes can be launched both in public and private subnets.

When the managed node group is created, a capacity type, which could be either On-Demand or Spot, must be selected.

Self-Managed Node Group

Contains self-managed worker nodes. The node group name can be used later to identify the Auto Scaling node group that is created for these worker nodes.

Node Group Operations

Autoscaling

Autoscaling is implemented in EKS using node groups, which in turn use EC2 auto scaling groups. The node groups can be created and associated with the EKS instance via the AWS console, when the EKS instance is created.

If you are running a stateful application across multiple availability zones that is backed by EBS volumes and using the cluster autoscaler, you should configure multiple node groups, each scoped to a single availability zone, and you should enable the --balance-similar-node-groups feature.
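The per-zone layout recommended here can be sketched as a small shell loop. This is a minimal sketch under stated assumptions: the function name, cluster name, role ARN, and subnet IDs are hypothetical, one subnet per availability zone is assumed, and the function only prints the aws eks create-nodegroup commands rather than running them.

```shell
# Hypothetical helper: print (do not run) one "aws eks create-nodegroup"
# command per availability zone, each scoped to that zone's subnet.
# Usage: print_per_az_node_groups <cluster> <node-role-arn> <az=subnet-id>...
print_per_az_node_groups() {
  cluster=$1
  role=$2
  shift 2
  for pair in "$@"; do
    az=${pair%%=*}       # text before the first "="
    subnet=${pair#*=}    # text after the first "="
    printf 'aws eks create-nodegroup --cluster-name %s --nodegroup-name %s-%s --node-role %s --subnets %s\n' \
      "$cluster" "$cluster" "$az" "$role" "$subnet"
  done
}

# Example values are made up for illustration.
print_per_az_node_groups playground \
  arn:aws:iam::999999999999:role/playground-eks-compute-role \
  us-east-1a=subnet-aaaa1111 us-east-1b=subnet-bbbb2222
```

Because each group is confined to one zone, new nodes come up in the zone where a pod's EBS volume lives, and the balancing flag on the Cluster Autoscaler keeps the per-zone groups at similar sizes.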

Cluster Autoscaler

Horizontal Pod Autoscaler

Vertical Pod Autoscaler

Cluster Endpoint

AWS Infrastructure Requirements

TODO: Topology diagram

Cluster VPC

Subnets

Security Groups

See EKS Security Groups below.

EKS Platform Versions and Kubernetes Versions

Amazon EKS platform version.

Integration with ECR

Logging

Control Plane Logging

SLA

aws-iam-authenticator

Page 17.

aws-iam-authenticator Operations

.kube/config Configuration

AWS documentation refers to the Kubernetes configuration file as "kubeconfig".

EKS Security

API Server User Management and Access Control

When an EKS cluster is created, the IAM entity (user or role) that creates the cluster is automatically granted "system:masters" permissions in the cluster's RBAC configuration. Where?. Additional IAM users and roles can be added after cluster creation by editing the aws-auth ConfigMap. For more details on how kubectl picks up the caller identity, see Connect to an EKS Cluster with kubectl.

aws-auth ConfigMap

The "aws-auth" ConfigMap is initially created to allow the nodes to join the cluster. It is created only after the cluster is configured with nodes.

kubectl -n kube-system -o yaml get cm aws-auth

apiVersion: v1

kind: ConfigMap

metadata:

name: aws-auth

namespace: kube-system

data:

mapRoles: |

- groups:

- system:bootstrappers

- system:nodes

rolearn: arn:aws:iam::999999999999:role/playground-eks-compute-role

username: system:node:{{EC2PrivateDNSName}}

However, the same ConfigMap can be used to add RBAC access to IAM users and roles, as described below:

IAM Roles Needed to Operate an EKS Cluster

There are several IAM roles relevant to an EKS cluster operation, which need to be specified during the installation sequence:

Cluster Service Role

The cluster service role allows the Kubernetes control plane to manage AWS resources. Its trusted entity is AWS Service:eks and contains an "AmazonEKSClusterPolicy" policy. The cluster service role is needed when creating the EKS cluster. The cluster service role is different from the Node IAM role, which is associated with the compute nodes and can be created independently.

Cluster Service Role Operations

Node IAM Role

The node IAM role, also known as the "cluster compute role", is the IAM role that compute nodes operate under. It gives the kubelet running on a worker node permission to make calls to other AWS APIs on your behalf, including access to the container registries where the application containers are stored. The trusted entity for this role is AWS Service:ec2. The policies associated with it are "AmazonEKSWorkerNodePolicy", "AmazonEC2ContainerRegistryReadOnly" and "AmazonEKS_CNI_Policy".

It can be determined from the AWS console by going to EC2, then to a specific node, then looking up "IAM Role" for that node. Also if node groups are used, the IAM role is associated with the node group.

Node IAM Role Operations

EKS IAM Permissions

These are technically "actions", but they are commonly referred to as "permissions", which implies that the action is part of a formal permission construct associated with the entity requiring it.

- eks:DescribeCluster

Pod Security Policy

Also see:

By default, the PodSecurityPolicy admission controller is enabled, but a fully permissive security policy with no restrictions, named "eks.privileged" is applied. The permission to "use" "eks.privileged" is imparted by the "eks:podsecuritypolicy:privileged" ClusterRole:

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRole

metadata:

name: eks:podsecuritypolicy:privileged

rules:

- apiGroups:

- policy

resourceNames:

- eks.privileged

resources:

- podsecuritypolicies

verbs:

- use

The "eks:podsecuritypolicy:privileged" ClusterRole is bound by the "eks:podsecuritypolicy:authenticated" ClusterRoleBinding to all members of the "system:authenticated" Group, so any authenticated identity can use it.

Bearer Tokens

Webhook Token Authentication

EKS natively supports bearer tokens via webhook token authentication. For more details see:

EKS Security Groups

TODO:

https://docs.aws.amazon.com/eks/latest/userguide/sec-group-reqs.html

These are the security groups to apply to the EKS-managed Elastic Network Interfaces that are created in the worker subnets.

Use the security group created by the CloudFormation stack <cluster-name>-*-ControlPlaneSecurityGroup-*

If reusing infrastructure from an existing EKS cluster, get them from Networking → Cluster Security Group

Cluster Security Group

TODO: next time read and NOKB this: https://docs.aws.amazon.com/eks/latest/userguide/sec-group-reqs.html

The cluster security group is ?.

The cluster security group is automatically created during the EKS cluster creation process, and its name is similar to "eks-cluster-sg-<cluster-name>-1234". For an existing cluster, the cluster security group can be retrieved by navigating to the cluster page in AWS console, then Configuration → Networking → Cluster Security Group, or by executing:

aws eks describe-cluster --name <cluster-name> --query cluster.resourcesVpcConfig.clusterSecurityGroupId

To start with, it should include an inbound rule for "All Traffic", all protocols and all port ranges having itself as source.

If nodes inside the VPC need access to the API server, add an inbound rule for HTTPS and the VPC CIDR block.

The cluster security group is automatically updated with NLB-generated access rules.

The cluster security group may get into an invalid state, due to automated and manual operations, and symptoms include node groups nodes not being able to join the cluster.

Additional Security Groups

Additional security groups are ?

Additional security groups can be specified during the EKS cluster creation process via the AWS console.

The additional security groups can be retrieved by navigating to the cluster page in AWS console, then Configuration → Networking → Additional Security Groups.

EKS Security Groups Operations

Load Balancing and Ingress

Using an Ingress

Using a NLB

A dedicated NLB instance can be deployed per LoadBalancer service if the service is annotated with the "service.beta.kubernetes.io/aws-load-balancer-type" annotation with the value set to "nlb".

metadata:

name: my-service

annotations:

service.beta.kubernetes.io/aws-load-balancer-type: 'nlb'

The NLB is created upon service deployment, and destroyed upon service removal. The name of the load balancer becomes part of the automatically provisioned DNS name, so the NLB can be identified by getting the service DNS name with kubectl get svc, extracting the first part of the name, which comes before the first dash, and using that ID to look up the load balancer in the AWS EC2 console.
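The name extraction described above can be sketched in shell. This is a minimal sketch: the helper name and the sample hostname are hypothetical, and the kubectl and aws commands appear only as comments because they need a live cluster and AWS credentials.

```shell
# Hypothetical helper: derive the load balancer name from the DNS name
# reported for the service, keeping the part before the first dash.
nlb_name_from_hostname() {
  printf '%s\n' "${1%%-*}"
}

# Assumed way to obtain the DNS name and look the load balancer up
# (requires cluster and AWS access, so left as comments):
#   host=$(kubectl get svc my-service -o jsonpath='{.status.loadBalancer.ingress[0].hostname}')
#   aws elbv2 describe-load-balancers --names "$(nlb_name_from_hostname "$host")"

# Offline example with a made-up hostname.
nlb_name_from_hostname "a1b2c3d4e5f6478290abcdef01234567-0123456789abcdef.elb.us-east-1.amazonaws.com"
```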

The load balancer will create an AWS ELB listener for each service port exposed by the EKS LoadBalancer service, where the listener port matches the service port. The load balancer listener will have a single forward rule to a dynamically created target group, which includes all the Kubernetes nodes that are part of the cluster. The target group's target port matches the corresponding NodePort service port, which is the same on all Kubernetes nodes.

When a request arrives at the publicly exposed port of the NLB listener, the load balancer forwards it to the target group. The target group randomly picks a Kubernetes node from the group and forwards the invocation to that node's NodePort service. The NodePort service forwards the invocation to its associated ClusterIP service, which in turn forwards the request to a random pod from its pool. More details are available in the NodePort Service section.

If we want to expose a service, for example, only on port 443, we need to declare only port 443 in the LoadBalancer service definition.

If the Service's .spec.externalTrafficPolicy is set to "Cluster", the client's IP address is not propagated to the end Pods. For more details see Node/Pod Relationship and Client IP Preservation.

Kubernetes Node Security Group Dynamic Configuration

To allow client traffic to reach instances behind an NLB, the nodes' security groups are modified with the following inbound rules:

- "Health Check" rule. TCP on the NodePort ports (.spec.healthCheckNodePort for .spec.externalTrafficPolicy = Local), for the VPC CIDR. The rule is annotated with "kubernetes.io/rule/nlb/health=<loadBalancerName>"

- "Client Traffic" rule. TCP on the NodePort ports, for the .spec.loadBalancerSourceRanges (defaults to 0.0.0.0/0) IP range. The rule is annotated with "kubernetes.io/rule/nlb/client=<loadBalancerName>"

- "MTU Discovery" rule. ICMP on ports 3 and 4, for the .spec.loadBalancerSourceRanges (defaults to 0.0.0.0/0) IP range. The rule is annotated with "kubernetes.io/rule/nlb/mtu=<loadBalancerName>"
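The three rule kinds can be modeled as data to see how quickly they accumulate in a node security group. This is a sketch under stated assumptions: the function name, the dictionary layout, the example CIDR and the load balancer name are all hypothetical, and the code does not reproduce what the Kubernetes service controller actually executes.

```python
def nlb_node_rules(lb_name, vpc_cidr, node_ports,
                   source_ranges=("0.0.0.0/0",),
                   health_check_node_port=None):
    """Build an in-memory description of the inbound rules listed above."""
    # With externalTrafficPolicy = Local, health checks go to healthCheckNodePort.
    if health_check_node_port is not None:
        health_ports = [health_check_node_port]
    else:
        health_ports = list(node_ports)

    rules = []
    for port in health_ports:
        rules.append({"protocol": "tcp", "port": port, "source": vpc_cidr,
                      "description": f"kubernetes.io/rule/nlb/health={lb_name}"})
    for cidr in source_ranges:
        for port in node_ports:
            rules.append({"protocol": "tcp", "port": port, "source": cidr,
                          "description": f"kubernetes.io/rule/nlb/client={lb_name}"})
        rules.append({"protocol": "icmp", "port": "3,4", "source": cidr,
                      "description": f"kubernetes.io/rule/nlb/mtu={lb_name}"})
    return rules


# Hypothetical values, for illustration only.
example_rules = nlb_node_rules("a1b2c3", "10.0.0.0/16", [30080, 31443])
for rule in example_rules:
    print(rule["protocol"], rule["port"], rule["source"], rule["description"])
```

Each additional service port or source range adds more rules, which is worth keeping in mind given the per-security-group rule limit.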

TLS Support

Add the following annotation on the LoadBalancer service:

aws-load-balancer-ssl-cert

metadata:
  name: my-service
  annotations:
    service.beta.kubernetes.io/aws-load-balancer-ssl-cert: arn:aws:acm:us-east-1:123456789012:certificate/12345678-1234-1234-1234-123456789012

aws-load-balancer-backend-protocol

metadata:
  name: my-service
  annotations:
    service.beta.kubernetes.io/aws-load-balancer-backend-protocol: (https|http|ssl|tcp)

This annotation specifies the protocol supported by the pod. For "https" and "ssl", the load balancer expects the pod to authenticate itself over an encrypted connection using a certificate.

"http" and "https" select layer 7 proxying: the ELB terminates the connection with the user, parses headers, and injects the X-Forwarded-For header with the user's IP address (pods only see the IP address of the ELB at the other end of its connection) when forwarding requests.

"tcp" and "ssl" select layer 4 proxying: the ELB forwards traffic without modifying the headers.

In a mixed-use environment where some ports are secured and others are left unencrypted, you can use the following annotations:

metadata:
  name: my-service
  annotations:
    service.beta.kubernetes.io/aws-load-balancer-backend-protocol: http
    service.beta.kubernetes.io/aws-load-balancer-ssl-ports: "443,8443"

In the above example, if the Service contained three ports, 80, 443, and 8443, then 443 and 8443 would use the SSL certificate, but 80 would just be proxied HTTP.
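The port selection in this example can be sketched as a small function. It is an illustration only: the function name is hypothetical, and it handles just numeric port lists and the "*" wildcard, which is assumed here to mean all service ports.

```python
def tls_ports(service_ports, ssl_ports_annotation):
    """Return the service ports that would use the SSL certificate."""
    value = ssl_ports_annotation.strip()
    if value == "*":
        # Assumption: the wildcard selects every port of the service.
        return set(service_ports)
    wanted = {int(item) for item in value.split(",") if item.strip()}
    return {port for port in service_ports if port in wanted}


ports = [80, 443, 8443]
secured = tls_ports(ports, "443,8443")
plain = set(ports) - secured

print("SSL certificate:", sorted(secured))
print("plain HTTP:", sorted(plain))
```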

aws-load-balancer-ssl-ports

metadata:
  name: my-service
  annotations:
    service.beta.kubernetes.io/aws-load-balancer-ssl-ports: "*"

TODO

aws-load-balancer-connection-idle-timeout

metadata:
  name: my-service
  annotations:
    service.beta.kubernetes.io/aws-load-balancer-connection-idle-timeout: "3600"

TODO

Predefined AWS SSL Policies

TODO: Predefined AWS SSL policies.

Testing

If correctly deployed, the SSL certificate is reported in the AWS EC2 Console → Load Balancer → search → Listeners → SSL Certificate.

The service must be accessed within the DNS domain the certificate was issued for, otherwise the browser will warn with NET::ERR_CERT_COMMON_NAME_INVALID.

Additional Configuration

Additional configuration elements can also be specified as annotations:

metadata:
  name: my-service
  annotations:
    service.beta.kubernetes.io/aws-load-balancer-security-groups: 'sg-00000000000000000'


NLB Idiosyncrasies

It seems that a LoadBalancer deployment updates the Kubernetes nodes' security group. Rules are added, and if too many rules are added, the LoadBalancer deployment fails with:

Warning CreatingLoadBalancerFailed 17m service-controller Error creating load balancer (will retry): failed to ensure load balancer for service xx/xxxxx: error authorizing security group ingress: "RulesPerSecurityGroupLimitExceeded: The maximum number of rules per security group has been reached.\n\tstatus code: 400, request id: a4fc17a6-8803-4fb4-ac78-ee0db99030e0"

The solution was to locate a node → Security → Security groups → pick the SG with "kubernetes.io/rule/nlb/" rules, and delete inbound "kubernetes.io/rule/nlb/" rules.
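When many rules are involved, selecting them by hand is tedious. The sketch below picks out the "kubernetes.io/rule/nlb/" rules from a list of inbound permissions. It assumes the input has the shape that the EC2 API reports for a security group (an IpPermissions list whose IpRanges entries carry a Description). The function name and the sample data are hypothetical, and only IPv4 ranges are considered. The result is meant for review before any deletion, which is not shown here.

```python
NLB_RULE_PREFIX = "kubernetes.io/rule/nlb/"


def nlb_permissions(ip_permissions):
    """Keep only the IPv4 ranges whose description marks them as NLB rules."""
    selected = []
    for permission in ip_permissions:
        ranges = [
            r for r in permission.get("IpRanges", [])
            if r.get("Description", "").startswith(NLB_RULE_PREFIX)
        ]
        if ranges:
            trimmed = {
                key: value for key, value in permission.items()
                if key in ("IpProtocol", "FromPort", "ToPort")
            }
            trimmed["IpRanges"] = ranges
            selected.append(trimmed)
    return selected


# Hypothetical inbound rules of a node security group.
sample_permissions = [
    {"IpProtocol": "tcp", "FromPort": 31443, "ToPort": 31443, "IpRanges": [
        {"CidrIp": "0.0.0.0/0", "Description": "kubernetes.io/rule/nlb/client=a1b2c3"},
        {"CidrIp": "10.0.0.0/16", "Description": "manually added"},
    ]},
    {"IpProtocol": "-1", "IpRanges": [{"CidrIp": "10.0.0.0/16"}]},
]

for permission in nlb_permissions(sample_permissions):
    print(permission)
```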